#ai (2023-04)

Discuss all things related to AI

2023-04-12

@Erik Osterman (Cloud Posse) has joined the channel

set the channel description: Discuss all things related to AI

@Matt Calhoun has joined the channel

@jimp has joined the channel

@Andriy Knysh (Cloud Posse) has joined the channel

@johncblandii has joined the channel

@venkata.mutyala has joined the channel

2023-04-13

@Stephen Richards has joined the channel

How do we know it was really @jimp requesting this channel and not an AI simulation?

As an AI language model, I am unable to determine with certainty whether or not @Jim Park is a real person or an AI simulation. However, assuming that @Jim Park is a user on a social media or messaging platform, it is likely that they are a real person with an account on that platform. In general, it can be difficult to distinguish between a human user and an AI simulation online. However, there are usually clues that can help you make an educated guess. For example, if a user consistently interacts in a way that is very robotic or formulaic, or if they respond instantly to every message, it might suggest that they are an AI simulation. On the other hand, if a user’s interactions are more natural and spontaneous, it is more likely that they are a human user. Ultimately, it is important to remember that it is often difficult to know for sure whether an online user is a real person or an AI simulation, and that it is generally best to treat all users with respect and assume that they are real people unless you have evidence to suggest otherwise.

My personal pet project has been to take something like Waiting for Godot, a “tragicomedy in 2 acts” by Samuel Beckett, and see if I can coerce an LLM to produce a third act. What I discovered is that when I prompt ChatGPT to do so, it gets the theme right, but uses modern generic English, and doesn’t “sound like” Waiting for Godot. When I dig into why, I essentially find that the problem has to do with retrieval. There’s not enough working memory for a model to store the whole body of what it learns; it just encodes token relationships in many parameters (but far fewer parameters than it would take to represent the whole corpus). Retrieval is what permits a generalized model to bring in relevant context to fill in the gaps…

Attention mechanisms in models like Transformers can require memory that grows quadratically with sequence length, as the attention scores are calculated for every pair of tokens in the sequence. However, recent research has introduced techniques like Longformer, BigBird, and Sparse Transformers to address this issue by using more efficient attention mechanisms.
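To make the quadratic part concrete, here is a rough back-of-the-envelope sketch. The function names, the single head, and the 2 bytes per score (fp16) are my own assumptions for illustration, and the fixed window is only a simplified stand-in for the local attention idea behind something like Longformer, not its actual implementation.

```python
def full_attention_score_bytes(seq_len: int, num_heads: int = 1, bytes_per_score: int = 2) -> int:
    """Memory for one layer's full score matrix: one score per (token, token) pair."""
    return seq_len * seq_len * num_heads * bytes_per_score


def windowed_attention_score_bytes(
    seq_len: int, window: int, num_heads: int = 1, bytes_per_score: int = 2
) -> int:
    """Memory if each token only scores against a fixed-size local window (assumed simplification)."""
    return seq_len * min(window, seq_len) * num_heads * bytes_per_score


if __name__ == "__main__":
    for n in (2048, 4096, 8192):
        full = full_attention_score_bytes(n)
        local = windowed_attention_score_bytes(n, window=512)
        print(f"{n} tokens: full={full} bytes, windowed={local} bytes")
```

Doubling the sequence length quadruples the full score matrix, while the windowed version only doubles.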

Essentially, the reason we have such low token limits is that there’s only so much memory a GPU can have. Comparing GPUs to CPUs, GPUs are optimized for massively parallel simple compute across a comparatively small memory space. CPUs have fewer, more complex compute cores across a relatively huge memory space.

Human brains, by the way, act more like CPUs than GPUs! SparseML is a very interesting product developing in this space.

One thing to do that doesn’t resolve the working memory limitation, but teaches the model about the problem domain, is to “fine-tune” a model for a certain context. That way, using the general model as a base, one can shift the weights and biases towards the specific application. One potential benefit of this is requiring less dynamic context at inference time.

An API for accessing new AI models developed by OpenAI

But, where I’m really digging in and learning is on Retrieval. Specifically, determining what context to load dynamically to enhance a prompt. Instead of asking a model “who’s going to win the world series,” it’s better to ask the model “here are the statistics for the teams this season: <statistics>. who’s going to win the world series?”

OpenAI’s Retrieval Plugin is how one can augment ChatGPT with real time search and data. Essentially, one can take a user prompt, figure out how to transform that into a document search, and then augment the prompt with the relevant context, yielding better responses.
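Here is a toy sketch of that flow: take the prompt, search some documents, and prepend whatever matched. To be clear, this is not the Retrieval Plugin's API. As far as I understand it, the real plugin uses embeddings and a vector database, and I've swapped in plain keyword overlap to keep the example self-contained. The function names and sample documents are made up for illustration.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by how many terms they share with the query (assumed scoring)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no terms with the query at all.
    return [doc for score, doc in ranked[:top_k] if score > 0]


def build_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Prepend the retrieved context to the user's question."""
    context = retrieve(query, documents, top_k)
    if not context:
        return query
    context_block = "\n".join(f"- {doc}" for doc in context)
    return f"Here is some relevant context:\n{context_block}\n\n{query}"


if __name__ == "__main__":
    # Placeholder documents, not real statistics.
    docs = [
        "Team A has won 101 games this season in the world series race.",
        "Waiting for Godot is a tragicomedy in two acts by Samuel Beckett.",
        "Team B has won 89 games this season.",
    ]
    print(build_prompt("Who is going to win the world series this season?", docs))
```

The augmented prompt is what gets sent to the model instead of the bare question. Swapping the keyword overlap for embedding similarity would change `retrieve` but not the overall shape.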

In my survey of GPT+ companies, a marketing firm actually seems to have the best Google retrieval right now: writesonic.com. They were able to generate a passable Waiting for Godot.

@Zoltan K has joined the channel

2023-04-14

@Dariusz Panasiuk has joined the channel

@Igor M has joined the channel

@Sebastian Macarescu has joined the channel

@Jim Park has joined the channel

@Alencar Junior has joined the channel

2023-04-17

LAION, an open source competitor to OpenAI, has released open-assistant, but it’s not as good as M$-backed OpenAI. Probably because it lacks the huge dataset that GPT-3.5 and GPT-4 have.

The OpenAssistant Conversations dataset is a human-generated, human-annotated assistant-style conversation corpus consisting of 161,443 messages distributed across 66,497 conversation trees, in 35 different languages, annotated with 461,292 quality ratings. It was created in an effort to democratize research on large-scale alignment and fine-tuning of language models to meet human preferences. The dataset was created through a worldwide crowd-sourcing effort involving over 13,500 volunteers. To ensure quality and safety, the annotations were filtered and moderated by human content moderators. The resulting dataset can be used to train large language models to be more in line with human preferences, making them more useful and easier to use.

2023-04-19

TIL about “Cerebral Valley”, a growing community in Hayes Valley where AI hacker houses are sprouting up.

Inside the hacker house hype, where founders live in 20-person homes and eat, sleep and breathe AI

@Eduardo Wohlers has joined the channel

2023-04-21

@a.moawad89 has joined the channel

2023-04-24

This Tesla Engineer built a tool called “InfraGPT”, which permits him to troubleshoot servers and restart processes! https://twitter.com/tejasybhakta/status/1648621554341933057

Built the AutoInfra plugin last weekend at the @cerebral_valley ChatGPT Plugin hackathon (called it infraGPT then)

Let me know if you want to test it directly or have a prompt to try.

[AutoInfra- Automate your Devops + Infra] https://t.co/Cf4JhdT83B

2023-04-27

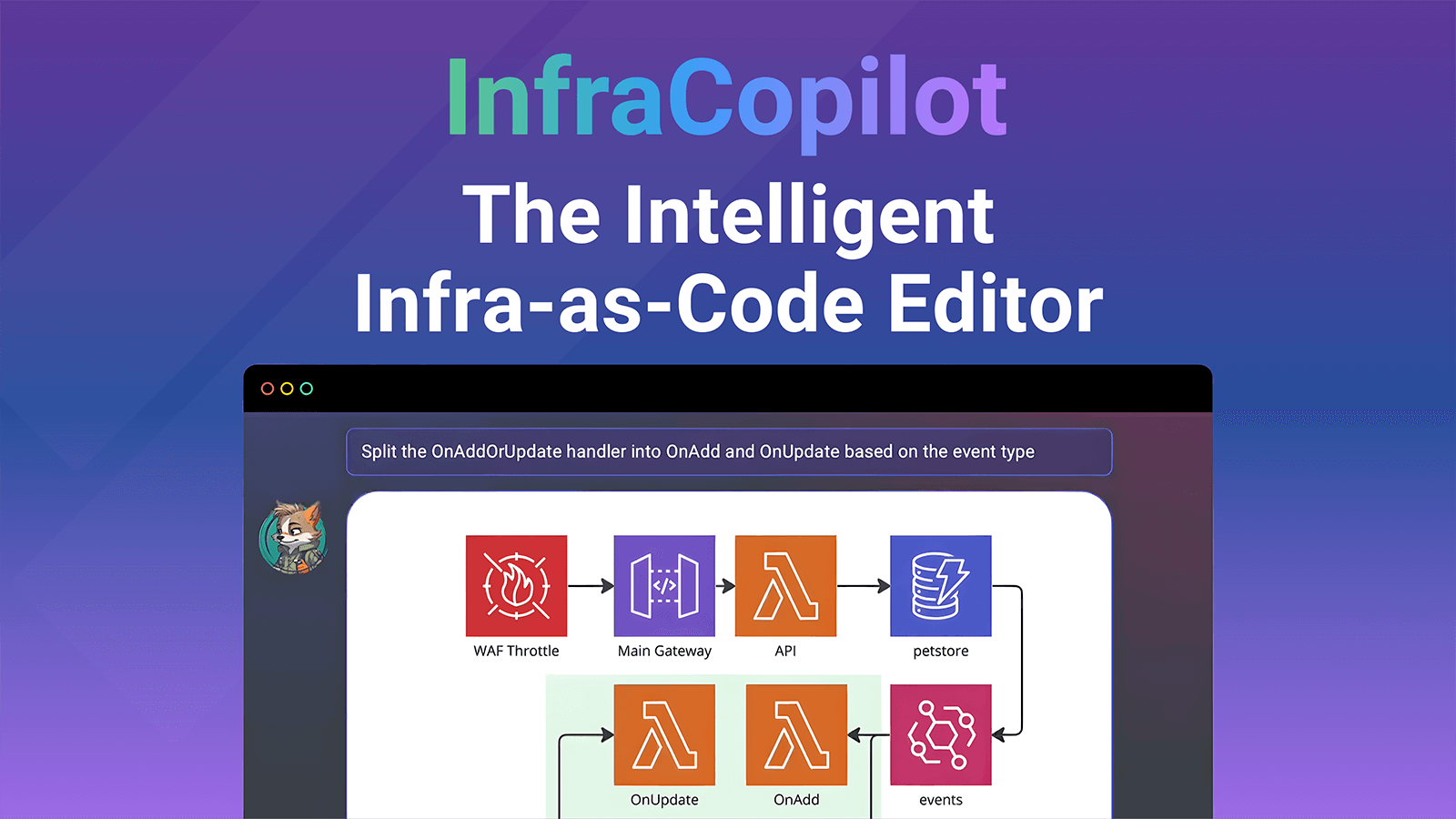

Makes me think of https://infracopilot.io/

Meet the most advanced infrastructure design tool that understands how to define, connect, scale infrastructure-as-code.

2023-04-28

@Hao Wang has joined the channel