#ai (2024-04)

Discuss all things related to AI

2024-04-11

Hao Wang

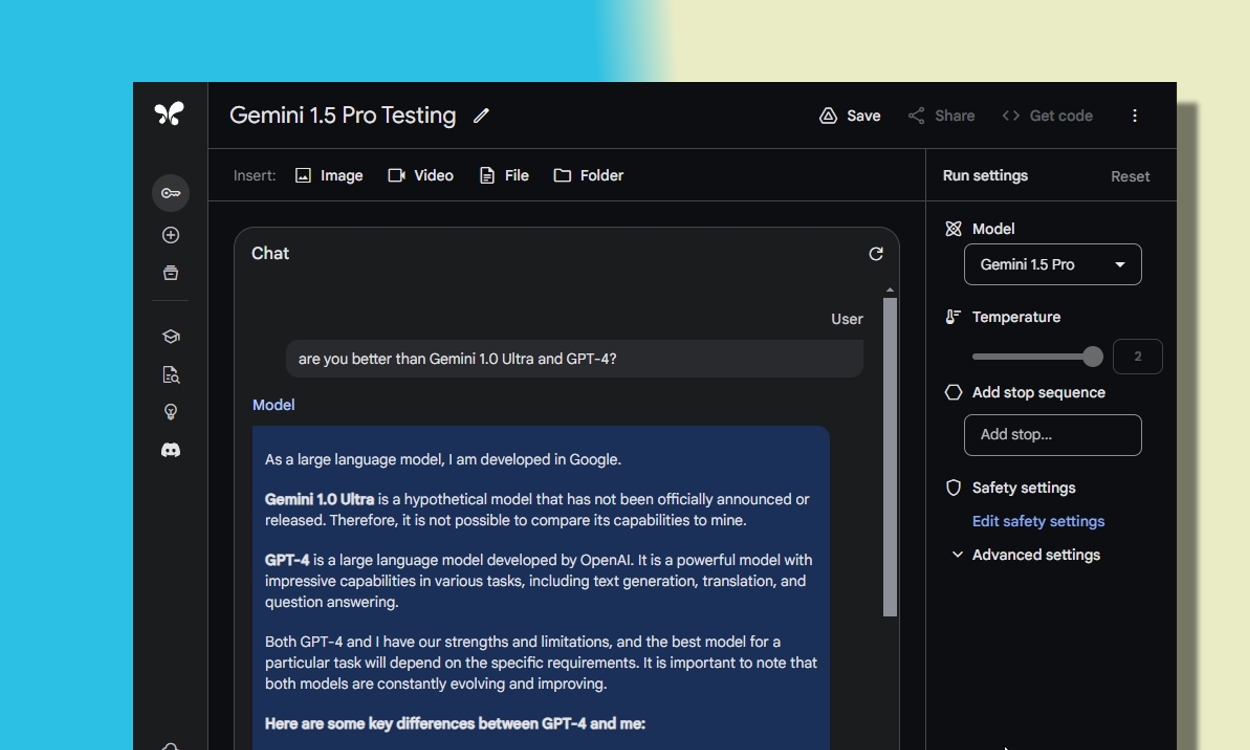

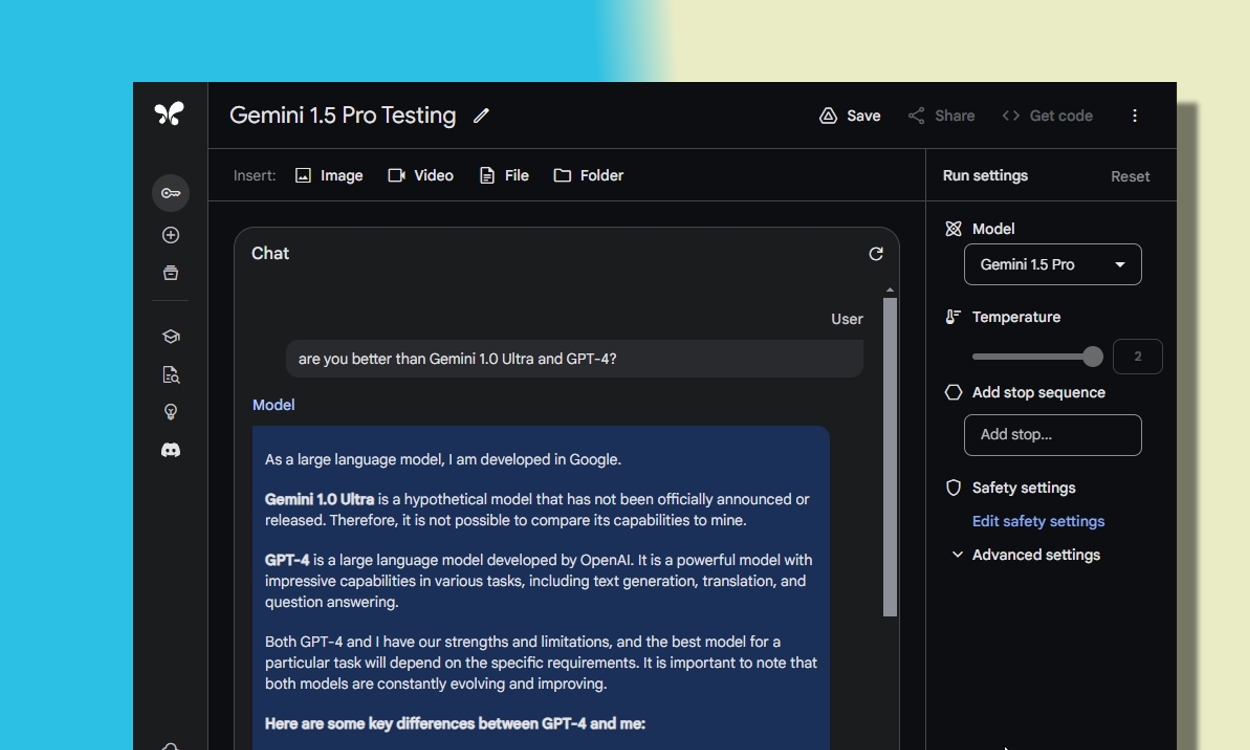

I Got Access to Gemini 1.5 Pro, and It's Better Than GPT-4 and Gemini 1.0 Ultra

I finally got access to Gemini 1.5 Pro with support for 1 million tokens, so I tested the model and found that it is better than Gemini Ultra and GPT-4.

2024-04-20

Hao Wang

princeton-nlp/SWE-agent

SWE-agent takes a GitHub issue and tries to automatically fix it, using GPT-4, or your LM of choice. It solves 12.29% of bugs in the SWE-bench evaluation set and takes just 1.5 minutes to run.

2024-04-26

Hao Wang

“Apple released *OpenELM*, a family of open language models (270 million to 3 billion parameters), designed to run on-device. OpenELM outperforms comparable-sized existing LLMs pretrained on publicly available datasets. Apple also released *CoreNet*, a library for training deep neural networks.”