#announcements (2018-08)

Cloud Posse Open Source Community #geodesic #terraform #release-engineering #random #releases #docs

This channel is for workspace-wide communication and announcements. All members are in this channel.

Archive: https://archive.sweetops.com

2018-08-01

hm can’t install gomplate on amazon linux

nvm

Have a look at https://github.com/cloudposse/packages

packages - Cloud Posse installer and distribution of native apps

We distribute a docker image with all the binaries we use

the install/ folder has the Makefile target for installation

2018-08-02

just saying hi, been lurking in the background for a few days reading over the code everyone has been publishing, they have been incredibly useful resources

hi @stephen, welcome

Thanks @stephen ! It’s really encouraging to hear that. :-)

I’m helping a friend with a … gig of sorts. He’s working on material that would help teach people how to interview better.

Does anyone here have any thoughts on how to promote that idea?

from a tech point of view, maybe a mobile app or mobile site or PWA (Progressive Web App) so people would use it on their phones

with server-side updates for new materials

@krogebry or you are asking about marketing?

Maybe, I’m not exactly sure on this one, it’s a totally new field.

I think interviewing people for technical positions is something we don’t do well in this business in general, specifically in the arena of the enterprise

So, between better interviews that are more able to create productive outcomes (hiring people who are better fits) and my theory that influence is the new currency of IT

I’m trying to figure out how to get my buddy into a position where we can …something something… create better interviews.

for me, it would be nice to have a mobile app (or mobile website) with learning materials, and then a test exam, and a real exam/test

video resources would be nice (https://itunes.apple.com/us/app/professional-aws-sol-arch/id1240121535?mt=8)

also, the question “why?” would be more important and useful than “what?” and “how?” (which is what we see in many interview materials)

e.g.

(off the top of my head)

why would you use Terraform to provision cloud resources if you can do everything manually (and even faster)? (stupid question)

I’d love to see how someone can do everything manually faster than using tf modules

Oh and not just faster but more secure too

part of the correct answer would be repeatability, maintainability, ownership of the code, version control, and security, of course

why and in what cases would you use EC2 instances to deploy a database instead of utilizing managed services like RDS or Aurora

why would you use Kubernetes instead of ECS (or otherwise)

yeah, love those questions

Simon Sinek is a big fan of starting with why

So according to my friend, Christian, the biggest indicator of success in any role ends up being more around the idea of attitude

One of the questions he asked in interviews (for Microsoft) was: how do you make friends?

2018-08-06

i stumbled on https://github.com/cloudposse/bastion earlier today and it looks really nice, but i’m wondering if the primary use case is to connect to stuff in a preexisting k8s cluster. what about if the primary use case is terraforming/modifying the cluster itself? in that case, wouldn’t it be better if the bastion lived outside of the cluster entirely?

bastion - Secure Bastion implemented as Docker Container running Alpine Linux with Google Authenticator & DUO MFA support

@smoll the bastion can satisfy both use-cases, but we used it with Kubernetes.

it’s still a great way to run a bastion on a classic EC2 instance b/c you jail the SSH session in a container reducing the blast radius.

Plus, containers are a great way to distribute/deploy software in a consistent, repeatable manner.

fair. i think i’m leaning towards skipping the EC2 instance altogether though (for a GKE Private Cluster), because i only need to poke a hole in the firewall to deploy this one service, and then use the jumpbox to bootstrap everything else. brilliant!

so i tried using the helm chart you guys provided, but the bastion container keeps failing with /etc/ssh/sshd_config: No such file or directory and i see

{"job":"syncUsers","level":"error","msg":"Connection to github.com failed","subsystem":"jobs","time":"2018-08-08T17:14:10Z"}

{"job":"syncUsers","level":"error","msg":"Connection to github.com failed","subsystem":"jobs","time":"2018-08-08T17:19:11Z"}

in the logs for github-authorized-keys… however, when i SSH into the pod and try to reach github.com with echo -e "GET /\n\n" | nc github.com 80, it looks okay. any ideas?

hello all

does anyone happen to know why I am getting the following odd error when trying to stand up an RDS cluster with terraform-aws-rds-cluster?

thx in advance

hi @jengstro

what db are you trying to deploy, MySQL or Postgres?

I think you are mixing some parameters from both

take a look here how we deploy Postgres https://github.com/cloudposse/terraform-root-modules/blob/master/aws/backing-services/aurora-postgres.tf#L40

terraform-root-modules - Collection of Terraform root module invocations for provisioning reference architectures

DBParameterGroupFamily

The DB cluster parameter group family name. A DB cluster parameter group can be associated with one and only one DB cluster parameter group family, and can be applied only to a DB cluster running a database engine and engine version compatible with that DB cluster parameter group family.

Aurora MySQL Example: aurora5.6, aurora-mysql5.7

Aurora PostgreSQL Example: aurora-postgresql9.6

Creates a new DB cluster parameter group.

aurora5.6 is for MySQL

if you use engine = "aurora-postgresql", then cluster_family should be aurora-postgresql9.6

if you use engine = "aurora", then cluster_family should be aurora5.6 (for engine = "aurora-mysql", use aurora-mysql5.7)
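A minimal Python sketch of the engine-to-family rule above, as a pre-flight check you could run before terraform apply. The mapping only covers the families quoted in the AWS docs excerpt in this thread (aurora5.6, aurora-mysql5.7, aurora-postgresql9.6); it is an illustration, not part of terraform-aws-rds-cluster, and the helper names are hypothetical.

```python
# Hypothetical lookup table: Aurora engine name -> DB cluster parameter group family.
# Only the families quoted in the AWS docs excerpt are included; not exhaustive.
CLUSTER_FAMILIES = {
    "aurora": "aurora5.6",                        # Aurora MySQL 5.6-compatible
    "aurora-mysql": "aurora-mysql5.7",            # Aurora MySQL 5.7-compatible
    "aurora-postgresql": "aurora-postgresql9.6",  # Aurora PostgreSQL 9.6-compatible
}


def cluster_family_for(engine: str) -> str:
    """Return the cluster parameter group family for an Aurora engine name."""
    try:
        return CLUSTER_FAMILIES[engine]
    except KeyError:
        raise ValueError(f"no known cluster family for engine {engine!r}") from None


def check_pair(engine: str, cluster_family: str) -> None:
    """Raise early when MySQL and Postgres settings have been mixed up."""
    expected = cluster_family_for(engine)
    if cluster_family != expected:
        raise ValueError(
            f"engine={engine!r} needs cluster_family={expected!r}, got {cluster_family!r}"
        )
```

For example, check_pair("aurora-postgresql", "aurora5.6") raises, which is the mixed-parameter situation discussed above.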

@Andriy Knysh (Cloud Posse) Even though db.t2.medium appears to support aurora-postgresql9.6… I get an error…

1 sec

db.r4.large is the smallest instance type supported by Aurora Postgres

ahh ok

Learn about pricing for Amazon Aurora the MySQL-compatible database with pay as you go pricing with no upfront fees. There is no minimum fee.

db.r4.large is expensive for anything but prod (e.g. dev or staging)

MySQL is better with pricing

Ya pretty pricey

We have had luck deploying Postgres in Kubernetes for disposable environments like staging and dev

thanks guys

2018-08-07

hey guys, slightly off-topic perhaps, question…

Have you guys ever used an external Kibana with an AWS ElasticSearch domain?

I’ve just (supposedly) connected Kibana on an EC2 instance to an ES domain, but Kibana is blank

We recently have been using that!

Did you know that AWS ElasticSearch ships with Kibana?

yeah but you can’t extend it with plugins

aha

so we also have been using the helm chart for kibana on kubernetes

we pretty much only do containerized stuff these days

helmfiles - Comprehensive Distribution of Helmfiles. Works with helmfile.d

@Andriy Knysh (Cloud Posse) might have some insights

yea, we deployed Kibana on K8s and connected it to AWS Elasticsearch

we also used the Kibana that comes with Elasticsearch

@i5okie did you deploy the ES cluster into AWS public domain or into your VPC?

but @i5okie brings up a good point about the plugins. we haven’t yet gone deep on ES.

VPC

ok, good point about plugins, we need to look at it

about VPC… Did you create the correct Security Groups to connect from the EC2 instance to the cluster?

and also, did you use any IAM policies for ES?

this is when I start kibana in cli with -e and point to ES domain

right now my security policy on ES is just opened up completely. and security group’s open for the EC2 instances.

looks good

except every page in Kibana is blank, besides the menu

what do you mean by empty Kibana?

nothing on the right side?

if you click on Dev Tools

nope, only shows if i look at status. everything else is empty. including dev tools

hmm, never seen that

ikr

maybe broken Kibana?

hmm

could be. i installed it by downloading the rpm. so i can match the version

maybe i need to get the -oss flavor one?

not sure. we did install it from https://github.com/cloudposse/helmfiles/blob/master/helmfile.d/0630.kibana.yaml (it uses the Kibana chart)

ah docker.

charts - Curated applications for Kubernetes

I’m still a docker n00b for the most part, never mind kubernetes

about to switch production app from heroku to elasticbeanstalk. and i’ve been getting a pretty clear message that ECS is probably the way to go instead..

so im going to have to do more learning

yea, that’s good

you can try diff versions from rpm

(it’s easy to break stuff with Kibana, even if server and client have diff versions, will be a lot of issues)

interesting. so you guys are using kibana 6.3.1… but AWS ES is on 6.2, and there’s no version mismatch? like it doesn’t complain?

it did

hmm

we told it to use 6.0.0

for the client

KIBANA_IMAGE_TAG was set to 6.0.0
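A rough Python sketch of the version rule of thumb behind this exchange, as I understand Elastic’s guidance for 6.x: Kibana and Elasticsearch must share a major version, Kibana’s minor version should not be newer than the cluster’s, and an older Kibana minor generally works but logs a warning. This is an approximation for illustration, not Kibana’s actual startup check; the function names are hypothetical.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse "6.2" or "6.3.1" into (major, minor, patch); missing parts become 0."""
    parts = [int(p) for p in version.split(".")][:3]
    parts += [0] * (3 - len(parts))
    return parts[0], parts[1], parts[2]


def kibana_es_compat(kibana: str, elasticsearch: str) -> str:
    """Classify a Kibana/Elasticsearch pairing as "ok", "warn" or "unsupported"."""
    k_major, k_minor, _ = parse_version(kibana)
    e_major, e_minor, _ = parse_version(elasticsearch)
    if k_major != e_major:
        return "unsupported"  # different major versions
    if k_minor > e_minor:
        return "unsupported"  # Kibana newer than the cluster (e.g. 6.3.1 vs 6.2)
    if k_minor < e_minor:
        return "warn"         # older Kibana: generally works, but logs a warning
    return "ok"               # same major.minor; patch differences ignored here
```

With AWS ES on 6.2, this classifies Kibana 6.3.1 as unsupported and 6.0.0 as working with a warning, which matches what was seen above.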

ah

ok i’ll try the docker route

thanks

while im here… So I know what docker is, i’ve built docker images, ran them locally, made one for circleci.. bam. What would be a good path to go from here? if you’re using Kubernetes, do you use aws EKS instead of ECS then?

at this point i just have the aws associate architect cert.

I think it depends a little bit on your use-case, how many services, how many engineers, etc

me myself and i are the only devops engineers here. at least for now. lol

I think kubernetes is awesome for teams of 5+ hands on engineers who will share devops responsibilities, and where you have a lot of services to deploy.

ah gotcha

But the kubernetes ecosystem moves so fast, that as a solo-engineer whose job is to maintain it, it will be a lot of work, as much as I hate to say it.

Kubernetes >> ECS. However, for simpler use-cases, ECS is still awesome.

have you seen our modules for ECS?

I think the easiest way now is SSH to the EC2 instance and run Kibana in Docker, just to confirm everything is ok

so at what point does it make sense to go from elastic beanstalk which would manage about 16+ instances.. to ECS?

ah thats a good idea @Andriy Knysh (Cloud Posse)

We had really bad experiences with beanstalk. Namely, lots of failed deploys due to dependencies failing (temporary 503s, or broken rpm repos, etc). I think if we did it again, and had beanstalk as a requirement (e.g. due to customer), we would use beanstalk+containers.

but given the choice between beanstalk and ECS, ECS would definitely be my pick.

containers solve so many problems about packaging dependencies, testing, and deployment

i’ve been looking at Segment’s Stack (terraform modules); pretty opinionated, but kind of cool. so im using your modules to sort of set up a good infrastructure with public/private subnets, EB.. etc. (someone before me ended up putting everything except the DB in public subnets)

yea, no fun taking over that kind of environment.

hopefully you can make some big inroads on how they architect things

added an integration to this channel: Google Calendar

2018-08-08

What’s everyone doing these days to learn kubernetes and terraform?

We don’t have any materials yet to start from ground zero.

But here are some resources I recently researched for another client.

This is a hands-on introduction to Kubernetes. Browse the examples: pods labels replication controllers deployments services service discovery health checks environment variables namespaces volumes secrets logging jobs nodes Want to try it out yourself? You can run all this on Red Hat’s distribution of Kubernetes, OpenShift. Follow the instructions here for a local setup or sign up for openshift.com for an online environment.

If you are looking for running Kubernetes on your Windows laptop, go to this tutorial.

Both are for getting started with kubernetes and learning by example using a local kubernetes cluster.

As for learning Terraform, I know @jamie has some tutorials he’s been working on, but not sure if they are public.

Oh, there’s also https://github.com/kelseyhightower/kubernetes-the-hard-way

kubernetes-the-hard-way - Bootstrap Kubernetes the hard way on Google Cloud Platform. No scripts.

@pericdaniel I’ve started an issue to track this so we can update our docs. Please add any links that you find useful. https://github.com/cloudposse/docs/issues/205

what we should provide a curated list of links why For people just getting started with Terraform and Kubernetes, references e.g. https://github.com/kelseyhightower/kubernetes-the-hard-way http://k…

Thank you so much!

Also this @pericdaniel https://medium.com/@pavanbelagatti/kubernetes-tutorials-resources-and-courses-d75c0ce56401

I am too active on LinkedIn and I always share posts on DevOps and about other DevOps tools. Since Kubernetes is really making it big in…

hi @pericdaniel

practice in writing TF modules and helm charts and provisioning real infrastructure - it’s a slow process

Hi all, wondering if someone could point me in the right direction to get the bastion docker image working with the github integration, both running on docker on the same host.

Does anyone have a working compose file I could look at?

@pericdaniel shameless plug, check out docs.cloudposse.com. There are tons of open source modules on GitHub as well that will make getting kubernetes running a much easier task than it would otherwise be… Then, as @Andriy Knysh (Cloud Posse) said, nothing can replace just doing things on the cluster - use kubectl until you are comfortable with it and then start automating it out of your workflow

as @sarkis pointed out, take a look at our docs https://docs.cloudposse.com/ and also our reference architectures https://docs.cloudposse.com/reference-architectures/

Thank you! I have been looking through those modules and they look great. Just slowly learning and getting through it

I’ll say from experience that the aha moment will take a couple of weeks. Once you get there, though, you will wonder where these modules were all your tech life

Also please do contribute, ask here, or open issues as you go through if something is not clear…

@Luke how far along are you? If you share some context/dockerfiles/compose I could try and help.

@sarkis - I’m having issues getting the bastion talking to the github api.

If you have any Dockerfiles I can scan over, that would be a massive help

Hm, I have not done this myself before, however first thing I’d check is the networking outbound from the bastion docker container

back to docker kibana… im now getting this. just wondering have you seen this issue too?

I found this place because of copyright-header

Any maintainer hanging around?

Hi @rfaircloth

@sarkis and @Erik Osterman (Cloud Posse) should be online around now too normally

Great project. I am looking at a missing feature: if I have a license with a copyright in it, the copyright year should be updated when a new release is produced. Is that something that can be done? (I could be missing it)

@Erik Osterman (Cloud Posse)

@Erik Osterman (Cloud Posse) I’m following your copyright-header project and sent over a PR with some minor things I could fix/enhance. I’m not a Ruby dev, so I’m getting stuck on the last tweak. When a release is issued, for IP protection the new release should have an updated copyright year. In parser.rb, def add(license) has an if condition “if has_copyright?”. I’m just not getting the syntax, but using the regex “([cC]opyright(?:(C))?)([^ ]{2,20})” to substitute the updated year value would do the job rather than raising an exception. If the substitution doesn’t change anything, then raise the exception
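To make the proposed “substitute the year, raise if nothing matched” logic concrete, here is a minimal Python sketch (copyright-header itself is Ruby; this only illustrates the idea). The regex is an assumption written for this example, not the pattern from the message or from parser.rb: it matches “Copyright”, an optional “(C)”, then a year or a year range, and extends the range to the given year. One deliberate variation: a notice that already ends in the current year is left unchanged instead of raising.

```python
import re

# Illustrative pattern (an assumption, not copyright-header's regex):
# "Copyright", optional "(C)"/"(c)", then YEAR or YEAR-YEAR.
COPYRIGHT_RE = re.compile(
    r"(?P<prefix>[Cc]opyright(?:\s*\([Cc]\))?\s+)"
    r"(?P<start>\d{4})(?:\s*-\s*(?P<end>\d{4}))?"
)


def bump_copyright_year(text: str, year: int) -> str:
    """Rewrite every copyright notice in `text` so its year range ends at `year`.

    Raises ValueError when no notice is found at all.
    """

    def _replace(match):
        start = int(match.group("start"))
        if start >= year:
            return f"{match.group('prefix')}{start}"
        return f"{match.group('prefix')}{start}-{year}"

    new_text, count = COPYRIGHT_RE.subn(_replace, text)
    if count == 0:
        raise ValueError("no copyright notice found to update")
    return new_text
```

For example, bump_copyright_year("Copyright (c) 2016 Acme", 2018) yields "Copyright (c) 2016-2018 Acme".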

@i5okie regarding kibana, did you use any IAM access policy to control access to the cluster?

no

it’s set up with VPN, and the access policy is *

what is yours?

also, another reason could be a time drift in Docker container, which prevents AWS request from being properly signed

right

right now im trying to figure out why docker isn’t exposing the kibana port.

kibana_1 | {"type":…, …"2018-08-08T16…Z", "tags":…, "message":"Server running at http://0.0.0.0:5601"}

@i5okie maybe this will help https://github.com/elastic/kibana/issues/12918

Kibana version: 5.5.0 Elasticsearch version: 5.5.0 Server OS version: Debian 8 with Docker 17.06.0-ce Original install method (e.g. download page, yum, from source, etc.): docker-compose.yml Descri…

but back to the authorization issue

oh i lost the -oss flag dang it

@i5okie same issues with credentials or signature headers?

signature headers… the xpack thing

@smoll i personally did not test bastion, can take a look. Maybe @Erik Osterman (Cloud Posse) will help faster when he is online

no worries @Andriy Knysh (Cloud Posse). the bastion error looks like it could be stemming from the fact that github-authorized-keys isn’t able to manage users properly (due to the connection failure). i wonder if i’m missing a required field from https://github.com/cloudposse/github-authorized-keys in https://github.com/cloudposse/charts/blob/master/incubator/bastion/values.yaml#L11

Back to this. Running Kibana from Docker. Looks like it connected to Elasticsearch. But the pages are all blank.

Oh well @i5okie how do you find those empty Kibanas :)

Can you try to delete all browser cache just in case, or use a different browser?

i dont know

wtf

it works in firefox

Haha

Browser cache

So you had it working before too

this is so odd

Just did a nice exercise with Docker, which is good for you too :)

kibana from AWS ES, loads fine in chrome.

this is same version too

i see.

it’s the basic auth. that’s the issue

this happens when i have basic auth on nginx. for troubleshooting i turned nginx off and piped all traffic to docker instead. so firefox didn’t have the basic auth, and chrome had it.

Ok I see

ok, for future reference: if someone sets up Kibana separately from ElasticSearch, proxied through NGINX, and gets a blank screen, here is what happens. After basic authentication Kibana will load, but when it requests data from Elasticsearch, NGINX passes along the basic auth headers, which ES doesn’t like. The following nginx config flushes the auth header.

Good sleuthing!

/etc/nginx/conf.d/kibana.conf
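The config itself didn’t make it into this archive. As an illustration of what it has to accomplish, here is a small Python sketch of the header filtering a proxy does before forwarding a request: drop the Authorization header added by basic auth so it never reaches Elasticsearch. The function name and the dict-of-headers representation are assumptions for illustration only.

```python
from typing import Dict, Iterable


def strip_headers(
    headers: Dict[str, str], drop: Iterable[str] = ("Authorization",)
) -> Dict[str, str]:
    """Return a copy of `headers` without the named headers (case-insensitive)."""
    dropped = {name.lower() for name in drop}
    return {name: value for name, value in headers.items() if name.lower() not in dropped}
```

For reference, the nginx proxy_set_header documentation states that a header whose value is set to an empty string is not passed to the proxied server, which is one way to get this effect in nginx.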

so it looks like this error Connection to github.com failed https://github.com/cloudposse/github-authorized-keys/blob/111b4401d4597c6a97b7a5316bcd35e697ab07ff/api/github.go#L32 is getting raised no matter what the actual error is, for example even if i change the oauth token to "x" i get the exact same error

github-authorized-keys - Use GitHub teams to manage system user accounts and authorized_keys

i take that back, i think this is just me… when i ran the docker image locally i definitely don’t get this exception, with the exact same credentials. there’s definitely something funky with how the service is running on my GKE cluster… not sure if it’s a problem with the chart or some configuration issue with my cluster though…

Figured out the first problem: https://github.com/cloudposse/charts/blob/master/incubator/bastion/templates/deployment.yaml#L69 the /etc volume mount blasts away /etc/ssl, which means it can’t initiate an SSL connection
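The earlier nc test only exercised plain HTTP on port 80, so it could not reveal a missing /etc/ssl. Here is a minimal Python sketch of a check that would: it reports whether the default CA file/directory exist and attempts a certificate-verified TLS handshake. The function names are hypothetical, and api.github.com is only an example target.

```python
import os
import socket
import ssl
from typing import Tuple


def ca_paths_present() -> Tuple[bool, bool]:
    """Report whether the default CA file and CA directory exist in this container."""
    paths = ssl.get_default_verify_paths()
    cafile_ok = bool(paths.cafile) and os.path.exists(paths.cafile)
    capath_ok = bool(paths.capath) and os.path.isdir(paths.capath)
    return cafile_ok, capath_ok


def tls_handshake_ok(host: str, port: int = 443, timeout: float = 5.0) -> Tuple[bool, str]:
    """Try a certificate-verified TLS handshake; return (ok, TLS version or error)."""
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host) as tls:
                return True, tls.version() or "unknown"
    except OSError as err:  # ssl.SSLError and socket timeouts are OSError subclasses
        return False, str(err)
```

Run inside the pod, ca_paths_present() returning (False, False) or tls_handshake_ok("api.github.com") failing with a certificate-verification error would point at the missing CA bundle rather than at networking.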

we had to do some hacks to get it to work and currently don’t have it deployed, because our customer is now using a far superior solution: Teleport.

I think teleport is free for small clusters

and still affordable for enterprises.

teleport - Privileged access management for elastic infrastructure.

they have an “open core” model, where most features are available free in the open source version

and some enterprise features require a subscription.

ah gotcha, will check teleport out

Hey y’all - sorry, I am AWOL. Traveling today to Tulum, MX on vacation. Will be available intermittently. Looks like a lot has been discussed today! Will reply to all threads as soon as we get settled.

2018-08-09

If you’re still waiting on a PR code review or some other issue, let me know!

Just the PR I pushed to geodesic yesterday

Thanks! I have assigned it to @Andriy Knysh (Cloud Posse) to test

2018-08-10

I have a stupid question, but what is the correct set up for using geodesic modules in CI/CD? Is the goal to have changes to infrastructure auto-deployed through promotion through CD pipelines, or is the cloudposse approach all about pushing things from CLI using the geodesic wrapper?

Our ultimate goal is to do exactly what you want: test and deploy the geodesic-based containers as part of cicd.

The interactive cli was the easiest way to get started.

I think it’s about a week’s worth of effort to implement. Basically, the way we look at it is this: infrastructure as code is the same as all other code. Therefore we should treat it the same. Package the infrastructure inside a geodesic container. Test that container. If successful, push the image to the repo. Deploy to the target environment by running the container and calling apply.
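The package, test, push, deploy flow described above can be sketched in Python as an ordered list of docker commands. Everything specific here is hypothetical: the image name, the make test target and the terraform apply command run inside the container are placeholders, not geodesic’s actual interface. By default the sketch only prints the commands (a dry run); run=True executes them and stops at the first failure.

```python
import subprocess
from typing import List


def pipeline_commands(image: str, tag: str) -> List[List[str]]:
    """Build the docker commands for: build -> test -> push -> deploy (apply)."""
    ref = f"{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, "."],             # package the infrastructure code
        ["docker", "run", "--rm", ref, "make", "test"],  # hypothetical test target
        ["docker", "push", ref],                         # publish only if tests passed
        # hypothetical deploy step: run the same image and call apply inside it
        ["docker", "run", "--rm", ref, "terraform", "apply", "-auto-approve"],
    ]


def run_pipeline(image: str, tag: str, run: bool = False) -> List[str]:
    """Print (and optionally execute) each step in order."""
    executed = []
    for cmd in pipeline_commands(image, tag):
        line = " ".join(cmd)
        print("+", line)
        if run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError, halting the pipeline
        executed.append(line)
    return executed
```

The point of the design is that the artifact you tested is byte-for-byte the artifact you deploy, the same as for application containers.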

So what I don’t like about Atlantis is that it violates this paradigm. It does not treat infrastructure as code like all other code. It requires a new standalone CI/CD system (the Atlantis daemon) to work, which is different from how we deploy all other software. I am not ripping on Atlantis; I think it’s cool what it does. I just wish it was compatible with the containerized approach we take, which is how all other modern software is deployed.

this could be useful for dev and staging environments (low cost) https://aws.amazon.com/blogs/aws/aurora-serverless-ga/ (no Postgres yet)

You may have heard of , a custom built MySQL and PostgreSQL compatible database born and built in the cloud. You may have also heard of , which allows you to build and run applications and services without thinking about instances. These are two pieces of the growing AWS technology story that we’re really excited […]

2018-08-12

2018-08-13

There are no events this week

2018-08-15

So for the terraform AWS SSM Parameter Store module… do I use that module to store the SSM values I want to store, and then use its data and resource pieces in another terraform module to read those values? (Sorry, I’m still new to all this!) My goal is that I don’t want my access and secret keys in plain text, so I want another module that reads those values and passes them in as the AWS access and secret keys. (Videos would be nice if you can explain it.)

hi @pericdaniel

yes you can use https://github.com/cloudposse/terraform-aws-ssm-parameter-store to write secrets to SSM from one Terraform module and then read the secrets from another TF module

terraform-aws-ssm-parameter-store - Terraform module to populate AWS Systems Manager (SSM) Parameter Store with values from Terraform. Works great with Chamber.

we also use https://github.com/segmentio/chamber to write and read secrets from SSM

chamber - CLI for managing secrets

take a look at our reference architectures https://docs.cloudposse.com/reference-architectures/ - shows how we use Terraform/chamber/SSM together

here are a few possible scenarios:

- Use terraform-aws-ssm-parameter-store to write secrets from one TF module, and use terraform-aws-ssm-parameter-store to read secrets from another TF module
- Use terraform-aws-ssm-parameter-store to write secrets from a TF module, and then use chamber in a CI/CD pipeline to read secrets from SSM and populate ENV vars for the application being deployed
- Use chamber to write secrets to SSM, and then use chamber in a CI/CD pipeline to read secrets from SSM and populate ENV vars for the application being deployed
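For the “write from one place, read from another” scenarios, here is a minimal Python sketch using the boto3 SSM client’s put_parameter and get_parameter calls, storing values as SecureString so they are encrypted at rest. The helper names and the parameter path used below are hypothetical. The functions take the client as an argument so they can be exercised with a stub; with real credentials you would pass boto3.client("ssm").

```python
from typing import Any


def write_secret(ssm: Any, name: str, value: str) -> None:
    """Store `value` encrypted (SecureString) under `name`, replacing any old value."""
    ssm.put_parameter(Name=name, Value=value, Type="SecureString", Overwrite=True)


def read_secret(ssm: Any, name: str) -> str:
    """Fetch and decrypt a SecureString parameter."""
    response = ssm.get_parameter(Name=name, WithDecryption=True)
    return response["Parameter"]["Value"]
```

Usage, assuming boto3 is installed and the caller has ssm:PutParameter/ssm:GetParameter (plus KMS decrypt) permissions: write_secret(boto3.client("ssm"), "/myapp/aws_secret_access_key", value), then read_secret(...) with the same name in the consuming step.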

Thank you! I’ll look into it tomorrow!

2018-08-17

Finally they caught up with the latest version 6.3 of Kibana and ES client

2018-08-20

There is 1 event this week

August 22nd, 2018 from 9:00 AM to 9:50 AM GMT-0700 at https://zoom.us/j/299169718

@here join us for our first open “Townhall” meeting this wednesday. Get to know everyone, ask questions, debate strategies, anything goes…. it’s an open discussion and everyone is invited!

timezone? also, that link opens a google calendar… is that shared somewhere or is it private?

I can also invite you directly

timezone is PST.

We selected 9am since it works well with GMT too

ahh my bad, just had to switch to the gsuite account associated with this workspace, now i see the event!

nice integration

2018-08-21

Been using the EB app and env TF repos y’all made. Love it.

TY!

@OGProgrammer thanks! Appreciate it

2018-08-22

August 22nd, 2018 from 9:00 AM to 9:50 AM GMT-0700 at https://zoom.us/j/299169718

good read on GitOps, https://queue.acm.org/detail.cfm?ref=rss&id=3237207

IaC + PR = GitOps: GitOps lowers the bar for creating self-service versions of common IT processes, making it easier to meet the return in the ROI calculation. GitOps not only achieves this, but also encourages desired behaviors in IT systems: better testing, reduction of bus factor, reduced wait time, more infrastructure logic being handled programmatically with IaC, and directing time away from manual toil toward creating and maintaining automation.

probot - A framework for building GitHub Apps to automate and improve your workflow

@loren this may be the GitOps article you mentioned in townhall? https://queue.acm.org/detail.cfm?id=3237207

yep

possibly: https://github.com/claranet/python-terrafile ?

python-terrafile - Manages external Terraform modules

yes

terrible - Let’s orchestrate Terraform configuration files with Ansible! Terrible!

this is perfect!!

thanks

You are welcome!

Thanks for arranging todays townhall @Erik Osterman (Cloud Posse), kudos!

ditto, good stuff

Regarding bitly/oauth2_proxy: I mentioned the “sidecar” authentication mode, which is the 401 redirect. Here’s someone else’s writeup of how to use it with nginx. (My usage is very similar…)

I wanted to set up a prometheus machine for me to monitor random stuff, but I was always postpone that because I didn’t want to use SSH port-forwarding, firewalls, create a VPC and/or setup an OpenVPN server or anything like that.

Can somebody remind me where the self-hosted version of the TF Registry is? I remember I saw some repo before summer, but can’t remember now.

oh, i recall seeing an announcement for that

but thought it was for enterprise

Terraform can load private modules from private registries via Terraform Enterprise.

no-no. It is someone’s open initiative, so code was on github 100%

aha

it was incomplete, but still better than starting from scratch probably…

Contribute to terraform-simple-registry development by creating an account on Github.

Thanks, yes!

https://github.com/outsideris/citizen - this is a bit newer

citizen - A Private Terraform Module Registry

@antonbabenko what are you working on?

It is not for me

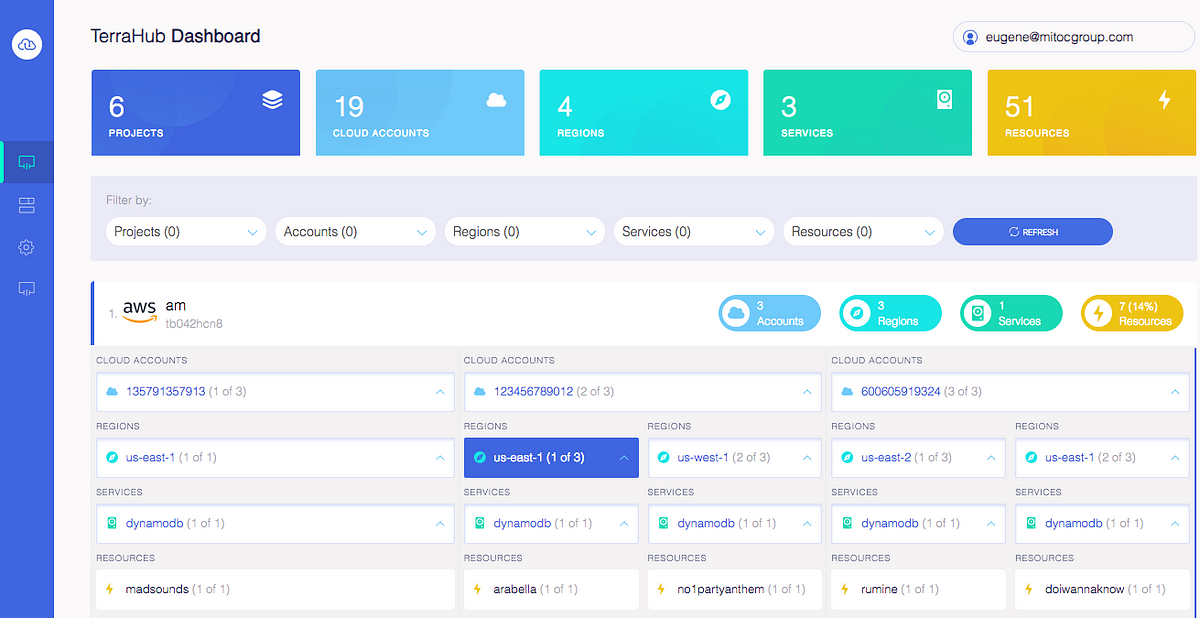

oh wow, that’s cool - terrahub

smart idea for SaaS

Over the last couple of months we have been working with several customers to reduce the development burden of terraform configuration and…

Better explanation

follow me on twitter - https://twitter.com/antonbabenko - I blog almost exclusively about Terraform

The latest Tweets from Anton Babenko (@antonbabenko). AWS / Terraform / Architecture / DevOps fanatic. I like organizing related events and speak often. Oslo, Norway

We have sailed 1500 miles from Spain to Greece and now back to the life on the land!

We had a successful “Town Hall” meeting. Unfortunately, I forgot to click “record”, but will do that next time.

I will be posting some meeting notes later on today

For now, I’ve setup a poll to vote for the next meeting time (for September 5th)

https://doodle.com/poll/d8z7m9u8n2ddtmfp (thanks @antonbabenko for suggestion)

Meetings will be conducted via Zoom (recorded) and held every couple weeks at different times to accommodate different geographies.

Howdy folks. n00b user here! :wave: We’re trying out the AWS “reference architecture” stuff (docs.cloudposse.com/reference-architectures/) and have some n00b questions.

First up… we’ve followed the cold-start process and now have root.example.com, prod.example.com etc. repos, accounts stood up, and a k8s cluster up in prod. Which is cool, but the current Dockerfile is basically an intermingling of configuration and code, which makes updating it to track the “upstream” versions (e.g. prod.cloudposse.co/Dockerfile) awkward. Are there plans to extract the configuration into a separate file in order to make the existing repo more usable long-term, or is this intended more as a “here’s an example of how you might glue this all together” repo rather than a tool you’d use directly?

@tarrall you’re a very advanced “n00b” if you got that far

congrats!

let’s move the discussion to #geodesic if you don’t mind

i’ll answer there

Well, actually much of this was set up when I got here and yup moving

aha, you’re at Flowtune

cool

Here are the minutes from today’s Town Hall meeting:

Today we had our first “Town Hall” meeting where members of our SweetOps community (slack.cloudposse.com) got together on a Zoom conference call to talk shop. Remember to vote when we should have our next call. Discussion Points GitOps - CI/CD Automation of Terraform Git ChatOps OAuth

2018-08-23

oh, i missed the mention of pullrequestreminders, that is awesome, just added it to our workspace

welcome @pecigonzalo

Hi all, We’re looking at implementing the Bastion container alongside github-authorized-keys, but despite following the docs to the letter, I keep getting the below error on github-authorized-keys when attempting to sync users, anyone experienced it before?

{"job":"syncUsers","level":"error","msg":"exit status 1","subsystem":"jobs","time":"2018-08-23T15:16:19Z"}

adduser: Specify only one name in this mode.

I’ve not defined either SYNC_USERS_GID or SYNC_USERS_GROUPS, so it should be defaulting to adduser {username} --disabled-password --force-badname --shell {shell}, which I can’t see why it would be triggering the above error

@adamstrawson I will get back to you in a couple hours.

@adamstrawson can you give me a little bit more context

are you deploying on kubernetes, ECS or regular instances?

adduser: Specify only one name in this mode.

yes, I’ve experienced similar problems before.

So our default command template works with Alpine Linux (if I recall correctly)

adduser is unfortunately not standardized across Linux distributions

this is why we allow the command template to be defined with an environment variable

I presume you’re not running the github-authorized-keys daemon inside of the bastion container (which is not advisable)

@pecigonzalo anything we can help you out with?

Not really at the moment

Hi @Erik Osterman (Cloud Posse), thanks for getting back to me. This is on a regular instance (on DigitalOcean), running Ubuntu 16.04

we’re not tied to using Ubuntu though, so if it’s better supported with Alpine or similar I don’t mind going with that

I just used Ubuntu out of habit for my test servers

@adamstrawson this might be helpful: https://github.com/cloudposse/terraform-template-user-data-github-authorized-keys/blob/master/templates/debian-systemd.sh


that defines a template compatible with Debian systems

(albeit a year or two old)

Great, I’ll have a play with that - Thanks

@Erik Osterman (Cloud Posse) That did the trick, thanks. Next question, I’m afraid: I’m struggling to see how to get the github-authorized-keys container and the bastion container to work together. They’re both running and working independently, but the bastion container doesn’t seem to do anything with the keys (e.g. if I ssh in with -p 1234, I get permission denied). github-authorized-keys has synced the users and keys to the host machine (I can ssh to the host machine fine as a synced user), but the bastion container doesn’t detect any keys. I can’t find anything in the docs on how the two work together

Sure, let me explain (but gimme a few to get back to you)

Thank you, no rush

Are you familiar with the sidecar pattern?

I am

cool

@adamstrawson I’m going to move this discussion to #security

hi there, any tips for getting https://github.com/cloudposse/bastion working on ECS (Amazon Linux)? I have both containers running (bastion and github-authorized-keys), but I’m not really sure how one makes use of the other or which volume mounts I need

bastion - Secure Bastion implemented as Docker Container running Alpine Linux with Google Authenticator & DUO MFA support

@Dave would love to work with you to figure that out. Haven’t yet tried to deploy it that way.

I am about to head to bed, but maybe ping me tomorrow or open up a GitHub issue and we can have a discussion there

@Erik Osterman (Cloud Posse) ok thank you! will do

Basically, you would want to deploy a task with two containers

GitHub authorized keys would be a sidecar to the bastion
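A minimal sketch of that two-container layout as a Terraform `aws_ecs_task_definition`, assuming the EC2 launch type. The Docker Hub image names `cloudposse/bastion` and `cloudposse/github-authorized-keys`, container port 22, host port 1234 (mirroring the `-p 1234` example above), the memory sizes and the environment values are all assumptions; the `GITHUB_API_TOKEN` / `GITHUB_ORGANIZATION` variable names follow the github-authorized-keys README as we recall it, so check them against the version you run. It deliberately leaves out the shared volumes and any privileges that let the bastion consume the keys the sidecar syncs, since this thread doesn't spell them out; the chart's deployment.yaml linked a few messages later is the reference for that wiring.

```hcl
# Hypothetical sketch: one ECS task running the bastion with github-authorized-keys
# as a sidecar. Images, ports, memory and environment values are illustrative
# assumptions. Shared volumes/privileges are intentionally omitted; copy them from
# the bastion chart's deployment.yaml.
resource "aws_ecs_task_definition" "bastion" {
  family = "bastion"

  container_definitions = <<EOF
[
  {
    "name": "bastion",
    "image": "cloudposse/bastion",
    "essential": true,
    "memory": 128,
    "portMappings": [
      { "containerPort": 22, "hostPort": 1234, "protocol": "tcp" }
    ]
  },
  {
    "name": "github-authorized-keys",
    "image": "cloudposse/github-authorized-keys",
    "essential": true,
    "memory": 128,
    "environment": [
      { "name": "GITHUB_API_TOKEN", "value": "REPLACE_ME" },
      { "name": "GITHUB_ORGANIZATION", "value": "example-org" }
    ]
  }
]
EOF
}
```

Both containers are marked `essential`, so ECS restarts the whole task if either one dies, which keeps the bastion from running without its key source.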

Are you familiar with Kubernetes?

I could show you an example there

not really, but I have been looking at https://github.com/cloudposse/charts/blob/master/incubator/bastion/templates/deployment.yaml

charts - The “Cloud Posse” Distribution of Kubernetes Applications

I think I’m close

Yes that’s the one

I’ll open an issue if I can’t figure it out by the end of the day (here in Melbourne, AU). I may open an issue anyway, to share my task definition

Would love for you to share that task definition if you get it working

I think others would dig it

yeah no worries

2018-08-24

Not sure if anyone else listens to this, but there’s generally some pretty good stuff on here: https://kubernetespodcast.com/

Thanks for sharing. Listened to an episode today in the car. Got a lot out of it.

2018-08-25

@Dave how did it go with the bastion on ECS?

2018-08-26

Hey everyone, I’ve invited @alex.somesan. He previously worked at CoreOS on Tectonic and will now work on the Kubernetes Provider at HashiCorp. Welcome!

Hi all! Thanks for having me around. This channel feels familiar. As Maarten said, I was involved in writing the installer tool for Tectonic on a few different cloud providers, and before that I spent a few years at AWS. I hope I can help out around here. See you around!

And we are both horrible Terraform User Group organizers in Berlin

The worst!

@alex.somesan welcome!

I never got to use Tectonic, but heard great things about the distribution

We could certainly learn a lot from someone with your background!

So you’re now working with the terraform-kubernetes-provider?

I’m starting with HashiCorp at the beginning of September. Most likely that provider will be my first endeavor there.

Cool - we haven’t started using that provider yet for anything. We mostly use Helm for everything now. But I would be open to learning about complementary use-cases where the provider would help us out.

Hi @alex.somesan and welcome.

Who will be #100?

2018-08-27

There are no events this week

since we have quite a few new members since last week, I wanted to reintroduce the “Town Hall” meeting we have going on here

basically, we hold a ~1 hour call via Zoom. it’s an opportunity for everyone to get to know each other, share what they’re working on, ask questions, and help us steer the direction of what we’re building

We have a poll here: https://doodle.com/poll/d8z7m9u8n2ddtmfp

Meetings will be conducted via Zoom (recorded) and held every couple weeks at different times to accommodate different geographies.

Vote for the next time that works for you. We may run multiple meetings so we can be as inclusive as possible.

2018-08-30

Hello friends, I have a question regarding the rds_cluster_aurora_mysql terraform module. I have a security group with my IP as an ingress rule, and I pass that group to the module via the “security_groups” parameter, but when I try to connect from our IP, the connection is refused. If I instead add the exact same rule to the security group the module creates, it works properly. Any ideas?

Also does anyone use Mesosphere DC/OS ?

hi @Matthew

regarding terraform-aws-rds-cluster

you said “and it’ll work properly.” Did you test it, or do you just think it will work?

If I add my ingress IP to the generated security group the module creates, it works

But when I attach the security group with the same ingress IP rule to the generated security group the module creates, it doesn’t work

did you actually test adding your ingress IP to the generated security group the module creates?

Yes

did you place the cluster instances into public or private subnets?

public

If the module created a security group and let me specify some ingress rules, that would solve my problem

But as far as I can see, it only lets you attach pre-made security groups

do you see the other security group (with your IP) in the list of ingress rules for the cluster security group (that the module created)?

Yes

can you share screenshots of the two SGs with their ingress rules?
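A note on the behavior described above: an AWS security group rule whose source is another security group admits traffic from network interfaces that are members of that source group; it does not inherit the source group’s own ingress rules. So passing a group that merely lists your IP does not by itself admit your IP, which would be consistent with what Matthew sees. A minimal sketch of the alternative, a CIDR rule placed directly on the module-created group; the CIDR, the MySQL port 3306, and how you obtain that group’s ID (shown here as a hypothetical input variable) are assumptions.

```hcl
# Hypothetical sketch: allow one public IP straight into the security group
# created by the rds-cluster module. The variable name and CIDR are illustrative.
variable "cluster_security_group_id" {
  description = "ID of the security group created by the rds-cluster module"
}

resource "aws_security_group_rule" "allow_my_ip" {
  type              = "ingress"
  from_port         = 3306 # Aurora MySQL default port
  to_port           = 3306
  protocol          = "tcp"
  cidr_blocks       = ["203.0.113.10/32"] # replace with your own public IP
  security_group_id = "${var.cluster_security_group_id}"
}
```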

2018-08-31

Quick question about terraform-aws-rds-cluster outputs… How do I get the cluster endpoint?

hi @Jeff

The endpoint and reader_endpoint are not in the outputs of the module.

https://www.terraform.io/docs/providers/aws/r/rds_cluster.html#attributes-reference

If you need them, you can open an issue, and we’ll add them.
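Until the module exposes them, one possible workaround is to look the cluster up with the AWS provider’s `aws_rds_cluster` data source and output the raw endpoints yourself. This sketch assumes the module instance is named `rds_cluster_aurora_postgres` (as in the config posted further down) and relies on its `cluster_name` output, which that config describes as the cluster identifier.

```hcl
# Hypothetical workaround: read back the cluster created by the module and
# expose its endpoints. The module name is an assumption.
data "aws_rds_cluster" "default" {
  cluster_identifier = "${module.rds_cluster_aurora_postgres.cluster_name}"
}

output "endpoint" {
  value       = "${data.aws_rds_cluster.default.endpoint}"
  description = "Writer endpoint of the cluster"
}

output "reader_endpoint" {
  value       = "${data.aws_rds_cluster.default.reader_endpoint}"
  description = "Reader endpoint of the cluster"
}
```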

But you can provide a Route53 Zone Id, and the module will create subdomains (more friendly names) for both master and replicas. https://github.com/cloudposse/terraform-aws-rds-cluster/blob/master/outputs.tf#L21

Using Route53 is better: if you use those hostnames in your app and then rebuild the cluster, you won’t have to update them

(if you use endpoint and reader_endpoint, they will change after each rebuild).

Manages a RDS Aurora Cluster

Terraform module to provision an RDS Aurora cluster for MySQL or Postgres - cloudposse/terraform-aws-rds-cluster

ahh ok

do you have an example of that somewhere?

Collection of Terraform root module invocations for provisioning reference architectures - cloudposse/terraform-root-modules


Is there a recipe somewhere for setting up a service with WebSocket/WebRTC ingress under Kubernetes on AWS?

There shouldn’t be anything necessary to add. I believe @Daren and @Max Moon haven’t had problems with this.

This is supported here: https://github.com/nginxinc/kubernetes-ingress/issues/322

The documentation on WebSockets mentions: To load balance a WebSocket application with NGINX Ingress controllers, you need to add the nginx.org/websocket-services annotation to your Ingress resourc…

(Note that annotation is for the commercial edition which does not apply to you)

NGINX Ingress Controller for Kubernetes https://kubernetes.github.io/ingress-nginx/ - kubernetes/ingress-nginx

“Support for websockets is provided by NGINX out of the box. No special configuration required.”

So I guess what I mean to say is just configure the ingress as you would any other service.
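The same ingress-nginx documentation also notes that, to keep long-lived WebSocket connections from being closed, `proxy-read-timeout` and `proxy-send-timeout` may need to be raised. A minimal sketch using the `nginx.ingress.kubernetes.io/*` annotations, written with the Terraform Kubernetes provider’s `kubernetes_ingress_v1` resource (which postdates this thread, so this assumes a current provider); the host, service name, port and one-hour timeout are hypothetical.

```hcl
# Hypothetical sketch: an ordinary ingress for a WebSocket service, with longer
# proxy timeouts (in seconds) so idle connections are not cut off.
resource "kubernetes_ingress_v1" "websocket_app" {
  metadata {
    name = "websocket-app"
    annotations = {
      "nginx.ingress.kubernetes.io/proxy-read-timeout" = "3600"
      "nginx.ingress.kubernetes.io/proxy-send-timeout" = "3600"
    }
  }

  spec {
    ingress_class_name = "nginx"

    rule {
      host = "ws.example.com"
      http {
        path {
          path      = "/"
          path_type = "Prefix"
          backend {
            service {
              name = "websocket-app"
              port {
                number = 8080
              }
            }
          }
        }
      }
    }
  }
}
```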

Thanks. I know ELB doesn’t support WebSocket stickiness, and I forgot we’re already using nginx.

For cloudposse/terraform-aws-rds-cluster… does anyone know why it won’t let me assign the value of a var for admin_password?

I get the following error…

i’ll check @Jeff

just created a cluster with the following config

    module "rds_cluster_aurora_postgres" {
      source             = "git::https://github.com/cloudposse/terraform-aws-rds-cluster.git?ref=master"
      name               = "postgres"
      engine             = "aurora-postgresql"
      cluster_family     = "aurora-postgresql9.6"
      cluster_size       = "2"
      namespace          = "eg"
      stage              = "dev"
      admin_user         = "admin1"
      admin_password     = "Test123456789"
      db_name            = "dbname"
      instance_type      = "db.r4.large"
      vpc_id             = "vpc-XXXXXXXX"
      availability_zones = ["us-east-1a", "us-east-1b"]
      security_groups    = []
      subnets            = ["subnet-XXXXXXXX", "subnet-XXXXXXXX"]
      zone_id            = "ZXXXXXXXXXXXX"
    }

    output "name" {
      value       = "${module.rds_cluster_aurora_postgres.name}"
      description = "Database name"
    }

    output "user" {
      value       = "${module.rds_cluster_aurora_postgres.user}"
      description = "Username for the master DB user"
    }

    output "password" {
      value       = "${module.rds_cluster_aurora_postgres.password}"
      description = "Password for the master DB user"
    }

    output "cluster_name" {
      value       = "${module.rds_cluster_aurora_postgres.cluster_name}"
      description = "Cluster Identifier"
    }

    output "master_host" {
      value       = "${module.rds_cluster_aurora_postgres.master_host}"
      description = "DB Master hostname"
    }

    output "replicas_host" {
      value       = "${module.rds_cluster_aurora_postgres.replicas_host}"
      description = "Replicas hostname"
    }

    Outputs:

    cluster_name = eg-dev-postgres
    master_host = master.postgres.XXXXXXXXX.net
    name = dbname
    password = Test123456789
    replicas_host = replicas.postgres.XXXXXXXX.net
    user = admin1

@Jeff not sure why you have the error
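For comparison with the literal value above, a minimal sketch of passing `admin_password` through an input variable. The variable name `admin_password` is our choice; every module argument is copied from the config above. You would supply the value with `-var`, a `.tfvars` file, or the `TF_VAR_admin_password` environment variable, and marking the output `sensitive` keeps it out of the apply summary.

```hcl
# Hypothetical sketch: same module call as above, with the password taken from
# an input variable instead of a hard-coded string.
variable "admin_password" {
  description = "Master password for the Aurora cluster"
}

module "rds_cluster_aurora_postgres" {
  source             = "git::https://github.com/cloudposse/terraform-aws-rds-cluster.git?ref=master"
  name               = "postgres"
  engine             = "aurora-postgresql"
  cluster_family     = "aurora-postgresql9.6"
  cluster_size       = "2"
  namespace          = "eg"
  stage              = "dev"
  admin_user         = "admin1"
  admin_password     = "${var.admin_password}"
  db_name            = "dbname"
  instance_type      = "db.r4.large"
  vpc_id             = "vpc-XXXXXXXX"
  availability_zones = ["us-east-1a", "us-east-1b"]
  security_groups    = []
  subnets            = ["subnet-XXXXXXXX", "subnet-XXXXXXXX"]
  zone_id            = "ZXXXXXXXXXXXX"
}

output "password" {
  value       = "${module.rds_cluster_aurora_postgres.password}"
  description = "Password for the master DB user"
  sensitive   = true
}
```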