#announcements (2018-11)

Cloud Posse Open Source Community #geodesic #terraform #release-engineering #random #releases #docs

This channel is for workspace-wide communication and announcements. All members are in this channel.

Archive: https://archive.sweetops.com

2018-11-01

hey everyone give a warm welcome to @solairerove! Good to have you here

hi @solairerove, what brings you here?

hi, Erik sent me the link after the interview

That’s great, welcome

thx)

hey everyone give a warm welcome to @inactive! Good to have you here

Hi @inactive, what are you working on?

Hi Maarten

I am currently working with Terraform. I found one of your modules, one thing led to another, and eventually I joined your Slack channel

hey everyone give a warm welcome to @onzyone! Good to have you here

Ah, there are a few people here who can help you out if you get stuck, especially during PST working hours

Yes, thanks. I submitted an issue on Github a few days ago. Just waiting for a response.

thanks @inactive, remind me which repo you submitted the issue to

Hello, I recently started using your module and was able to create a new Elastic Beanstalk environment successfully. However, I need my load balancers to be internal facing only. I could not find a…

need to look into that. If you know how to implement and test it, submit a PR and we’ll review it promptly

thank you, @Andriy Knysh (Cloud Posse). I am not entirely sure how to implement this myself. I know that it is possible, since it all boils down to a CloudFormation template
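For reference, a minimal sketch of an internal-facing load balancer using the raw `aws_elastic_beanstalk_environment` resource rather than the module (the module's own variables for this aren't covered in the thread). It relies on Elastic Beanstalk's `ELBScheme` option in the `aws:ec2:vpc` namespace; the environment name, application, solution stack, VPC ID and subnet IDs are hypothetical inputs, and the syntax is Terraform 0.11 as used here.

```hcl
# Hypothetical inputs: supply real values for your account
variable "application_name" {}
variable "solution_stack_name" {}
variable "vpc_id" {}

variable "private_subnet_ids" {
  type = "list"
}

resource "aws_elastic_beanstalk_environment" "default" {
  name                = "example-env" # placeholder
  application         = "${var.application_name}"
  solution_stack_name = "${var.solution_stack_name}"

  # Launch the environment inside the VPC
  setting {
    namespace = "aws:ec2:vpc"
    name      = "VPCId"
    value     = "${var.vpc_id}"
  }

  # Instances go into the private subnets
  setting {
    namespace = "aws:ec2:vpc"
    name      = "Subnets"
    value     = "${join(",", var.private_subnet_ids)}"
  }

  # The load balancer goes into the private subnets as well
  setting {
    namespace = "aws:ec2:vpc"
    name      = "ELBSubnets"
    value     = "${join(",", var.private_subnet_ids)}"
  }

  # "internal" keeps the load balancer reachable only from inside the VPC
  setting {
    namespace = "aws:ec2:vpc"
    name      = "ELBScheme"
    value     = "internal"
  }
}
```

`ELBScheme` only matters for environments launched in a VPC, which is why the sketch also sets the VPC and subnets.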

welcome @solairerove @inactive @onzyone

hello!

hi there

hey everyone give a warm welcome to @andreveelken! Good to have you here

if you have questions or need help, just ask

Thank you!

hey @andreveelken

hey

I am looking for a simple module that I can use to create CNAMEs for AWS resource endpoints

I did notice that there is one, but it also creates a cert …

Terraform module to request an ACM certificate for a domain name and create a CNAME record in the DNS zone to complete certificate validation - cloudposse/terraform-aws-acm-request-certificate

that one is to request an SSL cert and validate using DNS

take a look at these:

Terraform Module to Define Vanity Host/Domain (e.g. [brand.com](http://brand.com)) as an ALIAS record - cloudposse/terraform-aws-route53-alias

I looked at this one, but the type is hardcoded

but it makes sense based on the name of the module

line 11: `type = "A"`

Terraform module to easily define consistent cluster domains on Route53 (e.g. [prod.ourcompany.com](http://prod.ourcompany.com)) - cloudposse/terraform-aws-route53-cluster-zone

Terraform module to define a consistent AWS Route53 hostname - cloudposse/terraform-aws-route53-cluster-hostname

This one looks like what I am looking for

if just for plain RDS (not Aurora), we have a similar module https://github.com/cloudposse/terraform-aws-rds/blob/master/main.tf#L91

Terraform module to provision AWS RDS instances. Contribute to cloudposse/terraform-aws-rds development by creating an account on GitHub.

terraform-aws-route53-alias is to create aliases to existing AWS resources

Aliases are the way to go: they save the requester another DNS request.

yes
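To illustrate the difference: an ALIAS is a Route53 `A` record with an `alias` block, which Route53 answers directly with the target's current addresses. That is also why `terraform-aws-route53-alias` hardcodes `type = "A"`. A minimal sketch; the zone ID, load balancer DNS name and load balancer hosted zone ID are hypothetical inputs (for an ELB they come from its `dns_name` and `zone_id` attributes).

```hcl
# Hypothetical inputs
variable "zone_id" {}     # Route53 zone that will hold the record
variable "lb_dns_name" {} # e.g. aws_elb.default.dns_name
variable "lb_zone_id" {}  # e.g. aws_elb.default.zone_id (the ELB's own hosted zone)

# ALIAS: an A record resolved by Route53 itself, so the client's resolver
# does not need the extra lookup that a CNAME would require
resource "aws_route53_record" "vanity" {
  zone_id = "${var.zone_id}"
  name    = "app.example.net" # placeholder hostname
  type    = "A"

  alias {
    name                   = "${var.lb_dns_name}"
    zone_id                = "${var.lb_zone_id}"
    evaluate_target_health = false
  }
}
```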

interesting …

I have always used cnames

for hostnames, look at this example https://github.com/cloudposse/terraform-aws-rds-cluster/blob/master/main.tf#L107

Terraform module to provision an RDS Aurora cluster for MySQL or Postgres - cloudposse/terraform-aws-rds-cluster

but if there is a better way of doing it … I am open to trying it

the better way is not to use CNAMEs, as @maarten pointed out

ya, it is for a Postgres RDS instance right now

so the rds-cluster module will work well too

if you already have AWS resources, you can always create an ALIAS or a new hostname in a different DNS zone to point to a resource

this is perfect

what is the recommended way of passing in the zone_id?

you probably only need a CNAME in one case: you have a domain name (e.g. [example.com](http://example.com)) at, let’s say, GoDaddy, and you want to point it to a different domain, let’s say [prod.example.net](http://prod.example.net), in Route53

you can find the zone_id in Route53

or, if you create it in TF, you already have that ID as the output

and you are right that you want a new hostname for an RDS instance: if you just give `aws_db_instance.default.address` to the application, the next time you recreate the instance the endpoint will change (and the app will crash)

with a dedicated hostname (e.g. [postgres.example.net](http://postgres.example.net)), even if you recreate the instance, the hostname will be updated as well to point to the new instance
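A minimal sketch of that dedicated-hostname approach for a plain RDS instance, looking up `zone_id` with the `aws_route53_zone` data source instead of copying it from the console. An RDS endpoint is itself a hostname and is not a Route53 alias target, so this record is a CNAME. It assumes an `aws_db_instance.default` defined elsewhere in the same configuration; the zone and hostname are placeholders.

```hcl
# Look up an existing hosted zone by name (placeholder domain)
data "aws_route53_zone" "selected" {
  name = "example.net."
}

# postgres.example.net -> current RDS endpoint. Because the record references the
# instance attribute, recreating the instance updates the record on the same apply.
# Assumes aws_db_instance.default exists elsewhere in this configuration.
resource "aws_route53_record" "postgres" {
  zone_id = "${data.aws_route53_zone.selected.zone_id}"
  name    = "postgres.example.net"
  type    = "CNAME"
  ttl     = "60"
  records = ["${aws_db_instance.default.address}"]
}
```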

ya, that is what I was driving at …

this is the first time that I have used TF … but it is a great tool so far

and cloudposse github is great too!!!

let’s move to #terraform

hey everyone give a warm welcome to @dustinvb! Good to have you here

Have you signed up for our Newsletter? It covers everything on our technology radar. Receive updates on what we’re up to on GitHub as well as awesome new projects we discover.

hey everyone give a warm welcome to @btai! Good to have you here

hey @dustinvb! check out the #release-engineering channel - that’s probably the most relevant

2018-11-02

1.9 billion valuation!? Welp

has anyone worked on multi-region S3 website architecture in Terraform, similar to what’s documented here https://read.iopipe.com/multi-region-s3-failover-w-route53-64ff2357aa30

A couple weeks before writing this post, AWS had a single-region failure of S3. It was the worst failure of S3 ever, and it took down many…

@mmarseglia sounds interesting, but no, we did not work on that

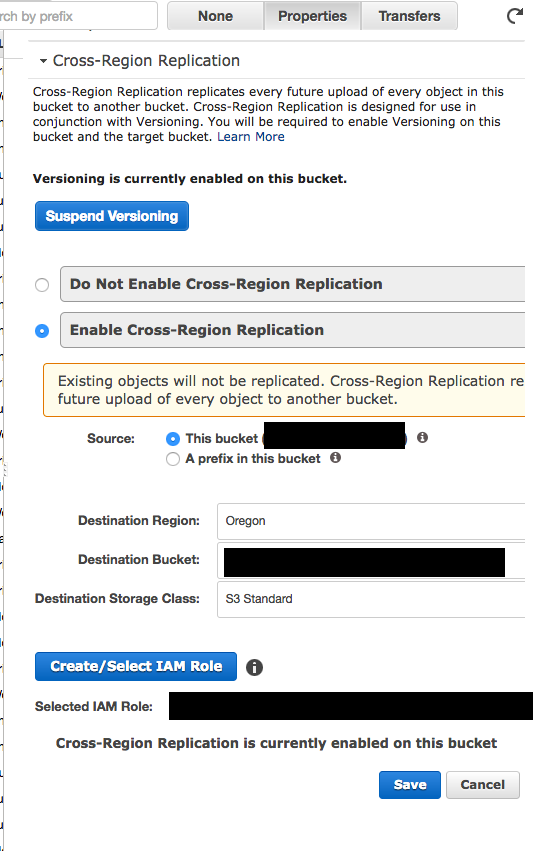

sounds like you need to create a) buckets with cross-region replication; b) health checks for the buckets and CloudFront; c) a failover routing policy in Route53
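Part c) could look roughly like this: a Route53 health check on the primary endpoint plus a PRIMARY/SECONDARY pair of alias records with a failover routing policy. This is a sketch only; the record name, both endpoints and their hosted zone IDs are hypothetical inputs, and bucket replication is not covered here.

```hcl
# Hypothetical inputs
variable "zone_id" {}
variable "primary_endpoint" {}           # e.g. an S3 website or CloudFront hostname
variable "primary_endpoint_zone_id" {}   # hosted zone ID of that endpoint
variable "secondary_endpoint" {}
variable "secondary_endpoint_zone_id" {}

# Route53 probes the primary endpoint; 3 consecutive failures mark it unhealthy
resource "aws_route53_health_check" "primary" {
  fqdn              = "${var.primary_endpoint}"
  port              = 80
  type              = "HTTP"
  resource_path     = "/"
  failure_threshold = "3"
  request_interval  = "30"
}

# Answered while the health check passes
resource "aws_route53_record" "primary" {
  zone_id         = "${var.zone_id}"
  name            = "www.example.com" # placeholder
  type            = "A"
  set_identifier  = "primary"
  health_check_id = "${aws_route53_health_check.primary.id}"

  failover_routing_policy {
    type = "PRIMARY"
  }

  alias {
    name                   = "${var.primary_endpoint}"
    zone_id                = "${var.primary_endpoint_zone_id}"
    evaluate_target_health = true
  }
}

# Answered when the primary is unhealthy
resource "aws_route53_record" "secondary" {
  zone_id        = "${var.zone_id}"
  name           = "www.example.com" # placeholder
  type           = "A"
  set_identifier = "secondary"

  failover_routing_policy {
    type = "SECONDARY"
  }

  alias {
    name                   = "${var.secondary_endpoint}"
    zone_id                = "${var.secondary_endpoint_zone_id}"
    evaluate_target_health = true
  }
}
```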

i tried doing some of it but got stuck on the s3_bucket_policy

i have a module that creates the buckets. to do that, i had to pass two providers each configured for a different region.

but I got stuck on the policy because it doesn’t accept provider as a parameter. so it tries to create a policy using the default provider, which is in a different region than the replica bucket

i’d have to get some code available to fully illustrate, i guess.

did you look at this https://docs.aws.amazon.com/AmazonS3/latest/dev/setting-repl-config-perm-overview.html

When setting up cross-region replication, you must acquire necessary permissions as follows:

looks like you need just one policy

oh good!

i think i see a way forward

Yea the policy should be for the same provider as the source bucket

Both source and destination buckets must have versioning enabled.

The source and destination buckets must be in different AWS Regions.

Amazon S3 must have permissions to replicate objects from the source bucket to the destination bucket on your behalf.
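Putting those three requirements together, a minimal Terraform 0.11 sketch of cross-region replication with two provider configurations. Only the replica bucket needs `provider = "aws.replica"`: every resource accepts the `provider` meta-argument, and IAM is global, so the role and its single policy can use the default provider. Bucket names, role names and regions are placeholders.

```hcl
# Source region (default provider) and replica region (aliased); example regions
provider "aws" {
  region = "us-east-1"
}

provider "aws" {
  alias  = "replica"
  region = "us-west-2"
}

# Destination bucket in the replica region; versioning is required
resource "aws_s3_bucket" "replica" {
  provider = "aws.replica"
  bucket   = "example-site-replica" # placeholder

  versioning {
    enabled = true
  }
}

# Role that S3 assumes to replicate objects on your behalf
resource "aws_iam_role" "replication" {
  name = "example-s3-replication" # placeholder

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    { "Effect": "Allow", "Principal": { "Service": "s3.amazonaws.com" }, "Action": "sts:AssumeRole" }
  ]
}
EOF
}

# The one policy: read from the source bucket, replicate into the destination
data "aws_iam_policy_document" "replication" {
  statement {
    actions   = ["s3:GetReplicationConfiguration", "s3:ListBucket"]
    resources = ["${aws_s3_bucket.source.arn}"]
  }

  statement {
    actions   = ["s3:GetObjectVersion", "s3:GetObjectVersionAcl"]
    resources = ["${aws_s3_bucket.source.arn}/*"]
  }

  statement {
    actions   = ["s3:ReplicateObject", "s3:ReplicateDelete"]
    resources = ["${aws_s3_bucket.replica.arn}/*"]
  }
}

resource "aws_iam_role_policy" "replication" {
  name   = "example-s3-replication" # placeholder
  role   = "${aws_iam_role.replication.id}"
  policy = "${data.aws_iam_policy_document.replication.json}"
}

# Source bucket in the default region; versioning is required here too
resource "aws_s3_bucket" "source" {
  bucket = "example-site-source" # placeholder

  versioning {
    enabled = true
  }

  replication_configuration {
    role = "${aws_iam_role.replication.arn}"

    rules {
      id     = "replicate-all"
      prefix = ""
      status = "Enabled"

      destination {
        bucket = "${aws_s3_bucket.replica.arn}"
      }
    }
  }
}
```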

2018-11-03

hey everyone give a warm welcome to @nnamani.kenechukwu! Good to have you here

Just a quick question. What could cause CloudFront logging to S3 to stop abruptly? I have checked that the S3 bucket has write permission enabled. Also, there are old logs from CloudFront in the bucket, but no new ones.

Hi @nnamani.kenechukwu

CloudFront logs are not flushed in real time; I forget the exact timeframe in which they are flushed to S3. So if a particular endpoint is not receiving much traffic, it can take a while. That could be one reason.

Use log files to get information about user requests for your objects.

“Note, however, that some or all log file entries for a time period can sometimes be delayed by up to 24 hours. When log entries are delayed, CloudFront saves them in a log file for which the file name includes the date and time of the period in which the requests occurred, not the date and time when the file was delivered.”

@maarten thank you for your prompt response. The last logs from CloudFront in the S3 bucket date back to 2017, which makes me think that something is wrong.

haha, yes, that’s not a normal delay

I’d use AWS Support for something like this, they can figure that out.

A few things you can do tho

Did logging stop, or did traffic to the CF distribution stop?

If you run Apache Bench against the CloudFront endpoint for a while, you should normally see logs fairly quickly

Alright, I will go with your recommendations. I do know that the traffic to the CF distribution didn’t stop, but I will run Apache Bench and also reach out to AWS Support.

Thanks a lot

Sure, good luck!

hey everyone give a warm welcome to @github140! Good to have you here

hey everyone give a warm welcome to @OScar! Good to have you here

Nice coffee cup! :-)

@here I am trying to set up CloudTrail logs for several AWS accounts. I deployed the trail and bucket with my config using this terraform repo https://github.com/cloudposse/terraform-aws-cloudtrail-s3-bucket . Do I have to deploy via Terraform in each of the accounts? Sorry, first time using CloudTrail…

S3 bucket with built in IAM policy to allow CloudTrail logs - cloudposse/terraform-aws-cloudtrail-s3-bucket

Yes that’s accurate

If you check our root modules project on GitHub you’ll see some examples

Sorry - on phone so hard to link out

2018-11-04

hey everyone give a warm welcome to @ramesh.mimit! Good to have you here

Welcome Ramesh!

2018-11-05

hey everyone give a warm welcome to @rolf! Good to have you here

There are no events this week

welcome @rolf

@OScar here are some examples for CloudTrail. We set up the bucket in a separate AWS audit account (so only a few people, if any, will have access to it)

Collection of Terraform root module invocations for provisioning reference architectures - cloudposse/terraform-root-modules

and then set up CloudTrail(s) for all other accounts (root, prod, staging, etc) https://github.com/cloudposse/terraform-root-modules/tree/master/aws/cloudtrail

@Andriy Knysh (Cloud Posse) thank you, it looks like with the sample `main.tf` file in the root modules repo, we simply tell it to assume a role in order to deploy CloudTrail to that account, as per your comment. Ok, I will try this, thanks for your response!

@Andriy Knysh (Cloud Posse) @Erik Osterman (Cloud Posse) One other question: I managed to see logs for multiple accounts in that same bucket! But the data logged is basic. It appears I need further configuration to get VPC logs, or logs from other services, sent to the S3 bucket. Is this done via CloudWatch/SNS topics, or am I way off?

use the `event_selector` variable

Provides a CloudTrail resource.

Terraform module to provision an AWS CloudTrail and an encrypted S3 bucket with versioning to store CloudTrail logs - cloudposse/terraform-aws-cloudtrail

@Andriy Knysh (Cloud Posse) how would i use the event_selector parameter for cloudtrail?

it’s a variable in the module

yep, understood: `event_selector = ""`. What would a sample value look like?

```hcl
event_selector = [{
  read_write_type           = "All"
  include_management_events = true

  data_resource {
    type   = "AWS::S3::Object"
    values = ["arn:aws:s3:::"]
  }
}]
```

ah duh!

ok my goal is to be able to log events from a lot of services

it’s a list of maps
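For comparison, the underlying `aws_cloudtrail` resource takes the same data as repeated `event_selector` blocks. A sketch, assuming an existing bucket that already allows CloudTrail writes (its name is a hypothetical input): the first selector keeps management events and adds S3 object-level events for all buckets, the second adds Lambda invoke events for all functions. Data events like these cover S3 objects and Lambda functions; VPC network traffic is captured by VPC Flow Logs, a separate feature.

```hcl
# Hypothetical input: an existing bucket whose policy allows CloudTrail to write
variable "trail_bucket_name" {}

resource "aws_cloudtrail" "default" {
  name                          = "example-trail" # placeholder
  s3_bucket_name                = "${var.trail_bucket_name}"
  include_global_service_events = true
  is_multi_region_trail         = true

  # Management events plus S3 object-level (data) events for all buckets
  event_selector {
    read_write_type           = "All"
    include_management_events = true

    data_resource {
      type   = "AWS::S3::Object"
      values = ["arn:aws:s3:::"]
    }
  }

  # Lambda invoke (data) events for all functions
  event_selector {
    read_write_type           = "All"
    include_management_events = false

    data_resource {
      type   = "AWS::Lambda::Function"
      values = ["arn:aws:lambda"]
    }
  }
}
```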

it looks like with the sample `main.tf` file in the root modules repo, we simply tell it to assume a role in order to deploy CloudTrail to that account

assuming roles is not a feature of the module or of CloudTrail. It’s how we log in to AWS accounts using geodesic. You need to consider the root modules as examples of invocation (not to be used verbatim)

Thank you @Andriy Knysh (Cloud Posse) understood on not using root modules verbatim - i do appreciate the ability to assume a role since executing terraform under said role would help deploy the CloudTrail to that account…but totally understand your point. I am looking at the AWS:cloudtrail bucket events object now..
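The role assumption lives in the AWS provider configuration, not in the CloudTrail module. A minimal sketch of a provider that assumes a role in the target account before creating anything there; the region, account ID and role name are placeholders (`OrganizationAccountAccessRole` is only the default name AWS Organizations gives the role it creates in member accounts).

```hcl
provider "aws" {
  region = "us-east-1" # placeholder

  # Every resource using this provider is created in the account that owns the role
  assume_role {
    role_arn     = "arn:aws:iam::111111111111:role/OrganizationAccountAccessRole" # placeholder
    session_name = "terraform"
  }
}
```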

@here whenever i use the variable kms_key_id from this location https://github.com/cloudposse/terraform-aws-cloudtrail/blob/master/variables.tf i get a terraform error.

```
Error: Error running plan: 3 error(s) occurred:

- module.cloudtrail.output.cloudtrail_id: Resource 'aws_cloudtrail.default' not found for variable 'aws_cloudtrail.default.id'
- module.cloudtrail.output.cloudtrail_arn: Resource 'aws_cloudtrail.default' not found for variable 'aws_cloudtrail.default.arn'
- module.cloudtrail.output.cloudtrail_home_region: Resource 'aws_cloudtrail.default' not found for variable 'aws_cloudtrail.default.home_region'
```

any thoughts?

Are you using this output immediately?

sounds like a race condition

if you create the key in another module

thank you @Andriy Knysh (Cloud Posse) let me try that now

@Andriy Knysh (Cloud Posse) i think this is where the depends_on can be applied in terraform for cloudtrail module

so that the key gets created first

let me try that

If you have the key creation in one module and this module in another, you will need a `depends_on`, but you’ll have to use a `null_resource` to make the connection (I believe until 0.12 is out and stable)

hmm, @catdevman, you lost me a bit

Sorry. Is your KMS creation in a module?

to test the theory, use `terraform apply -target` to create the key first, and then `-target` to create the CloudTrail module. It could be something else, so I’d test it first

normally dependencies also work across modules, i.e. if kms_id comes as an attribute from the `aws_kms_key` resource, a race condition like this would not happen.

Yeah just wanted to make sure that if the KMS key was being created by a module you didn’t run into: https://github.com/hashicorp/terraform/issues/18239

I know this has bit me numerous times in the past.

Terraform Version v0.11.7 Terraform Configuration Files Note that the below Terraform configuration file leaves out the actual modules some-module and another-module for sake of readability. module…

this is not really an issue as long as one can use the output of something as input for something else. Things get difficult when that’s not possible, and then your null_resource comes into play.

Thanks everyone - I think I first need to make sure I am creating all the resources for AWS KMS in order to use the key. As of now I only have this snippet:

```hcl
resource "aws_kms_key" "cloudtrail_kms_key" {
  description             = "KMS key used for encrypting logs."
  deletion_window_in_days = 10
}
```

Terraform module to provision a KMS key with alias - cloudposse/terraform-aws-kms-key

thank you again @Andriy Knysh (Cloud Posse) let me add this module real quick!

if you do not provide the key, do you see the same errors? (just checking that it’s some kind of race condition issue and not something else)

@Andriy Knysh (Cloud Posse) if i don’t use the key parameter, cloudtrail module works

hey everyone give a warm welcome to @egonzales! Good to have you here

hi @egonzales

@Andriy Knysh (Cloud Posse) check out my entire code base here.

I am still getting the error sadly

try `terraform plan/apply -target=module.kms_key`

to create the key first

if that works, we’ll take a look at the race condition

and don’t use `master` for the module sources; pin to a release
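Pinning means putting a release tag in the `ref` query parameter of the Git source. A sketch; the tag `0.1.0` and every input value below are placeholders, so check the repository's releases for a real tag and its README for the inputs that release accepts.

```hcl
module "kms_key" {
  # Pinned to a release tag instead of master ("0.1.0" is a placeholder tag)
  source = "git::https://github.com/cloudposse/terraform-aws-kms-key.git?ref=tags/0.1.0"

  # Placeholder values
  namespace   = "example"
  stage       = "audit"
  name        = "cloudtrail"
  description = "KMS key for CloudTrail logs"
  alias       = "alias/example-audit-cloudtrail"
}
```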

@Andriy Knysh (Cloud Posse) this worked! `terraform apply -target=module.kms_key`

key is created

i’ll do the other command now

(you need to update the description and alias for kms_key module)

the other command could be just terraform apply (no need to use -target)

Thanks @Andriy Knysh (Cloud Posse) to confirm, I created the CloudTrail and S3 Bucket successfully. The CloudTrail module does include the kms_key_id which I pasted prior to running terraform apply

you don’t need to paste anything though

terraform apply -target=module.kms_key will save the created resource into the state file

next, terraform apply will read the state file and see the created key and use the ID

looks like my s3 bucket policy is not working…

```
module.cloudtrail_s3_bucket.module.s3_bucket.aws_s3_bucket.default: 1 error(s) occurred:

- aws_s3_bucket.default: Error putting S3 policy: MalformedPolicy: Policy has invalid resource status code: 400, request id: B2B6570282B3F8D2
```

that is the message after i executed my last command

MalformedPolicy is a new error that happened only after I used the kms_key_id in the cloudtrail module

so if you use kms_key_id = "", it works?

actually if i omit the kms_key_id param for the cloudtrail module, the CloudTrail gets created

if i use `kms_key_id = ""` it does not throw an error

strange

if you can’t find out why it does that, we’ll have to take a look and apply the code with the key

so i also tried copying the key id from the web console and pasting it in the kms_key_id value, and that is when i got the policy error for the s3 bucket

So for now, i’ll have to forgo using encryption

@Andriy Knysh (Cloud Posse) just to be clear, I am not using Chamber, just wanted to create the key in AWS to use for the cloudtrail module

yea I understand. We did not, at least recently, test the CloudTrail module with an external KMS key. I’ll have to apply your code and see what happens. Thanks for finding the issues @OScar

can you open an issue here https://github.com/cloudposse/terraform-aws-cloudtrail/issues

Hi @Andriy Knysh (Cloud Posse) just wanted to get back to you with this one. I did make it work, and mainly I was missing a KMS Policy that allowed CloudTrail to encrypt/decrypt. So I don’t believe the issue was with your KMS Module

thanks

so it’s an addition to the CloudTrail module?

Yeah so because i was specifying a key in CloudTrail module, the key policy needed to be modified..
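A sketch of that fix for a single account: a key policy that keeps the account in control of the key and lets `cloudtrail.amazonaws.com` generate data keys for this account's trails and describe the key, with the trail taking the key ARN directly. Referencing `aws_kms_key.cloudtrail_kms_key.arn` also gives Terraform an implicit dependency, so the key is created before the trail without `-target`. Trail and bucket names are placeholders, the bucket must already allow CloudTrail writes, and trails from other accounts would need their account IDs in the condition.

```hcl
# Used to build account-specific ARNs in the key policy
data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "cloudtrail_kms" {
  # Keep the account in control of the key; IAM policies can then grant kms:Decrypt to log readers
  statement {
    sid       = "EnableIAMUserPermissions"
    actions   = ["kms:*"]
    resources = ["*"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }

  # Let CloudTrail generate data keys, only for trails in this account
  statement {
    sid       = "AllowCloudTrailToEncryptLogs"
    actions   = ["kms:GenerateDataKey*"]
    resources = ["*"]

    principals {
      type        = "Service"
      identifiers = ["cloudtrail.amazonaws.com"]
    }

    condition {
      test     = "StringLike"
      variable = "kms:EncryptionContext:aws:cloudtrail:arn"
      values   = ["arn:aws:cloudtrail:*:${data.aws_caller_identity.current.account_id}:trail/*"]
    }
  }

  # Let CloudTrail read the key's metadata
  statement {
    sid       = "AllowCloudTrailToDescribeKey"
    actions   = ["kms:DescribeKey"]
    resources = ["*"]

    principals {
      type        = "Service"
      identifiers = ["cloudtrail.amazonaws.com"]
    }
  }
}

resource "aws_kms_key" "cloudtrail_kms_key" {
  description             = "KMS key used for encrypting logs."
  deletion_window_in_days = 10
  policy                  = "${data.aws_iam_policy_document.cloudtrail_kms.json}"
}

resource "aws_cloudtrail" "default" {
  name           = "example-trail"           # placeholder
  s3_bucket_name = "example-cloudtrail-logs" # placeholder; its bucket policy must allow CloudTrail

  # Referencing the key ARN makes Terraform create the key (with its policy) first
  kms_key_id = "${aws_kms_key.cloudtrail_kms_key.arn}"
}
```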

can you open a PR for that?

@Andriy Knysh (Cloud Posse) sure, for the CloudTrail Module you mean? The PR would be to ensure that the KMS Policy is added or correct when a user specifies a kms_key_id…correct?

yes please

I’ll clone and make a change, then do a PR for sure!

I am trying to create a CodeBuild project for a private Bitbucket repo using Terraform, but when the project is created, the Source section of the CodeBuild console shows the repo as a public repo, even though I configured the private repo with OAuth

#terraform has anyone faced this issue before? Or does anyone know how to create a CodeBuild project for a private repo using Terraform?

```hcl
resource "aws_iam_role" "terraform" {
  name = "terraform"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    { "Effect": "Allow", "Principal": { "Service": "codebuild.amazonaws.com" }, "Action": "sts:AssumeRole" }
  ]
}
EOF
}

resource "aws_iam_role_policy" "terraform" {
  role = "${aws_iam_role.terraform.name}"

  policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Resource": ["*"],
      "Action": ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"]
    },
    {
      "Effect": "Allow",
      "Resource": "*",
      "Action": [
        "ec2:CreateNetworkInterface", "ec2:DescribeDhcpOptions", "ec2:DescribeNetworkInterfaces",
        "ec2:DeleteNetworkInterface", "ec2:DescribeSubnets", "ec2:DescribeSecurityGroups", "ec2:DescribeVpcs"
      ]
    }
  ]
}
POLICY
}

resource "aws_codebuild_project" "terraform" {
  name          = "test-project"
  description   = "test_codebuild_project"
  build_timeout = "5"
  service_role  = "${aws_iam_role.terraform.arn}"

  artifacts {
    type = "NO_ARTIFACTS"
  }

  environment {
    compute_type = "BUILD_GENERAL1_SMALL"
    image        = "aws/codebuild/nodejs:6.3.1"
    type         = "LINUX_CONTAINER"

    environment_variable {
      name  = "SOME_KEY1"
      value = "SOME_VALUE1"
    }

    environment_variable {
      name  = "SOME_KEY2"
      value = "SOME_VALUE2"
      type  = "PARAMETER_STORE"
    }
  }

  source {
    type            = "BITBUCKET"
    location        = "https://[email protected]/rameshmimit/puppet-module-puppet.git"
    git_clone_depth = 1

    auth {
      type = "OAUTH"
    }
  }
}
```

2018-11-06

hey everyone give a warm welcome to @Nikola Velkovski! Good to have you here

Hi everyone!

hey everyone give a warm welcome to @Tee! Good to have you here

hey @Tee, welcome

Thanks

@ramesh.mimit we did not use bitbucket with CodeBuild, but here’s how we access private GitHub repos using CodePipeline/CodeBuild (by providing the OAuth token with permissions to access the private repos)

Terraform Module for CI/CD with AWS Code Pipeline and Code Build - cloudposse/terraform-aws-cicd

Terraform Module to easily leverage AWS CodeBuild for Continuous Integration - cloudposse/terraform-aws-codebuild

@Andriy Knysh (Cloud Posse) thank you for replying. I have noticed it’s not a Terraform issue; it’s a CodeBuild UI issue.

@here this is more of a question around some best practices regarding deploying central logging for multi-account AWS environment. My question is: do I deploy a single bucket for all accounts? Or a bucket per environment?

So we have, say: Security_Test and Logging_Test, plus Security_Prod and Logging_Prod.

What works best in your experience, given that all S3 bucket content needs to go into, say, Splunk anyway?

this may be wrong, so I am looking to see how you’ve done it.

that’s not a simple question @OScar, it depends on many factors: how you provision the resources for each account, and how you control security and access to the accounts. I would provision a bucket per account, since it’s easier to deploy and destroy stuff in one account w/o affecting other accounts

on the other hand, CloudTrail logs feel special (for security and audit reasons)

Agreed, this is the scenario we call multi-account/Organization logging. Which I understand many companies want to implement

so for CloudTrail logs specifically, we use a separate audit account for the bucket (which only a few designated people can access)

we provision the bucket for CloudTrail logs in that audit account

and then provision CloudTrail(s) in the other accounts and point them to the bucket in audit
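A sketch of the bucket side of that layout, written as a hand-rolled alternative to the `terraform-aws-cloudtrail-s3-bucket` module: a bucket policy in the audit account that lets CloudTrail check the bucket ACL and write under each member account's `AWSLogs/<account-id>/` prefix. The bucket name and account IDs are placeholders, and the list interpolation uses Terraform 0.11 syntax. Each member account then points its trail's `s3_bucket_name` at this bucket.

```hcl
# Placeholder inputs
variable "bucket_name" {
  default = "example-audit-cloudtrail"
}

variable "account_ids" {
  type    = "list"
  default = ["111111111111", "222222222222"] # member accounts whose trails write here
}

data "aws_iam_policy_document" "cloudtrail" {
  # CloudTrail checks the bucket ACL before delivering logs
  statement {
    sid       = "AWSCloudTrailAclCheck"
    actions   = ["s3:GetBucketAcl"]
    resources = ["arn:aws:s3:::${var.bucket_name}"]

    principals {
      type        = "Service"
      identifiers = ["cloudtrail.amazonaws.com"]
    }
  }

  # Each member account may only write under its own AWSLogs/<account-id>/ prefix
  statement {
    sid       = "AWSCloudTrailWrite"
    actions   = ["s3:PutObject"]
    resources = ["${formatlist("arn:aws:s3:::%s/AWSLogs/%s/*", var.bucket_name, var.account_ids)}"]

    principals {
      type        = "Service"
      identifiers = ["cloudtrail.amazonaws.com"]
    }

    # The bucket (audit) account must own the delivered objects
    condition {
      test     = "StringEquals"
      variable = "s3:x-amz-acl"
      values   = ["bucket-owner-full-control"]
    }
  }
}

resource "aws_s3_bucket" "cloudtrail" {
  bucket = "${var.bucket_name}"
  policy = "${data.aws_iam_policy_document.cloudtrail.json}"

  versioning {
    enabled = true
  }
}
```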

@Andriy Knysh (Cloud Posse) good stuff, so the bucket would be created literally in its own account for that sole purpose it sounds like vs. say, in an existing “master” account?

yes completely separate account

and you control who can assume roles to access that account (admin or read-only)

here we provision audit CloudTrail and bucket https://github.com/cloudposse/terraform-root-modules/blob/master/aws/audit-cloudtrail/main.tf

and here CloudTrail(s) for all other accounts pointing to the bucket in audit https://github.com/cloudposse/terraform-root-modules/blob/master/aws/cloudtrail/main.tf

and here how we control access to the account (add users to the groups) https://github.com/cloudposse/terraform-root-modules/blob/master/aws/iam/audit.tf

@Andriy Knysh (Cloud Posse) awesome, I am going to follow these steps to specifically provision the audit account and its cloudtrail and s3 bucket

hey everyone give a warm welcome to @javier.moya! Good to have you here

welcome @javier.moya!

We finally got some cloudposse stickers! PM me your mailing address if you want some

2018-11-07

Welcome!

@OScar we use a similar workflow to what @Andriy Knysh (Cloud Posse) explained; I believe it’s the “main” way to do multi-account

hey everyone give a warm welcome to @Richard Pearce! Good to have you here

@pecigonzalo thank you for confirming the approach, it is nice to know other folks agree!

@Richard Pearce welcome to our group!

thanks and greetings

I was looking at https://github.com/cloudposse/terraform-null-smtp-mail. Do you have anything similar that can send Slack messages?

Terraform module to send transactional emails via an SMTP server (e.g. mailgun) - cloudposse/terraform-null-smtp-mail

hey everyone give a warm welcome to @nian! Good to have you here

hi @Richard Pearce

for slack, I believe we don’t have anything for terraform

but we have this https://github.com/cloudposse/slack-notifier

Command line utility to send messages with attachments to Slack channels via Incoming Webhooks - cloudposse/slack-notifier

which we use in Docker containers to send Slack messages about build/deploy status from CI/CD pipelines

thank you @Andriy Knysh (Cloud Posse) I will take a look.

hey everyone give a warm welcome to @RobH! Good to have you here

here is an example of a Codefresh pipeline step that sends a Slack message when the app gets deployed to staging

```yaml
send_slack_notification:
  title: Send notification to Slack channel
  stage: Deploy
  image: cloudposse/slack-notifier
  environment:
    - "SLACK_WEBHOOK_URL=${{SLACK_WEBHOOK_URL}}"
    - SLACK_USER_NAME=CodeFresh
    - "SLACK_ICON_EMOJI=:rocket:"
    - SLACK_FALLBACK=Deployed to Staging environment
    - SLACK_COLOR=good
    - SLACK_PRETEXT=${{CF_COMMIT_MESSAGE}}
    - SLACK_AUTHOR_NAME=Auto Deploy Robot
    - SLACK_AUTHOR_LINK=https://cloudposse.com/
    - SLACK_AUTHOR_ICON=https://cloudposse.com/wp-content/uploads/sites/29/2018/02/small-cute-robot-square.png
    - SLACK_TITLE=App Updated
    - SLACK_TITLE_LINK=${{CF_BUILD_URL}}
    - "SLACK_TEXT=The latest changes have been deployed to\n :point_right: https://${{APP_HOST}}"
    - SLACK_THUMB_URL=https://cloudposse.com/wp-content/uploads/sites/29/2018/02/SquareLogo2.png
    - SLACK_FOOTER=Helm Deployment
    - SLACK_FOOTER_ICON=https://cloudposse.com/wp-content/uploads/sites/29/2018/02/kubernetes.png
    - SLACK_FIELD1_TITLE=Environment
    - SLACK_FIELD1_VALUE=Staging
    - SLACK_FIELD1_SHORT=true
    - SLACK_FIELD2_TITLE=Repository
    - SLACK_FIELD2_VALUE=${{CF_REPO_OWNER}}/${{CF_REPO_NAME}}
    - SLACK_FIELD2_SHORT=true
    - SLACK_FIELD3_TITLE=Namespace
    - SLACK_FIELD3_VALUE=${{NAMESPACE}}
    - SLACK_FIELD3_SHORT=true
    - SLACK_FIELD4_TITLE=Branch/Tag
    - SLACK_FIELD4_VALUE=${{CF_BRANCH_TAG_NORMALIZED}}
    - SLACK_FIELD4_SHORT=true
    - SLACK_FIELD5_TITLE=Release
    - SLACK_FIELD5_VALUE=App
    - SLACK_FIELD5_SHORT=true
    - SLACK_FIELD6_TITLE=Commit
    - SLACK_FIELD6_VALUE=${{CF_SHORT_REVISION}}
    - SLACK_FIELD6_SHORT=true
    - SLACK_FIELD7_TITLE=Build Time
    - SLACK_FIELD7_VALUE=<!date^${{CF_BUILD_UNIX_TIMESTAMP}}^{date_num} {time_secs}|Time format failed!!!>
    - SLACK_FIELD7_SHORT=true
    - SLACK_FIELD8_TITLE=Commit Time
    - SLACK_FIELD8_VALUE=<!date^${{GIT_TIMESTAMP}}^{date_num} {time_secs}|Time format failed!!!>
    - SLACK_FIELD8_SHORT=true
    - SLACK_FIELD9_TITLE=Trigger
    - SLACK_FIELD9_VALUE=@${{CF_BUILD_TRIGGER}}
    - SLACK_FIELD9_SHORT=true
    - SLACK_FIELD10_TITLE=Commit Author
    - SLACK_FIELD10_VALUE=@${{CF_COMMIT_AUTHOR}}
    - SLACK_FIELD10_SHORT=true
  when:
    condition:
      all:
        executeForPullRequest: "'${{CF_PULL_REQUEST_NUMBER}}' != ''"
        executeForOpenPR: "'${{CF_PULL_REQUEST_ACTION}}' != 'closed'"
```

welcome @RobH

The same pattern we use for the smtp module can be extended to the slack notifier

Since it consumes envs for everything …

We do have a Slack/SNS module, but I don’t think that’s what you are looking for

Terraform module to provision a lambda function that subscribes to SNS and notifies to Slack. - cloudposse/terraform-aws-sns-lambda-notify-slack

Hi, Since we already have a PagerDuty provider I think it would be cool to also have a Slack provider, this way we could automate some integrations like PagerDuty to Slack or other monitoring tools…

hey everyone give a warm welcome to @davidvasandani! Good to have you here

Terraform is a CRUD tool, which doesn’t really align with Slack notifications. The slack-notifier Docker solution works for me

I agree on the Slack notifications bit, but I do see the use in having a provider to manage the notification webhooks and Slack extensions…

or rather codifying all that

Have we helped you in some way? We’d love to know! If you could leave us a testimonial it would make our day.

hey everyone give a warm welcome to @mpmsimo! Good to have you here

Hey everyone!

hi @mpmsimo

Hey! Glad you joined Michael

@Andriy Knysh (Cloud Posse) is responsible for all of our extensions to Atlantis

Awesome, good to know! You guys have some awesome information out on the net about Terraform + Atlantis. Definitely looking forward to learning from you all.

@mpmsimo have you seen Erik’s presentation https://cloudposse.com/meetup/how-to-use-terraform-with-teams-using-atlantis/

GitOps is where everything, including infrastructure, is maintained in Git and controlled via a combination of Pull Requests and CI/CD pipelines. Reduce the learning curve for new devs by providing a familiar, repeatable process. Use Code Reviews to catch bugs and increase operational competency. Pr

Yes! Funnily enough, this is the article that pointed me over here. I was just giving that post and the slide deck a review earlier today.

@Erik Osterman (Cloud Posse) created a new channel #terraform-aws-modules. Join if this sounds interesting!

2018-11-09

hey everyone give a warm welcome to @g0nz0! Good to have you here

Welcome!

Hey there!

i’ve looked into Chef InSpec to write tests for Terraform, to ensure I’m creating resources correctly. Is anyone else doing testing beyond `terraform validate`, and if so, what?

Taking a slightly different approach

Goal is to make it easy for anyone to write tests without installing ruby and the kitchen sink

Using bats-core we can write simple tests that will catch the essential problems

Plan, apply, reapply test for idempotency, destroy, etc

interesting..

i’ll have to look into this too..

thanks!

Sorry at DMV

Will share example

dept. of motor vehicles?

Ya

Takes for ever in Los Angeles

if the experience is anything like my state (RI), i wouldn’t wish it on my worst enemy.

Ya same!

OMG #SweetOps is the shizzle! @here, just wanted to thank you for all the stuff you’ve put out on github. I’ve got a todo on my part to enter an issue and then actually implement it very soon on the KMS module! Thank you, just thank you for all this goodness!

thanks @OScar! on behalf of all of us here, it really means a lot to us to know we’re helping others out…

I am committed to contributing! Thanks so much @Erik Osterman (Cloud Posse)

@Erik Osterman (Cloud Posse) created a new channel #aws-reinvent. Join if this sounds interesting!

So I don’t look forward to going to DMV myself. Just had this beast shipped from FL and now registering in CA!

hey everyone give a warm welcome to @ellisera! Good to have you here

welcome @ellisera! have a look around…

thank you!

2018-11-10

• Are you hiring? Post a link to your job ad in our #jobs channel.

• Looking for work? Let everyone know by promoting what you do in the #jobs channel by sharing your LinkedIn profile and GitHub links.

• Are you a freelancer/consultant? Feel free to engage in self-promotion in the #jobs channel by sharing a link to your website and a tidbit about what you do.

2018-11-11

yo guys i keep on getting “unable to connect to helm server” with build harness

what build harness version should i be using

Hrmmm we are using the latest version with helm on Codefresh

hey, erik, weird it stopped working yesterday

i am using 0.5.5

might be a different problem

We don’t do anything funky in the container

We just install helm

On alpine

Let’s see… so can you try using kubectl?

Just to test the connection.

it breaks on make/helm/upsert

sure

what should i run?

Let’s move to #release-engineering

Actually let’s move to #codefresh

ok

@Erik Osterman (Cloud Posse) created a new channel #codefresh. Join if this sounds interesting!

Or #codefresh :-)

2018-11-12

@sarkis created a new channel #prometheus. Join if this sounds interesting!

There are no events this week

@maarten created a new channel #airship. Join if this sounds interesting!

hey everyone give a warm welcome to @Amos! Good to have you here

Hello there, I’ve got a quick question for someone about this project: https://github.com/cloudposse/terraform-aws-kops-vpc-peering

Terraform module to create a peering connection between a backing services VPC and a VPC created by Kops - cloudposse/terraform-aws-kops-vpc-peering

I’m attempting to peer a VPC in another region but get this error message

* module.cluster_to_vpn.module.vpc_peering.data.aws_vpc.requestor: data.aws_vpc.requestor: InvalidVpcID.NotFound: The vpc ID 'vpc-xxxxxx' does not exist

status code: 400

I have had success peering within my same region, but when I enter the vpc id for the remote region it breaks

welcome @Amos!

let’s move to #terraform

2018-11-13

Is AWS IAM down for everyone?

not very fast here..

aws iam get-user 0.52s user 0.22s system 59% cpu 1.252 total

I get a 505

ok now it’s ok

hey everyone give a warm welcome to @masterwill! Good to have you here

hey @masterwill! let me know if we can help

hey everyone give a warm welcome to @ay-ay-ron! Good to have you here

hey there!

hey everyone give a warm welcome to @JustPerfect! Good to have you here

2018-11-14

hey everyone give a warm welcome to @Kasun! Good to have you here

hey everyone give a warm welcome to @Jorge Rodrigues! Good to have you here

@Jorge Rodrigues @Kasun @JustPerfect @ay-ay-ron welcome!

hey everyone give a warm welcome to @nutellinoit! Good to have you here

Hi everyone!

hey @nutellinoit

hey everyone give a warm welcome to @bchain! Good to have you here

hey @bchain! let me know if we can help with anything…

hey everyone give a warm welcome to @rbadillo! Good to have you here

Hi Team, quick question

I found your tool: https://github.com/cloudposse/prometheus-to-cloudwatch

Utility for scraping Prometheus metrics from a Prometheus client endpoint and publishing them to CloudWatch - cloudposse/prometheus-to-cloudwatch

My understanding is that you tell that tool which exporter to scrape and it will send the metrics to AWS, is that correct?

yes

Utility for scraping Prometheus metrics from a Prometheus client endpoint and publishing them to CloudWatch - cloudposse/prometheus-to-cloudwatch

^ to scrape kube-state-metrics

it has some limitations though (see the open issue), which we did not address yet

let me check

I see, so let me explain my situation

let’s say that I have 1 EC2 server and I have the node_exporter running locally (on the EC2 box) on port 9100. If I run your tool locally on the same EC2 server and tell it to scrape http://localhost:9100/metrics, those metrics will end up in CloudWatch, correct?

If that’s a Prometheus endpoint, yes

It uses Prometheus client to read the metrics

by Prometheus endpoint you mean any /metrics endpoint that can be scraped by a Prometheus server?

Yep

perfect
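For reference, a "Prometheus endpoint" serves the Prometheus text exposition format, such as node_exporter's /metrics on port 9100. The sketch below is not prometheus-to-cloudwatch's code. It is a minimal Python illustration of what a scraper reads from such an endpoint before publishing anything to CloudWatch. It assumes simple sample lines and does not handle escaped quotes in label values.

```python
import re
from typing import Dict, List, Tuple
from urllib.request import urlopen

# Simple sample lines, e.g.
#   node_load1 0.42
#   node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
# An optional trailing timestamp is ignored.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_exposition(text: str) -> List[Tuple[str, Dict[str, str], float]]:
    """Turn exposition-format text into (metric name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and "# HELP" / "# TYPE" comments
        match = SAMPLE_RE.match(line)
        if match is None:
            continue
        name, label_str, value = match.groups()
        labels = dict(LABEL_RE.findall(label_str or ""))
        samples.append((name, labels, float(value)))  # float() accepts NaN and +Inf
    return samples


def scrape(url: str = "http://localhost:9100/metrics", timeout: float = 5.0):
    """Fetch and parse one endpoint (node_exporter's default port in this example)."""
    with urlopen(url, timeout=timeout) as resp:
        return parse_exposition(resp.read().decode("utf-8"))
```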

To be clear, the tool was just an experiment. The correct official way would be to write a Prometheus operator using the operator framework, which would be managed by Kubernetes and would scrape metrics and send them to CloudWatch

I understand, but this is a great start for what I was planning on writing

if you manage to resolve the issue, we accept PRs

I think I know how to fix it

I will take a look next week and let you guys know

that would be awesome! thanks

thanks @rbadillo

you’re welcome, talk to you later

hey everyone give a warm welcome to @Rok Carl! Good to have you here

hey @Rok Carl welcome!

glad to be here

2018-11-15

@here i might have asked this, but maybe it wasn’t clear. Using the Geodesic Framework, setting up CloudTrails that send logs to central Audit account S3. All good there, I have that in place. My question is; what module do i use to send other AWS events to the bucket from all of the accounts?

I am super stoked to be using this framework with a company that I hope will be a Case Study for it!

Other than CloudTrail events, it’s on a case by case basis

Let’s move to #geodesic

hey everyone give a warm welcome to @bob! Good to have you here

hey @bob! welcome

@bob you are welcome

2018-11-16

hey everyone give a warm welcome to @Jan! Good to have you here

o/

hi @Jan !

hey hey

Dutch or Afrikaans?

My Afrikaans is Braai

Dutch is better

Dutch I guess based on time

cool cool

I can also do a bit of Xhosa

when drunk

some nice ideas you have going on in your terraform

haha awesome, I can speak Dutch when drunk

and Xhosa a little

What are you working on atm ?

I was rewriting an example multi-AWS-account org, with IAM roles and STS assume-role patterns. Opinionated modules for VPC peering, logging etc.

doing a workshop with some engineers for a company I joined recently

stumbled across your sweetops

would have been amazing to have this several years back

So today im exploring doing the same setup with your modules

and then see if I can work CIS benchmark compliance into it

Myself I’m not Cloud Posse, more of a pro-active lurker, but happy to help out; most of the Cloud Posse guys show up during US working hours.

makes sense

tx

Have folks implemented https://github.com/cloudposse/root.cloudposse.co out of the box, switching out CP variables for their own? I’m hitting ordering issues, which is expected during any bootstrap, but they aren’t documented from what I can see..

Example Terraform Reference Architecture for Geodesic Module Parent (“Root” or “Identity”) Organization in AWS. - cloudposse/root.cloudposse.co

what sort of ordering issues?

Ordering of resources. i.e. account-settings > users > iam

I will be doing a similar test setup shortly

will let you know if I see the same issues

Only used in anger for an hour or so

@joshmyers I’m actually using the root, audit and stage modules on Monday for a client! Ping me here if you have questions, I’ll reciprocate

@OScar I got up and running pretty quick after poking around but wasn’t sure if I was missing some docs

Cool, one thing you might be missing: when using the CloudTrail module, if you wish to use a KMS key to encrypt the content, there is a parameter for the KeyID to pass. I am going to use it, as we need to encrypt. But I’ll know more on Monday!

My point being, not sure how the cross account kms works since the audit trail bucket is on its own account and we are using a kms key from another account

Cool, good luck for your engagement on Monday!

You can grant KMS perms for cross account, but ONLY if you don’t use the standard KMS key and instead create a custom one. IIRC you can’t change policies easily on default generated KMS keys

Thanks @joshmyers I did create a key via Terraform, I think the permissions or policy attached to that key may be the problem it sounds like.

So on the audit account bucket, we would provide said permissions?

What is the problem you are seeing?

You may need a trust policy on the key, something like:

```json
{
  "Version": "2012-10-17",
  "Id": "key-default-1",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNT_ID:root"
      },
      "Action": "kms:*",
      "Resource": "*"
    }
  ]
}
```


Note the resource is * because the policy is on the key.

Cool gonna give that a try later today, appreciate it @joshmyers

No worries, hope it helps

I’d first confirm if/what your problem is

Note that I’ve seen CloudTrail lie to me about why access to a key was denied.

To answer your question @joshmyers when I clicked and opened a log entry from an account in the audit central bucket, I would get access denied.

OK, so that would be calling a decrypt function, which needs access to the KMS key that was used to encrypt it. No need to tell it which key this is, it already knows, but probably can’t access it.

Grant CloudTrail permissions to use a CMK to encrypt logs.
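Building on the policy above, this Python sketch generates a key policy for a CMK used by trails in several accounts. It roughly follows the statements in the AWS CloudTrail KMS documentation, so check it against that page before use. The account IDs and reader ARNs are placeholders, and the exact statement set is an assumption. A reader in another account also needs an IAM policy in its own account that allows `kms:Decrypt` on the key ARN. The key policy alone is not enough for cross-account access.

```python
import json
from typing import Dict, List


def cloudtrail_key_policy(key_account: str, trail_accounts: List[str], reader_arns: List[str]) -> Dict:
    """Key policy for a CMK shared by CloudTrail trails in several accounts (sketch)."""
    trail_arn_patterns = [f"arn:aws:cloudtrail:*:{acct}:trail/*" for acct in trail_accounts]
    cloudtrail = {"Service": "cloudtrail.amazonaws.com"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # As in the policy above: IAM in the key's own account controls access.
                "Sid": "Enable IAM User Permissions",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{key_account}:root"},
                "Action": "kms:*",
                "Resource": "*",
            },
            {   # CloudTrail may create data keys, but only for trails in the listed accounts.
                "Sid": "Allow CloudTrail to encrypt logs",
                "Effect": "Allow",
                "Principal": cloudtrail,
                "Action": "kms:GenerateDataKey*",
                "Resource": "*",
                "Condition": {"StringLike": {
                    "kms:EncryptionContext:aws:cloudtrail:arn": trail_arn_patterns}},
            },
            {
                "Sid": "Allow CloudTrail to describe key",
                "Effect": "Allow",
                "Principal": cloudtrail,
                "Action": "kms:DescribeKey",
                "Resource": "*",
            },
            {   # Whoever opens the log objects needs kms:Decrypt, or reads are denied.
                "Sid": "Allow log file decryption",
                "Effect": "Allow",
                "Principal": {"AWS": reader_arns},
                "Action": "kms:Decrypt",
                "Resource": "*",
                "Condition": {"Null": {"kms:EncryptionContext:aws:cloudtrail:arn": "false"}},
            },
        ],
    }


# Placeholder account IDs, for illustration only.
print(json.dumps(cloudtrail_key_policy("111111111111", ["222222222222"],
                                       ["arn:aws:iam::333333333333:root"]), indent=2))
```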

hey everyone give a warm welcome to @mumoshu! Good to have you here

hey everyone give a warm welcome to @Shane! Good to have you here

@Erik Osterman (Cloud Posse) thanks for the invite

welcome @Shane & @mumoshu! awesome to have you guys onboard. We have an #atlantis channel where we can coordinate efforts. @Andriy Knysh (Cloud Posse) is the one on my team who worked on it.

@Erik Osterman (Cloud Posse) created a new channel #helmfile. Join if this sounds interesting!

I am considering using your Terraform CodeBuild module. I have a few questions: 1. Does it support git repos in CodeCommit? 2. Currently it creates an IAM role and policy. In our scenario we will be running CodeBuild in one AWS account, and we will actually run Terraform inside CodeBuild to provision infrastructure in other AWS accounts. So the role inside CodeBuild has to be able to assume roles in other accounts. This is why I was trying to determine if it is possible to update the policy statements for the role created by the module. Perhaps we can pass in a role ARN. I am open to any other suggestions you have for me. Thanks

i think we do exactly what you’re describing, far as using codebuild to run terraform. but we wrote our own module, rather than use cloudposse’s. we create iam policies in one module, and use the resulting policies in our ci module. here’s the ci module, https://github.com/plus3it/terraform-aws-codecommit-flow-ci

Implement an event-based CI workflow on a CodeCommit repository - plus3it/terraform-aws-codecommit-flow-ci

@Andriy Knysh (Cloud Posse) I can certainly open a PR. I reviewed the module code. My plan is to add a new parameter that passes in the ARN of an existing role. In addition, I was thinking of adding a new boolean that can be called createrole: if it is false, the role, policy and policy attachment will not be created, and the supplied existing role will be used instead. Let me know if this is acceptable.

@rajcheval we can definitely provide an external IAM policy to the module. Please open a PR if you know how to do it. If not, open an issue and we’ll get to it
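To make the CodeBuild role work across accounts, whichever role it ends up with needs an identity policy that allows `sts:AssumeRole` on the target roles. Each target role's trust policy must also list the CodeBuild role as a principal, because both sides are required. The Python sketch below only builds the first half. The role ARN in the usage line is hypothetical.

```python
import json
from typing import Dict, List


def assume_role_policy(target_role_arns: List[str]) -> Dict:
    """Identity policy letting the CodeBuild service role assume specific cross-account roles."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AssumeTargetAccountRoles",
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                # Scope to explicit role ARNs rather than "*".
                "Resource": list(target_role_arns),
            }
        ],
    }


# Hypothetical role in another account.
print(json.dumps(assume_role_policy(["arn:aws:iam::222222222222:role/terraform"]), indent=2))
```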

hey everyone give a warm welcome to @gyoza! Good to have you here

Hey John! welcome

yo

I work for blackberry now lol

(shrug)

cool! whatcha working on over there?

Well, Cylance got bought out, so we’re doing the same thing as of yesterday

just operating under blackberry

heh, i missed the news

yea its everywhere

Did you see that hashicorp absorbed atlantis?

im sure you have

yep! that was a surprise announcement. hope to see #atlantis will get more engineering resources

we’ve forked, but hoping to ultimately converge on functionality

are you guys using some flavor of atlantis?

i was planning on doing it

i had a demo working with bitbucket

but i stopped as soon as i saw it was going to be in terraform-0.12

also the pay-wallish features being released

im looking forward to that

hey everyone give a warm welcome to @dio! Good to have you here

hi @dio

Hello!

2018-11-17

hey everyone give a warm welcome to @demeringo! Good to have you here

Who is going to AWS re:Invent and wants to chat about stuff we do?

I’ll be there all week, let me know when we can meet up @antonbabenko

Also we created #aws-reinvent

I am!! Let’s meet up, grab a drink and talk shop!

2018-11-18

not this year

hey everyone give a warm welcome to @monsoon.anmol.nagpal! Good to have you here

Hi @monsoon.anmol.nagpal

Hi @monsoon.anmol.nagpal

Hello

Where would you say is the best place to get started

docs wise?

I have a 7 hour train journey tomorrow so I figure I can do a deep dive

@Jan do you want to get started with the reference architecture

We have our QuickStart guide that links to everything, but it needs a lot of work

Oh I did notice one thing just before, let me dig it up

was a dead link to local dev

There are a few changes I will explore, I need to build with CIS compliance in mind

will see how it works out

thanks for the quick-start, will take a look there

terraform is one of my strong areas so should be able to follow through most of it

I mean almost all the tool chain you are using is my strong area

Cool! I am afk today, but will be around all day tomorrow

epic

Will be here asking stuffs depending on my internet connection

2018-11-19

hey everyone give a warm welcome to @j.baldzer! Good to have you here

There are no events this week

@here I am excited this morning because I am deploying @AWS accounts using Geodesic!

Nice! How’d it go?

I am on it right now, guiding them, super stoked!

Anything missing from docs? Rough edges / ordering issues? What have you got running?

Yep i am going through the “Cold Start” for this exercise! https://docs.cloudposse.com/reference-architectures/cold-start/

I followed the Cold Start on my lab, and setup root and stage architectures

sorry I’ve been AWOL for the last few days. Drove up to Oregon for a mini work “vacay” for Thanksgiving

@Jan is doing the same right now

he found a couple of issues with the #docs

I found a few other things I wanna think through

cool - remember we’re so familiar with this stuff that getting your outside feedback is what we really need

I’m used to building VPCs with Terraform, rendering a k8s cluster.yaml template and launching into the TF-created VPC

@mcrowe banged his head against the wall for a while until we got these docs online

I did see from the docs that you are launching from a rendered cluster spec

@Jan you mean using terraform to provision the VPC and launching kops in that VPC?

exactly

I only looked at your example

gotcha, yes, there are some pros with that and i’ve seen others do it that way

our approach was slightly different, but i’d be open to supporting both methods

and I think even if it isn’t a supported case it should be easy enough to add

our approach is to let kops manage its VPC and all resources

Yea

and use terraform to manage its own VPC

then peer them

So I have in the past had vpc’s that have services running in them where I want to add k8s

I liked having the programmatic blast radius separation between k8s / infra / rds data

Geodesic is the fastest way to get up and running with a rock solid, production grade cloud platform built on top of strictly Open Source tools. https://slack.cloudposse.com/ - cloudposse/geodesic

this is the kops manifest template

I mean what happens for example if you create the vpc with kops and then after that launch other EC2 resources into said vpc

if you do a kops destroy cluster will it kill the vpc?

i believe so… but i just saw an issue raised by a customer where it was not, but haven’t looked into it

the nice aspect of the other way is that you can’t destroy a TF-controlled VPC without first destroying the k8s cluster

blast radius control

anyhow I need to walk to my colleague’s house and it’s like -3 so no more typing

haha

ok, we can discuss more later

I am also keen to explore gitlab in place of github

as we use gitlab

We have a customer using GitLab. That’s not a blocker. Personally, I’m still not a fan or sold on GitLab.

Ah brilliant, would love to know more about any changes required to use GitLab

anyone aware when Terraform is going to support the new ECS ARN formats?

I believe this will be auto supported

@ramesh.mimit just a quick update, I have enabled the new ARNs in our testing account for ECS and it seems that everything is working fine as before.

@maarten might know more about that

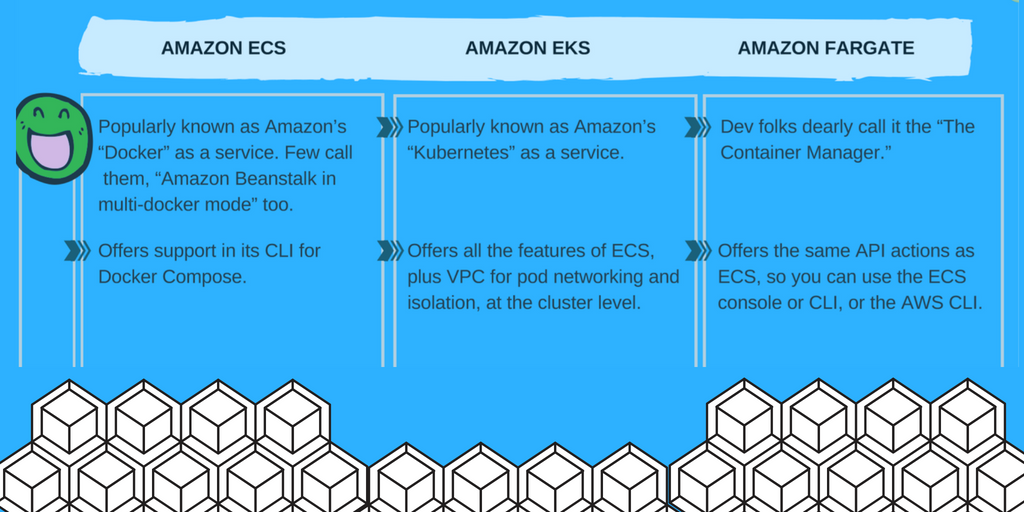

Hey People I am not sure if this is the right place to ask but does anyone have a comparison chart for fargate and ECS. I know that fargate is way more limited than ecs but I cannot seem to find anything that compares those 2 on the interwebz.

any help is appreciated

2018-11-20

“Are you a Docker person or a Kubernetes person?”

Maybe?

kinda

but thanks

I am slowly compiling the list I just need to dig through a lot of blogs, docs etc

hey everyone give a warm welcome to @zerocool.jothi! Good to have you here

@ramesh.mimit afaik, Terraform is not forcing any certain patterns on ARN’s. I’m running TF with the new format without problems. Resources affected are mostly resources Terraform doesn’t care about, container instances, task-id’s, and they mentioned services, although services don’t have an ARN afaik.

I guess it will be like when they switched the instance IDs to longer ones; Terraform will be agnostic in this case.

hey everyone give a warm welcome to @mishinev! Good to have you here

The right thing to do, when @Erik Osterman (Cloud Posse) sends you stickers.. is put them on your boss’s office window @stobiewankenobi

Oh that is one handsome dude

in the reflection? I know right

HAHAH

that was fast

i just mailed them last friday.

@here pardon my ignorance, but i want to ask something that may make sense for others, just not clear to me:

The IAM user that is created for the [root.cloudposse.co](http://root.cloudposse.co) repository, which is used to manage other accounts: can that be a federated user, since it assumes IAM roles? Or not?

so there is no specific account designated as “the account to manage other accounts”

let’s move to #geodesic

@Erik Osterman (Cloud Posse) created a new channel #packages. Join if this sounds interesting!

hey everyone give a warm welcome to @integratorz! Good to have you here

hey @integratorz! what brings you around?

@maarten thanks for the confirmation

2018-11-21

@Nikola Velkovski Fargate is just extremely expensive; there is a reason AWS only has a monthly pricing example for running Fargate tasks a few hours a day. A Fargate task with 1 vCPU and 2 GB of memory costs 54 USD/month. Leaving out EBS, that’s about the same price as a c5.large..

Thanks @maarten

That sealed the deal more or less lol

so yeah for just a few services it’s perfect, also it’s good to isolate certain services for security purposes, and you can run Fargate next to regular ECS here.
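The ~54 USD/month figure is plain per-hour arithmetic: hours × (vCPUs × vCPU price + GB × GB price). This Python sketch assumes the 2018-era us-east-1 Fargate list prices of 0.0506 USD per vCPU-hour and 0.0127 USD per GB-hour, so check the current pricing page because those rates have since changed. With those prices, one always-on 1 vCPU / 2 GB task costs about 55 USD over a 730-hour month and about 54.7 USD over 720 hours.

```python
# Assumption: 2018-era us-east-1 Fargate list prices (USD); check current pricing.
PRICE_PER_VCPU_HOUR = 0.0506
PRICE_PER_GB_HOUR = 0.0127
HOURS_PER_MONTH = 730  # ~24 * 365 / 12


def fargate_monthly_cost(vcpu: float, memory_gb: float, hours: float = HOURS_PER_MONTH) -> float:
    """Monthly cost of one always-on task: hours * (vCPU * vCPU price + GB * GB price)."""
    return hours * (vcpu * PRICE_PER_VCPU_HOUR + memory_gb * PRICE_PER_GB_HOUR)


print(f"1 vCPU / 2 GB, 730 h: {fargate_monthly_cost(1, 2):.2f} USD")
print(f"1 vCPU / 2 GB, 720 h: {fargate_monthly_cost(1, 2, hours=720):.2f} USD")
```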

Hey @Erik Osterman (Cloud Posse), just taking a look at some of the modules that Cloud Posse has for Terraform and wanted to hop into the slack channel.

awesome! feel free to ask questions in #terraform if you get stuck on anything

hey everyone give a warm welcome to @Martin! Good to have you here

@here I’m guessing folks are still working (you workaholics!). I should talk right? Just wanted to wish everyone in this amazing community a safe and happy Thanksgiving!

Thanks @OScar

Thanks @OScar !

Have a good one…

@here anyone have this issue trying to assume the role?

Enter passphrase to unlock /conf/.awsvault/keys/:

An error occurred (InvalidClientTokenId) when calling the GetCallerIdentity operation: The security token included in the request is invalid.

I am able to assume the cnc-root-admin via the web console however

Probably something wrong in the AWS config

Sec

If you want you can PM me your config assuming no secrets are in it!

I am also able to assume the role via a plain aws-vault login cnc-root-admin command and the web console opens with the assumed role

ok

Let’s move to #geodesic

2018-11-22

hey everyone give a warm welcome to @Duong! Good to have you here

hey everyone give a warm welcome to @Bogdan! Good to have you here

welcome

Hey! I’m the HUG Zurich leader and long-time user/fan of CloudPosse’s TF modules joining at @antonbabenko’s invitation

welcome to the group!

We just had a Meetup focused on TF with @antonbabenko as a guest speaker yesterday: https://www.meetup.com/Zurich-HashiCorp-User-Group/events/255859299/

Wed, Nov 21, 2018, 6:30 PM: Hi everyone!It is our pleasure to announce the launch of the Zurich HashiCorp User Group with a great selection of speakers.Agenda30 - 18 Arrival and networking18:3

hey @Bogdan welcome! Thanks for sharing the link

@antonbabenko nice presentation https://www.slideshare.net/secret/k6svGkP445g45q

Zurich HUG meetup, 21 November 2018 - https://www.meetup.com/Zurich-HashiCorp-User-Group/events/255859299/

@Andriy Knysh (Cloud Posse) thanks.

If anyone wants to see my talk at Hashicorp User Group meetup in New York city 3rd of December - Join me at Terraform Modules and Best Practices http://meetu.ps/e/G3zHX/1MhZb/a

Mon, Dec 3, 2018, 6:00 PM: Join us for the next NYC HashiCorp User Group meetup with Anton Babenko and Microsoft!Schedule00 - 6:40PM Food, beverages, socializing6:40 - 7:10PM Anton Babenko, Terrafo

2018-11-23

hey everyone give a warm welcome to @gauravg! Good to have you here

welcome

Thanks

I need help with https://github.com/cloudposse/terraform-datadog-monitor/tree/master/modules/cpu, who can help me?

Terraform module to provision Standard System Monitors (cpu, memory, swap, io, etc) in Datadog - cloudposse/terraform-datadog-monitor

I think most of the folks are not around, given that it’s the Thanksgiving holidays

I could try though

yeah

whats up?

I am getting this error Error: resource 'datadog_monitor.cpu_usage' config: unknown module referenced: label

im also on a high speed train crossing Germany right now so my internet is not super reliable

good luck

```hcl
module "datadog_cpu_usage_us_east_1" {
  source     = "./module/cpu"
  namespace  = "test"
  stage      = "dev"
  name       = "els1"
  attributes = "us-east-1"
  delimiter  = "-"
  tags       = "ci"
}

provider "datadog" {
  api_key = "${var.datadog_api_key}"
  app_key = "${var.datadog_app_key}"
}

variable datadog_api_key {}

variable datadog_app_key {}
```

This is my main.tf in root ^

let me see

```hcl
module "label" {
  source     = "git::https://github.com/cloudposse/terraform-null-label.git?ref=tags/0.3.1"
  namespace  = "${var.namespace}"
  stage      = "${var.stage}"
  name       = "${var.name}"
  attributes = "${var.attributes}"
  delimiter  = "${var.delimiter}"
  tags       = "${var.tags}"
}
```

Terraform module to provision Standard System Monitors (cpu, memory, swap, io, etc) in Datadog - cloudposse/terraform-datadog-monitor
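For context, the `label` module turns these inputs into a single resource ID. Assuming it mirrors terraform-null-label 0.3.x, it drops empty parts, joins the rest with the delimiter and lowercases the result. This Python sketch shows that rule. It is an illustration, not the module's code.

```python
from typing import List


def label_id(namespace: str, stage: str, name: str, attributes: List[str], delimiter: str = "-") -> str:
    """Assumed null-label 0.3.x rule: drop empty parts, join with delimiter, lowercase."""
    parts = [namespace, stage, name, *attributes]
    return delimiter.join(p for p in parts if p).lower()


print(label_id("test", "dev", "els1", ["us-east-1"]))
```

With the inputs from the `main.tf` above, this yields `test-dev-els1-us-east-1`.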

did you do a terraform init

or terraform get -update

yes it’s giving error on same

mm

the difference is in source. Instead of using the GitHub URL, I am using my local copy, which has the same cpu module

for sure

do you have a local copy of the `git::https://github.com/cloudposse/terraform-null-label.git?ref=tags/0.3.1` module?

oh yeah, got it now. Somehow that copy was in a different location

Let me try to move it and then try

it works

Thanks Jan

brilliant

Now let me see why the monitor was not created

it’s one of those things with Terraform: if you are using local, relative-path modules, you gotta be careful to validate the paths
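One way to validate those paths is a small pre-flight check before running `terraform init`. This Python sketch is a hypothetical helper, not part of Terraform. It scans `.tf` files for local `source = "./..."` or `"../..."` entries and reports any that do not point to an existing directory relative to the calling file. It skips `.terraform/` copies and uses a simple regex rather than a real HCL parser.

```python
import re
from pathlib import Path
from typing import List, Tuple

# Matches local module sources such as  source = "./module/cpu"  or  source = "../label"
LOCAL_SOURCE = re.compile(r'^\s*source\s*=\s*"(\.\.?/[^"]*)"', re.MULTILINE)


def missing_local_modules(root: str) -> List[Tuple[str, str]]:
    """Return (tf_file, source) pairs whose relative module path is not an existing directory."""
    missing = []
    for tf_file in sorted(Path(root).rglob("*.tf")):
        if ".terraform" in tf_file.parts:
            continue  # skip module copies downloaded by `terraform init`
        for source in LOCAL_SOURCE.findall(tf_file.read_text()):
            # Relative sources resolve against the directory of the calling module.
            if not (tf_file.parent / source).is_dir():
                missing.append((str(tf_file), source))
    return missing
```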

@gauravg did you go through the cold-start?

for geodesic

nope

dang

oki

@Jan I can give you some tips on provisioning AWS accounts, if you’re ready

The docs were written when we provisioned accounts manually in the AWS console

Yea im ready

I suspected that might be the case

Collection of Terraform root module invocations for provisioning reference architectures - cloudposse/terraform-root-modules

just because of the env calls

so, in short, are you able to login to the root account from geodesic?

root means the master Org account

let’s switch to #geodesic

kk

BaaS helps you achieve your goals, making sure that you follow through on what you plan.

funny ^

hey everyone give a warm welcome to @ManojH! Good to have you here

hey everyone give a warm welcome to @Bryan! Good to have you here

2018-11-25

hey everyone give a warm welcome to @boz! Good to have you here

2018-11-26

There are no events this week

will be nice if AWS adds MongoDB and Kafka as managed services https://seekingalpha.com/news/3410999-aws-develops-new-services-amid-open-source-pushback

Amazon (AMZN -4.3%) continues to use open-source software to build out AWS despite tensions with the open-source community, according to The Information.AWS is reportedly developing two new cloud serv

Lol, it’s nice when my peers organically stumble on cloudposse projects: https://github.com/cloudposse/prometheus-to-cloudwatch in particular

whoot! yea, that’s been a popular one

@Andriy Knysh (Cloud Posse) worked on that

hey everyone give a warm welcome to @Ben Hecht! Good to have you here

2018-11-27

hey everyone give a warm welcome to @jerry! Good to have you here

hey @jerry! glad you stopped by

Thanks Erik. Couldn’t resist. Thank you and the team for all the great content you have up there.

i wish i could open source the stuff i work on

i would get mad internet points

@gyoza Interested now…

i built an autohealing federated prometheus cluster thing using consul-template, consul, and some python..

sounds awesome!

we haven’t had the opportunity to explore this yet - would love to see this for kubernetes

so if the master goes down and a new one comes up it will resync, and the child nodes, if one of them dies its fine

a new one will come up and resync

as long as they all dont die at once

should be ok:P

not using LTS

any of you guys using thanos?

Exploring it

yea, i like it for the LTS stuff

RONCO style

hey everyone give a warm welcome to @samhagan! Good to have you here

hey everyone give a warm welcome to @john294! Good to have you here

Hello!

hey hey

Hey john! Glad you signed up

hey everyone give a warm welcome to @chialun! Good to have you here

2018-11-28

I really have to say this is the most active DevOps Slack team I’m a part of. Way to go!

thanks @davidvasandani!

hey i have a general question.. i’ve just configured my bastion host to automatically look at aws iam users, sync them, and copy their ssh public keys. so now i can ssh into bastion. great.

my previous method was sshing in using a shared key, then using a key on bastion to ssh into the private EB instances.

question: now that my users are sshing into bastion, and there are now like 10 users, how do I seamlessly ssh to the private instances without copying the key to all their .ssh folders?

extra note: i wrote a gem that replicates Heroku CLI functionality, so it’s all automated: `$ mygem ssh --app myappname --env myenvstage` automatically sshes through the bastion to one of the app instances. So internally I now have to change it to use `ssh <iamuser>@privatehost` instead of `ec2-user@privatehost`, and I’m just trying to figure this out. any suggestions?

how do I seamlessly ssh to the private instances without copying the key to all their .ssh folders

- The Heroku-style app can instruct ssh (via the `-F configfile` flag, see SSH(1)) to use a custom config file that enables `ForwardAgent yes` and `User <iamuser>`; users then use `ssh-agent` and agent forwarding to access private hosts.
- Also add `<iamuser>` accounts on all private hosts, so you can continue to track/attribute/remove access/usage.

thanks

key being, how can i automate this, maybe via a single line command.. instead of telling everyone to change their ssh_config
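A minimal sketch of the `-F configfile` suggestion, assuming the gem can shell out to `ssh`. It is written in Python for illustration, and all names are hypothetical. The tool writes a throwaway config for one environment and then runs `ssh -F <file> <host>`, so nobody edits their `~/.ssh/config`. Each environment gets its own generated file, so overlapping ranges like 10.1.* behind different bastions do not collide. With `ProxyCommand ssh -W %h:%p`, the session to the private host is authenticated from the user's own machine through the bastion, so no key has to be copied onto the bastion. The private hosts still need the IAM user and their public key.

```python
import os
import tempfile


def render_ssh_config(iam_user: str, bastion: str, private_pattern: str) -> str:
    """Build a throwaway ssh_config for one environment (one bastion, one private range)."""
    return "\n".join([
        f"Host {private_pattern}",
        f"    User {iam_user}",
        # The inner ssh logs into the bastion; -W pipes the connection on to host:port,
        # so authentication to the private host happens from the user's own machine.
        f"    ProxyCommand ssh -W %h:%p {iam_user}@{bastion}",
        "",
    ])


def ssh_argv(iam_user: str, bastion: str, private_pattern: str, target_host: str) -> list:
    """Write the config to a temp file (mode 0600) and return argv for `ssh -F <file> <host>`."""
    fd, path = tempfile.mkstemp(prefix="ssh-config-", suffix=".conf")
    with os.fdopen(fd, "w") as fh:
        fh.write(render_ssh_config(iam_user, bastion, private_pattern))
    return ["ssh", "-F", path, target_host]
```

The tool would then call something like `subprocess.run(ssh_argv("alice", "bastion.staging.example.com", "10.1.*", "10.1.2.3"))`, where the user, bastion and host are made-up examples.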

hey everyone give a warm welcome to @adamhkaplan! Good to have you here

@i5okie Try adding this into your .ssh/config and then directly ssh into the ec2 instance.

```
Host bastionhost
    ForwardAgent yes

# fix your range here
Host 10.*
    ProxyCommand ssh user@Bastionhost -W %h:%p
```

im trying to do it without that

welcome @adamhkaplan

@i5okie could your gem template some SSH config? A bit yuk.

ssh -t ec2-user@bastion ssh ec2-user@ec2node

I think it is generally acceptable for folks to have to add some SSH config in order to access things, and preferably not as a shared user.

yeah i just got this going on my bastion: https://github.com/widdix/aws-ec2-ssh

Manage AWS EC2 SSH access with IAM. Contribute to widdix/aws-ec2-ssh development by creating an account on GitHub.

pretty neat

a bit like https://github.com/kislyuk/keymaker

Lightweight SSH key management on AWS EC2. Contribute to kislyuk/keymaker development by creating an account on GitHub.

now the last leg… im trying to avoid modifying ssh_config.. in theory i could use sshkit and dynamically change ssh_config on the fly. but “yuk” is perfect word here

What is wrong with having users add some SSH config? I think this is pretty standard practice in onboarding docs

we have multiple aws accounts, multiple vpcs…

that sshconfig is going to look like a book

bigger hurdle is, there might be some vpc cidr overlapping

might be easier to script the code that syncs users, to also copy the ssh key into their home folders..

SSH config does wildcard matching which works nicely with CNAMEs

josh, one of the accounts has vpcs that overlap the other account’s ip address. different bastion hosts for similar address space

10.1.0.0 10.1.0.0 for example

Attach an EIP to each bastion and CNAME to it, bastion.production.bla, bastion.staging.bla ?

but ssh config starts with remote host.. if that wildcard matches, then it picks which bastion host.. right

so bastion host ip doesn’t matter. its the private instance ip address

i guess another option is to sftp the key to bastion, right before they ssh through it

or scp

stupid elastic beanstalk.

@Erik Osterman (Cloud Posse) created a new channel #lax. Join if this sounds interesting!

keymaker has been useful

my private hosts are all elastic beanstalk hosts.

with instance refresh, etc etc. so i need to get that automated somehow.

I’ve just added this copy_ssh_key function to the script i’ve been using: https://github.com/i5okie/aws-ec2-ssh/blob/master/import_users.sh#L161-L180

Manage AWS EC2 SSH access with IAM. Contribute to i5okie/aws-ec2-ssh development by creating an account on GitHub.

@i5okie have you seen our github-authorized-keys service?

nope

the idea here is that you use AuthorizedKeysCommand (or something like that) to avoid needing to provision keys

it can also provision users too

yeah the aws-ec2-ssh script does that by getting the ssh public key associated with your iam user
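For illustration, an `AuthorizedKeysCommand` backed by IAM boils down to "list the user's active SSH keys and print them". This Python sketch uses the boto3 IAM calls `list_ssh_public_keys` and `get_ssh_public_key`. It is not the code of aws-ec2-ssh, keymaker or github-authorized-keys. The script path in the comments is hypothetical, and it assumes IAM user names match the Linux user names. Because `sshd` takes a single `AuthorizedKeysCommand`, on Amazon Linux 2 this would replace `/opt/aws/bin/curl_authorized_keys`, as discussed below.

```python
import sys
from typing import List, Optional


def iam_public_keys(username: str, iam) -> List[str]:
    """Return the active SSH public keys uploaded to IAM user `username`.

    `iam` is a boto3 IAM client (or any object with the same two methods).
    Pagination is ignored: IAM users normally have only a handful of SSH keys.
    """
    keys = []
    for meta in iam.list_ssh_public_keys(UserName=username)["SSHPublicKeys"]:
        if meta["Status"] != "Active":
            continue
        resp = iam.get_ssh_public_key(
            UserName=username, SSHPublicKeyId=meta["SSHPublicKeyId"], Encoding="SSH"
        )
        keys.append(resp["SSHPublicKey"]["SSHPublicKeyBody"])
    return keys


def main(argv: Optional[List[str]] = None) -> int:
    argv = sys.argv if argv is None else argv
    import boto3  # imported lazily so iam_public_keys can be tested without AWS access

    for key in iam_public_keys(argv[1], boto3.client("iam")):
        print(key)
    return 0

# sshd_config (hypothetical script path):
#   AuthorizedKeysCommand /usr/local/bin/iam-authorized-keys %u
#   AuthorizedKeysCommandUser nobody
# As a standalone script, end the file with:
#   if __name__ == "__main__": sys.exit(main())
```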

i think the bastion container implements support for that.

ah right - forgot you’re using aws iam (which is nice)

if we did it all over again, i would implement it slightly differently

i would use pam.d to automatically query IAM on user connection

which would then provision the user (if permitted) and then add their key

this is possible with pam_exec

hmm sounds like keymaker and aws-ec2-ssh are very similar. next question is, does it work with Amazon Linux 2 ami? because they’ve taken over the AuthorizedKeysCommand for use with ssm

Yeah, it’s pretty odd text in the release notes:

“to support an upcoming feature to read SSH public keys”

https://aws.amazon.com/amazon-linux-2/release-notes/

Amazon Linux 2 - 10/31/2018 Update

OpenSSH daemon configuration file /etc/ssh/sshd_config updates

The OpenSSH daemon configuration file /etc/ssh/sshd_config has been updated. The AuthorizedKeysCommand value is configured to point to a customized script, /opt/aws/bin/curl_authorized_keys, to support an upcoming feature to read SSH public keys from the EC2 instance metadata during the SSH connection process.

and broken functionality of all these scripts that use that command lol

haha

i didn’t know that

i don’t like how they are forcing people to use ssm to connect to instances.

if it was a one-line command, that’d be nice. otherwise its kinda like meh

ooh

“and home directory sharing (via optional EFS integration)” this is very interesting re: keymaker

hey everyone give a warm welcome to @Stephen! Good to have you here

I set up https://www.linkedin.com/groups/13649396/ if LinkedIn is your thang and you want a badge on your profile

hey everyone give a warm welcome to @rohit! Good to have you here

hey @rohit!

Hi

I have a question related to cloudposse/terraform-null-label

sure, let’s move to #terraform

Just curious, how did you generate the image used here https://docs.cloudposse.com/helm-charts/

i see a lot of people generating something like this

oh haha, that was actually just a screenshot from one of our slide decks

wish I had a fancier answer

but a lot of people are generating something like this

so i was wondering how people do it

i literally googled every one of those projects to find a nice transparent logo and placed them

so i’ve seen various implementations

some use favicons

gotcha

i thought there was some tool to generate something like this

Easily embed any company’s logo in your project with this simple and free API from Clearbit

yea, something to generate a montage
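A minimal Python sketch of a logo montage generator: it emits an HTML table of logos, several per row. It assumes the `https://logo.clearbit.com/<domain>` URL format Clearbit's Logo API used at the time, which may have changed since; the function name and defaults are hypothetical.

```python
from html import escape

# Assumed endpoint format for Clearbit's Logo API; verify before relying on it.
LOGO_URL = "https://logo.clearbit.com/{domain}"


def logo_montage_html(domains, size=64, columns=4):
    """Build a simple HTML table of logos, `columns` logos per row."""
    rows = []
    for start in range(0, len(domains), columns):
        cells = "".join(
            '<td><img src="{src}" alt="{alt}" width="{s}" height="{s}"></td>'.format(
                src=escape(LOGO_URL.format(domain=d)), alt=escape(d), s=size
            )
            for d in domains[start:start + columns]
        )
        rows.append("<tr>" + cells + "</tr>")
    return "<table>" + "".join(rows) + "</table>"
```

As noted a few messages later, lookups by domain can return the wrong logo, so a hand-picked image per project is still the safer option.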

if you find one, lmk!!

will do

@Erik Osterman (Cloud Posse) what did you use clearbit for?

oh just googling logo api

never used it

when i searched for Cloud Posse your pic showed up

oh, haha

yea, i think their algo is weak

i tried another one of these services and it returned one of our customer’s logo for [cloudposse.com](http://cloudposse.com)

i guess they pulled it from our logo slider on the homepage

alas, google FTW

yeah their algo looks weak

2018-11-29

hey everyone give a warm welcome to @pigglesticks! Good to have you here

welcome @pigglesticks

Thanks @Andriy Knysh (Cloud Posse)

I think I might be in the wrong timezone over here

what timezone are you in?

I am based in Cape Town

UTC+2

oh nice, I have a friend here in Florida, he is from Cape Town originally

I’m from Cape Town

Live in Germany now

I am originally from the UK, but I’ve lived in SA for 8 years now

I’ve fallen for the lifestyle

How long in Germany Jan?

2 years

Almost

@pigglesticks Best out of the UK

Theresa May’s dancing is enough to scare anyone away for good

hahaha

@Kenny Inggs is also in South Africa - Cape Town if I am not mistaken

@Erik Osterman (Cloud Posse) created a new channel #ansible. Join if this sounds interesting!

2018-11-30

hey everyone give a warm welcome to @chhed13! Good to have you here

Hi everybody!

hey Andrey, where are you from?

From Russia. But currently I am on re:Invent

We’ve met once there with @antonbabenko

Hi @chhed13 ! Great to have you here, enjoy your trip further :)

Sorry we didn’t meet last night, it was difficult to get around!

Yeah, it was. Which city are you based in? See you at other events around the world

hey everyone give a warm welcome to @Valdemir! Good to have you here

hey @chhed13! what’d you think of #aws-reinvent this year?

It’s too crowded for me, worse than 2017. I am overwhelmed

yea, i went last year and had the same experience. decided to skip it this time around. then saw they were adding a lot more repeat talks and had some FOMO.

@Erik Osterman (Cloud Posse) created a new channel #packer. Join if this sounds interesting!

Anyone know of a module or something that can help me get all CloudWatch logs from several accounts to the audit account?

you mean the new capability that was just announced?

(forget what they called it)

Organizational cloudtrail

I think the PR to add the functionality is merged, but it’s probably waiting for a release

It needs to be run in the org’s master account

Can’t you do that already? Just create the bucket in the audit account, and point the trail in each account at that bucket? At least, that’s how we’re doing it, and no limit on the org boundary, so still works if you need multiple payer accounts for compliance reasons…
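The per-account approach described here (one bucket in the audit account, every account's trail pointed at it) needs a bucket policy that lets CloudTrail write each account's prefix. A minimal Python sketch that builds a policy in the shape of AWS's documented multi-account CloudTrail bucket policy; the bucket name, account IDs and optional key prefix are placeholders.

```python
import json


def cloudtrail_bucket_policy(bucket, account_ids, prefix=""):
    """S3 bucket policy letting CloudTrail in each listed account deliver logs.

    One ACL-check statement on the bucket itself, plus one PutObject statement
    scoped to <prefix>/AWSLogs/<account-id>/* for every account.
    """
    base = "arn:aws:s3:::" + bucket
    trimmed = prefix.strip("/")
    key_prefix = trimmed + "/" if trimmed else ""
    principal = {"Service": "cloudtrail.amazonaws.com"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:GetBucketAcl",
                "Resource": base,
            },
            {
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:PutObject",
                "Resource": [f"{base}/{key_prefix}AWSLogs/{acct}/*" for acct in account_ids],
                # The bucket (audit) account must end up owning the delivered objects.
                "Condition": {"StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(cloudtrail_bucket_policy("audit-trail-logs", ["111111111111", "222222222222"]), indent=2))
```

Adding an account means adding one ID to the list; each account's trail config stays under that account's own Terraform.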

That is you managing an individual trail per account and telling them all to log into a single bucket

Is this implementation different? Or is it just creating the trail for you, from the master account?

With organizational trails, you create a new trail in the master account with the added Boolean IsOrganizationTrail, and it will create and manage that trail in all underling accounts of the org. The master’s config is applied to all of them
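For comparison, the organization-trail route is a single call from the master account. A minimal Python sketch using boto3's CloudTrail `create_trail` and `start_logging`; the trail and bucket names are placeholders, and AWS documents further prerequisites (such as enabling trusted access for CloudTrail in AWS Organizations) that are not shown.

```python
def organization_trail_request(name, bucket, multi_region=True):
    """Keyword arguments for CloudTrail create_trail, run from the org master account."""
    return {
        "Name": name,
        "S3BucketName": bucket,
        "IsMultiRegionTrail": multi_region,
        "IsOrganizationTrail": True,  # apply this trail to every account in the organization
    }


def create_organization_trail(cloudtrail, name, bucket):
    """`cloudtrail` is a boto3 CloudTrail client using master-account credentials."""
    resp = cloudtrail.create_trail(**organization_trail_request(name, bucket))
    # A newly created trail does not record events until logging is started.
    cloudtrail.start_logging(Name=resp["TrailARN"])
    return resp["TrailARN"]
```

Usage would be `create_organization_trail(boto3.client("cloudtrail"), "org-trail", "audit-trail-logs")`; member accounts see the trail but cannot stop or modify it, which is the check-and-balance argued for below.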

Meh. If it’s just creating the trail, I like our approach better. Rather have terraform manage the config for each trail

Hmm, yeah, think I’d rather less code and a single account to manage. There are other benefits like an underling account can’t turn it off or update the trail, which is an attack vector if an account is compromised

exactly, this is what I like about it. check & balances.

Yeah, we already manage these compliance related resources in child accounts, including the IAM role assignment, so no chance of that

Thank goodness for the permission boundary feature… Makes it possible to delegate IAM permissions to their roles without escalation risk
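A minimal sketch of the delegation pattern mentioned here: an IAM policy (built as a Python dict) that lets a team create and manage roles only when those roles carry a specific permissions boundary, and stops them removing or editing that boundary. The ARNs are placeholders and this is not a complete least-privilege policy.

```python
def delegated_role_admin_policy(boundary_arn, role_arn_pattern):
    """IAM policy letting a team manage roles only if they carry `boundary_arn`.

    A role's effective permissions are capped by its boundary, so granting
    these IAM actions does not let the team escalate past the boundary.
    """
    boundary_condition = {"StringEquals": {"iam:PermissionsBoundary": boundary_arn}}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ManageRolesOnlyWithBoundary",
                "Effect": "Allow",
                "Action": [
                    "iam:CreateRole",
                    "iam:PutRolePermissionsBoundary",
                    "iam:PutRolePolicy",
                    "iam:AttachRolePolicy",
                ],
                "Resource": role_arn_pattern,
                "Condition": boundary_condition,
            },
            {
                "Sid": "NoBoundaryRemoval",
                "Effect": "Deny",
                "Action": "iam:DeleteRolePermissionsBoundary",
                "Resource": "*",
            },
            {
                "Sid": "NoBoundaryEdits",
                "Effect": "Deny",
                "Action": [
                    "iam:CreatePolicyVersion",
                    "iam:DeletePolicy",
                    "iam:DeletePolicyVersion",
                    "iam:SetDefaultPolicyVersion",
                ],
                "Resource": boundary_arn,
            },
        ],
    }
```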

What if your production account IAM creds were compromised? Or someone managed to impersonate a service that has cloudtrail access?

So you’ve pwned an AWS account — congratulations — now what? You’re eager to get to the data theft, amirite? What about that whole cyber…

I don’t see how this feature changes that risk, you still have something, somewhere with permissions to these things, and it’s still centrally controlled

I generally want to setup alerts if anyone messes with CT

Because it is in one place that can be more locked down than each underling account

Hook that up to SNS
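The alert-on-CloudTrail-changes idea can be sketched as a CloudWatch Events rule with an SNS target. A minimal Python sketch: the list of event names, rule name and target Id are assumptions, `matches` is a tiny local checker (not the full event-pattern semantics), and the SNS topic's access policy must also allow `events.amazonaws.com` to publish, which is not shown.

```python
import json

# Event pattern for CloudTrail API calls that could silence or weaken a trail.
TAMPERING_PATTERN = {
    "source": ["aws.cloudtrail"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["cloudtrail.amazonaws.com"],
        "eventName": ["StopLogging", "DeleteTrail", "UpdateTrail", "PutEventSelectors"],
    },
}


def matches(pattern, event):
    """Exact-value matching only, a small subset of real event-pattern
    semantics; useful for sanity-checking a pattern against sample events."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def install_alert(events, topic_arn, rule_name="cloudtrail-tampering"):
    """Create the rule with a boto3 CloudWatch Events client and target an SNS topic."""
    events.put_rule(Name=rule_name, EventPattern=json.dumps(TAMPERING_PATTERN), State="ENABLED")
    events.put_targets(Rule=rule_name, Targets=[{"Id": "sns-alert", "Arn": topic_arn}])
```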

Eh, I don’t really see any difference. Once you have the module written, it’s just a matter of being able to implement it, and if you have multiple payer accounts then you need to be able to implement multiple times anyway

The org-centric features are certainly nice for those who do things manually through the console, though

I agree that where Terraform is concerned, sometimes it is just easier to manage a thing in each account rather than turn it on in one and have magic behind the scenes treat the others differently.

@joshmyers I was chatting with @Erik Osterman (Cloud Posse): AWS Control Tower is going to be interesting to see in action. I feel it does a lot of what the Cloud Posse framework tackles

Looks pretty nice. Hopefully it’s not all magic behind the scenes and the APIs are exposed (can’t see API docs for it)

Yea, hopefully it can make some things easier.

I just got off the phone with @Jan and he gave me some AWESOME ideas for how to simplify the geodesic coldstart process that I can’t wait to implement

I’m not a fan of magic, either, but it might be nice for Control Tower to be a bit more magic than implied by this pic…

That’s actually the landing zone, but the two teams are working closely

Oooohh

i added a bunch more emoji. let me know what i’m missing!

Table flip is missing for sure