#atmos (2023-10)

2023-10-01

anybody already got some renovatebot config to perform component updates?

It’s not practical. Since you also need to vendor in the component, it’s not as simple as bumping the version in component.yaml

the vendor pull can be done via a github action

Also, the other problem we had with it is that every single component.yaml is then updated when a new release is made, even if no tf files were affected

That should not be much of a problem when the grouping functionality can be used. For the human doing a visual check of the version in component.yaml against the repo version, this would even be better (less explaining to do as to why a lower version != behind)

I welcome you to try the experiment. I remain skeptical, though different teams may have different preferences.

@Erik Osterman (Cloud Posse) as i see it now, the component updater also updates a component if it is not affected by the repo version bump

I can explain on next office hours how it works. We are also working on a better way to distribute individually versioned components as proper artifacts, that will replace the current method

TL;DR, the way it’s working right now: it’s not about whether the component changed in the commit of that version; it’s relative to your local copy. If your local copy differs from the component in that version, it will be updated. Without using tags for each component in the monorepo, there would be no efficient way to do it. Hence we are moving to a registry model using OCI.
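
The "relative to your local copy" comparison can be pictured with a small shell sketch. This only illustrates the idea and is not the updater's actual implementation: the function name `component_needs_update`, the two directory arguments, and the choice to ignore `component.yaml` are all assumptions, and `diff -rq -x` assumes GNU or BSD diff.

```shell
# Hypothetical helper: succeeds (exit 0) when the local component directory
# differs from the upstream copy of the same component at a given version.
component_needs_update() {
  local_dir=$1
  upstream_dir=$2
  # -r compares the trees recursively, -q only reports whether files differ,
  # -x skips component.yaml, which (by assumption) exists only locally.
  # diff exits 0 when the trees match; any other status (1 = differences,
  # 2 = trouble such as a missing directory) is treated as "needs update".
  if diff -rq -x component.yaml "$local_dir" "$upstream_dir" >/dev/null 2>&1; then
    return 1
  fi
  return 0
}
```

Under this model a release that did not touch a component's files still triggers an update if the local copy had drifted from upstream, which matches the behaviour described above.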

and is there a way to suppress the output of the init (not skipping the init), e.g. when doing an atmos terraform show -json comp -s stack ?

if you try setting log level to a higher setting like Warning does that help accomplish what you wanted?

Use the atmos.yaml configuration file to control the behavior of the atmos CLI.

Given “Atmos will not log any messages (note that this does not prevent other tools like Terraform from logging).” I doubt that will be the case, but I’ll try

2023-10-02

Is there some built-in way to automatically wrap the atmos command with ATMOS_BASE_PATH ATMOS_CLI_CONFIG_PATH and setting it to PWD in order to use remote module states when working in the current dir, without using geodesic or moving the atmos file? I’m thinking makefile probably would be able to do this, but just wondering if there’s any other solutions

you can export the ENV vars in .bashrc (or similar file in other shells)

this is similar to what geodesic does

I use this in my .envrc in the root of my repo with direnv to auto load this

export ATMOS_CLI_CONFIG_PATH=$(git rev-parse --show-toplevel)

export ATMOS_BASE_PATH=$(git rev-parse --show-toplevel)
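
If exporting the variables for the whole session is not wanted, a per-invocation wrapper is another option. A minimal sketch, assuming `atmos.yaml` sits in the current directory; the function name `atmos_here` is made up for illustration and is not part of Atmos.

```shell
# Hypothetical wrapper: run atmos with both paths pointed at the current
# directory for this one invocation only. With the real atmos binary the
# variables are set just for that process and are not exported to the session.
atmos_here() {
  ATMOS_BASE_PATH="$PWD" ATMOS_CLI_CONFIG_PATH="$PWD" atmos "$@"
}
```

It would be called like the normal CLI, for example `atmos_here terraform plan vpc -s my-stack` (component and stack names here are placeholders).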

Are there any minimal examples of an atmos driven repo? As awesome as atmos/examples/complete is it’s a lot when first approaching something new. What would be the absolute minimal repo which deploys a single component in a single stack?

atmos/examples/complete is actually to test ALL Atmos functionality (and also to test bad/wrong configs, not only the correct ones), we agree it’s a bad name for the folder. We are going to create another folder with a minimal setup to configure a few Atmos components and stacks, and make it easy to understand and provision

this is already described in https://atmos.tools/category/quick-start (but w/o actually having the correct config itself, which we are going to add)

Take 20 minutes to learn the most important atmos concepts.

2023-10-03

Hey guys, I’m following atmos tutorial here and currently stuck on atmos vendor pull --component infra/vpc, keep getting:

No stack config files found in the provided paths:

- /<omitted>/atmos-poc/stacks/orgs/**/*

Check if `base_path`, 'stacks.base_path', 'stacks.included_paths' and 'stacks.excluded_paths' are correctly set in CLI config files or ENV vars.

My structure is:

.
├── atmos.yaml
├── components
│   └── terraform
│       └── infra
│           ├── vpc
│           │   └── component.yaml
│           └── vpc-flow-logs-bucket
│               └── component.yaml
└── stacks
    └── orgs
        └── cp
            ├── _defaults.yaml
            └── tenant1
                ├── _defaults.yaml
                └── dev
                    └── _defaults.yaml

My atmos.yaml:

base_path: "."

components:
  terraform:
    base_path: "components/terraform"
    apply_auto_approve: false
    deploy_run_init: true
    init_run_reconfigure: true
    auto_generate_backend_file: false

stacks:
  base_path: "stacks"
  included_paths:
    - "orgs/**/*"
  excluded_paths:
    - "**/_defaults.yaml"
  name_pattern: "{tenant}-{environment}-{stage}"

workflows:
  base_path: "stacks/workflows"

logs:
  file: "/dev/stdout"
  level: Info

How do I troubleshoot exactly where is it failing?

atmos version: 1.45.3

apparently vendor pull does not work if the component is not referenced anywhere in the stack..

touch stacks/orgs/cp/tenant1/dev/my-stack.yaml will get rid of that error

you need at least one .yaml file in orgs/ that is not _defaults.yaml
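
A quick way to check for this cold-start condition before running Atmos is sketched below. It mirrors the `included_paths` / `excluded_paths` from the atmos.yaml above in a simplified form (only `.yaml` files, only the `_defaults.yaml` exclusion); the function name `has_stack_manifests` is hypothetical.

```shell
# Hypothetical pre-flight check: succeeds when the given directory contains
# at least one .yaml file that is not named _defaults.yaml.
has_stack_manifests() {
  stacks_dir=$1
  # find prints every matching file; grep -q . succeeds if anything was printed.
  find "$stacks_dir" -type f -name '*.yaml' ! -name '_defaults.yaml' | grep -q .
}
```

For the layout above, `has_stack_manifests stacks/orgs` would fail until a file such as stacks/orgs/cp/tenant1/dev/my-stack.yaml exists.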

thanks!

good luck

@jonjitsu thanks. Yes, this is an issue with cold start, we’ll fix it in Atmos (to better describe the error to the user), and in the docs

There is a lot of information related to the usage and way of thinking when building with atmos. The components also provide a nice way to better understand the opinionated concepts related to separating and deploying/destroying parts that relate to each other. However, one important aspect I have failed to discover yet is how to handle EKS service roles for the application services you are building. These service roles will all have some kind of dependence on other components of the infrastructure. What’s the best practice for setting up something like that? Do you just write something custom for it and reference the ARNs from the remote state?

we have a bunch of examples for IRSA, see here https://github.com/cloudposse/terraform-aws-components/tree/main/modules/eks

for example:

```
locals {
  enabled = module.this.enabled

  webhook_enabled = local.enabled ? try(var.webhook.enabled, false) : false
  webhook_host = local.webhook_enabled ? format(var.webhook.hostname_template, var.tenant, var.stage, var.environment) : "example.com"
  runner_groups_enabled = length(compact(values(var.runners)[*].group)) > 0
  docker_config_json_enabled = local.enabled && var.docker_config_json_enabled
  docker_config_json = one(data.aws_ssm_parameter.docker_config_json[*].value)

  github_app_enabled = length(var.github_app_id) > 0 && length(var.github_app_installation_id) > 0
  create_secret = local.enabled && length(var.existing_kubernetes_secret_name) == 0

  busy_metrics_filtered = { for runner, runner_config in var.runners : runner => try(runner_config.busy_metrics, null) == null ? null : {
    for k, v in runner_config.busy_metrics : k => v if v != null
  } }

  default_secrets = local.create_secret ? [
    {
      name = local.github_app_enabled ? "authSecret.github_app_private_key" : "authSecret.github_token"
      value = one(data.aws_ssm_parameter.github_token[*].value)
      type = "string"
    }
  ] : []

  webhook_secrets = local.create_secret && local.webhook_enabled ? [
    {
      name = "githubWebhookServer.secret.github_webhook_secret_token"
      value = one(data.aws_ssm_parameter.github_webhook_secret_token[*].value)
      type = "string"
    }
  ] : []

  set_sensitive = concat(local.default_secrets, local.webhook_secrets)

  default_iam_policy_statements = [
    {
      sid = "AllowECRActions"
      actions = [
        # This is intended to be everything except create/delete repository
        # and get/set/delete repositoryPolicy
        "ecr:BatchCheckLayerAvailability",
        "ecr:BatchDeleteImage",
        "ecr:BatchGetImage",
        "ecr:CompleteLayerUpload",
        "ecr:DeleteLifecyclePolicy",
        "ecr:DescribeImages",
        "ecr:DescribeImageScanFindings",
        "ecr:DescribeRepositories",
        "ecr:GetAuthorizationToken",
        "ecr:GetDownloadUrlForLayer",
        "ecr:GetLifecyclePolicy",
        "ecr:GetLifecyclePolicyPreview",
        "ecr:GetRepositoryPolicy",
        "ecr:InitiateLayerUpload",
        "ecr:ListImages",
        "ecr:PutImage",
        "ecr:PutImageScanningConfiguration",
        "ecr:PutImageTagMutability",
        "ecr:PutLifecyclePolicy",
        "ecr:StartImageScan",
        "ecr:StartLifecyclePolicyPreview",
        "ecr:TagResource",
        "ecr:UntagResource",
        "ecr:UploadLayerPart",
      ]
      resources = ["*"]
    }
  ]

  s3_iam_policy_statements = length(var.s3_bucket_arns) > 0 ? [
    {
      sid = "AllowS3Actions"
      actions = [
        "s3:PutObject",
        "s3:GetObject",
        "s3:DeleteObjectVersion",
        "s3:ListBucket",
        "s3:DeleteObject",
        "s3:PutObjectAcl"
      ]
      resources = flatten([
        for arn in var.s3_bucket_arns : [arn, "${arn}/*"]
      ])
    },
  ] : []

  iam_policy_statements = concat(local.default_iam_policy_statements, local.s3_iam_policy_statements)
}

data "aws_ssm_parameter" "github_token" {
  count = local.create_secret ? 1 : 0

  name = var.ssm_github_secret_path
  with_decryption = true
}

data "aws_ssm_parameter" "github_webhook_secret_token" {
  count = local.create_secret && local.webhook_enabled ? 1 : 0

  name = var.ssm_github_webhook_secret_token_path
  with_decryption = true
}

data "aws_ssm_parameter" "docker_config_json" {
  count = local.docker_config_json_enabled ? 1 : 0
  name = var.ssm_docker_config_json_path
  with_decryption = true
}

module "actions_runner_controller" {
  source = "cloudposse/helm-release/aws"
  version = "0.10.0"

  name = "" # avoids hitting length restrictions on IAM Role names
  chart = var.chart
  repository = var.chart_repository
  description = var.chart_description
  chart_version = var.chart_version
  wait = var.wait
  atomic = var.atomic
  cleanup_on_fail = var.cleanup_on_fail
  timeout = var.timeout

  kubernetes_namespace = var.kubernetes_namespace
  create_namespace_with_kubernetes = var.create_namespace

  eks_cluster_oidc_issuer_url = module.eks.outputs.eks_cluster_identity_oidc_issuer

  service_account_name = module.this.name
  service_account_namespace = var.kubernetes_namespace

  iam_role_enabled = true

  iam_policy_statements = local.iam_policy_statements

  values = compact([
    # hardcoded values
    file("${path.module}/resources/values.yaml"),
    # standard k8s object settings
    yamlencode({
      fullnameOverride = module.this.name,
      serviceAccount = {
        name = module.this.name
      },
      resources = var.resources
      rbac = {
        create = var.rbac_enabled
      }
      githubWebhookServer = {
        enabled = var.webhook.enabled
        queueLimit = var.webhook.queue_limit
        useRunnerGroupsVisibility = local.runner_groups_enabled
        ingress = {
          enabled = var.webhook.enabled
          hosts = [
            {
              host = local.webhook_host
              paths = [
                {
                  path = "/"
                  pathType = "Prefix"
                }
              ]
            }
          ]
        }
      },
      authSecret = {
        enabled = true
        create = local.create_secret
      }
    }),
    local.github_app_enabled ? yamlencode({
      authSecret = {
        github_app_id = var.github_app_id
        github_app_installation_id = var.github_app_installation_id
      }
    }) : "",
    local.create_secret ? "" : yamlencode({
      authSecret = {
        name = var.existing_kubernetes_secret_name
      },
      githubWebhookServer = {
        secret = {
          name = var.existing_kubernetes_secret_name
        }
      }
    }),
    # additional values
    yamlencode(var.chart_values)
  ])

  set_sensitive = local.set_sensitive

  context = module.this.context
}

module "actions_runner" {
  for_each = local.enabled ? var.runners : {}

  source = "cloudposse/helm-release/aws"
  version = "0.10.0"

  name = each.key
  chart = "${path.module}/charts/actions-runner"

  kubernetes_namespace = var.kubernetes_namespace
  create_namespace = false # will be created by controller above
  atomic = var.atomic

  eks_cluster_oidc_issuer_url = module.eks.outputs.eks_cluster_identity_oidc_issuer

  values = compact([
    yamlencode({
      release_name = each.key
      pod_annotations = lookup(each.value, "pod_annotations", "")
      service_account_name = module.actions_runner_controller.service_account_name
      type = each.value.type
      scope = each.value.scope
      image = each.value.image
      dind_enabled = each.value.dind_enabled
      service_account_role_arn = module.actions_runner_controller.service_account_role_arn
      resources = each.value.resources
      storage = each.value.storage
      labels = each.value.labels
      scale_down_delay_seconds = each.value.scale_down_delay_seconds
      min_replicas = each.value.min_replicas
      max_replicas = each.value.max_replicas
      webhook_driven_scaling_enabled = each.value.webhook_driven_scaling_enabled
      webhook_startup_timeout = lookup(each.value, "webhook_startup_timeout", "")
      pull_driven_scaling_enabled = each.value.pull_driven_scaling_enabled
      pvc_enabled = each.value.pvc_enabled
      node_selector = each.value.node_selector
      tolerations = each.value.tolerations
      docker_config_json_enabl…
```

"cloudposse/helm-release/aws" 1) creates a Helm release; 2) creates an IRSA with the required permissions and assigns it to the Helm release k8s Service Account

ALB controller is another example https://github.com/cloudposse/terraform-aws-components/blob/main/modules/eks/alb-controller/main.tf#L23

iam_role_enabled = true

external-dns is yet another https://github.com/cloudposse/terraform-aws-components/blob/main/modules/eks/external-dns/main.tf#L44

iam_role_enabled = true

those use remote-state to get the EKS component from the remote state https://github.com/cloudposse/terraform-aws-components/blob/main/modules/eks/external-dns/remote-state.tf

module "eks" {

source = "cloudposse/stack-config/yaml//modules/remote-state"

version = "1.5.0"

component = var.eks_component_name

context = module.this.context

}

module "dns_gbl_delegated" {

source = "cloudposse/stack-config/yaml//modules/remote-state"

version = "1.5.0"

component = "dns-delegated"

environment = var.dns_gbl_delegated_environment_name

context = module.this.context

defaults = {

zones = {}

}

}

module "dns_gbl_primary" {

source = "cloudposse/stack-config/yaml//modules/remote-state"

version = "1.5.0"

component = "dns-primary"

environment = var.dns_gbl_primary_environment_name

context = module.this.context

ignore_errors = true

defaults = {

zones = {}

}

}

and then use the credentials from the EKS cluster in the helm and kubernetes providers https://github.com/cloudposse/terraform-aws-components/blob/main/modules/eks/external-dns/provider-helm.tf

##################

#

# This file is a drop-in to provide a helm provider.

#

# It depends on 2 standard Cloud Posse data source modules to be already

# defined in the same component:

#

# 1. module.iam_roles to provide the AWS profile or Role ARN to use to access the cluster

# 2. module.eks to provide the EKS cluster information

#

# All the following variables are just about configuring the Kubernetes provider

# to be able to modify EKS cluster. The reason there are so many options is

# because at various times, each one of them has had problems, so we give you a choice.

#

# The reason there are so many "enabled" inputs rather than automatically

# detecting whether or not they are enabled based on the value of the input

# is that any logic based on input values requires the values to be known during

# the "plan" phase of Terraform, and often they are not, which causes problems.

#

variable "kubeconfig_file_enabled" {

type = bool

default = false

description = "If `true`, configure the Kubernetes provider with `kubeconfig_file` and use that kubeconfig file for authenticating to the EKS cluster"

}

variable "kubeconfig_file" {

type = string

default = ""

description = "The Kubernetes provider `config_path` setting to use when `kubeconfig_file_enabled` is `true`"

}

variable "kubeconfig_context" {

type = string

default = ""

description = "Context to choose from the Kubernetes kube config file"

}

variable "kube_data_auth_enabled" {

type = bool

default = false

description = <<-EOT

If `true`, use an `aws_eks_cluster_auth` data source to authenticate to the EKS cluster.

Disabled by `kubeconfig_file_enabled` or `kube_exec_auth_enabled`.

EOT

}

variable "kube_exec_auth_enabled" {

type = bool

default = true

description = <<-EOT

If `true`, use the Kubernetes provider `exec` feature to execute `aws eks get-token` to authenticate to the EKS cluster.

Disabled by `kubeconfig_file_enabled`, overrides `kube_data_auth_enabled`.

EOT

}

variable "kube_exec_auth_role_arn" {

type = string

default = ""

description = "The role ARN for `aws eks get-token` to use"

}

variable "kube_exec_auth_role_arn_enabled" {

type = bool

default = true

description = "If `true`, pass `kube_exec_auth_role_arn` as the role ARN to `aws eks get-token`"

}

variable "kube_exec_auth_aws_profile" {

type = string

default = ""

description = "The AWS config profile for `aws eks get-token` to use"

}

variable "kube_exec_auth_aws_profile_enabled" {

type = bool

default = false

description = "If `true`, pass `kube_exec_auth_aws_profile` as the `profile` to `aws eks get-token`"

}

variable "kubeconfig_exec_auth_api_version" {

type = string

default = "client.authentication.k8s.io/v1beta1"

description = "The Kubernetes API version of the credentials returned by the `exec` auth plugin"

}

variable "helm_manifest_experiment_enabled" {

type = bool

default = false

description = "Enable storing of the rendered manifest for helm_release so the full diff of what is changing can been seen in the plan"

}

locals {

kubeconfig_file_enabled = var.kubeconfig_file_enabled

kube_exec_auth_enabled = local.kubeconfig_file_enabled ? false : var.kube_exec_auth_enabled

kube_data_auth_enabled = local.kube_exec_auth_enabled ? false : var.kube_data_auth_enabled

# Eventually we might try to get this from an environment variable

kubeconfig_exec_auth_api_version = var.kubeconfig_exec_auth_api_version

exec_profile = local.kube_exec_auth_enabled && var.kube_exec_auth_aws_profile_enabled ? [

"--profile", var.kube_exec_auth_aws_profile

] : []

kube_exec_auth_role_arn = coalesce(var.kube_exec_auth_role_arn, module.iam_roles.terraform_role_arn)

exec_role = local.kube_exec_auth_enabled && var.kube_exec_auth_role_arn_enabled ? [

"--role-arn", local.kube_exec_auth_role_arn

] : []

# Provide dummy configuration for the case where the EKS cluster is not available.

certificate_authority_data = try(module.eks.outputs.eks_cluster_certificate_authority_data, "")

# Use coalesce+try to handle both the case where the output is missing and the case where it is empty.

eks_cluster_id = coalesce(try(module.eks.outputs.eks_cluster_id, ""), "missing")

eks_cluster_endpoint = try(module.eks.outputs.eks_cluster_endpoint, "")

}

data "aws_eks_cluster_auth" "eks" {

count = local.kube_data_auth_enabled ? 1 : 0

name = local.eks_cluster_id

}

provider "helm" {

kubernetes {

host = local.eks_cluster_endpoint

cluster_ca_certificate = base64decode(local.certificate_authority_data)

token = local.kube_data_auth_enabled ? one(data.aws_eks_cluster_auth.eks[*].token) : null

# The Kubernetes provider will use information from KUBECONFIG if it exists, but if the default cluster

# in KUBECONFIG is some other cluster, this will cause problems, so we override it always.

config_path = local.kubeconfig_file_enabled ? var.kubeconfig_file : ""

config_context = var.kubeconfig_context

dynamic "exec" {

for_each = local.kube_exec_auth_enabled && length(local.certificate_authority_data) > 0 ? ["exec"] : []

content {

api_version = local.kubeconfig_exec_auth_api_version

command = "aws"

args = concat(local.exec_profile, [

"eks", "get-token", "--cluster-name", local.eks_cluster_id

], local.exec_role)

}

}

}

experiments {

manifest = var.helm_manifest_experiment_enabled && module.this.enabled

}

}

provider "kubernetes" {

host = local.eks_cluster_endpoint

cluster_ca_certificate = base64decode(local.certificate_authority_data)

token = local.kube_data_auth_enabled ? one(data.aws_eks_cluster_auth.eks[*].token) : null

# The Kubernetes provider will use information from KUBECONFIG if it exists, but if the default cluster

# in KUBECONFIG is some other cluster, this will cause problems, so we override it always.

config_path = local.kubeconfig_file_enabled ? var.kubeconfig_file : ""

config_context = var.kubeconfig_context

dynamic "exec" {

for_each = local.kube_exec_auth_enabled && length(local.certificate_authority_data) > 0 ? ["exec"] : []

content {

api_version = local.kubeconfig_exec_auth_api_version

command = "aws"

args = concat(local.exec_profile, [

"eks", "get-token", "--cluster-name", local.eks_cluster_id

], local.exec_role)

}

}

}

you just need to copy (verbatim) the provider-helm.tf file into your component folder

and use the remote state module

module "eks" {

source = "cloudposse/stack-config/yaml//modules/remote-state"

version = "1.5.0"

component = var.eks_component_name

context = module.this.context

}

and enable the IAM role for IRSA: iam_role_enabled = true

and provide the required permissions so the Pods will assume the role and will be able to access the AWS resources

everything here https://github.com/cloudposse/terraform-aws-components/tree/main/modules/eks is used in production by many companies, and many of those components create and use IRSA - take a look

Oh ok, yeah I looked at these. But they are very specifically tied to helm releases, and do not require you to reference a lot of different resources in your stack such as SQS, S3, SSM,… So I was just wondering if there were any generic standards solely for the service roles of custom apps which are not deployed through terraform

Terraform module to provision an EKS IAM Role for Service Account

it’s also used by https://github.com/cloudposse/terraform-aws-helm-release

Create helm release and common aws resources like an eks iam role

2023-10-04

2023-10-05

2023-10-12

I think atmos vendor pull should not default to atmos vendor pull [STACK] when it will always give the error command 'atmos vendor pull --stack <stack>' is not implemented yet

it should instead default to atmos vendor pull [COMPONENT]

i.e. I want to run atmos vendor pull aurora-postgres

it’s the ergonomics i’d expect from the subcommand

we are planning to add support for stacks and other artifacts

so a parameter to specify the type is required

but the current behavior is basically error by default

will improve the error handling in the next release, which will be today/tomorrow, which btw will allow pulling artifacts (including terraform components) from OCI registries, eg AWS public ECR

I agree though that atmos vendor pull [COMPONENT] would feel more natural.

but in that case, we’ll not be able to pull any other types of artifacts. And if we add other types, then the command will not be symmetric

just added this error checking, will be in the next release

atmos vendor pull infra/vpc2

since inherits is an array and doesn’t merge when overridden, is there a solution where we could inherit without having to copy all other inherits from the main file?

ex:

• dev: retention_in_days: 3

• prod: retention_in_days: 120

we would rather not have 120 sprayed across ~68 files so would like to use inherits. doing so means updating the inheritance in dev and prod each time it changes (surely someone will forget and break prod).

just curious of any solutions.

Component Inheritance is one of the principles of Component-Oriented Programming (COP)

you can have a set of abstract base components (abstract so nobody would provision them even if attempted)

then another set of abstract base components that inherit from the first set and add more things

so you can combine it in diff combinations
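
A sketch of that layering for the retention_in_days example, written as a shell heredoc so it can be pasted into a scratch repo. The component names (`log-group`, `app-logs`), the `stacks/catalog` location, and the `STACKS_DIR` variable are assumptions for illustration; `metadata.type: abstract` and `metadata.inherits` are the Atmos settings being demonstrated. In a real repo the concrete component would normally live in the prod stack manifest rather than in the catalog file.

```shell
# Assumed layout and names; adjust to your repo.
stacks_dir="${STACKS_DIR:-stacks}"
mkdir -p "$stacks_dir/catalog"

cat > "$stacks_dir/catalog/log-group.yaml" <<'EOF'
components:
  terraform:
    # First base layer: shared defaults (the dev value in this example).
    log-group/defaults:
      metadata:
        type: abstract
        component: log-group
      vars:
        retention_in_days: 3

    # Second base layer: inherits the first and overrides one value for prod.
    log-group/prod:
      metadata:
        type: abstract
        component: log-group
        inherits:
          - log-group/defaults
      vars:
        retention_in_days: 120

    # A concrete component lists only the layer it needs, so the
    # inheritance chain itself is maintained in one place.
    app-logs:
      metadata:
        component: log-group
        inherits:
          - log-group/prod
EOF
```

With this shape, changing what prod inherits means editing `log-group/prod` once instead of touching every file that needs the value 120.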

what is this used for?

settings:
  config:
    is_prod: false

this just shows that the settings section is a free-form map, and you can put anything into it

then, it can be read in CI/CD (e.g. GHA) to make some decisions based on values

also, it’s present in the outputs of many Atmos commands, e.g. atmos describe component, which can be used in shell scripts, GHA, and Atmos custom commands

do you have an example of how you use it in GHA?

- key: ATMOS_IS_PROD

- 'echo settings.config.is_prod: "{{ .ComponentConfig.settings.config.is_prod }}"'

example in a custom command ^

this is a GHA that installs and uses Atmos https://github.com/cloudposse/github-action-atmos-affected-stacks/blob/main/action.yml

name: "Atmos Affected Stacks"
description: "A GitHub Action to determine the affected stacks for a given pull request"
author: [email protected]
branding:
  icon: "file"
  color: "white"
inputs:
  default-branch:
    description: The default branch to use for the base ref.
    required: false
    default: ${{ github.event.repository.default_branch }}
  head-ref:
    description: The head ref to checkout. If not provided, the head default branch is used.
    required: false
  install-atmos:
    description: Whether to install atmos
    required: false
    default: "true"
  atmos-version:
    description: The version of atmos to install if install-atmos is true
    required: false
    default: "latest"
  atmos-config-path:
    description: The path to the atmos.yaml file
    required: false
    default: atmos.yaml
  atmos-include-spacelift-admin-stacks:
    description: Whether to include the Spacelift admin stacks of affected stacks in the output
    required: false
    default: "false"
  install-terraform:
    description: Whether to install terraform
    required: false
    default: "true"
  terraform-version:
    description: The version of terraform to install if install-terraform is true
    required: false
    default: "latest"
  install-jq:
    description: Whether to install jq
    required: false
    default: "false"
  jq-version:
    description: The version of jq to install if install-jq is true
    required: false
    default: "1.6"
  jq-force:
    description: Whether to force the installation of jq
    required: false
    default: "true"
outputs:
  affected:
    description: The affected stacks
    value: ${{ steps.affected.outputs.affected }}
  has-affected-stacks:
    description: Whether there are affected stacks
    value: ${{ steps.affected.outputs.affected != '[]' }}
  matrix:
    description: A matrix suitable for use for GitHub Actions of the affected stacks
    value: ${{ steps.matrix.outputs.matrix }}
runs:
  using: "composite"
  steps:
    - if: ${{ inputs.install-terraform == 'true' }}
      uses: hashicorp/setup-terraform@v2
      with:
        terraform_version: ${{ inputs.terraform-version }}
    - if: ${{ inputs.install-atmos == 'true' }}
      uses: cloudposse/[email protected]
      env:
        ATMOS_CLI_CONFIG_PATH: ${{inputs.atmos-config-path}}
      with:
        atmos-version: ${{ inputs.atmos-version }}
        install-wrapper: false
    - if: ${{ inputs.install-jq == 'true' }}
      uses: dcarbone/[email protected]
      with:
        version: ${{ inputs.jq-version }}
        force: ${{ inputs.jq-force }}
    # atmos describe affected requires the main branch of the git repo to be present on disk so it can compare the
    # current branch to it to determine the affected stacks. This is different from a file-based git diff in that we
    # look at the contents of the stack files to determine if any have changed.
    - uses: actions/checkout@v3
      with:
        ref: ${{ inputs.default-branch }}
        path: main-branch
        fetch-depth: 0
    - name: checkout head ref
      id: head-ref
      shell: bash
      run: git checkout ${{ inputs.head-ref }}
      working-directory: main-branch
    - name: atmos affected stacks
      id: affected
      shell: bash
      env:
        ATMOS_CLI_CONFIG_PATH: ${{inputs.atmos-config-path}}
      run: |
        if [[ "${{ inputs.atmos-include-spacelift-admin-stacks }}" == "true" ]]; then
          atmos describe affected --file affected-stacks.json --verbose=true --repo-path "$GITHUB_WORKSPACE/main-branch" --include-spacelift-admin-stacks=true
        else
          atmos describe affected --file affected-stacks.json --verbose=true --repo-path "$GITHUB_WORKSPACE/main-branch"
        fi
        affected=$(jq -c '.' < affected-stacks.json)
        printf "%s" "affected=$affected" >> $GITHUB_OUTPUT
    - name: build matrix
      id: matrix
      shell: bash
      run: |
        matrix=$(jq -c '{include:[.[]]}' < affected-stacks.json)
        echo "matrix=$matrix" >> $GITHUB_OUTPUT

instead of atmos describe affected https://github.com/cloudposse/github-action-atmos-affected-stacks/blob/main/action.yml#L106, you can call any Atmos command, including atmos describe component. Then use jq or yq to select settings.config.xxxx (or whatever values you have in settings)

atmos describe affected --file affected-stacks.json --verbose=true --repo-path "$GITHUB_WORKSPACE/main-branch" --include-spacelift-admin-stacks=true

this is another example of using Atmos commands in GHA https://github.com/cloudposse/github-action-atmos-terraform-select-components/blob/8be5c434fb10cf870ccabf94bda98d43d88971b0/src/filter.sh#L14

component_path=$(atmos describe component $component -s $stack --format json | jq -rc '.. | select(.component_info)? | .component_info.component_path')

and this is an example of using the settings section as a free-form map https://github.com/cloudposse/github-action-atmos-terraform-select-components/blob/8be5c434fb10cf870ccabf94bda98d43d88971b0/src/filter.sh#L3

JQ_QUERY=${JQ_QUERY:-'to_entries[] | .key as $parent | .value.components.terraform | to_entries[] | select(.value.settings.github.actions_enabled // false) | [$parent, .key] | join(",")'}

settings.github.actions_enabled was added to Atmos stacks, and the GHA reads it

2023-10-13

Seems Terraform is implementing stacks… US and foreign patents pending even! https://www.hashicorp.com/blog/new-terraform-testing-and-ux-features-reduce-toil-errors-and-costs

Terraform and Terraform Cloud improve developer usability and velocity with a test framework, generated module tests, and stacks.

Haha, yea, saw that! If anything, it’s vindication that we’ve been thinking about it the right way all the time.

The patent itself is on something else, more about how their pipeline is implemented.

Techniques, systems, and devices are disclosed for implementing a system that uses machine generated infrastructure code for software development and infrastructure operations, allowing automated deployment and maintenance of a complete set of infrastructure components. One example system includes a user interface and a management platform in communication with the user interface. The user interface is configured to allow a user to deploy components for a complete web system using a set of infrastructure code such that the components are automatically configured and integrated to form the complete web system on one or more network targets.

It’s also pending, so not granted and highly likely to be contested.

I’m about to do a bit of a spike on refactoring from a monolithic, landing zone terraform root module & state file to atmos… any tools or techniques to help w/ this migration… any tips? seems a bit daunting

What do you need to do? what steps you need to take?

well… i’m most worried about splitting up the state file into multiple state files…

ahhh that

there are some tools like tf-migrate, terraformer, etc.

now if you duplicate the state you could potentially just delete what you will not be using automagically

For example, if you were moving an RDS cluster over another stack/module, but the resource ids/names do not change and everything else in that state is not needed, you could move the code and the plan will only keep the RDS cluster and it will delete everything else since the code is not there.

that way if you screw up you just copy the state again and try it etc

you can use the moved {} and import {} blocks in some cases too if you need to

This all depends on how the code is structured, obviously
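
A related option, sketched here as an alternative to letting the plan delete the leftovers: work on the copied state and drop the addresses the new component does not own with `terraform state rm`, which removes them from that state without destroying the real resources. The helper below only does the text filtering on the output of `terraform state list`; the function name `addresses_to_drop` and the example addresses are hypothetical.

```shell
# Hypothetical helper: read resource addresses on stdin (one per line, as
# printed by `terraform state list`) and print those that do NOT start with
# the given prefix, i.e. the candidates to remove from the copied state.
addresses_to_drop() {
  # index() does a literal match; a line is kept out of the output only
  # when the prefix occurs at position 1.
  awk -v keep="$1" 'index($0, keep) != 1'
}
```

For the RDS example, `terraform state list | addresses_to_drop 'module.rds_cluster'` would list everything outside that module; the list should be reviewed by hand before any address is passed to `terraform state rm`, and a backup of the state kept so the step can be retried.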

yeah.. ok that makes sense and gives me a few avenues to go down. I like the idea of copying state … also love the new moved/import blocks. Have used those quite a bit already

v1.46.0 what

Update to Go 1.21 Update/improve Atmos help for atmos-specific commands Improve atmos vendor pull command error handling Add Atmos custom commands to atmos.yaml and docs Add examples of Rego policies for Atmos components and stacks validation. Update docs. See https://atmos.tools/core-concepts/components/validation/ Add Sprig functions support to Go templates in Atmos vendoring Improve context…

Use JSON Schema and OPA policies to validate Components.

Sprig function in component vendoring! Great stuff!

2023-10-16

It’d be nice to have developer tooling for atmos like a yaml spec in VScode for stack files

also the “schema” section of docs is not very comprehensive. https://atmos.tools/core-concepts/stacks/#schema

It would be nice to have a full reference page like github actions for their workflow files

If I want to make a separate environment in a different continent/account, which property of the label component would be best to change to differentiate between the two? I’m having a hard time choosing, as it impacts other parts of the stack too. Ideally I think it would be tenant, but then I would also need to have tenant in the name_pattern config for atmos, and that would break all of the previous stacks. For example, if I want to use something like aws-team-roles and both continents have production environments, they would both be in stack acme-gbl-prod ({namespace}-{environment}-{stage}), which would not work. So naming them differently would be the only option.

if you want to separate the two environments completely, you can use a diff namespace (to make it a diff Org)

if you want to add tenant, you can do it on a set of the stacks w/o affecting the already existing stacks (which don’t use tenant). The label module has support for it, and you use those settings in the Atmos YAML stack manifest for that part of the infra. In other words, you can have some part of the config using tenant and the other part not using it

depending on how you want to segregate your infra, you can use one way or another

here is the config w/o using tenant (you add that to the _defaults.yaml stack manifest for the first part of the infra)

terraform:
  vars:
    label_order:
      - namespace
      - environment
      - stage
      - name
      - attributes
    descriptor_formats:
      account_name:
        format: "%v"
        labels:
          - stage
      stack:
        format: "%v-%v"
        labels:
          - environment
          - stage

if you want to separate the two environments completely, you can use a diff namespace (to make it a diff Org) (edited)

Unfortunately it will be part of the same org, so changing namespace will affect it own share of components

“here is the config w/o using tenant (you add that to the _defaults.yaml stack manifest for the first part of the infra)”

Does that also affect the Atmos stack names? It doesn’t, right? Then I would need a separate config for it?

and here is the config to use tenant in Atmos stacks, you add it to some other stack manifest for the new infra

terraform:
  vars:
    label_order:
      - namespace
      - tenant
      - environment
      - stage
      - name
      - attributes
    descriptor_formats:
      account_name:
        format: "%v"
        labels:
          - tenant
          - stage
      stack:
        format: "%v-%v-%v"
        labels:
          - tenant
          - environment
          - stage

Does that also affect the atmos stack names?

if you add tenant it will affect everything

Ah I see, theres a separate descriptor for stacks which gets picked up by atmos as the stack name config?

for the existing stacks that currently don’t use tenant, you can specify a “virtual” tenant and use it in Atmos stack names but w/o updating anything in the existing resources

you can open a PR and DM me, I’ll review and improve

for example, one company did not use tenant before, and all resource names and Atmos stack names were w/o tenant. Then we added a “virtual” tenant for them to preserve all the existing resources

import:
  - orgs/<namespace>/_defaults
vars:
  tenant: ""

then we used this config for the old part of infra w/o tenant

terraform:
  vars:
    label_order:
      - namespace
      - environment
      - stage
      - name
      - attributes
    descriptor_formats:
      account_name:
        format: "%v"
        labels:
          - stage
      stack:
        format: "%v-%v"
        labels:
          - environment
          - stage

but in your case, I would add a “virtual” tenant for the existing part of the repo and start using it in the Atmos stacks (so everything is consistent and symmetric)

I just wouldn’t use tenant in the resource names for the existing part of the repo, so as not to destroy everything that was already provisioned

so I would add

import:
  - orgs/<namespace>/_defaults
vars:
  tenant: core # or other name to name the "virtual" tenant

then all Atmos stack names would be namespace-tenant-environment-name-attributes, and for the virtual tenant I’d use

namespace-core-environment-name-attributes

and then I’d configure the existing part of the repo in Atmos stack manifest to not use tenant just for that part by using this config

terraform:
  vars:
    label_order:
      - namespace
      - environment
      - stage
      - name
      - attributes
    descriptor_formats:
      account_name:
        format: "%v"
        labels:
          - stage
      stack:
        format: "%v-%v"
        labels:
          - environment
          - stage

and for the new part of the infra I’d add a new tenant, and use this config

terraform:
  vars:
    label_order:
      - namespace
      - tenant
      - environment
      - stage
      - name
      - attributes
    descriptor_formats:
      account_name:
        format: "%v"
        labels:
          - tenant
          - stage
      stack:
        format: "%v-%v-%v"
        labels:
          - tenant
          - environment
          - stage

so the existing resources would remain the same
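To see why the two label configs give different resource names, here is a rough sketch of how an ID can be assembled from label_order. It is a simplified model, not the label module's actual code, and the context values (acme, core, ue1, prod, vpc) are made up for illustration.

```python
def build_id(context, label_order, delimiter="-"):
    """Join the non-empty labels listed in label_order into a single ID."""
    parts = []
    for label in label_order:
        value = context.get(label)
        if isinstance(value, (list, tuple)):  # attributes is a list of strings
            value = delimiter.join(value)
        if value:  # empty or missing labels are skipped
            parts.append(value)
    return delimiter.join(parts)


# Hypothetical context for one component
context = {
    "namespace": "acme",
    "tenant": "core",
    "environment": "ue1",
    "stage": "prod",
    "name": "vpc",
    "attributes": [],
}

old_order = ["namespace", "environment", "stage", "name", "attributes"]
new_order = ["namespace", "tenant", "environment", "stage", "name", "attributes"]

print(build_id(context, old_order))  # acme-ue1-prod-vpc
print(build_id(context, new_order))  # acme-core-ue1-prod-vpc
```

With tenant left out of label_order, the tenant value can still be set for stack naming while the generated resource names stay as they were.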

So, does

stack:
  format: "%v-%v-%v"
  labels:
    - tenant
    - environment
    - stage

override name_pattern then?

the new resources will have tenant in the names and IDs

and all atmos commands would use tenant in the stack names (‘core’ for the old “virtual” one)

override name_pattern then?

no, name pattern in atmos.yaml - you change it to use tenant as well

so when you execute Atmos commands, you always use tenant in the stack names for consistency

so all existing Atmos stack names would change to use tenant in the stack names, but all existing resources remain the same (w/o tenant in the names/IDs)

Ah ok, I think thats the main issue right now. Because my current name_pattern is {namespace}-{environment}-{stage} , but as I realised now tenant would be more suited than namespace

yes, try that

add tenant to name_pattern

add tenant to the existing infra (with a proper name)

add the label configs (shown above) to the old config (not use tenant to preserve the resources), and to the new config (using tenant in the resource names)
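A small sketch of why tenant has to go into name_pattern, assuming the pattern is expanded by simple token substitution (a simplification, not Atmos's actual implementation). The tenant names core and emea and the pattern with {tenant} are hypothetical examples.

```python
def stack_name(name_pattern, context):
    """Fill the {token} placeholders of a name pattern from the context variables."""
    return name_pattern.format(**context)


# Two production stacks on different continents (hypothetical values)
existing = {"namespace": "acme", "tenant": "core", "environment": "gbl", "stage": "prod"}
new_region = {"namespace": "acme", "tenant": "emea", "environment": "gbl", "stage": "prod"}

old_pattern = "{namespace}-{environment}-{stage}"
new_pattern = "{namespace}-{tenant}-{environment}-{stage}"

# Without tenant both stacks collapse to the same name
print(stack_name(old_pattern, existing), stack_name(old_pattern, new_region))
# With tenant they are distinct
print(stack_name(new_pattern, existing), stack_name(new_pattern, new_region))
```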

What is descriptor.stack used for exactly? is it for backend storage location?

no, not backend. it’s used in the new account-map/dynamic-roles.tf

2023-10-17

Are there downsides to directly modifying the workspace_key_prefix to force the S3 backend structure to match a non-standard component hierarchy?

Example:

# local directory structure
components/
  terraform/
    account/
    account-map/
    vpc/
    bespoke/
      component-a/
      component-b/

# auto-generated workspaces+prefixes in S3
account/core-ue1-root/terraform.tfstate
account-map/core-ue1-root/terraform.tfstate
...
bespoke-component-a/plat-ue2-devt/terraform.tfstate
bespoke-component-a/plat-ue2-prod/terraform.tfstate
...

# post hacking the workspace prefix to "bespoke/<component-name>"
account/core-ue1-root/terraform.tfstate
account-map/core-ue1-root/terraform.tfstate
...
bespoke/component-a/plat-ue2-devt/terraform.tfstate
bespoke/component-a/plat-ue2-prod/terraform.tfstate
...

I think the former approach could lead to a very flat S3 backend with potentially hundreds of top-level directories, with local sub-folders under components only distinguishable by prefix.

The second approach requires modifying the catalog of each new component to override the autogenerated workspace prefix that collapses all / characters to - , but would lead to a backend that:

a) mirrors the local component directory structure

b) groups all backend state files by their directory, whether this be a project, a team, service or “kind”

I’ve tested this approach in vanilla terraform, as there are some old unresolved github issues where people attempted this unsuccessfully (resorting to hacking the key property), but it does appear to work in modern Terraform

Will this cause any problems with Atmos under the hood?

you can set workspace_key_prefix per component in backend.s3 section, similar to https://github.com/cloudposse/atmos/blob/master/examples/complete/stacks/catalog/terraform/vpc.yaml#L8

workspace_key_prefix: infra-vpc

this is what Atmos will use if you set it

I’m aware that it’s technically feasible; I’m curious about whether it has any downstream consequences with any of the other atmos cli commands (e.g. describe affected etc.)

Also conscious that CloudPosse explicitly did not take this approach with your own nested components (e.g. tgw/spoke, spacelift/worker-pool etc), and wondering why you preferred a flat structure in S3?

I don’t want to deviate from the refarch if in doing so I break from some fundamental underlying assumption or principle that I might have missed in the documentation.

nothing is affected by the backend structure, atmos describe affected does not use it

it’s only for terraform

we don’t set workspace_key_prefix for all components and let Atmos generate it automatically

workspace_key_prefix is just when you want to override it for diff reasons, e.g. if you have already provisioned a component using some prefix, and want to keep it when you change Atmos component name w/o destroying the component first

I understand this does not directly answer your question. And the reason is that we did not consider anything else besides what is auto-generated by Atmos with the possibility of overriding it in some cases

I think that all makes sense, I appreciate my example represents a niche use-case of the tooling here.

It looks like there’s nothing that prevents using workspace prefix to group components, whether to mirror their position in the monorepo or for any other purpose.

Thanks very much for the help.

yes, nothing prevents you from making your own structure. Atmos generates it and writes to backend.tf.json, and then TF uses it

it’s just that you’ll have to set workspace_key_prefix on all the components in the YAML stack manifests

backend.s3 section participates in all the inheritance chains and deep-merging
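For reference, a sketch of how the state object path comes out in both cases. It assumes the layout shown in the example above (prefix, then workspace, then key) and the slash-to-dash collapsing of the auto-generated prefix described in the thread; the helper names are hypothetical.

```python
def default_prefix(component_path):
    """Auto-generated style prefix: slashes in the component path become dashes."""
    return component_path.replace("/", "-")


def state_object_key(workspace_key_prefix, workspace, key="terraform.tfstate"):
    """Path of the state object inside the bucket for a non-default workspace."""
    return f"{workspace_key_prefix}/{workspace}/{key}"


component = "bespoke/component-a"
workspace = "plat-ue2-devt"

# Flat layout from the auto-generated prefix
print(state_object_key(default_prefix(component), workspace))
# Nested layout when workspace_key_prefix is overridden per component
print(state_object_key(component, workspace))
```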

2023-10-18

Is there a way to unset a deep-merged property in atmos? I need certain tags on all my resources, so they are included in the defaults somewhere in the hierarchy. But for kubernetes resources these tags are not needed and/or cause errors. Setting them to null gives me another error. The only other solution I see would be to be more selective in importing these default tags, but that means more imports, so I would rather “exclude” than “include”.

Atmos supports deep-merging of maps at any level, but currently does not support removing items from the maps

we use a Go YAML lib which did not support special YAML syntax to specify removal

they have a new version of the lib now, but that’s not in Atmos yet

I would create a bunch of base abstract components with different combination of the values, and inherit from them in the real components
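A generic sketch of map deep-merging, to show why an override can add or replace keys but cannot remove one. This is an illustration only, not Atmos's merge code; how Atmos itself treats a null override is not modelled here, and the tag names are made up.

```python
def deep_merge(base, override):
    """Recursively merge override into base; values in override win."""
    result = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = value
    return result


defaults = {"vars": {"tags": {"team": "platform", "cost_center": "1234"}}}
k8s_component = {"vars": {"tags": {"cost_center": None, "app": "ingress"}}}

merged = deep_merge(defaults, k8s_component)
# The key is still present after the merge, only its value changed
print(sorted(merged["vars"]["tags"]))
```

Base abstract components avoid the problem by never introducing the unwanted key in the first place.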

Yeah, thats what I feared, makes sense

we will update and test the new YAML lib in Atmos that supports YAML syntax to remove attributes. The new version is actually completely different so will require some testing

Cool

You could possibly null the tag values and then use terraform to filter the tags

# stacks/catalog/abc.yaml
components:
  abc:
    tags:
      my_default_tag: value

# stacks/org/dev/us-east-1.yaml
import:
  - stacks/catalog/abc
components:
  abc:
    tags:
      my_default_tag: null

Then in terraform

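locals {
  # Keep only the tags that were not nulled out in the stack manifest.
  # Compare against null itself; the string "null" would not match a YAML null.
  real_tags = {
    for k, v in module.this.tags :
    k => v
    if v != null
  }
}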

And finally

module "something" {
  # ...
  tags = local.real_tags
}

The base abstracts make more sense in the long run tho. My proposal above is a bit of a hack

2023-10-19

For the atmos workflow files, it would be a nice addition to be able to tag the upper level steps, and be able to run all these steps specifying that tag (or skip), eg:

workflows:
  main-step-1:
    tags: [ slow ]
    steps: [ ... ]
  main-step-2:
    tags: [ fast ]
    steps: [ ... ]
  main-step-3:
    tags: [ slow ]
    steps: [ ... ]

atmos workflow --use-tags fast -f dummy or atmos workflow --skip-tags slow -f dummy

(bit like ansible playbooks)

as an alternative, have these tags attached to the steps itself, getting

atmos workflow all --skip-tags slow -f dummy

(assuming we have an all that calls the main-step-* workflows)
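A sketch of the selection semantics being proposed, using the workflow names and tags from the example above. Note that --use-tags and --skip-tags are the requested flags, not existing Atmos options, and the function is purely illustrative.

```python
def select_workflows(workflows, use_tags=None, skip_tags=None):
    """Return workflow names that match use_tags (if given) and none of skip_tags."""
    use, skip = set(use_tags or []), set(skip_tags or [])
    selected = []
    for name, spec in workflows.items():
        tags = set(spec.get("tags", []))
        if use and not (tags & use):
            continue  # a use filter is set and this workflow has none of those tags
        if tags & skip:
            continue  # explicitly skipped
        selected.append(name)
    return selected


workflows = {
    "main-step-1": {"tags": ["slow"]},
    "main-step-2": {"tags": ["fast"]},
    "main-step-3": {"tags": ["slow"]},
}

print(select_workflows(workflows, use_tags=["fast"]))   # ['main-step-2']
print(select_workflows(workflows, skip_tags=["slow"]))  # ['main-step-2']
```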

nice feature, and can be added

please create an issue in the atmos repo

yea, that would be cool!

Describe the Feature

For the atmos workflow files, it would be a nice addition to be able to tag the steps, and be able to run all these steps specifying that tag (or skip), similar to how ansible playbooks have it.

eg atmos workflow all --skip-tags slow -f dummy

Expected Behavior

running a subset of the steps, based on tags, like ansible (https://docs.ansible.com/ansible/latest/playbook_guide/playbooks_tags.html)

• selecting tagged steps to run
• selecting tagged steps not to run
• optionally: use the principle of always / never

each step could have 0 or more tags

Use Case

eg running various validation steps in an atmos workflow.

Some could be fast, others could be slow. Now need to define separate workflow commands. There could be other criteria as well. Now all these need additional workflow commands + another summary command to combine these as well.

Describe Ideal Solution

ansible-playbook like usage of tags to run selective steps in a workflow command

Alternatives Considered

multiple summary workflow commands

Additional Context

see: https://sweetops.slack.com/archives/C031919U8A0/p1697701370995069

2023-10-20

2023-10-24

I remember when running a terraform plan/apply, atmos would show the deep merge of the yaml stack component at the very top. I no longer see it and cannot find a way to turn it back on.

Is there a way to see that again using the atmos.yaml configuration?

Use the atmos.yaml configuration file to control the behavior of the atmos CLI.

Log level “Trace” will show all of that

ahhh! thank you!

2023-10-25

For atmos and subcommand, I see i can define a flag of type bool. How can I access this flag in the template, eg {{ .Flags.<flagname> }} throws a

template: step-0:5:14: executing "step-0" at <.Flags.xroot>: map has no entry for key "xroot"

@Andriy Knysh (Cloud Posse)

For atmos and subcommand, when a flag is like require-root , how can I access this flag in the template?

Quick question, has atmos ever had a 3rd party security audit? asking for a client that has high security requirements

Related question https://sweetops.slack.com/archives/C031KFY9C6M/p1698251886355849

Does cloudposse have some level of compliance like soc2 or similar ? or is compliance on the roadmap?

Not yet

Thanks for confirming Erik!

Is it on the road map by chance?

So to be clear, that’s more of a Cloud Posse question than an atmos question. Cloud Posse will probably pursue something like this if/when it pursues BAAs. It’s not on our current roadmap.

ah that makes sense. Thank you

v1.47.0 what

Add vendor.yaml as a new way of vendoring components, stacks and other artifacts Add/update docs

https://atmos.tools/core-concepts/vendoring/ https://atmos.tools/cli/commands/vendor/pull/

why Atmos natively supports the concept of “vendoring”, which is making copies of the 3rd party components, stacks, and other artifacts in your own…

Use Atmos vendoring to make copies of 3rd-party components, stacks, and other artifacts in your own repo.

Use this command to pull sources and mixins from remote repositories for Terraform and Helmfile components and stacks.

Hi there! New user of Atmos here. Just started experimenting yesterday and so far I like the promise of the framework/tool. I created a few simple stacks to experiment and am stuck with a terraform state related issue. I initially followed the guidance here (https://docs.cloudposse.com/components/library/aws/account/) to create a set of AWS accounts in a brand new AWS Master account (no other resource created). I am now trying to move the local state to S3 and am getting the following error:

Error: Error loading state error
│
│ with module.assume_role["hct-gbl-root-tfstate-purple-cow"].module.allowed_role_map.module.account_map.data.terraform_remote_state.data_source[0],
│ on .terraform/modules/assume_role.allowed_role_map.account_map/modules/remote-state/data-source.tf line 91, in data "terraform_remote_state" "data_source":
│ 91: backend = local.ds_backend
│
│ error loading the remote state: S3 bucket does not exist.
│
│ The referenced S3 bucket must have been previously created. If the S3 bucket
│ was created within the last minute, please wait for a minute or two and try
│ again.
│
│ Error: NoSuchBucket: The specified bucket does not exist
│ status code: 404, request id: W7M341334K7N0DVR, host id: mWPCc2QiX7Aw3ar+uB1wravpTivYZdCnVqVPoNaQXe7NNyOdKGyHbNcR5hJgZ0R5MoYY5TvwN+M=

This component is responsible for provisioning the full account hierarchy along with Organizational Units (OUs). It includes the ability to associate Service Control Policies (SCPs) to the Organization, each Organizational Unit and account.

My understanding is that the S3 bucket gets created as part of the execution of this TF script. What am I missing?

Command executed:

atmos terraform plan tfstate-backend --stack core-ue1-root

Any help will be greatly appreciated!

looks like you are trying to create tfstate-backend w/o having a TF state bucket to save the state in

for this to work, you need to comment out all the refs to the backend in the code and use a local backend to create the tfstate-backend component, which will create an S3 state bucket and a Dynamo table

then enable the S3 backend for the component and run terraform init again

terraform will detect the backend change and will offer you to move the state for the component from the local state to the already created S3 bucket

Thanks @Andriy Knysh (Cloud Posse)! I am assuming local backend was created automatically when I created the accounts. As a matter of fact, I do see the state files on the disk within the “account” component. I do have references to backend : s3 in other components. I am going to comment those references out and try this command again.

you need to create the backend first (tfstate-backend component), and then create anything else with terraform, account and other components

I see. What is my recourse now that I have already executed the commands for account creation and these accounts have been created? That might explain why I am now getting these errors with account_map.

Error: Attempt to get attribute from null value
│
│ on ../account-map/modules/roles-to-principals/main.tf line 25, in locals:
│ 25: aws_partition = module.account_map.outputs.aws_partition
│ ├────────────────
│ │ module.account_map.outputs is null
│
│ This value is null, so it does not have any attributes.
╵
╷
│ Error: Attempt to get attribute from null value
│
│ on ../account-map/modules/roles-to-principals/main.tf line 25, in locals:
│ 25: aws_partition = module.account_map.outputs.aws_partition
│ ├────────────────
│ │ module.account_map.outputs is null
│
│ This value is null, so it does not have any attributes.
╵

There are more errors - just pasted a couple for reference.

Good morning @Andriy Knysh (Cloud Posse) Any thoughts on the best way to get around this issue? What would happen if I start over (delete local state in account module)? Would the account_map module get the right list of accounts based on current configuration?

you need to provision the components in the correct order:

- tfstate-backend using local state (comment out the S3 backend)
- Uncomment the S3 backend and run terraform apply on tfstate-backend to move the state to S3
- Provision account (this will use S3 backend)
- Provision account-map (use S3 backend)

Note that account-map is used in all the other components to get the correct TF role to assume
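The ordering above is a dependency chain, which can be sketched as a small graph. The dependency map is an assumption drawn from this thread (vpc-flow-logs-bucket stands in for "all the other components"); it is not something Atmos computes for you here.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# component -> components that must be provisioned before it
depends_on = {
    "tfstate-backend": set(),
    "account": {"tfstate-backend"},
    "account-map": {"account"},
    "vpc-flow-logs-bucket": {"account-map"},
}

order = list(TopologicalSorter(depends_on).static_order())
for step, component in enumerate(order, start=1):
    print(step, component)
```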

if you have already created account using local state, you need to move the state to S3 https://medium.com/drmax-dev-blog/migration-of-terraform-state-between-various-backends-d3e0efedd61

Sometimes the need to move existing Terraform state from your local machine to remote backend or between remote backends should popup. So…

so if you have already provisioned account using local state, you need to add S3 backend to the component and run terraform init - Terraform will ask you to migrate the state from local to S3

Thanks @Andriy Knysh (Cloud Posse) Will try this shortly.

It took me a while, but was able to move state for all current components to S3. I followed the following sequence of activities/commands:

atmos vendor pull -c infra/tfstate-backend

atmos describe component tfstate-backend --stack core-ue1-root

atmos terraform init tfstate-backend --stack core-ue1-root

atmos terraform plan tfstate-backend --stack core-ue1-root

atmos terraform apply tfstate-backend --stack core-ue1-root

atmos terraform generate backend tfstate-backend --stack core-ue1-root

atmos terraform generate backend account --stack core-gbl-root

atmos terraform plan account --stack core-gbl-root

atmos terraform generate backend account-map --stack core-gbl-root

I am now able to plan account and account-map properly. The big lesson learned here is that I should have taken care of the state first, before creating the accounts.

if you set auto_generate_backend_file: true in atmos.yaml, Atmos will auto-generate all backends for all components

Use the atmos.yaml configuration file to control the behavior of the atmos CLI.

Thanks for that tip! I was wondering if I need to generate the backend for each component.

I did try to add vpc-flow-logs-bucket as was illustrated in the example after the above steps and am getting the following set of errors for account-map. “local.account_map is null”. Do I need to generate backend of each of the sub-modules of account-map as well? OR would the above flag address these issues?

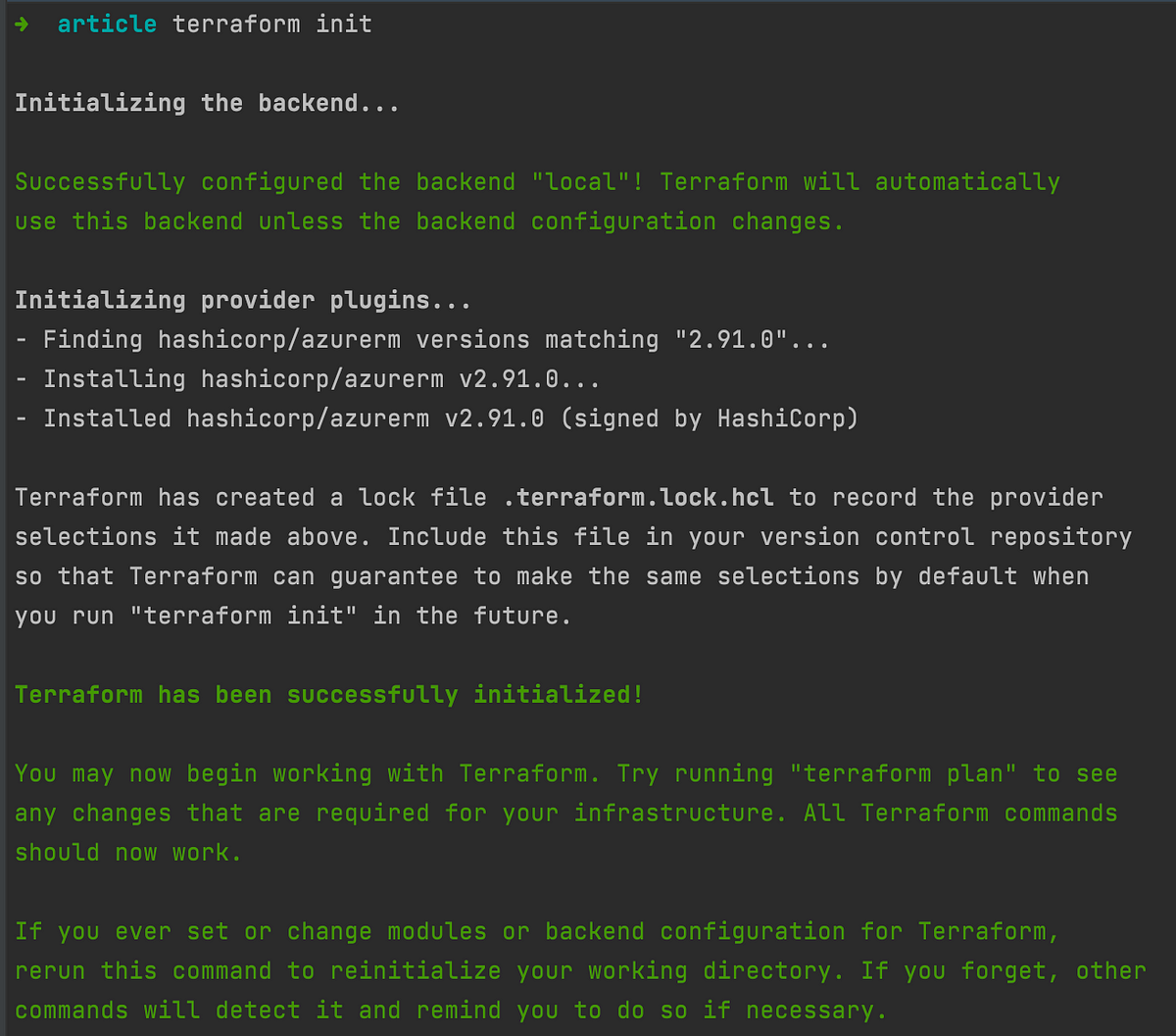

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.

Command info:
Terraform binary: terraform
Terraform command: plan
Arguments and flags: []
Component: infra/vpc-flow-logs-bucket
Stack: core-ue1-dev
Working dir: /atmos/components/terraform/infra/vpc-flow-logs-bucket

Executing command:
/usr/bin/terraform workspace select core-ue1-dev

Executing command:
/usr/bin/terraform plan -var-file core-ue1-dev-infra-vpc-flow-logs-bucket.terraform.tfvars.json -out core-ue1-dev-infra-vpc-flow-logs-bucket.planfile

module.iam_roles.module.account_map.data.utils_component_config.config[0]: Reading...
module.iam_roles.module.account_map.data.utils_component_config.config[0]: Read complete after 0s [id=b64bf6d1fbc423e85fa6975d72d99efc578fa53c]
module.iam_roles.module.account_map.data.terraform_remote_state.data_source[0]: Reading...
module.iam_roles.module.account_map.data.terraform_remote_state.data_source[0]: Read complete after 0s

Planning failed. Terraform encountered an error while generating this plan.
╷
│ Error: Attempt to get attribute from null value
│
│ on ../account-map/modules/iam-roles/main.tf line 32, in locals:
│ 32: profiles_enabled = coalesce(var.profiles_enabled, local.account_map.profiles_enabled)
│ ├────────────────
│ │ local.account_map is null
│
│ This value is null, so it does not have any attributes.
╵
╷
│ Error: Attempt to get attribute from null value
│
│ on ../account-map/modules/iam-roles/main.tf line 36, in locals:
│ 36: account_org_role_arn = local.account_name == local.account_map.root_account_account_name ? null : format(
│ ├────────────────
│ │ local.account_map is null
│
│ This value is null, so it does not have any attributes.
╵

@Andriy Knysh (Cloud Posse) Any thoughts on the above?

local.account_map is null

looks like you did not provision account-map

or did not specify all the correct context variables to get the account map

the context variables are namespace, tenant, environment, stage

they are defined in top-level Atmos stack manifests, usually in _defaults.yaml per namespace, tenant, environment, stage

@Andriy Knysh (Cloud Posse) What does “provision account-map” mean though? I ran terraform plan and terraform deploy on account_map.

Now I am seeing a different error though:

Error: Invalid index
│
│ on ../account-map/modules/iam-roles/main.tf line 38, in locals:
│ 38: local.account_map.full_account_map[local.account_name]
│ ├────────────────
│ │ local.account_map.full_account_map is object with 12 attributes
│ │ local.account_name is "dev"
│
│ The given key does not identify an element in this collection value.

@Nate did you find a solution to that issue?

Yes, I did. Thank you @Andriy Knysh (Cloud Posse) I was able to get through all these steps. I documented the steps I took so far – happy to share once I get to a stopping point.

@Nate Hi. Can you help me share the worked version of the Atmos tutorial with the remote backend and how to set up IAM user to be assumed in Atmos? I’m having trouble here.

@Quyen What kind of trouble are you having? Can you elaborate on the specific roadblock?

2023-10-26

2023-10-27

2023-10-28

I am getting the following error while provisioning a simple “vpc-flow-logs-bucket” component as outlined in the example.

Error: Invalid index
│
│ on ../account-map/modules/iam-roles/main.tf line 38, in locals:
│ 38: local.account_map.full_account_map[local.account_name]
│ ├────────────────
│ │ local.account_map.full_account_map is object with 12 attributes
│ │ local.account_name is "dev"
│
│ The given key does not identify an element in this collection value.

It looks like the iam-roles module (in account_map) is looking for accounts using just the stage attribute, whereas the accounts created follow the format “tenant-stage”. The list of accounts from the account_map module has an account called “plat-dev”, but the tenant name is not being included in the lookup above. I did some more digging and it appears others had a similar issue, and they resolved it by passing an updated “descriptor_formats” variable to the stack.

descriptor_formats:
  account_name:
    format: "%v-%v"
    labels:
      - tenant
      - stage
  stack:
    format: "%v-%v-%v"
    labels:
      - tenant
      - environment
      - stage

I did include the above block in my stack def - but nothing seems to be working. Did anyone else face a similar issue and if you did, what was the solution?
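A sketch of the lookup that fails in the error above. It assumes the account name is produced by applying the descriptor format to the listed labels (Go's %v verb is emulated with Python's %s) and then used as a key into the account map. The account IDs and the map contents are placeholders.

```python
def descriptor(fmt, labels, context):
    """Apply a Go-style format with %v verbs to the listed context labels."""
    values = tuple(context[label] for label in labels)
    return fmt.replace("%v", "%s") % values


context = {"tenant": "plat", "environment": "ue1", "stage": "dev"}

# Placeholder account map keyed by "tenant-stage" names
full_account_map = {"core-root": "111111111111", "plat-dev": "222222222222"}

short_name = descriptor("%v", ["stage"], context)
full_name = descriptor("%v-%v", ["tenant", "stage"], context)

print(short_name, short_name in full_account_map)  # dev False
print(full_name, full_name in full_account_map)    # plat-dev True
```

So the format has to produce the same key shape that the account map uses, wherever the component reading the map picks up its descriptor_formats.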

I got through the above issue (at least I think I did) by updating the variables for account_map as below:

identity_account_account_name: core-identity
dns_account_account_name: core-dns
audit_account_account_name: core-audit
artifacts_account_account_name: core-artifacts

I am now seeing the following error:

Error: Cannot assume IAM Role
│
│ with provider["registry.terraform.io/hashicorp/aws"],
│ on providers.tf line 1, in provider "aws":
│ 1: provider "aws" {
│
│ IAM Role (arn:aws:iam::xxxxxxxxxxxx:role/hct-plat-gbl-dev-terraform) cannot be assumed.
│
│ There are a number of possible causes of this - the most common are:
│ * The credentials used in order to assume the role are invalid
│ * The credentials do not have appropriate permission to assume the role
│ * The role ARN is not valid
│
│ Error: operation error STS: AssumeRole, https response error StatusCode: 403, RequestID: 33ebd829-b36b-4bbf-b360-d7169dfacf49, api error AccessDenied:
│ User: arn:aws:iam::yyyyyyyyyyyy:user/terraform is not authorized to perform: sts:AssumeRole on resource:
│ arn:aws:iam::xxxxxxxxxxxx:role/hct-plat-gbl-dev-terraform

After some research on current forums, I gathered that I need to “provision” iam roles - except I cannot find any documentation on how to do the “provisioning” of the roles. I see that the account_map output includes the role names, but these roles are not created in the root account or the identity account.

Assuming not provisioning the IAM roles is the primary issue here, can anyone please help with this or provide any guidance on how to get beyond this step?

@Andriy Knysh (Cloud Posse) Any thoughts on this?

you need to use these components to provision the primary roles (which are called teams) and the delegated roles (which are called team-roles)

Thanks @Andriy Knysh (Cloud Posse) I will take a look at those modules today.

@Andriy Knysh (Cloud Posse) I was able to successfully provision aws-teams and aws-team-roles modules. I checked the accounts, and the appropriate groups/users are created where I would expect them. However, when I try to deploy vpc-flow-logs-bucket module, I am still getting the following error. Do I need to add any code to the module/component to grab the roles from account_map and get them created in each of the accounts?

Error: Cannot assume IAM Role
│
│ with provider["registry.terraform.io/hashicorp/aws"],
│ on providers.tf line 1, in provider "aws":
│ 1: provider "aws" {
│
│ IAM Role (arn:aws:iam::xxxxxxxxxxx:role/hct-plat-gbl-dev-terraform) cannot be assumed.

After digging through the forums, I am starting to wonder if I should be logged in using the <namespace>-gbl-identity-admin while executing the rest of the provisioning. If yes, what is best way to assume this role and when do I do it?

@Nate yes, you should assume the identity-admin role to work with the infra. terraform will then assume the corresponding roles to access the backend and the other accounts

you can use https://www.leapp.cloud/ (we use it) to assume the initial identity-admin role

Manage your Cloud credentials locally and improve your workflow with the only open-source desktop app you’ll ever need.

I am using Leapp tool – based on the initial guidance from getting started docs. I will look into how to assume a role within the tool. I am now logged in using the “terraform” user I manually created in the root account as an IAM user. I probably should be going down the route of aws-sso to create a new account and assume this identity role.

How to configure an AWS IAM Role Chained session. An AWS IAM Role Chained session represents an AWS role chaining access. Role chaining is the process of assuming a role starting from another IAM role or user.