#aws (2024-01)

Discussion related to Amazon Web Services (AWS)

Archive: https://archive.sweetops.com/aws/

2024-01-05

Hi, I’ve been using the ECS module, but I’m now getting a weird error in a clean account:

Error: updating ECS Cluster Capacity Providers (XXXXXX): InvalidParameterException: Unable to assume the service linked role. Please verify that the ECS service linked role exists.

The role is not a requirement of the module, so I’m not sure why this is failing. Any hints?

I found the issue. In fact, it was an AWS issue. In a brand-new account, the role AWSServiceRoleForECS is created when you create a cluster for the first time. It had not been created, so creating the capacity provider failed. I found that information and just recreated the cluster.

we usually have a separate component to provision all service-linked roles that an account needs

and do it from just one place so it’s clear what roles are provisioned and in what account

and also, if a role already exists, you can’t provision it with TF (it will throw an error). So the whole config is complicated: without a central place to manage all service-linked roles, it’s not possible to know whether a role was already provisioned by ClickOps, by AWS (e.g. AWS will create a bunch of service-linked roles automatically when you first create EKS and ECS clusters, but only from the AWS console), or by Terraform
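Here is a minimal sketch of such a central component for the ECS role. It assumes an AWS provider that includes `aws_iam_service_linked_role` and Terraform 1.5+ for the `import` block. The account ID `123456789012` is a placeholder. The `import` block only applies when the role already exists (for example, AWS created it when the first cluster was made in the console). Without it, Terraform tries to create the role and fails with the error mentioned above.

```hcl
# Central "service-linked roles" component (sketch).
# Explicitly manages the ECS service-linked role (AWSServiceRoleForECS),
# so capacity providers don't depend on AWS creating it implicitly.
resource "aws_iam_service_linked_role" "ecs" {
  aws_service_name = "ecs.amazonaws.com"
}

# If the role already exists in this account (ClickOps or AWS-created),
# adopt it into state instead of creating it (Terraform 1.5+).
# 123456789012 is a placeholder account ID. Remove this block when the
# role does not exist yet, otherwise the plan fails.
import {
  to = aws_iam_service_linked_role.ecs
  id = "arn:aws:iam::123456789012:role/aws-service-role/ecs.amazonaws.com/AWSServiceRoleForECS"
}
```

Keeping every service-linked role in one component like this makes it obvious which roles exist in which account.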

thanks so much

2024-01-10

Hey guys, any input on this?

Hello Artisans,

I want to integrate ElastiCache Serverless Redis. I’ve updated the Redis configurations and it works fine.

I’m facing an issue with the Horizon queue:

Predis\Response\ServerException CROSSSLOT Keys in request don't hash to the same slot

/var/www/html/vendor/predis/predis/src/Client.php 370

Sometimes, I’m experiencing these issues too:

Predis\Connection\ConnectionException Error while reading line from the server.

[tcp://****.serverless.use2.cache.amazonaws.com:6379]

/var/www/html/vendor/predis/predis/src/Connection/AbstractConnection.php 155

Illuminate\View\ViewException Error while reading line from the server.

[tcp://***.serverless.use2.cache.amazonaws.com:6379] (View: /var/www/html/resources/views/frontend/errors/500.blade.php)

/var/www/html/vendor/predis/predis/src/Connection/AbstractConnection.php 155

The config

config/database.php

'redis' => [
    'client' => env('REDIS_CLIENT', 'predis'),
    'options' => [
        'cluster' => env('REDIS_CLUSTER', 'redis'),
        'prefix' => env('REDIS_PREFIX', Str::slug(env('APP_NAME', 'laravel'), '_').'_database_'),
    ],
    'clusters' => [
        'default' => [
            [
                'scheme' => env('REDIS_SCHEME', 'tcp'),
                'host' => env('REDIS_HOST', '127.0.0.1'),
                'password' => env('REDIS_PASSWORD', null),
                'port' => env('REDIS_PORT', 6379),
                'database' => 0,
            ],
        ],
        'cache' => [
            [
                'scheme' => env('REDIS_SCHEME', 'tcp'),
                'host' => env('REDIS_HOST', '127.0.0.1'),
                'password' => env('REDIS_PASSWORD', null),
                'port' => env('REDIS_PORT', 6379),
                'database' => 0,
            ],
        ],
    ],
],

.env

REDIS_SCHEME=tls
REDIS_HOST=*****.serverless.use2.cache.amazonaws.com
REDIS_PORT=6379

Please help me sort out the issues. Thanks in advance.

2024-01-11

hey all, was hoping to get a quick answer for this WAF config I need to override:

module "waf" {
  source         = "cloudposse/waf/aws"
  name           = "${local.app_env_name}-wafv2"
  version        = "1.0.0"
  scope          = "REGIONAL"
  default_action = "allow"

  visibility_config = {
    cloudwatch_metrics_enabled = false
    metric_name                = "rules-default-metric"
    sampled_requests_enabled   = true
  }

  managed_rule_group_statement_rules = [
    {
      name     = "rule-20"
      priority = 20
      statement = {
        name        = "AWSManagedRulesCommonRuleSet"
        vendor_name = "AWS"
      }
      visibility_config = {
        cloudwatch_metrics_enabled = true
        sampled_requests_enabled   = true
        metric_name                = "rule-20-metric"
      }
    },
    {
      name     = "rule-30"
      priority = 30
      statement = {
        name        = "AWSManagedRulesAmazonIpReputationList"
        vendor_name = "AWS"
      }
      visibility_config = {
        cloudwatch_metrics_enabled = true
        sampled_requests_enabled   = true
        metric_name                = "rule-30-metric"
      }
    },
    {
      name     = "rule-40"
      priority = 40
      statement = {
        name        = "AWSManagedRulesBotControlRuleSet"
        vendor_name = "AWS"
      }
      visibility_config = {
        cloudwatch_metrics_enabled = true
        sampled_requests_enabled   = true
        metric_name                = "rule-40-metric"
      }
    }
    //bot prevention managed rule set
  ]

  rate_based_statement_rules = [
    {
      name     = "rule-50"
      action   = "block"
      priority = 50
      statement = {
        limit              = 1000
        aggregate_key_type = "IP"
      }
      visibility_config = {
        cloudwatch_metrics_enabled = true
        sampled_requests_enabled   = true
        metric_name                = "rule-50-metric"
      }
    }
  ]
}

I need to override AWS#AWSManagedRulesCommonRuleSet#SizeRestrictions_BODY to allow for bigger requests

each statement in the rules has rule_action_override in the variables

statement:
  name:
    The name of the managed rule group.
  vendor_name:
    The name of the managed rule group vendor.
  version:
    The version of the managed rule group.
    You can set `Version_1.0` or `Version_1.1` etc. If you want to use the default version, do not set anything.
  rule_action_override:
    Action settings to use in the place of the rule actions that are configured inside the rule group.
    You specify one override for each rule whose action you want to change.

you do it like this:

- name: "AWS-AWSManagedRulesCommonRuleSet"
  priority: 3
  statement:
    name: AWSManagedRulesCommonRuleSet
    vendor_name: AWS
    rule_action_override:
      SizeRestrictions_BODY:
        action: count
      EC2MetaDataSSRF_BODY:
        action: count

it’s in YAML for atmos, but you can convert it to HCL and use it directly in Terraform vars
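Here is a rough HCL equivalent of the YAML above, as a `cloudposse/waf/aws` module input. It is a sketch, not tested against a specific module release. The `override_action` and `visibility_config` values are borrowed from the other HCL example in this thread; they were not part of the YAML. In AWS WAF, a `count` action records (and labels) a matching request instead of blocking it, and evaluation continues with the next rule. Overriding `SizeRestrictions_BODY` to `count` is therefore what lets larger request bodies through.

```hcl
module "waf" {
  source  = "cloudposse/waf/aws"
  version = "1.0.0"
  # ... name, scope, default_action, visibility_config as in the block above ...

  managed_rule_group_statement_rules = [
    {
      name     = "AWS-AWSManagedRulesCommonRuleSet"
      priority = 3
      # "none" keeps the rule group's own actions for every rule
      # except the ones overridden in rule_action_override
      override_action = "none"
      statement = {
        name        = "AWSManagedRulesCommonRuleSet"
        vendor_name = "AWS"
        rule_action_override = {
          # Count (and label) oversized bodies instead of blocking them
          SizeRestrictions_BODY = {
            action = "count"
          }
          EC2MetaDataSSRF_BODY = {
            action = "count"
          }
        }
      }
      visibility_config = {
        cloudwatch_metrics_enabled = true
        sampled_requests_enabled   = true
        metric_name                = "AWS-AWSManagedRulesCommonRuleSet"
      }
    }
  ]
}
```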

I’m confused about what action: count does

action: count is just an example of what can be overridden

you can override anything you need

Baseline rule groups available from AWS Managed Rules.

see the doc, you can override all the configs

thanks

for each item like SizeRestrictions_BODY, you have to find a separate doc from AWS with all the parameters that WAF supports and can be overridden, like action: count (WAF is not simple)

The list of available AWS Managed Rules rule groups.

you can run this command to see the Action for each parameter and what items each action has https://docs.aws.amazon.com/waf/latest/APIReference/API_DescribeManagedRuleGroup.html

Provides high-level information for a managed rule group, including descriptions of the rules.

Hey @Pavel I also had to do this some time ago using the CP module. In our case, we wanted to allow it only for a specific endpoint. First, you have to override the rule actions to count:

{
  name            = "AWSManagedRulesCommonRuleSet"
  override_action = "none"
  priority        = 400
  statement = {
    name        = "AWSManagedRulesCommonRuleSet"
    vendor_name = "AWS"
    rule_action_override = {
      SizeRestrictions_BODY = {
        action = "count"
      }
      CrossSiteScripting_BODY = {
        action = "count"
      }
    }
  }
  visibility_config = {
    cloudwatch_metrics_enabled = true
    sampled_requests_enabled   = true
    metric_name                = "AWSManagedRulesCommonRuleSet"
  }
},

Then we had to create a new rule group resource:

resource "aws_wafv2_rule_group" "exclude_calculation_api_path" {
  name     = "${module.backend_waf_label.namespace}-managed-rules-exclusions"
  scope    = "REGIONAL"
  capacity = 100

  rule {
    name     = "exclude-calculation-api-path"
    priority = 10

    action {
      block {}
    }

    statement {
      # IF MATCHES ANY DEFINED LABEL AND IS NOT URI PATH == BLOCK
      and_statement {
        statement {
          # IF MATCHES ANY DEFINED LABEL
          or_statement {
            statement {
              label_match_statement {
                scope = "LABEL"
                key   = "awswaf:managed:aws:core-rule-set:CrossSiteScripting_Body"
              }
            }
            statement {
              label_match_statement {
                scope = "LABEL"
                key   = "awswaf:managed:aws:core-rule-set:SizeRestrictions_Body"
              }
            }
          }
        }
        statement {
          not_statement {
            statement {
              byte_match_statement {
                field_to_match {
                  uri_path {}
                }
                positional_constraint = "STARTS_WITH"
                search_string         = "/api/floorplanproduct"
                text_transformation {
                  priority = 0
                  type     = "NONE"
                }
              }
            }
          }
        }
      }
    }

    visibility_config {
      cloudwatch_metrics_enabled = false
      metric_name                = "exclude-calculation-api-path"
      sampled_requests_enabled   = false
    }
  }

  visibility_config {
    cloudwatch_metrics_enabled = true
    metric_name                = "${module.backend_waf_label.id}-managed-rules-exclusions"
    sampled_requests_enabled   = true
  }
}

And last, reference the group in the module:

rule_group_reference_statement_rules = [
  {
    name            = "${module.backend_waf_label.id}-managed-rules-exclusions"
    priority        = 990
    override_action = "none"
    statement = {
      arn = aws_wafv2_rule_group.exclude_calculation_api_path.arn
    }
    visibility_config = {
      cloudwatch_metrics_enabled = true
      sampled_requests_enabled   = true
      metric_name                = "${module.backend_waf_label.id}-managed-rules-exclusions"
    }
  }
]

Sorry, it’s a lot of code, the WAF has very verbose syntax, but hope it helps!

wow, that’s a lot. thanks for this

Hi. Has anyone worked with Keyfactor + cert-manager ACME?

2024-01-12

Hello AWS Pros! I was wondering if anyone had any good insight into handling configuration files with secrets in ECS Fargate. Some additional background: we’ve transitioned from EC2-based systems to ECS Fargate, but we’re still using Ansible and Ansible Vault to maintain those configurations.

Other services of ours take advantage of Secrets Manager for secret retrieval at runtime. I also have a working example of using an init container to pull a base64 encoded secret and dumping it to a shared volume which is then mounted by the actual task/service.

ECS task definitions can pull secrets from Secrets Manager and SSM Parameter Store at runtime

Instead of hardcoding sensitive information in plain text in your application, you can use Secrets Manager or AWS Systems Manager Parameter Store to store the sensitive data. Then, you can create an environment variable in the container definition and enter the ARN of the Secrets Manager or AWS Systems Manager secret as the value. This allows your container to retrieve sensitive data at runtime.
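Here is a minimal Terraform sketch of that `secrets` binding. The secret name, image variable, execution-role variable and the SSM parameter ARN (with placeholder account `123456789012`) are all hypothetical. ECS resolves `valueFrom` when the task starts and injects the value as an environment variable. The task *execution* role, not the task role, needs read access: `secretsmanager:GetSecretValue` for Secrets Manager, `ssm:GetParameters` for Parameter Store, and `kms:Decrypt` if a customer-managed key is used.

```hcl
variable "execution_role_arn" { type = string }
variable "image" { type = string }

# Hypothetical secret; in practice it may already exist.
resource "aws_secretsmanager_secret" "db_password" {
  name = "my-app/db-password"
}

resource "aws_ecs_task_definition" "app" {
  family                   = "my-app"
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc"
  cpu                      = "256"
  memory                   = "512"
  # ECS uses the execution role to fetch the secrets at task start
  execution_role_arn = var.execution_role_arn

  container_definitions = jsonencode([
    {
      name      = "app"
      image     = var.image
      essential = true
      secrets = [
        {
          name      = "DB_PASSWORD" # env var name inside the container
          valueFrom = aws_secretsmanager_secret.db_password.arn
        },
        {
          name      = "API_KEY"
          valueFrom = "arn:aws:ssm:us-east-1:123456789012:parameter/my-app/api-key"
        }
      ]
    }
  ])
}
```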

Thank you, I should have been more explicit: we are currently using `valueFrom` in the task definition to fetch individual secrets

I guess it’s more about what happens if you have a config file with multiple secrets: this might work for 10, but not 1000

I know that there’s a KV method of storing multiple secrets, but what if you want a config file built from them?

you can use a file stored in S3 too
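One built-in way to do this is the container definition's `environmentFiles`. ECS reads a `.env` file of `KEY=VALUE` lines from S3 when the task starts. This is a sketch; the bucket and object names are hypothetical. The values become ordinary environment variables, not secrets. The execution role needs `s3:GetObject` on the file and `s3:GetBucketLocation` on the bucket, which keeps bucket access scoped to that one role.

```hcl
# Execution-role permissions ECS needs to read the env file (sketch).
data "aws_iam_policy_document" "env_file_access" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::my-config-bucket/my-app/app.env"]
  }
  statement {
    actions   = ["s3:GetBucketLocation"]
    resources = ["arn:aws:s3:::my-config-bucket"]
  }
}

# Fragment of a container definition (goes inside jsonencode([...]))
locals {
  app_container = {
    name  = "app"
    image = "my-app:latest" # hypothetical image
    environmentFiles = [
      {
        value = "arn:aws:s3:::my-config-bucket/my-app/app.env"
        type  = "s3"
      }
    ]
  }
}
```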

Yeah, that’s an interesting approach that I’ve heard of as well, but I’m kinda worried about access to that bucket and what mechanism is used to update/render that config file

yeah, I think env var proliferation might be a consideration, but I have no frame of reference for your team’s needs. managing that many env vars just sounds rough.

secrets manager items can be quite large. you can save the config to a single item and read that.

Yeah, and it seems that storing secrets in environment variables is kinda frowned upon, in case a bad actor execs into your container

I’ve saved a config as a base64-encoded string to preserve the formatting (rather than the raw config on its own), but I’m still faced with how that config gets rendered initially and updated

storing secrets in the env vars section of your task def is a no-no, but using secrets binding to SSM/Secrets Manager isn’t an issue… if you have bad actors exec-ing into the container, you have bigger issues to worry about than secrets.

just to clarify, it’s not in the env vars section, it’s in the “secrets” section which uses the arn to pull the secret at runtime and inject it as an env var, or at least that’s what I perceive is happening

but yes, I agree about being in a worse spot if a bad actor execs into the container

I don’t see any security concerns with that, but I guess it depends on who all has exec access… in an ideal world it’d be nobody. if exec is granted to people who don’t also have access to the secrets, yeah, that’s a concern.

yeah, least privs, especially in production

with 1000 secrets you also need to consider API usage, how often does the container need to restart, etc

yeah totally, we do have scaling set up, but I wouldn’t say we have that many tasks running at one time

and if that cost balloons, then I think it’s probably a good time to look at something like Vault, but it’s nice not having to manage anything extra right now

if it were me, I’d use fewer secrets with more data in them rather than 1:1 secrets

yeah, that seems like the better way, less api calls if they’re loaded into a KV secret
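Here is a sketch of the "fewer secrets, more data" approach: one Secrets Manager secret holding a JSON object, with the task definition pulling individual keys. It relies on the ECS `valueFrom` suffix `:<json-key>:<version-stage>:<version-id>`; empty stage and id mean the current version. As far as I know, Fargate requires platform version 1.4.0 or later for this. The secret name and keys are hypothetical. Writing the value from Terraform, as done here for brevity, stores it in state. You may prefer to set it outside Terraform.

```hcl
resource "aws_secretsmanager_secret" "app_config" {
  name = "my-app/config" # hypothetical name
}

resource "aws_secretsmanager_secret_version" "app_config" {
  secret_id = aws_secretsmanager_secret.app_config.id
  # Placeholder values; note this ends up in Terraform state
  secret_string = jsonencode({
    DB_PASSWORD = "change-me"
    API_KEY     = "change-me"
  })
}

locals {
  # One `secrets` entry per JSON key of the single secret:
  #   <secret-arn>:<json-key>:<version-stage>:<version-id>
  app_secrets = [
    for key in ["DB_PASSWORD", "API_KEY"] : {
      name      = key
      valueFrom = "${aws_secretsmanager_secret.app_config.arn}:${key}::"
    }
  ]
}
```

`local.app_secrets` can then be used as the `secrets` list of a container definition.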

yeah, less things to keep track of all around

guessing rendering happens at runtime, but I’d hate for some unescaped character to mess up the config and cause the task to continuously reboot

that can def happen, but a blue/green deploy will prevent impact

that’s true, another thing to think about

or at least an automated job that pulls the secrets when it’s been updated and attempts to render it

yeah, monitoring for endless task spawns is good

or an integration test that reads the secret and validate a config can be parsed from it

you could even lambda that and run it when a new version is authored

yeah, that would be a cool little tool

I don’t think you can prevent the secret from being saved though

but you could have the lambda delete that version if you wanted to give it access
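Here is a sketch of wiring up that Lambda idea. An EventBridge rule matches Secrets Manager API calls that write a new value and invokes a validator function. The validator Lambda itself is hypothetical and passed in by ARN. These "AWS API Call via CloudTrail" events only reach EventBridge when CloudTrail is logging management events in the account. To limit the rule to one secret, you could also filter on the request parameters.

```hcl
variable "validator_lambda_arn" {
  type        = string
  description = "Hypothetical Lambda that fetches the new version and tries to parse/render the config"
}

# Matches writes of a new secret value, as recorded by CloudTrail
resource "aws_cloudwatch_event_rule" "secret_updated" {
  name = "my-app-config-secret-updated"
  event_pattern = jsonencode({
    source        = ["aws.secretsmanager"]
    "detail-type" = ["AWS API Call via CloudTrail"]
    detail = {
      eventSource = ["secretsmanager.amazonaws.com"]
      eventName   = ["PutSecretValue", "UpdateSecret"]
    }
  })
}

resource "aws_cloudwatch_event_target" "validate_config" {
  rule = aws_cloudwatch_event_rule.secret_updated.name
  arn  = var.validator_lambda_arn
}

# Allow EventBridge to invoke the validator
resource "aws_lambda_permission" "allow_eventbridge" {
  statement_id  = "AllowExecutionFromEventBridge"
  action        = "lambda:InvokeFunction"
  function_name = var.validator_lambda_arn
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.secret_updated.arn
}
```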

yeah definitely some interesting possibilities, the same could be said for storing in s3

storing the key in an environment variable to fetch the config

but I like the built in versioning aspect of secrets manager and better integration

I was initially intrigued by S3 because I thought it would be easier, but I ultimately went with a mix of SSM and Secrets Manager due to it being much easier to be confident that my task pulled the version I was expecting…sure you can do that with S3 file versioning, but I found the experience a lot better

yeah, kind of the same experience I had with storing lambdas in s3 vs ecr

The other idea I toyed with was using an EFS mount for secrets and a dedicated management task to update it periodically. Network failures aside, any other problems with this?

I would figure this would slow down task deployments

interesting, figured mounting would be pretty quick, but I haven’t tested it

I think creative solutions have their own problems; I try to stick with the options detailed in the documentation unless there is a very good reason not to.

I feel that

Because when it breaks down, AWS Support will just say you’re SOL

yeah, also makes onboarding others onto the project more difficult if you’re swimming upstream

true true

Have you looked at chamber? https://github.com/segmentio/chamber

CLI for managing secrets

I’ve heard of it, but haven’t tried it out yet, thank you for the reminder

In your entrypoint you’ll want something like

chamber exec <prefix> -- sh -c 'node main.js'

Ahhh ok, so some kind of init script as the entry point

This will export the Parameter Store entries under the service prefix as environment variables in the shell that executes your process in the entrypoint
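Here is a Terraform sketch of the Parameter Store side for `chamber exec my-service -- ...`. `my-service` is a hypothetical prefix. Keys are stored lowercase under `/<service>/`, which matches how chamber writes them. chamber then exposes each key to the process as an upper-cased environment variable (here `DB_PASSWORD`). The container process calls SSM itself, so the task role needs read access. The exact SSM read calls chamber makes may vary by version, so treat the action list as an assumption to verify.

```hcl
variable "db_password" {
  type      = string
  sensitive = true
}

# Read by `chamber exec my-service -- ...` as DB_PASSWORD
resource "aws_ssm_parameter" "db_password" {
  name  = "/my-service/db_password"
  type  = "SecureString" # encrypted with the default aws/ssm KMS key
  value = var.db_password
}

# Policy to attach to the ECS task role
data "aws_iam_policy_document" "chamber_read" {
  statement {
    actions = [
      "ssm:GetParametersByPath",
      "ssm:GetParameters",
      "ssm:GetParameter",
    ]
    resources = [
      "arn:aws:ssm:*:*:parameter/my-service",
      "arn:aws:ssm:*:*:parameter/my-service/*",
    ]
  }
  statement {
    actions   = ["kms:Decrypt"]
    resources = ["*"] # narrow to the KMS key used for the SecureStrings
  }
}
```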

Oh wow, it actually uses parameter store

And I’m guessing local dev isn’t bad, same entry point, just local creds to fetch secrets

For local you can configure a different backend

Would you use encrypted store like ansible vault in git for local? Or does chamber have its own backend/encryption method?

I don’t know. I only use it with secrets manager and parameter store and in those cases you can use AWS kms

If you have an entrypoint script, you could customize how your container launches based on whether it’s running in AWS or not

Just found this, a little old, but some decent info https://omerxx.com/ops/secrets-with-ssm-and-chamber/

We use docker-compose locally and do stuff differently accordingly

Yeah, I’ve done something like this where if it detects an AWS access key in the environment variables, then it’s local, otherwise it’s running in fargate

I’d suggest using the AWS_EXECUTION_ENV env var for that, or ECS_CONTAINER_METADATA_URI_V4

I guess that’s more complete when a local dev container isn’t reaching out to AWS services

2024-01-13

2024-01-22

Hello, in ECR Repository is there any way with Lifecycle policy to automatically delete the images which were not pulled for 60 days? If no, what is the best practice for this, what you use? Thanks!

imageCountMoreThan
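As far as I know, at the time of this thread ECR lifecycle rules could only match on image count (`imageCountMoreThan`) or push age (`sinceImagePushed`). They could not match on when an image was last pulled, so "not pulled for 60 days" could not be expressed directly. Truly pull-based cleanup would need a custom scheduled job, for example one that checks `lastRecordedPullTime` from `DescribeImages`. The policy below approximates it with push age and a retention count. The repository name and the count of 30 are illustrative assumptions.

```hcl
resource "aws_ecr_repository" "app" {
  name = "my-app" # hypothetical repository
}

resource "aws_ecr_lifecycle_policy" "app" {
  repository = aws_ecr_repository.app.name

  policy = jsonencode({
    rules = [
      {
        rulePriority = 1
        description  = "Expire untagged images pushed more than 60 days ago"
        selection = {
          tagStatus   = "untagged"
          countType   = "sinceImagePushed"
          countUnit   = "days"
          countNumber = 60
        }
        action = { type = "expire" }
      },
      {
        # A tagStatus = "any" rule must have the highest rulePriority value
        rulePriority = 2
        description  = "Keep only the 30 most recent images"
        selection = {
          tagStatus   = "any"
          countType   = "imageCountMoreThan"
          countNumber = 30
        }
        action = { type = "expire" }
      }
    ]
  })
}
```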

IMPORTANT ADVICE

We’ve got critical information about changes to Amazon Elastic #Kubernetes Service that directly impact your costs. Read on to make sure you’re in the know Starting April 1, 2024, Amazon EKS will implement a pricing shift for clusters running on a #Kubernetes version in extended support. Brace yourself for a significant increase to $0.60 per cluster per hour. Yes, you read it right - *an increase from the current $0.10 per hour.*
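For scale, here is a back-of-the-envelope calculation using the hourly rates quoted above and an average month of 730 hours (8,760 h / 12). It covers only the per-cluster control-plane fee, not worker nodes or other resources.

$$
\begin{aligned}
\text{standard support:}\quad & 0.10\ \text{USD/h} \times 730\ \text{h} = 73\ \text{USD per month} \\
\text{extended support:}\quad & 0.60\ \text{USD/h} \times 730\ \text{h} = 438\ \text{USD per month} \\
\text{increase:}\quad & 438 - 73 = 365\ \text{USD per cluster per month}
\end{aligned}
$$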

Take Action Now - optimize your infrastructure: Head to our GitHub page ASAP to explore strategies for optimizing your EKS versions and mitigating these increased costs.

Update EKS versions to sidestep extended support charges https://lnkd.in/dBRFsr_7

Spread the word: Share this update with your network! Please let your peers know about the impending pricing shift, and together, let’s go through these changes as a community.

Hey all. I’m working to design a new AWS architecture for my current company to transition to. We’re in a bit of a growth stage so I want to design something that will be flexible for future use but also not overly complicated for the time being due to deadlines and a smaller team.

I’m hoping to get some feedback on the AWS Organizations design. The current design I have is laid out to segregate production and non-production workloads. I specifically want to create a sandbox space for developers to utilise so they can create resources during their research and development stages.

I’m curious if people segregate their organisations into environments like production, staging, etc or by workloads and what might be some trade offs between?

For domain-driven design type environments, I prefer the approach similar to yours to avoid management sprawl. Top level OUs for core services (shared services), security, sandbox, prod, non-prod. I also like a suspended OU and migrated OU for future inbound/invited accounts from other sources. SCPs are generally well-defined in their scope to work with these OUs.
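Here is a minimal Terraform sketch of that top-level OU layout, plus an example SCP that locks down the suspended OU. It is meant to run from the management account. The OU names are illustrative, and the deny-all policy is just one possible guardrail. SCPs do not apply to the management account itself.

```hcl
data "aws_organizations_organization" "this" {}

locals {
  # Top-level OUs as described above (names are illustrative)
  top_level_ous = [
    "core-services", "security", "sandbox",
    "prod", "non-prod", "suspended", "migrated",
  ]
}

resource "aws_organizations_organizational_unit" "top" {
  for_each  = toset(local.top_level_ous)
  name      = each.key
  parent_id = data.aws_organizations_organization.this.roots[0].id
}

# Example guardrail: nothing is allowed in suspended accounts
resource "aws_organizations_policy" "deny_all" {
  name = "deny-all"
  type = "SERVICE_CONTROL_POLICY"
  content = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Deny"
      Action   = "*"
      Resource = "*"
    }]
  })
}

resource "aws_organizations_policy_attachment" "suspended" {
  policy_id = aws_organizations_policy.deny_all.id
  target_id = aws_organizations_organizational_unit.top["suspended"].id
}
```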

Thanks for your reply Chris. When you talk about core services, are you referring to creating accounts that manage resources like VPCs and Route53 across the entire organisation?

I’ve modified my diagram a bit to include some other OUs and accounts but I’m starting to fall down the rabbit hole and can feel the complexity rolling in. I’ll have all of this managed through Terraform and provisioned through pipelines so I’m hoping the management won’t be overly complicated but I’m hoping to keep this as simple as I can.

Any thoughts on the new diagram would be greatly appreciated.

P.s. I’ve read a few of your articles on your website. Lots of great information there.

Core services

• network account hosting the transit gateway and ingress/egress controls

• shared services account hosting managed services available to everyone (e.g. CI/CD, code scan tools, dashboard tools)

• anything else that should be ubiquitously available to your tenants (think managed services)

I prefer to use 1 account : 1 domain/app with a vending machine that builds the landing zones for each. I usually build a lightweight Python SDK that pipelines use to dynamically build out the Terraform backend code to do this, then save the artifacts for later troubleshooting/SBOM-type needs.

The vending machine has 2 contracts:

• a platform contract of infra needed to plug into the landing zone (tgw, attachments, ipam, vpc, state bucket)

• a service contract of infra needed to supply (e.g. Cognito, database(s), buckets for data loads/exfil)

Metadata created from the vending process is used downstream and saved in a location of your choice (Parameter Store, Vault, DynamoDB, etc.).
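Here is a rough sketch of the account-creation step of such a vending machine in plain Terraform, using `aws_organizations_account`. The `app_accounts` input, its shape and the output are hypothetical. The platform and service contracts would be separate steps that consume the output. As noted below, this kind of automatic creation works in commercial regions but not in GovCloud.

```hcl
# Hypothetical input: one entry per domain/app account to vend
variable "app_accounts" {
  type = map(object({
    email     = string
    parent_ou = string # ID of the target OU, e.g. prod or non-prod
  }))
}

resource "aws_organizations_account" "app" {
  for_each  = var.app_accounts
  name      = each.key
  email     = each.value.email
  parent_id = each.value.parent_ou
  # Role created in the new account for access from the management account
  role_name = "OrganizationAccountAccessRole"
  # false (the default): destroy removes the account from the org instead of closing it
  close_on_deletion = false

  lifecycle {
    # role_name is not returned by the Organizations API, so ignore drift on it
    ignore_changes = [role_name]
  }
}

# Metadata for downstream steps (e.g. written to Parameter Store by a pipeline)
output "vended_accounts" {
  value = { for k, a in aws_organizations_account.app : k => a.id }
}
```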

I’m also typically in GovCloud environments, which have no method of automatically vending AWS accounts, so I’m having to request & provision (bring into Control Tower) the accounts through a manual approval process. much easier in commercial cloud.

I also just realized there’s a typo on my first diagram, the bottom backend account should be DEV not TEST

I like your ideas around the contracts and vending machine. I hadn’t specifically thought about the management of new project accounts so that’s valuable.

It almost makes me want to remove the project-based account structure all together to simplify management of everything, but I’ll see if I can come up with something to manage it. I have to find a balance as I’ll be the only one working on this while educating the team as I go, so if I can abstract as much as I can I will

This is some excellent information though Chris, I appreciate you passing it on.

2024-01-23

2024-01-24

Hi, I’m trying to set the min and max capacity for an ECS service, but I can’t find how to do it in the terraform-aws-ecs-alb-service-task module. Can anyone point me to where to look? Thanks

Terraform module which implements an ECS service which exposes a web service via ALB.

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/cluster-capacity-providers.html

https://github.com/cloudposse/terraform-aws-ecs-alb-service-task/blob/main/variables.tf#L398

see the above ^

Amazon ECS capacity providers manage the scaling of infrastructure for tasks in your clusters. Each cluster can have one or more capacity providers and an optional capacity provider strategy.

variable "capacity_provider_strategies" {

Hi @Andriy Knysh (Cloud Posse) Thanks for your reply. I’m not sure we are talking about the same thing. I’m looking for a way to control the min and max count of tasks in the service. This is the CloudFormation reference:

Type: AWS::ApplicationAutoScaling::ScalableTarget
Properties:
  RoleARN: !GetAtt AutoScalingRole.Arn
  MaxCapacity: 16
  MinCapacity: 2

i’m not sure how it’s related to the CloudFormation reference, but maybe this will help:

https://github.com/cloudposse/terraform-aws-ecs-alb-service-task/blob/main/main.tf#L369

https://github.com/cloudposse/terraform-aws-ecs-web-app/blob/main/main.tf#L174

https://github.com/cloudposse/terraform-aws-ecs-web-app/blob/main/examples/complete/main.tf
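The Terraform counterpart of that CloudFormation `ScalableTarget` is Application Auto Scaling rather than ECS capacity providers. Here is a minimal sketch with raw resources. The cluster and service names are inputs you would wire from your own modules, and the CPU target of 60% is an arbitrary example. `role_arn` is omitted so that Application Auto Scaling uses its service-linked role. If the service module also manages `desired_count`, check whether it offers a way to ignore changes to it, so Terraform does not fight the autoscaler.

```hcl
variable "cluster_name" { type = string }
variable "service_name" { type = string }

# Equivalent of AWS::ApplicationAutoScaling::ScalableTarget
resource "aws_appautoscaling_target" "ecs_service" {
  service_namespace  = "ecs"
  resource_id        = "service/${var.cluster_name}/${var.service_name}"
  scalable_dimension = "ecs:service:DesiredCount"
  min_capacity       = 2
  max_capacity       = 16
}

# Scale between min and max to keep average CPU near the target
resource "aws_appautoscaling_policy" "cpu" {
  name               = "${var.service_name}-cpu"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.ecs_service.service_namespace
  resource_id        = aws_appautoscaling_target.ecs_service.resource_id
  scalable_dimension = aws_appautoscaling_target.ecs_service.scalable_dimension

  target_tracking_scaling_policy_configuration {
    target_value = 60
    predefined_metric_specification {
      predefined_metric_type = "ECSServiceAverageCPUUtilization"
    }
  }
}
```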

2024-01-25

2024-01-29

Hello, I am working to transition to a multi-account AWS architecture and have been considering using atmos instead of a DIY solution. It seems I need to create tfstate-backend > account > account-map in that order, and that a workflow might be best since the docs say account must be provisioned prior to account-map. Is my understanding correct? What is needed to access the cold start document that I see referenced in some docs?

What is needed to access the cold start document that I see referenced in some docs ?

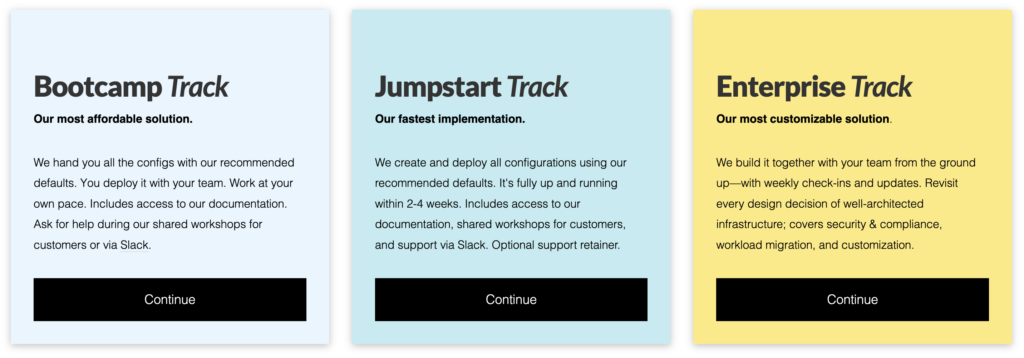

This is what we sell as our Bootcamp.

2024-01-30

Hi, is there something to consider when configuring a socket.io multi-instance server behind an ALB from EKS using the ALB controller? So far I have set cookie stickiness, duration and host header preservation, but it doesn’t seem to be enough. Does anybody have experience with this kind of setup? Thanks

If this is for web sockets, I think you need to keep the connection alive. Are you losing it after 60 seconds or what’s happening?

Actually I get this error when connecting to it: {"code":1,"message":"Session ID unknown"}, so I think the connection isn’t even being established in the first place. Thanks for your reply!

I’m stuck on why a node.js app that was moved to an IIS7 server is now failing. I know IIS7 doesn’t support web sockets but my understanding was that socket.io would fall back to long polling if web

Is the app sending the sticky session cookie?

app relies on redis for session management

In theory if you scaled down to 1 it should work and because state is stored in redis (assuming it’s a separate pod) you should be able to kill the app and it should continue to work because the state is in redis. Also, i assume the TTL is long enough for you to do all of this.

If you are sure the state management is working as expected then feel free to ignore the above.

actually that’s a very good point that I missed: it should work with only 1 backend instance, which would be a good test/starting point before trying to make it work with multiple instances

Hello all. Is anybody having issues with Route 53 DNS entries?

We use it in production. No issues that i know of right now. What are you noticing?

A few DNS servers could not resolve one of our entries, but it looks like it was an error on our side.

Hi, is there a way to provide a custom name to resources without following the cloudposse construction for tenant, namespace, environment, etc? I need to customize the alb, redis and ecs modules to adapt to a specific naming convention, and I can’t find a way to force it other than with the Name tag, which doesn’t always work

Can you provide an example? It is customisable via label_order but you have to have at least one label.

from what I read, I understand that labels are tags and not the name (I should look at the code for the label module). What I need is, for instance, to pass a string as the ECS cluster name, which can’t be done like that. I can pass a name that will be concatenated with the tenant, the namespace, the stage, etc. I tried to set up the Name tag (it worked for the ALB module) but it didn’t work for the cluster name

For the ECS cluster, the name of the cluster is like this:

name = module.this.id

So you can do

label_order = ["name"]

I’m on mobile but I could provide a better example later if you need it

ok, I looked at the code and first of all, you are right, labels are used to form the name. I will have to see how to modify the labels, order, etc. for each resource, but I think I can achieve what I need.

Yes, module.this.label_order = ["name"] will do. Sorry, I didn’t understand that from the documentation, but it was clear once I saw the code. Thanks for the reply
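Here is a sketch of that approach with `cloudposse/label/null`. With `label_order = ["name"]`, the generated `id` (which the ECS cluster module uses as the cluster name, per `name = module.this.id` above) is just the name. Passing the label's `context` forwards the same settings to another Cloud Posse module. The cluster name is hypothetical. `label_value_case = "none"` keeps the original casing instead of the default lower-casing. The ECS cluster module's other inputs are omitted, and you should pin a version.

```hcl
module "label" {
  source  = "cloudposse/label/null"
  version = "0.25.0"

  namespace = "eg"
  stage     = "prod"
  name      = "MyCustomClusterName" # hypothetical custom name

  # Only the name goes into the generated id
  label_order = ["name"]
  # Keep the casing as written (default is "lower")
  label_value_case = "none"
}

module "ecs_cluster" {
  source = "cloudposse/ecs-cluster/aws"
  # version = "..." # pin a release
  # ... other module inputs ...

  context = module.label.context
}

output "cluster_name" {
  value = module.label.id # "MyCustomClusterName"
}
```

namespace and stage still end up as tags; they just no longer appear in the generated name.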

is there a way to provide a custom name to resources without following the cloudposse construction for tenant, namespace, environment,

Also, just to call out, all parameters are optional.

So if you don’t want to use our conventions, just do as @Joe Niland alluded to, which is commandeer name for everything.

but also, good to be familiar with this https://masterpoint.io/updates/terraform-null-label/

A post highlighting one of our favorite terraform modules: terraform-null-label. We dive into what it is, why it’s great, and some potential use cases in …

Later on this year, we hope to introduce support for arbitrary naming conventions

thanks @Erik Osterman (Cloud Posse) I’ve found the Cloud Posse modules very useful, and though sometimes I can’t figure out how to use them properly, I find that they can adapt to almost every scenario. Thanks for your reply.