#gcp (2024-03)

Google Cloud Platform

2024-03-01

Question. In atmos.yaml we are using “auto_generate_backend_file: true”. In S3 we see Atmos creating a folder for each component, then a sub-folder for each stack, and placing the terraform.tfstate file in it. When we run the same layout of components/stacks/config against a GCP GCS bucket, we see only a single state file created and no folders. For example, core-usc1-auto.tfstate: instead of terraform.tfstate, the file is named after the stage. Has anyone seen this behaviour or can advise? Thanks


Did you configure the GCP backend correctly in the Atmos manifests? Something like this:

```yaml
terraform:
  # Backend
  backend_type: gcs
  backend:
    gcs:
      bucket: "xxxxxxx-bucket-tfstate"
      prefix: "terraform/tfstate"
```


In a _defaults.yaml in a parent stack folder that is imported, we have:

```yaml
terraform:
  backend_type: gcs
  backend:
    gcs:
      bucket: poc-tfstate-bucket
```

> and seeing in S3 atmos creating a folder for component then a sub-folder for stack,

Can you give more details on this? (I don’t understand “Atmos creating a folder for component then a sub-folder for stack”; the folders for components and stacks must already exist in your repo.)

In AWS, the bucket has these top-level folders, which are the components,

followed by these stack sub-folders.

OK, so you are saying that the S3 backend creates those folders in the S3 bucket, but you are not seeing the GCS backend create those folders in the GCS bucket?

yes

It depends on the TF backend and workspaces. In the AWS S3 backend, for example, the workspace_key_prefix (which you can specify in the Atmos config for each component; if it is not specified, Atmos uses the component name) is the top-level folder name. The TF workspaces for the different stacks are the sub-folders. That’s how the S3 backend in TF works.
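For illustration, here is roughly what an S3 backend with `workspace_key_prefix` looks like in plain Terraform HCL. Atmos writes equivalent settings into the generated backend file when `auto_generate_backend_file: true` is set. The bucket name, region, component name `vpc` and workspace name are hypothetical. For any non-default workspace, the S3 backend stores state at `<workspace_key_prefix>/<workspace>/<key>`, and that path is what produces the component folder and the per-stack sub-folders.

```hcl
# Hypothetical S3 backend for a component named "vpc".
terraform {
  backend "s3" {
    bucket               = "my-tfstate-bucket" # hypothetical bucket name
    key                  = "terraform.tfstate"
    region               = "us-east-1"         # hypothetical region
    workspace_key_prefix = "vpc"               # Atmos defaults this to the component name
  }
}

# With the workspace "core-usc1-auto" selected, state is stored at:
#   s3://my-tfstate-bucket/vpc/core-usc1-auto/terraform.tfstate
# i.e. <workspace_key_prefix>/<workspace>/<key>
```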

(I’m not sure whether the GCS backend works the same way or just creates a flat structure; you need to confirm that in the Terraform docs for the GCS backend.)

Thanks

You need to confirm whether the GCS backend has a concept similar to workspace_key_prefix in the AWS S3 backend (which creates those top-level folders in the S3 state bucket).

Thank you, I will look into that feature.

Terraform can store the state remotely, making it easier to version and work with in a team.

I think it’s the prefix (which is the equivalent of workspace_key_prefix in the S3 backend).

You can configure the prefix for each component in the Atmos manifests:

```yaml
components:
  terraform:
    <my-component>:
      backend:
        gcs:
          prefix: <my-component>
```

Atmos does not generate the prefix for the GCS backend automatically; it only does that for the S3 backend’s workspace_key_prefix. (We will look into adding this to Atmos, so that it generates the prefix from the Atmos component name if prefix is not configured for a component.)

For now, try the above config on your components; it should generate the folders inside the state bucket. (From the Terraform docs: “Named states for workspaces are stored in an object called <prefix>/<name>.tfstate.”)
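Compare this with the GCS backend, which has no per-workspace sub-folder level. Each workspace’s state is a single object named `<prefix>/<workspace>.tfstate`. With no `prefix`, the object sits at the bucket root as `<workspace>.tfstate`, which matches the core-usc1-auto.tfstate file in the original question. Below is a minimal sketch in plain Terraform HCL. It assumes the poc-tfstate-bucket from the thread and a hypothetical component named `vpc`.

```hcl
terraform {
  backend "gcs" {
    bucket = "poc-tfstate-bucket"
    prefix = "vpc" # hypothetical component name used as the "folder"
  }
}

# With the workspace "core-usc1-auto" selected, state is stored at:
#   gs://poc-tfstate-bucket/vpc/core-usc1-auto.tfstate
# Without "prefix" it would be:
#   gs://poc-tfstate-bucket/core-usc1-auto.tfstate
```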

Thanks, that is working.

super

Thank you for testing and pointing it out. We’ll add auto-generation of the prefix for the GCS backend to Atmos (similar to what it does for workspace_key_prefix for the S3 backend).

Auto-generation of the prefix for the GCS backend was added in Atmos 1.65.0.

2024-03-23

Hello team, I’m planning to start a GCP setup with Atmos. Can someone provide me with a basic structure?

This one has some examples for GCP

An opinionated, multi-cloud, multi-region, best-practice accelerator for Terraform.

thanks @Erik Osterman (Cloud Posse)

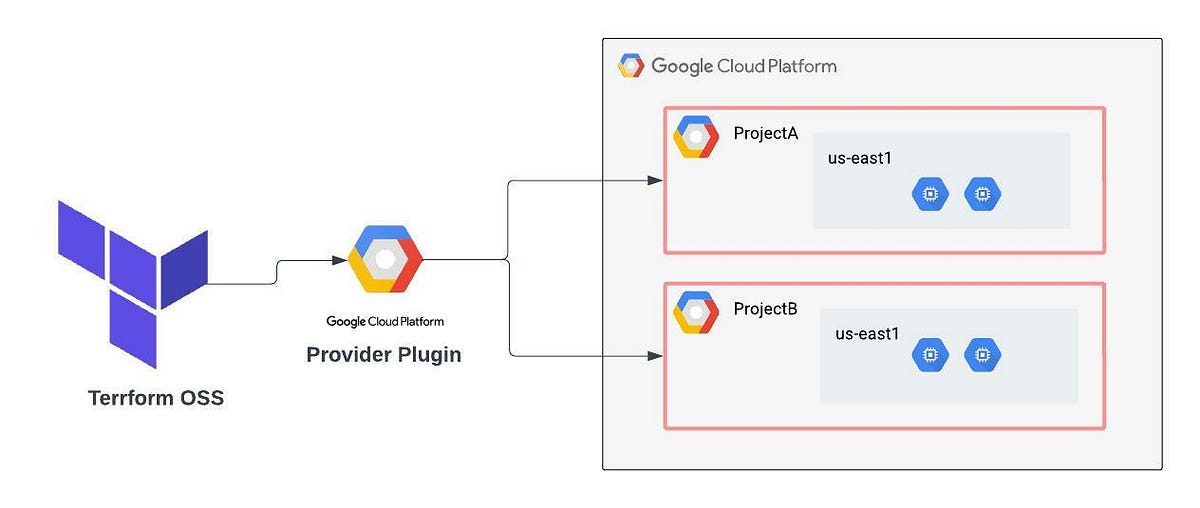

I want to target multiple services in different projects. For example, Cloud Functions should go to project1, all Cloud Run services to project2, and storage to project3. How can I design this so that each stack can go to a different project?

That makes sense. GCP projects are roughly like accounts in AWS, so you get project-level isolation.

Yes!

In this article, you will not just learn about terraform providers but will also learn how to deploy cloud resources across multiple…

So I might have a different service account for each project.
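Here is a sketch of one common approach, not the only one. Make the GCP project and the service account ordinary Terraform variables of each component. Then set them per stack in the Atmos manifests, so that, for example, the Cloud Functions stack points at project1. The variable names `project_id` and `service_account_email` are hypothetical. `impersonate_service_account` is a standard argument of the `google` provider.

```hcl
variable "project_id" {
  type        = string
  description = "GCP project this component deploys into (set per stack)"
}

variable "service_account_email" {
  type        = string
  description = "Service account to impersonate for this project (hypothetical name)"
}

provider "google" {
  # Each stack supplies its own project and service account.
  project                     = var.project_id
  impersonate_service_account = var.service_account_email
}
```

The identity running Terraform needs permission to impersonate each of these service accounts, for example via the Service Account Token Creator role.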

@Monish Devendran please review these docs (they are for AWS, but you can adapt them for GCP):

https://atmos.tools/design-patterns/organizational-structure-configuration

Organizational Structure Configuration Atmos Design Pattern

Use the atmos.yaml configuration file to control the behavior of the atmos CLI.

The pattern is: you define your stack config per org, project, account, etc. (configuring the context variables in each), then define or import the Atmos components into each top-level stack.

You can define defaults for each component in the catalog, import those defaults into the stacks, and override the component config per stack if needed.

(you can DM me your setup, I’ll review)

@Andriy Knysh (Cloud Posse), thanks. I will set it up and send it.

extended from atmos to leverage orchestration in terraform

It’s a very basic setup; I’m reading the links above and trying things out. Also, can you send me a link on how to configure Terraform Cloud?

I’ll review your repo, thanks for sharing.

> configure terraform cloud

Collaborate on version-controlled configuration using Terraform Cloud. Follow this track to build, change, and destroy infrastructure using remote runs and state.

Yes, I want to keep my state files in TF Cloud.

Or, more specifically, use Terraform Cloud strictly as a state backend? Or also use Terraform Cloud’s deployment capabilities?
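If Terraform Cloud is used only as the state backend, a component can point at it with Terraform’s `cloud` block (Terraform 1.1+). The organization name and workspace tag below are hypothetical. Using `tags` instead of a single `name` lets one configuration work with several TFC workspaces, for example one workspace per stack.

```hcl
terraform {
  cloud {
    organization = "my-org" # hypothetical organization name

    workspaces {
      # Use every TFC workspace tagged "gcp-vpc" (hypothetical tag),
      # e.g. one workspace per Atmos stack.
      tags = ["gcp-vpc"]
    }
  }
}
```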

@Monish Devendran I’ve sent you a zip file with the infra configuration for GCP with a few stages (dev, prod, staging) and a few GCP projects with multiple Atmos components in each (specific component type like Cloud Functions and GCS buckets per GCP project). We can sync anytime to review it

Hi @Erik Osterman (Cloud Posse), my use case is to use Terraform Cloud to run terraform apply and to store the state in TF Cloud.

In that case, you will need to commit the varfiles and configure Terraform Cloud to use the appropriate varfile with each workspace.

To do that, see this comment: https://github.com/hashicorp/terraform/issues/15966#issuecomment-1868823558

I was able to do this with Terraform Cloud by adding an environment variable to the workspace:

Key: TF_CLI_ARGS

Value: -var-file "dev.tfvars"

Now when I run terraform apply it turns into terraform apply -var-file dev.tfvars. It will do this for all terraform commands. (See here: https://developer.hashicorp.com/terraform/cli/config/environment-variables#tf_cli_args-and-tf_cli_args_name)

It would be better if we could apply this in the code itself though.

Heads up, @RB: I saw you commented on this thread, and this appears to be the solution.
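The `TF_CLI_ARGS` setting from that comment can itself be managed as code. The `tfe` provider’s `tfe_variable` resource can set it as an environment variable on each workspace. This is a sketch: the organization, the workspace name, and the dev.tfvars file name are hypothetical. Point the value at the committed varfile for that workspace’s stack.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Hypothetical workspace for the "dev" stack of one component.
resource "tfe_workspace" "dev" {
  name         = "vpc-dev" # hypothetical workspace name
  organization = "my-org"  # hypothetical organization name
}

# Environment variable that appends -var-file to every Terraform command
# run in this workspace, as described in the GitHub comment.
resource "tfe_variable" "cli_args" {
  key          = "TF_CLI_ARGS"
  value        = "-var-file \"dev.tfvars\""
  category     = "env"
  workspace_id = tfe_workspace.dev.id
}
```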

2024-03-24

2024-03-28

Can someone help me? I’m trying to pass a secret that is stored in Akeyless:

```hcl
data "akeyless_secret" "secret" {
  path = "/GCP/Secrets/cf-triggers/tf-cf-triggers"
}

provider "google" {
  project     = "cf-triggers"
  credentials = data.akeyless_secret.secret
}

resource "google_pubsub_topic" "example" {
  name                       = "akeyless_topic"
  message_retention_duration = "86600s"
}
```

```text
❯ terraform apply
data.akeyless_secret.secret: Reading...
data.akeyless_secret.secret: Read complete after 1s [id=/GCP/Secrets/cf-triggers/tf-cf-triggers]

Planning failed. Terraform encountered an error while generating this plan.

╷
│ Error: Incorrect attribute value type
│
│   on main.tf line 38, in provider "google":
│   38:   credentials = data.akeyless_secret.secret
│     ├────────────────
│     │ data.akeyless_secret.secret is object with 4 attributes
│
│ Inappropriate value for attribute "credentials": string required.
```

Has anyone faced this issue? I’m not able to pass the secret from Akeyless.
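The error says that the `google` provider’s `credentials` argument expects a string: either a path to a JSON service account key, or the contents of one. However, `data.akeyless_secret.secret` is the whole data-source object. A likely fix is to reference the attribute that holds the secret contents. This assumes the Akeyless provider exposes those contents as `value` (check the provider docs for your version). It also assumes the secret stores the full JSON key. The `akeyless` provider’s own authentication configuration is omitted and assumed to be set up as in the original code.

```hcl
terraform {
  required_providers {
    google = {
      source = "hashicorp/google"
    }
    akeyless = {
      source = "akeyless-community/akeyless"
    }
  }
}

data "akeyless_secret" "secret" {
  path = "/GCP/Secrets/cf-triggers/tf-cf-triggers"
}

provider "google" {
  project = "cf-triggers"
  # Pass only the secret's string contents (assumed to be the JSON key),
  # not the whole data-source object.
  credentials = data.akeyless_secret.secret.value
}
```

Data-source results are persisted in the Terraform state, so the key will be stored there as well.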