#kubernetes (2019-06)

Archive: https://archive.sweetops.com/kubernetes/

2019-06-03

2019-06-04

Interesting tool that checks K8s best practices https://github.com/reactiveops/polaris

Validation of best practices in your Kubernetes clusters - reactiveops/polaris

Thanks

It’s pretty nice. For now it’s pretty basic and points out things like whether you have set resource limits, but I think it will get more useful the more they add to it.

2019-06-05

what are you guys’ strategies for memory requests? for example, looking at my historical data, my api pods use about 700Mi memory on average. I believe it’s better to lower the memory request to around that number, which would leave more spare memory in the pool. I currently have it overallocated (1000Mi per api pod), and the memory that is reserved but unusable by other pods that may need it adds up.

Other considerations to take into account are (a) how much memory volatility there is… perhaps 30% variance is a bit high, and (b) disruptions: how bad is it if the service is evicted to another node?

I would suspect the more pods of a given service you run, the more insulated you are from disruptions of pod evictions

which means you can get by with a 5-10% limit. make sure you monitor pod restarts.

so long as that number stays at or near 0, you’re good.
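As a rough illustration of the sizing discussed above, here is a minimal sketch of a container `resources` fragment. It assumes the 700Mi average from the question plus 10% headroom (700Mi × 1.10 = 770Mi). The memory limit is an assumed value that was not given in the thread.

```yaml
# Hypothetical fragment of a container spec for the api pods.
# Request = observed average (700Mi) + 10% headroom = 770Mi.
resources:
  requests:
    memory: "770Mi"
  limits:
    memory: "1000Mi"  # assumed ceiling, not from the thread
```

If pod restarts start climbing after a change like this, the headroom is probably too tight for the workload.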

how are you all connecting kubectl into the k8s cluster these days?

via teleport

teleport supports both ssh and kubectl

SAML authentication

what they call proxy is ~ a bastion, for a centralized entry point

Make it easy for users to securely access infrastructure, while meeting the toughest compliance requirements.

Interesting ty

thanks @Erik Osterman (Cloud Posse), i think i can get away with closer to 5-10%. i don’t have that much memory volatility looking at my metrics

hi

Alex Co, [Jun 6, 2019 at 1:43 PM]: i’m having an issue while looping the helm template

    env:
    {{- range .Values.app.configParams }}
      - name: {{ . | title }}
        valueFrom:
          secretKeyRef:
            name: "{{ .Values.app.secretName }}"
            key: {{ . | title }}
    {{- end }}

this is my code in the template to generate the environment var from the values.yaml

but when i ran helm lint, it complained like this

executing “uiza-api-v4/templates/deployment.yaml” at <.Values.app.secretName>: can’t evaluate field Values in type interface {}

i guess that helm template does not allow me to put the secretName value inside a loop

is there any way to solve this?

2019-06-06

nvm, it’s because i did not declare .Values.app.secretName as global variable
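For readers hitting the same lint error: inside a `range` block the dot (`.`) is rebound to the current list item, so `.Values` is no longer reachable from it. One common way around this, sketched below, is to use `$`, which in Go templates always points at the root context. The value names are the ones from the question. The use of `quote` is a stylistic choice here, not something from the thread.

```yaml
env:
{{- range .Values.app.configParams }}
  - name: {{ . | title }}
    valueFrom:
      secretKeyRef:
        # "$" is the root context, so this still resolves inside the loop
        name: {{ $.Values.app.secretName | quote }}
        key: {{ . | title }}
{{- end }}
```

An equivalent approach, closer to what the poster describes, is to assign the value to a template variable before the loop (for example `{{- $secretName := .Values.app.secretName }}`) and reference `$secretName` inside it.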

I encountered an issue with eks ebs volume provisioning, with small worker groups (less than 3) the pv was created before the pod and in the wrong AZ.

is setting volumeBindingMode: WaitForFirstConsumer enough on v1.12 to fix this problem?

Yes @nutellinoit, it works. But I would also suggest setting an affinity policy for one AZ only, to ensure that if the pod is restarted or evicted, it is scheduled in the same AZ as the PVC
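A minimal sketch of a StorageClass with delayed binding, as discussed above. The class name and the `gp2` volume type are assumptions for illustration. The provisioner shown is the in-tree EBS one.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp2-wait  # hypothetical name
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2       # assumed volume type
# Delay volume creation until a pod using the PVC is scheduled,
# so the EBS volume is created in the AZ of the chosen node.
volumeBindingMode: WaitForFirstConsumer
```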

2019-06-07

Hi People , do you know of a best/sane way to install k8s on AWS. I see that there are multiple ways to do it. I am eyeing kops because terraform duh but before creating the cluster there’s still a lot of preparation to do like:

- creating vpc

- kops state bucket

- route53 record

And then all of it has to be passed on to kops as a cli command. This is all fine but to me it looks like a bit too much. Is there any other way of doing it?

I use EKS

with terraform

ok that’s a way

and how do you handle upgrades, i read somewhere that it’s a bit tricky with EKS

You mean master version upgrade ?

like k8s 1.2 -> 1.3 upgrade

Yeah, well its a pretty new cluster

right now my cluster is running on version 1.2

what are the challenges that you have heard of ?

I don’t remember the details but I think Erik mentioned something about upgrades in EKS not being that easy

I might be wrong though

Kubernetes is rapidly evolving, with frequent feature releases, functionality updates, and bug fixes. Additionally, AWS periodically changes the way it configures Amazon Elastic Container Service for Kubernetes (Amazon EKS) to improve performance, support bug fixes, and enable new functionality. Previously, moving to a new Kubernetes version required you to re-create your cluster and migrate your […]

aws blog says its easy

:)))

fair enough

of course we have multiple environments

so we can upgrade the lower environment and check if it works

and proceed with upgrade

niice

they made it much easier recently - you can do it via the AWS console, just change the version

then upgrade your worker nodes afterwards

(but yes you’ll want to do it in non-prod first just in case)

Terraform has a parameter for version

oh that sounds promising

version – (Optional) Desired Kubernetes master version. If you do not specify a value, the latest available version at resource creation is used and no upgrades will occur except those automatically triggered by EKS. The value must be configured and increased to upgrade the version when desired. Downgrades are not supported by EKS.

and what about installing it, EKS gives you the master nodes only, what about getting the other nodes in EC2, is it just a matter of using cloud-init ?

We do it with an auto-scaling group

You can follow this

A Terraform configuration based introduction to EKS.

Thanks a lot people

I will try it out

i tried an upgrade with eks and terraform

from 1.11 to 1.12

is pretty smooth

control plane upgrades without downtime

to upgrade the workers the only thing to do is to update amis

and replace workers

and follow the directions on aws documentation to patch system deployment with new container versions

When a new Kubernetes version is available in Amazon EKS, you can update your cluster to the latest version. New Kubernetes versions introduce significant changes, so we recommend that you test the behavior of your applications against a new Kubernetes version before performing the update on your production clusters. You can achieve this by building a continuous integration workflow to test your application behavior end-to-end before moving to a new Kubernetes version.

ok, so I guess there’s a possibility to automate replacing the instances in the ASG somehow

I will look into it

Thanks for your support people!

You can simply terminate one old instance at a time and wait for the autoscaling group to launch replacements

arhg ye gute ole click-ops

we’ve developed a lambda with step functions that does the instance replacement, step functions serving as a waiter

so it’s fire and forget

takes a while but it’s atomic

@Nikola Velkovski I’ve found the k8s upgrades to be a bit slow. it increases in time (by like 5~7 minutes per worker node) so upgrades can take a long time. For me, I wouldn’t be comfortable letting the upgrade for a production cluster run unattended (i.e. overnight while im sleeping) and naturally your production cluster probably has the most worker nodes. What I’ve found works for me pretty well is just using terraform to spin up a new cluster, deploy to the new cluster, and doing the cutover at the DNS level. food for thought

I think an elegant approach is to spin up an additional node pool

2019-06-08

that sounds a lot like aws Elasticsearch, @btai. terraform apply usually times out when upgrading the ES cluster

thanks!

2019-06-09

@Nikola Velkovski give rancher a try. It is the easiest way to spin up k8s on multiple clouds, in our experience with the tool. https://rancher.com/

Rancher, open source multi cluster management platform, makes it easy for operations teams to deploy, manage and secure Kubernetes everywhere. Request a demo!

2019-06-10

2019-06-11

Hey guys, has anyone configured SMTP for grafana via a config-map on kubernetes?

Don’t have first hand experience

Let me know if you get it working though. We should set up the same in our helmfile.

Sure Erik

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app: grafana
      name: grafana-smtp-config-map
      namespace: monitoring
    data:
      grafana.ini: |
        enabled = true
        host = <host>
        user = <user>
        password = <password>
        skip_verify = false
        from_address = <email>
        from_name = Grafana
        welcome_email_on_sign_up = false

Ex: something like this

and adding this config map in the kubernetes grafana deployment

    - configMap:
        defaultMode: 420
        name: grafana-smtp-config-map
      name: grafana-smtp-config-map

i am trying to use the above to add smtp to grafana.ini

but i am unable to add smtp to grafana.ini, is there any documentation/suggestions which can help me here?
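One possible cause, offered as a guess since the thread never reports a resolution: grafana.ini is an INI file, and the SMTP keys only take effect under a `[smtp]` section header, which the pasted config lacks. The sketch below adds the section headers and keeps the placeholders from the original. The `<port>` placeholder is an addition, on the assumption that the host value needs a port.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: grafana-smtp-config-map
  namespace: monitoring
  labels:
    app: grafana
data:
  grafana.ini: |
    [smtp]
    enabled = true
    host = <host>:<port>
    user = <user>
    password = <password>
    skip_verify = false
    from_address = <email>
    from_name = Grafana

    [emails]
    welcome_email_on_sign_up = false
```

The volume also needs a matching `volumeMounts` entry so the file lands where Grafana reads its config (`/etc/grafana/grafana.ini` in the official image). Note that mounting it this way replaces the whole file, not just the SMTP part.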

Does anyone have any experience scaling with custom metrics from Datadog across namespaces (or the external metrics API in general)?

    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    metadata:
      name: service-template
    spec:
      minReplicas: 1
      maxReplicas: 3
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: service-template
      metrics:
      - type: External
        external:
          metricName: k8s.kong.default_service_template_80.request.count
          metricSelector:
            matchLabels:
              app: kong
          targetAverageValue: 5

Warning FailedGetExternalMetric 117s (x40 over 11m) horizontal-pod-autoscaler unable to get external metric default/k8s.kong.default_service_template_80.request.count/&LabelSelector{MatchLabels:map[string]string{app: service-template,},MatchExpressions:[],}: no metrics returned from external metrics API

^ this was a permissions issue with the Datadog API in the cluster-agent

hi, is anyone here using Gloo Gateway on K8s?

i’m having a problem where the virtual service stops accepting traffic after a while, and the status on the ELB for the gloo gateway proxy shows that it’s OutOfService

wonder if anyone here got the same problem

2019-06-12

Hi all! Has someone faced this error before?

kernel:[22989972.720097] unregister_netdevice: waiting for eth0 to become free. Usage count = 1

Public #office-hours starting now! Join us on Zoom if you have any questions. https://zoom.us/j/684901853

2019-06-13

what ingress controller are you guys using? it seems like alb-ingress-controller isnt quite robust enough for me. things that i feel like its missing:

- new ingress object = new ALB so there would be a one-to-one mapping of ALBs to services for me (multi-tenant cluster)

- provisioned resources don’t get cleaned up, at this point i feel like i might want to terraform the load balancer resources i need with the cluster

yea, the 1:1 mapping between ingress and ALB sucks!

I’m using Ambassador. It has a lot of features: routing of traffic based on any kind of headers, regex matching, Jaeger tracing. Name it :)

@maarten does ambassador spin up cloud resources for u? (load balancers, security groups, etc)

i realized that might not be a feature i want as of now in k8s. since terraform is better at managing cloud resource state

Can someone point me to best practices for setting up Traefik/Nginx-Proxy/etc as an ingress for Kubernetes running on 80? Everything is running but ClusterIP is internal and NodePort doesn’t allow ports below 30000. What am I missing?

Use a Service of type LoadBalancer. Then the cloud provider gives you an IP, or you can use something like MetalLB on bare metal. Run nginx ingress or whatever as a Deployment behind it. Can replicate per AZ.
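A minimal sketch of what that answer describes: a Service of type LoadBalancer exposing ports 80 and 443 in front of an ingress controller. The name, namespace, and pod label are hypothetical and would need to match whatever controller is actually deployed.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx        # hypothetical name
  namespace: ingress-nginx   # hypothetical namespace
spec:
  # The cloud provider (or MetalLB on bare metal) assigns the external IP.
  type: LoadBalancer
  selector:
    app: ingress-nginx       # assumed label on the controller pods
  ports:
    - name: http
      port: 80
      targetPort: 80
    - name: https
      port: 443
      targetPort: 443
```

This sidesteps the NodePort range restriction, because the low ports are exposed on the load balancer and not on the nodes.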

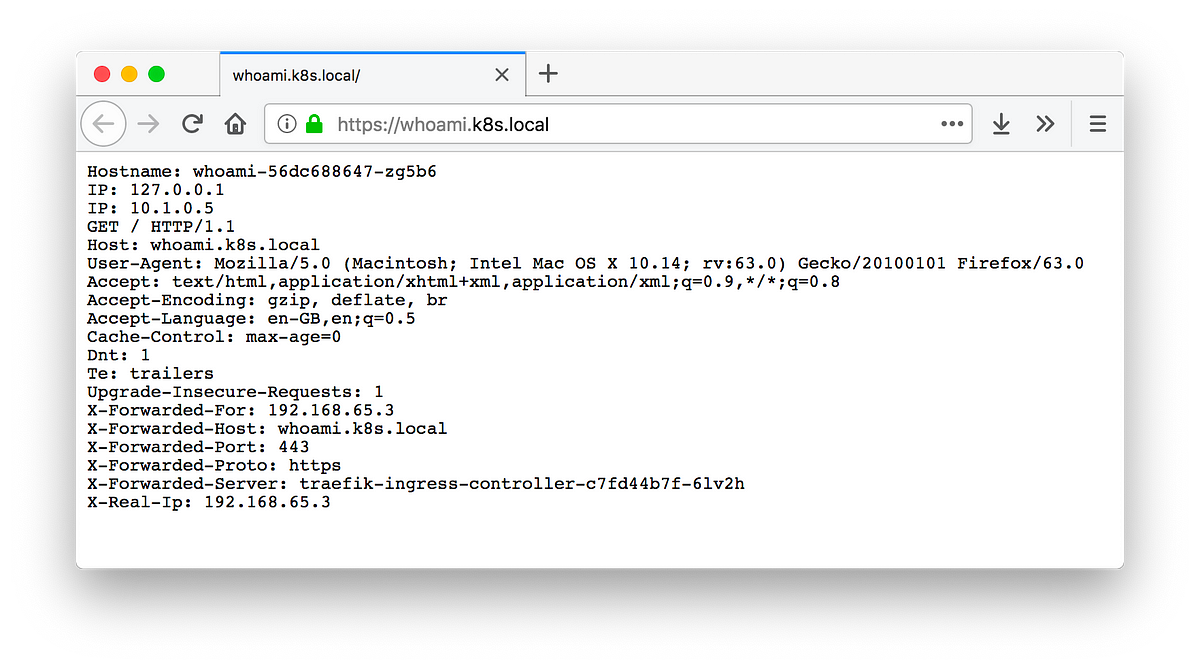

I was really excited about this post https://medium.com/localz-engineering/kubernetes-traefik-locally-with-a-wildcard-certificate-e15219e5255d

As a passionate software engineer at Localz, I get to tinker with fancy new tools (in my own time) and then annoy my coworkers by…

but he’s using a LoadBalancer w/ Docker for Mac Kubernetes which doesn’t make sense.

2019-06-16

2019-06-17

2019-06-18

Hello @davidvasandani, thanks for the article that you’ve written. Could you please tell me the main capabilities that Traefik has as an ingress controller? Do you have any article covering these capabilities?

Hi @Hugo Lesta. Not my article but Traefik has many capabilities. https://docs.traefik.io/configuration/backends/kubernetes/

The previous article you sent me seems worthwhile, I’ll try to improve my knowledge of traefik on k8s.

It’s good and helped me out but it’s incomplete. The author mentions using LoadBalancer locally but doesn’t describe how. With a lot of additional work I’ve gotten it working with MetalLB locally. This was a very useful article: https://medium.com/@JockDaRock/kubernetes-metal-lb-for-docker-for-mac-windows-in-10-minutes-23e22f54d1c8

2019-06-19

anyone using this on their clusters? https://github.com/buzzfeed/sso

sso, aka S.S.Octopus, aka octoboi, is a single sign-on solution for securing internal services - buzzfeed/sso

Looks interesting

Doesn’t support websockets, so it was a deal breaker for us

things like the k8s dashboard or grafana require that

bite the bullet. just deploy KeyCloak with Gatekeepers

havent heard of keycloak/gatekeeper

I can give you a demo

it’s open source, by redhat

we have the helmfiles for it too

does it integrate w/google saml?

yup, that’s the beauty with keycloak

it basically supports every saml provider

yeah it looks like

and we use it with gsuite

not only that, you can use it with https://github.com/mulesoft-labs/aws-keycloak

aws-vault like tool for Keycloak authentication. Contribute to mulesoft-labs/aws-keycloak development by creating an account on GitHub.

with aws

it can become the central auth service for everything

nice

we use it with kubernetes, teleport, atlantis, grafana, etc

yeah super nice, fully integrated

you can even integrate it with multiple auth providers at the same time

do helmfiles have a remote “helm chart” that is used as the base?

not sure, better to check in #helmfile

ah yeah it does

Comprehensive Distribution of Helmfiles. Works with helmfile.d - cloudposse/helmfiles

oh, “base” is a loaded term now

since helmfile has the concept of bases

this is not a base in that sense

like uh

base docker image base

aha

yes, we use the community image

but guess it would be more secure to run our own

given the role this plays

i guess im not being clear enough

i was curious if the helm chart is just abstracted out

for a helmfile

for the gatekeeper, we’re doing something a bit unusual/clever

we are defining an environment

then using that to generate a release for each service in the environment

the alternative is to use sidecars or automatic sidecar injection

A Kubernetes controller to inject an authentication proxy container to relevant pods - [✩Star] if you’re using it! - stakater/ProxyInjector

ah

Here’s what our environments file looks like

    services:
    - name: dashboard
      portalName: "Kubernetes Dashboard - Staging"
      host: dashboard.xx-xxxxx-2.staging.xxxxxx.io
      useTLS: true
      skipUpstreamTlsVerify: true
      upstream: https://kubernetes-dashboard.kube-system.svc.cluster.local
      rules:
      - "uri=/*|roles=kube-admin,dashboard|require-any-role=true"
      debug: false
      replicas: 1
    - name: forecastle
      host: portal.xx-xxxx-2.xxxx.xxxx.io
      useTLS: true
      upstream: http://forecastle.kube-system.svc.cluster.local
      rules:
      - "uri=/*|roles=kube-admin,user,portal|require-any-role=true"
    ...

i see

2019-06-20

Have you people seen this ? https://github.com/hjacobs/kubernetes-failure-stories

Compilation of public failure/horror stories related to Kubernetes - hjacobs/kubernetes-failure-stories

it’s worth resharing

I got to the spotify video, which is kinda cool, they admit their rookie mistakes around terraform

Hi guys, how do I connect to a VPN from inside pods? any recommendations out there? So basically, I have to connect to a client’s on-premise data, using only 1 IP

is anyone here using aws-okta with EKS? I’m having trouble granting additional roles access to the cluster.

2019-06-23

Hey @cabrinha I’d be interested in hearing anything you ran into with aws-okta and EKS. I’m going to be starting down that road this week.

2019-06-24

Hi guys, could someone please help me? I just created an EKS cluster and I’m unable to apply some changes to it. I keep getting that error log. I am using the same user I used to create the cluster. I am also using an auth account, so the users are not exactly in that account, they assume a role. I’m not sure what I’m missing here, I’ve been trying this for days now. Thanks

How did you bring up the cluster? Are you using terraform?

@Erik Osterman (Cloud Posse) with terraform

Terraform module which creates EKS resources on AWS - howdio/terraform-aws-eks

The aws-auth ConfigMap is applied as part of the guide which provides a complete end-to-end walkthrough from creating an Amazon EKS cluster to deploying a sample Kubernetes application. It is initially created to allow your worker nodes to join your cluster, but you also use this ConfigMap to add RBAC access to IAM users and roles. If you have not launched worker nodes and applied the

point 3

@nutellinoit Its a new cluster. If my user can’t access the cluster, not sure if it can run aws-auth on the cluster if it can’t access it

We use Auth to manage IAM, The accounts are not directly in the cluster.
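For context on the aws-auth ConfigMap mentioned above, here is a minimal sketch of mapping an assumed IAM role to cluster admin. The account ID and role names are placeholders, not values from the thread. The first entry is the usual worker-node mapping and the second grants the assumed role `system:masters`.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    # Worker nodes joining the cluster (placeholder role ARN)
    - rolearn: arn:aws:iam::111122223333:role/eks-worker-node-role
      username: system:node:{{EC2PrivateDNSName}}
      groups:
        - system:bootstrappers
        - system:nodes
    # Role that users assume from the auth account (placeholder role ARN)
    - rolearn: arn:aws:iam::111122223333:role/admin-assumed-role
      username: admin
      groups:
        - system:masters
```

Applying this still requires credentials that already have access. On EKS the IAM entity that created the cluster has admin access by default, so when access is denied it is worth checking that kubectl is authenticating as exactly that role or user.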

2019-06-25

2019-06-28

Kubernetes community content. Contribute to kubernetes/community development by creating an account on GitHub.

2019-06-30

I am stuck while installing Kubeadm in AWS on Amazon linux

Below is the error i get after running sudo yum install -y kubeadm

http://yum.kubernetes.io/repos/kubernetes-el7-x86_64/repodata/repomd.xml: [Errno -1] repomd.xml signature could not be verified for kubernetes

Please help me to get over it.

Hey @abkshaw, sounds a little similar to https://github.com/kubernetes/kubernetes/issues/60134

Is this a BUG REPORT or FEATURE REQUEST?: /kind bug What happened: I'm trying to install Kubernetes on Amazon Linux 2 as described here, but I get error: [[email protected] ~]$ sudo yum install …