#kubernetes (2020-02)

Archive: https://archive.sweetops.com/kubernetes/

2020-02-02

So i had a thought on the train this morning when I was thinking of writing some Yeoman generators for templating out gitops repos for flux so that teams don’t need to know exactly where to put what in which file.

And then i thought “you know what’s really good at templating yaml? helm!”

Is it crazy to use helm template to generate repos for flux (which will create flux helm crds)? I can’t see anything really stupid at face value - but feels like the kind of idea that requires a sanity check

I wouldn’t think so. Jenkins-x does exactly that if you opt to not use tiller for deployments I believe.

2020-02-03

writing helm charts and wanting some instant feedback ended up with this to check my rendered templates: watch -d 'helm template my-chart-dir | kubeval && helm template my-chart-dir'

nice trick

2020-02-04

I’m a bit stuck with GKE persistent disks that cannot be found:

    Type     Reason              Age  From                     Message
    ----     ------              ---  ----                     -------
    Normal   Scheduled           73s  default-scheduler        Successfully assigned coral-firefly/coral-firefly-ipfs-ipfs-0 to gke-shared-europe-main-3d862732-mq45
    Warning  FailedAttachVolume  73s  attachdetach-controller  AttachVolume.Attach failed for volume "pvc-c018de57-476a-11ea-af39-42010a8400a9" : GCE persistent disk not found: diskName="gke-shared-europe-9030-pvc-c018de57-476a-11ea-af39-42010a8400a9" zone="europe-west1-b"

while the disk is just there in the google console

Not sure where to look for an error message i can actually use

the pvc even says that it is bound

ipfs-storage-coral-firefly-ipfs-ipfs-0 Bound pvc-c018de57-476a-11ea-af39-42010a8400a9 10Gi RWO standard 27m

anyone have an idea where to look?

hmmm, seems like provisioning too many disks at the same time is the culprit. is there a way to configure that in k8s, helm or helmfile?

Are you running helmfiles serially?

i recently moved to a lot more parallel to speed it up (we deploy clusters + services as a service, it needs to be as fast as possible)
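
A rough sketch of the idea behind throttling parallel releases, assuming the fix is simply to cap how many run at once. `deploy_release` is a hypothetical stand-in for whatever triggers one release (and its disk provisioning); it is not a helmfile or GKE API.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_with_limit(releases, deploy_release, max_parallel=2):
    """Run deploy_release for each release, never more than max_parallel at once."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # pool.map keeps results in the same order as the input list.
        return list(pool.map(deploy_release, releases))


if __name__ == "__main__":
    lock = threading.Lock()
    state = {"running": 0, "peak": 0}

    def fake_deploy(name):
        # Hypothetical deploy step: it only tracks how many run concurrently.
        with lock:
            state["running"] += 1
            state["peak"] = max(state["peak"], state["running"])
        time.sleep(0.05)
        with lock:
            state["running"] -= 1
        return name

    done = run_with_limit(["ipfs", "api", "web", "db"], fake_deploy, max_parallel=2)
    print(done, "peak parallelism:", state["peak"])
```

helmfile's `--concurrency` option appears to be the nearest built-in knob; worth checking against `helmfile sync --help` for the installed version.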

2020-02-06

https://www.reddit.com/r/Terraform/comments/axp3tv/run_terraform_under_kubernetes_using_an_operator/ <— nice!!!!

@Erik Osterman (Cloud Posse) do you have a recommendation for using a k8s operator to manage aws resources? https://github.com/amazon-archives/aws-service-operator has been archived and uses cfn under the covers. Lol. Have you had luck with this or anything else?

@rms1000watt @Erik Osterman (Cloud Posse) I’m late to the convo but:

- I think aws-service-operator was just renamed (old one archived), not that the project was canceled: https://github.com/aws/aws-service-operator-k8s

- Crossplane looks interesting: https://crossplane.io/

The AWS Service Operator (ASO) manages AWS services from Kubernetes - aws/aws-service-operator-k8s

The open source multicloud control plane.

crossplane looks interesting for sure

You see this too? https://github.com/hashicorp/terraform-k8s/ https://www.hashicorp.com/blog/creating-workspaces-with-the-hashicorp-terraform-operator-for-kubernetes/

Terraform Operator for Kubernetes. Contribute to hashicorp/terraform-k8s development by creating an account on GitHub.

We are pleased to announce the alpha release of HashiCorp Terraform Operator for Kubernetes. The new Operator lets you define and create infrastructure as code natively in Kubernetes by making calls to Terraform Cloud.

(official one by hashicorp - but only works in combination with terraform cloud)

@rms1000watt not from first hand accounts, however, there have been a number of new operators to come out to address this lately

I did see the aws-service-operator was deprecated the other day when I was checking something else out.

sec - I think we talked about it recently in office hours

Kubernetes CRDs for Terraform providers. Contribute to kubeform/kubeform development by creating an account on GitHub.

What’s interesting “outwardly speaking” regarding kubeform is that it is by the people behind appscode

appscode is building operators for managing all kinds of durable/persistent services under kubernetes

like https://kubedb.com/

KubeDB by AppsCode simplifies and automates routine database tasks such as provisioning, patching, backup, recovery, failure detection, and repair for various popular databases on private and public clouds

I don’t have any first hand accounts though of using it or other appscode services

Ah, very nice reference

Rancher has 2 projects they are working on.

Both are alpha grade and don’t know how practical

Use K8s to Run Terraform. Contribute to rancher/terraform-controller development by creating an account on GitHub.

Use K8s to Run Terraform. Contribute to rancher/terraform-controller development by creating an account on GitHub.

ohhhhh

they renamed it

never mind.

My feelings were hurt by rancher’s product in 2016. I’m sure they’ve improved vastly since then though, lol.

Yeah, I’m curious about the kubeform one

will probably give that a try

very nice references man

Kubernetes custom controller for operating terraform - danisla/terraform-operator

Yeah, I saw that one too

Just have no clue what people are actually using

Please report back if you get a chance to prototype/poc one of them.

I’ve wanted to do this for some time.

I think it could simplify deployments in many ways by using the standard deployment process with kubernetes

this is actually a part of a bigger discussion around ephemeral environments

ya

all the helmfile stuff is super straight forward

it’s the infrastructure beyond it tho

so while I love terraform, terraform is like a database migration tool that doesn’t support transactions

so when stuff breaks, no rollbacks

heh, yea

while webapps are usually trivial to rollback

so coupling these two might cause more instability where before there was none.

also, this is what was kind of nice (perhaps) with the aws-service-operator approach deploying cloudformation since cloudformation fails more gracefully

did you listen to this week’s office hours recording?

i havent

I think it’s relevant to the ephemeral preview environments conversation, which is also one of the reasons we’re exploring terraform workspaces more and more as one of the tricks to help solve it.

yeah, i was considering terraform workspaces

2020-02-07

Anyone address kubelet-extra-args with eks node groups?

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or "me to…

in short, i’m trying to build docker images on our new jenkins stack built 100% with node groups now and the docker builds fail due to networking

Err:1 http://security.debian.org/debian-security buster/updates InRelease

Temporary failure resolving 'security.debian.org'

@Erik Osterman (Cloud Posse) @Andriy Knysh (Cloud Posse) I thought we could set the extra args for enabling the docker bridge, but it seems like that’s not how the https://github.com/awslabs/amazon-eks-ami/blob/master/files/bootstrap.sh#L341 is setup

Packer configuration for building a custom EKS AMI - awslabs/amazon-eks-ami

Are you doing docker builds under jenkins on kubernetes?

yuppers

planning to look at img, etc again, but the new jenkins needs to match the old for now

aha

yea, b/c I think getting away from dind or mounting docker sock is a necessity

there’s seemingly a dozen ways now to build images without docker daemon.

are you mounting the host docker socket?

agreed. that’s the goal in an upcoming sprint

yuppers

they build fine. they can’t communicate externally

Err:1 http://security.debian.org/debian-security buster/updates InRelease

Temporary failure resolving 'security.debian.org'

simple pipeline:

    pipeline {
      agent {
        label 'xolvci'
      }
      stages {
        stage('docker') {
          steps {
            container('jnlp-slave') {
              writeFile file: 'Dockerfile', text: '''FROM openjdk:8-jre
    RUN apt update && apt upgrade -y && apt install -y libtcnative-1'''
              script {
                docker.build('jbtest:latest')
              }
            }
          }
        }
      }
    }

hrmmm but they can talk to other containers in the cluster?

seems so

do you also have artifactory?

(thinking you could set HTTP_PROXY env)

and get caching to boot!

yes on artifactory

artifactory running in the cluster?

not yet

what would i set HTTP_PROXY to? cluster endpoint?

some other service in the cluster (assuming that the docker build can talk to services in the cluster)

e.g. deploy squid proxy or artifactory
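
The proxy suggestion could look roughly like this: pass the proxy to the build as build args so name resolution happens on the proxy side. The service hostname below is hypothetical, and this assumes the build can actually reach an in-cluster service, which the thread had not confirmed.

```python
def docker_build_command(tag, proxy_url, context="."):
    """Build a `docker build` argv that passes proxy settings as build args."""
    cmd = ["docker", "build", "-t", tag]
    # These proxy variables are predefined build args in Docker, so the
    # Dockerfile does not need matching ARG lines. Both cases are passed
    # because tools differ in which spelling they read.
    for name in ("HTTP_PROXY", "http_proxy", "HTTPS_PROXY", "https_proxy"):
        cmd += ["--build-arg", f"{name}={proxy_url}"]
    cmd.append(context)
    return cmd


if __name__ == "__main__":
    # Hypothetical in-cluster proxy service; 3128 is Squid's default port.
    proxy = "http://squid.proxy.svc.cluster.local:3128"
    print(" ".join(docker_build_command("jbtest:latest", proxy)))
```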

that said, I did not have this problem when I did my prototype on digital ocean, so I am guessing whatever you are encountering is due to the EKS networking

yeah, it is def’ eks. it isn’t an issue when using rancher either

ok

is rancher using ENIs?

not sure. just tinkered w/ it mostly

might can downgrade to 0.10.0 since it supports inline in userdata.tpl

worst day to fool w/ getting disparate nodes on a cluster.

lol

yea, trying to get this working with managed node groups might be wishful thinking

yeah, i ditched that. i’m back for this group to a custom one with https://github.com/cloudposse/terraform-aws-eks-cluster

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

AWS support said it literally is not possible with node groups

are you back to using terraform-aws-eks-workers?

for this one group of workers, yes

it isn’t connecting, though

i say that and it connects

lol

it wouldn’t work with the --kubelet-extra-args='--node-labels=node_type=cloudbees-jobs', though

specifically:

bootstrap_extra_args = "--enable-docker-bridge=true --kubelet-extra-args='--node-labels=node_type=cloudbees-jobs'"

manually tweaked the launch template to not use = and that seemed to work for the bridge

trying again w/ the extra args

ugh…yup. frickin’ =

bootstrap_extra_args = "--enable-docker-bridge true --kubelet-extra-args '--node-labels=node_type=cloudbees-jobs'"

^

bootstrap_extra_args = "--enable-docker-bridge=true --kubelet-extra-args='--node-labels=node_type=cloudbees-jobs'"

^ booooo
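
Why the `=` matters can be sketched with a toy parser. This is not the real bootstrap.sh, only an assumption about how a script behaves when it matches flag names exactly and reads the value from the next argument.

```python
import shlex


def parse_space_separated(arg_string):
    """Toy parser: recognises `--flag value`, not `--flag=value`."""
    known = {"--enable-docker-bridge", "--kubelet-extra-args"}
    tokens = shlex.split(arg_string)
    parsed, unrecognised = {}, []
    i = 0
    while i < len(tokens):
        token = tokens[i]
        if token in known and i + 1 < len(tokens):
            parsed[token] = tokens[i + 1]  # the value is the next token
            i += 2
        else:
            unrecognised.append(token)  # `--flag=value` ends up here
            i += 1
    return parsed, unrecognised


if __name__ == "__main__":
    good = "--enable-docker-bridge true --kubelet-extra-args '--node-labels=node_type=cloudbees-jobs'"
    bad = "--enable-docker-bridge=true --kubelet-extra-args='--node-labels=node_type=cloudbees-jobs'"
    print(parse_space_separated(good))
    print(parse_space_separated(bad))
```

With the `=` form, neither token matches a known flag name, so nothing gets set.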

have you seen this https://kubedex.com/90-days-of-aws-eks-in-production/

Come and read 90 days of AWS EKS in Production on Kubedex.com. The number one site to Discover, Compare and Share Kubernetes Applications.

and yes, you can connect managed nodes (terraform-aws-eks-node-group), unmanaged nodes (terraform-aws-eks-workers) and Fargate nodes (terraform-aws-eks-fargate-profile) to the same cluster (terraform-aws-eks-cluster)

an example here https://github.com/cloudposse/terraform-aws-eks-fargate-profile/blob/master/examples/complete/main.tf

Terraform module to provision an EKS Fargate Profile - cloudposse/terraform-aws-eks-fargate-profile

yeah, got managed and unmanaged now. waiting on fargate in us-west-2

i saw a link to that 90 days earlier. gonna peep

phew. come on saturday!

2020-02-10

Thought experiment..

Is there a fundamental difference between slow+smart rolling deployment vs. canary deployment?

Curious if I can hack the health checks of a rolling deployment to convert the behavior into a canary deployment

Canary can be restricted (e.g. with feature flags) to a segment of users.

of course, it could be defined that that segment is just some arbitrary % of users based on the number of updated pods

however, that can lead to inconsistent behavior

I think it’s hard to generalize for all use-cases, but some kind of “canary” style rolling deployment can be achieved using “max unavailable”
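
To put a number on the "arbitrary % of users" point: during a rolling update the share of traffic hitting the new version is roughly the share of ready pods already updated. A minimal sketch, assuming requests are spread evenly across ready pods (only approximately true in practice):

```python
def new_version_share(updated_ready_pods, total_ready_pods):
    """Rough fraction of requests served by the new version mid-rollout."""
    if total_ready_pods <= 0:
        raise ValueError("need at least one ready pod")
    return updated_ready_pods / total_ready_pods


if __name__ == "__main__":
    # 10 replicas, rollout paused after the first updated pod becomes ready.
    print(new_version_share(1, 10))
```

Unlike a flag-gated canary, nothing pins a given user to one version here, which is where the inconsistent behavior comes from.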

people always harp on canaries around “well you gotta make sure you’re running health checks on the right stuff.. p99, queue depth, DD metrics”

ya, btw, you’ve seen flagger, right?

Progressive delivery Kubernetes operator (Canary, A/B Testing and Blue/Green deployments) - weaveworks/flagger

Flagger is the first controller to automate the way canaries “should” be done

Oh yeah, this is the one istio integrates with

with prometheus metrics

it supports nginx-ingress too

hmm.. still requires 2 services. Not a deal breaker. Just interesting.

in a perfect world, I can still just helmfile apply and win

fwiw, we’re doing some blue/green deployments with helmfile

and using hooks to query kube api to determine the color

Ah, nice

that is pretty interesting

sounds familiar, but I’ll just say no

2020-02-11

Has anyone run into issues with downtime when nginx pods are terminated? I am testing our downtime during rollouts and am noticing a small blip with our nginx pods. Our normal API pods cause no downtime when rolled. Anyone have experience with this?

Ingress-nginx? That project has recently changed termination behavior. Details in change log.

So not actually ingress-nginx, we are just using an nginx container behind ambassador. This is a legacy port to Kubernetes from an ECS system

Our ingress comes through Ambassador

Okay. Hard to say, having a preStop sleep and termination grace should allow single requests enough time to finish. Websocket clients will need to reconnect though (onto new pod). So just server side isn’t enough.

Yeah I’ll have to play around with termination grace periods a bit more I think

Also make sure to tune maxUnavailable
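
A small sketch of the preStop-plus-grace-period arithmetic. The grace period countdown includes the time spent in the preStop hook, so the sleep and the drain time both have to fit inside it. The container name and the numbers are illustrative, not taken from the thread.

```python
def shutdown_budget_ok(pre_stop_sleep_s, drain_s, termination_grace_s):
    """True if the preStop sleep plus drain time fits in the grace period."""
    return pre_stop_sleep_s + drain_s <= termination_grace_s


def pod_shutdown_fragment(pre_stop_sleep_s, termination_grace_s):
    """Pod spec fragment (as a dict) with a preStop sleep on one container."""
    return {
        "terminationGracePeriodSeconds": termination_grace_s,
        "containers": [
            {
                "name": "nginx",  # illustrative container name
                "lifecycle": {
                    "preStop": {"exec": {"command": ["sleep", str(pre_stop_sleep_s)]}}
                },
            }
        ],
    }


if __name__ == "__main__":
    print(shutdown_budget_ok(10, 15, 30))
    print(pod_shutdown_fragment(10, 30))
```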

2020-02-12

2020-02-13

Hey all, I have run into this problem before and wanted to know if someone has a good solution for it. I run kube clusters using KOPS. For infrastructure (VPC), I create them using cloudposse terraform scripts, route 53 stuff and all using tf as well. Then I create kube cluster using KOPS. While everything works fine, I always run into trouble when deleting kube clusters

never mind, I found solution to my own problem

I use kops, but haven’t been in the habit of deleting clusters; out of curiosity was it just some setting or config somewhere?

so, when I setup my infra (vpc, route 53 etc) using old tf versions, there were some documented issues in github that the kops delete cluster was trying to delete everything, but then used to fail cz resources like VPC was created using Terraform. In an ideal scenario, kops delete should not even look at those resources

Whatever new stuff i put in (vpc etc) using Cloudposse, is working well (kops delete only deleting resources such as security groups, nodes, masters and other instance groups) and not touching VPC config, Elastic IP’s and all

My guess is that whatever we put up using standard terraform earlier has incorrect tags or something attached, because those resources (like the vpc) do show up in the list of resources to delete when I run kops delete cluster without the --yes flag to validate what will be deleted
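
If tags are indeed the cause, the selection would behave something like the sketch below. The tag key and the `owned`/`shared` values are assumptions for illustration, not something confirmed in this thread, and the resource list is made up.

```python
def owned_by_cluster(resources, cluster_name):
    """Return ids of resources tagged as owned by the given cluster."""
    # Assumed tag convention: kubernetes.io/cluster/<name> = owned | shared
    tag_key = f"kubernetes.io/cluster/{cluster_name}"
    return [r["id"] for r in resources if r.get("tags", {}).get(tag_key) == "owned"]


if __name__ == "__main__":
    name = "example.k8s.local"  # hypothetical cluster name
    resources = [
        {"id": "sg-nodes", "tags": {f"kubernetes.io/cluster/{name}": "owned"}},
        {"id": "vpc-new", "tags": {f"kubernetes.io/cluster/{name}": "shared"}},
        {"id": "vpc-old", "tags": {f"kubernetes.io/cluster/{name}": "owned"}},
    ]
    # A VPC carrying an "owned" tag would show up in the delete list.
    print(owned_by_cluster(resources, name))
```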

Has anyone seen a pod not be able to talk to itself?

Context: Jenkins on K8s runs perfectly fine for building images, but the rtDockerPush call does not allow a connection.

java.net.ConnectException: connect(..) failed: Permission denied

Also: hudson.remoting.Channel$CallSiteStackTrace: Remote call to JNLP4-connect connection from ip-10-60-177-129.us-west-2.compute.internal/10.60.177.129:34956

at hudson.remoting.Channel.attachCallSiteStackTrace(Channel.java:1741)

at hudson.remoting.UserRequest$ExceptionResponse.retrieve(UserRequest.java:356)

at hudson.remoting.Channel.call(Channel.java:955)

at org.jfrog.hudson.pipeline.common.docker.utils.DockerAgentUtils.getImageIdFromAgent(DockerAgentUtils.java:292)

at org.jfrog.hudson.pipeline.common.executors.DockerExecutor.execute(DockerExecutor.java:64)

at org.jfrog.hudson.pipeline.declarative.steps.docker.DockerPushStep$Execution.run(DockerPushStep.java:96)

at org.jfrog.hudson.pipeline.declarative.steps.docker.DockerPushStep$Execution.run(DockerPushStep.java:71)

at org.jenkinsci.plugins.workflow.steps.AbstractSynchronousNonBlockingStepExecution$1$1.call(AbstractSynchronousNonBlockingStepExecution.java:47)

at hudson.security.ACL.impersonate(ACL.java:290)

at org.jenkinsci.plugins.workflow.steps.AbstractSynchronousNonBlockingStepExecution$1.run(AbstractSynchronousNonBlockingStepExecution.java:44)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

Caused: io.netty.channel.AbstractChannel$AnnotatedConnectException: connect(..) failed: Permission denied: /var/run/docker.sock

Ok…had a permission issue. We set fsGroup to 995 on our old cluster. It is 994 on the new one. All is well.
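
A quick way to check for this kind of mismatch: compare the gid that owns the socket on the node with the fsGroup in the pod spec, since fsGroup is added as a supplementary group for the pod's processes. A minimal sketch; the 994 value comes from the message above, the helper names are made up.

```python
import os


def socket_group(path):
    """Return the numeric group id that owns `path`."""
    return os.stat(path).st_gid


def fs_group_matches(path, fs_group):
    """True if the pod's fsGroup equals the gid owning the socket."""
    return socket_group(path) == fs_group


if __name__ == "__main__":
    sock = "/var/run/docker.sock"
    if os.path.exists(sock):
        print("socket gid:", socket_group(sock), "matches 994:", fs_group_matches(sock, 994))
    else:
        print("no docker socket at", sock)
```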

Now to get away from using the docker socket altogether.

2020-02-14

2020-02-16

I got tired of reading the stupid guide every time I wanted to spin up Rancher on my local machine so I made a thing!

https://github.com/RothAndrew/k8s-cac/tree/master/docker-desktop/rancher

Configuration As Code for various kubernetes setups - RothAndrew/k8s-cac

Nifty. I’ll give it a whirl (as I’ve been looking for an excuse to load me up some rancher). Thanks for sharing

got it installed the other night in minikube but the rancher chart defaults to using a loadbalancer IP that doesn’t work for me. I’ll tinker with it more. Either way, thx for sharing.

hmm. For me (Docker Desktop on Mac) it created an Ingress mapped to localhost, which worked great.

I don’t think I did anything special for it to do that.

2020-02-17

2020-02-19

What’s your approach to memory and cpu requests vs limits? I set requests equal to limits for both cpu and mem and forget about it. I’d like to hear from people who use more elaborate strategies.

@Karoline Pauls Are you trying to determine what those values should be initially, or how much headroom to leave between requests and limits?

@dalekurt I generally run a single pod, which i give 1 CPU, and do yes | xargs -n1 -P <number of worker threads/processes per pod + some num just to be mean> -I {UNUSED} curl <https://servicename.clustername.companyname.com/something_heavy> and see how much memory it takes, then give it 1 CPU and this much memory per pod, both in limits and requests.
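
Setting requests equal to limits for both cpu and memory is what puts a pod in the Guaranteed QoS class. A simplified classifier for a single-container pod, as a sketch (it compares quantity strings literally, so "1" and "1000m" would not count as equal here):

```python
def qos_class(requests, limits):
    """Classify a single-container pod by its cpu/memory requests and limits."""
    if not requests and not limits:
        return "BestEffort"
    guaranteed = all(
        limits.get(res) is not None
        # An unset request defaults to the limit.
        and requests.get(res, limits.get(res)) == limits.get(res)
        for res in ("cpu", "memory")
    )
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    same = {"cpu": "1", "memory": "512Mi"}
    print(qos_class(same, same))
    print(qos_class({"cpu": "500m", "memory": "512Mi"}, {"cpu": "1500m", "memory": "512Mi"}))
```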

Was just wondering if people do something smart with these settings.

That’s pretty good. I would be interested in hearing other methods

I’d give https://github.com/FairwindsOps/goldilocks a whirl. It uses a controller to deploy the VPA in recommendation mode on labeled namespaces’ deployments to attempt to calculate required resources for pods.

Get your resource requests “Just Right”. Contribute to FairwindsOps/goldilocks development by creating an account on GitHub.

Neat! Hadn’t seen goldilocks before

@Zachary Loeber If you are in NY or ever in NY I owe you a beer

ha, maybe if I actually authored the thing. I’m just a toolbag good sir. One tip with the chart since we are talking about it, don’t sweat setting up VPA ahead of time (there isn’t a good chart for it anyway), just use the InstallVPA: true value and it will run the deployment for the VPA for you. Also, the github repo docs are a bit out of date. The actual helm repo for goldilocks is stable and at https://charts.fairwinds.com/stable

Discover & launch great Kubernetes-ready apps

We always set same value for memory as not compressible or shareable. Headroom depends on app peak usage then healthy bit more as memory is cheap. CPU moved everything to burst with 3x. Because CFS issue causing high P99, limit unnecessary throttling, drive higher usage of worker nodes, spikey nature of requests.
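
That strategy (memory request equal to limit with headroom, cpu limit at 3x the request) could be written down roughly as below. The 25% headroom default is an assumption for illustration; the message only says "healthy bit more".

```python
import math


def burstable_resources(peak_memory_mi, cpu_request_m, headroom=0.25, cpu_burst=3):
    """Memory request == limit (peak plus headroom); cpu limit = cpu_burst x request."""
    memory = f"{math.ceil(peak_memory_mi * (1 + headroom))}Mi"
    return {
        "requests": {"memory": memory, "cpu": f"{cpu_request_m}m"},
        "limits": {"memory": memory, "cpu": f"{cpu_request_m * cpu_burst}m"},
    }


if __name__ == "__main__":
    # Observed peak of 400Mi and a 100m cpu request.
    print(burstable_resources(400, 100))
```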

2020-02-20

What are you guys using for multi cluster monitoring?

Prometheus. 1x Cluster Internal Prometheus for each cluster -> External Prometheus capturing all other prometheus via federation.
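
Federation works by having the external Prometheus scrape each cluster's `/federate` endpoint with one or more `match[]` selectors. A sketch of building that URL; the hostname and the selector are placeholders, not values from this setup.

```python
from urllib.parse import urlencode


def federate_url(base_url, matchers):
    """Build a /federate URL with one match[] parameter per selector."""
    query = urlencode([("match[]", m) for m in matchers])
    return f"{base_url.rstrip('/')}/federate?{query}"


if __name__ == "__main__":
    # Placeholder in-cluster Prometheus address and series selector.
    print(federate_url("http://prometheus.cluster-a.example:9090", ['{job="kubernetes-pods"}']))
```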

Interesting article on pros/cons of various kube cluster architecture strategies: https://learnk8s.io/how-many-clusters

Hi all. Any ideas on why we get basically a 503 trying to expose the port for WebStomp with RabbitMQ? It has us perplexed. Here is the YAML;

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        field.cattle.io/creatorId: user-vnwtv
        field.cattle.io/ingressState: '{"c3RvbXAvcmFiYml0bXEtaGEvc3RvbXAudGV4dHJhcy5jb20vL3N0b21wLzE1Njc0":""}'
        field.cattle.io/publicEndpoints: '[{"addresses":["xxx.xx.xxx.x"],"port":80,"protocol":"HTTP","serviceName":"rabbitmq-ha:rabbitmq-ha-prod","ingressName":"rabbitmq-ha:stomp","hostname":"stomp.ourdomain.com","path":"/stomp","allNodes":true}]'
        nginx.org/websocket-services: rabbitmq-ha-prod
      creationTimestamp: "2020-01-29T0540Z"
      generation: 2
      labels:
        cattle.io/creator: norman
      name: stomp
      namespace: rabbitmq-ha
      resourceVersion: "1653424"
      selfLink: /apis/extensions/v1beta1/namespaces/rabbitmq-ha/ingresses/stomp
      uid: f15178c6-3d10-4202-adbd-d9d1cc123bc5
    spec:
      rules:
      - host: stomp.ourdomain.com
        http:
          paths:
          - backend:
              serviceName: rabbitmq-ha-prod
              servicePort: 15674
            path: /stomp
    status:
      loadBalancer:
        ingress:
        - ip: xxx.xx.xxx.x

It looks as though the RMQ http API used by our NodeJS code is fine as I see exchanges being created. So there is service running on that port.

Can you make this markdown or a snippet instead?

Ya my bad. The Yaml file went all wonky on mobile which is where I posted this from. I’ll post the Yaml from Mac in a snippet

I was looking at https://www.rabbitmq.com/web-stomp.html and notice it says if enabling the webstomp plugin use port 15674, also seems with TLS and SSL they are using 15673 not 15674. But maybe I am confused if this plugin is required

Using Rancher btw

How long have you been using Rancher? How do you like it?

Using Rancher for over 18months. Mixed reviews. Lot of open issues. Support is scattered. Product is getting better now though I feel they try to focus on their own products like k3s

Getting SSL was like pulling teeth.

2020-02-21

https://v1-14.docs.kubernetes.io/docs/concepts/workloads/controllers/statefulset/#parallel-pod-management wow, this makes statefulsets almost like deployments-but-with-provisioned-storage

Anyone managed to get nginx-ingress on GKE to work when both exposing custom TCP and UDP ports? It complains about LoadBalancer not being able to mix protocols

Alright, time to level up. Inviting @scorebot !

@scorebot has joined the channel

Thanks for adding me! Emojis used in this channel are now worth points.

Wondering what I can do? try @scorebot help

You can ask me things like @scorebot my score - Shows your points @scorebot winning - Shows Leaderboard @scorebot medals - Shows all Slack reactions with values @scorebot = 40pts - Sets value of reaction

anyone using kudo?

No but I did look into it out of curiosity. It has a krew plugin and only a few actual implementations but looks fairly promising for scaffolding out new operators (or that’s what I think it’s supposed to be used for….)

last time I checked it out there were only 2 apps in the repo

looks like they may have added a few though. You looking to make an operator?

we’re looking into defining a CRD to describe an opinionated “microservice” that meets a specific customer’s needs

we’re looking at it less as a way to share existing operators - so don’t mind if there’s a limited selection.

That’s sort of what drove me towards it before. Sorry for my non-answer but I definitely have interest in it if anyone else pipes up on this one.

hey - if it’s on your radar, it’s added validation in my

It just seems that there should be an easier way to create CRDs right?

I’ll revisit and try to deploy the spark operator one for the heck of it, I have a partial need for such a thing anyway

(spark on kube is awful migraine inducing yuckiness of technology….)

it’s really early in our exploration. basically, we want to be able to define something like

    buildpack: microservice # the type of application lifecycle
    deployment: { strategy: rolling }
    public: true
    healthchecks:
      liveness: /healthz
      readiness: /readyz
    replicas: [2, 10]
    port: 8080
    resources: { limits: { memory: 2g, cpu: 300m }, requests: { memory: … }}
    variables: # standard environment variables
      DEBUG: false
    environments: [ preview, prod, staging ]
    addons:
    - redis # or memcache
    - postgres # or mysql
    - s3-bucket

and it will automatically configure our pipelines for a type of microservice and setup the backing services.

we want to be untethered to the CI/CD technology (E.g. use codefresh if we want to), use helm or helmfile if we want to, and not be vendor locked into some rancherish solution - which albeit is nice - locks us into technology decisions which we don’t get to make.

we’d like to be able to use Elasticache Redis in production, while a simple redis container in preview environments.

we’d like to have the settings to connect to these automatically populated in the namespace so the service can just “mount” them as envs

so will the addons be then deployed via service catalog then (or will you abstract calls to sc rather)?

something like that. so we can deploy the operator in dev (for preview environments for example), and it would use containers for postgres and redis

or we deploy the same operator in staging and then it uses RDS and elasticache

etc

really just riffing out loud right now. very early stages.

right right, I’m following. I so wish I were in a position to offer actual help on this but it’s been on my mind as well. It seems that complex helm charts simply isn’t enough for a holistic solution

it’s a building block. i still stand by helm.

i don’t want something that (we didn’t write - so to say) that tells me how to do everything

crossplane does some abstraction of elements for cluster deployments but doesn’t address the microservice/team needs

because the minute some customer says they want X instead of Y the entire solution is null and void

I don’t NOT stand by helm, it serves a purpose for certain

so the operator-as-a-pattern is interesting for these reasons

but then maybe not as powerful?

I don’t know about less powerful, but anything that requires an operator to function inherently makes it less maneuverable? Maybe not the right word.

That being said, have you done anything with OLM at all?

A management framework for extending Kubernetes with Operators - operator-framework/operator-lifecycle-manager

Hey, so right off the top, kubernetes 1.15.x seems to be expected to install an operator that I want to run:

though it seems operator specific, zookeeper installed without issue (because of course dumb ol’ zookeeper will work….)

I imagine it as a more contextually aware version of it but I’m probably not seeing the whole picture

I’ve been wanting to build a kubernetes operator just as a learning experience, but I haven’t had a proper idea that also seems feasible for my level of programming knowledge~

everytime i think i have an idea, i find a better way to do it with built in stuff

yea, we’re always finding something else that works well enough.

I’m always looking for more glue though

we’re never going to get away from go-template+sprig though are we

erik: 110 scorebot: 40 briantai35: 40 marco803: 25 eshepelyuk: 25 zloeber: 5

2020-02-23

2020-02-25

Hello Kubernetes Experts,

We have a K8s Deployment that scales up in response to the number of messages in a queue using HPA. There is a requirement to have the HPA scale up the pods however the scaling down must be handled by some custom code. Is it possible to configure the HPA to only handle the scale-up ?

https://cloud.google.com/kubernetes-engine/docs/best-practices/enterprise-multitenancy#checklist <- A worthy checklist regardless of the cloud vendor

@curious deviant I’m reading it that you don’t want autoscaling, you want scale up. If you have the scale down being handled via custom code what is preventing you from wrapping that all into a controller of your own to handle both? I know that you can disable cluster autoscale down with an annotation now, not certain about HPA though

Thank you for your response. Writing out custom code to handle both is definitely an option. I just wanted to be sure I wasn’t missing out on anything that’s already available OOB today. This helps.

its a great question either way. I’m still digging into it a little out of personal curiosity

How does HPA get/read the metric to scale up on queue messages?

Is there a way to more deterministically say something like “For every 50 messages in the queue, spin up a pod”?

That way when it checks, it can spin down to an appropriate amount as the backlog is handled

The prometheus adapter deployment allows for feeding custom metrics into HPA

If your deployment emits the right metrics, you should be able to scale on it I’d think
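
The "one pod per 50 messages" idea matches how the HPA formula works out for an average-value target: desired replicas = ceil(total queue length / target per pod). A minimal sketch of that arithmetic, with an `allow_scale_down` switch standing in for the "scale-up only" requirement from earlier in the thread (that switch is an illustration of custom controller logic, not an HPA setting):

```python
import math


def desired_replicas(queue_length, messages_per_pod, current_replicas,
                     min_replicas=1, max_replicas=10, allow_scale_down=True):
    """Replica count for a queue backlog, clamped to [min_replicas, max_replicas]."""
    wanted = math.ceil(queue_length / messages_per_pod)
    wanted = max(min_replicas, min(max_replicas, wanted))
    if not allow_scale_down:
        # Scale-up only: never go below what is already running.
        wanted = max(wanted, current_replicas)
    return wanted


if __name__ == "__main__":
    print(desired_replicas(120, 50, current_replicas=1))
    print(desired_replicas(0, 50, current_replicas=4, allow_scale_down=False))
```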

Anyone read through this nifty guide yet? https://kubernetes-on-aws.readthedocs.io/en/latest/index.html

I understand the nature of k8s is to be able to deploy onto different underlying platforms with ease, but any gotchas for peeps that made the move from kops on AWS to EKS?

@btai Technically, if the hype is to be believed, you just went to an easier to manage cluster. I’m not going to profess to be an aws eks master but I’d say that possibly managing your own storage may be simplified by using ebs or efs within eks for your persistent volumes. On the other hand, you may see those storage costs go up if you were using local ec2 instance stores in your kops clusters (presented using something like minio) for high disk IO driven workloads and now are using EKS EBS presented storage instead.

I may be way off though and welcome corrections by smarter people in this channel

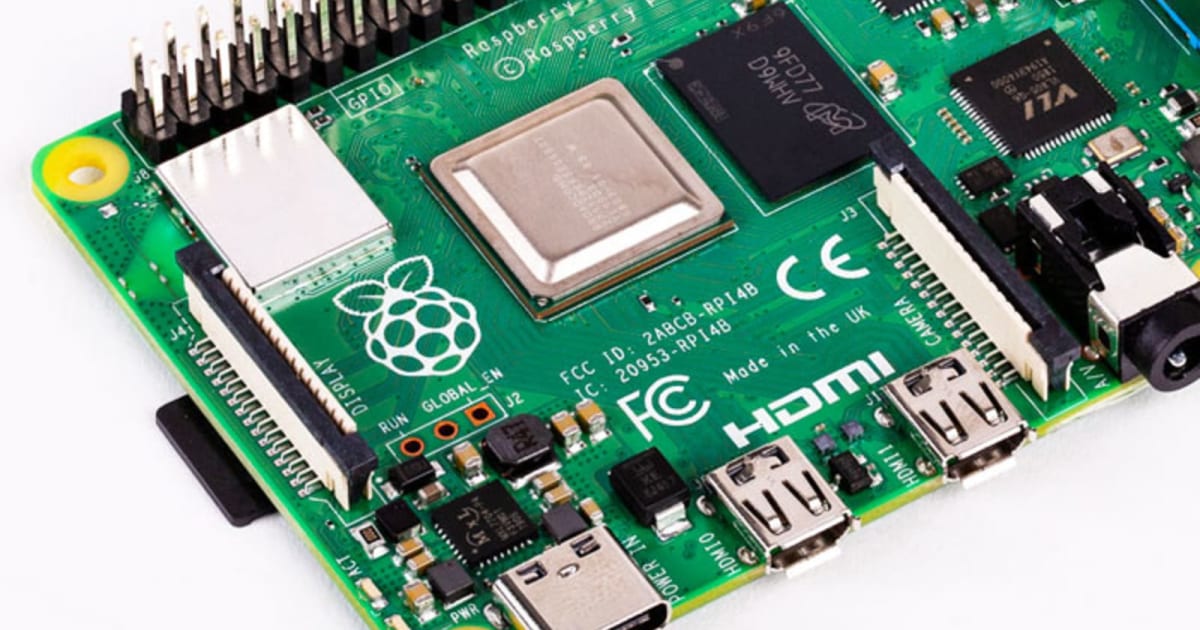

anyone running kubernetes on raspberry pi’s here ? just have few newbie questions - my intention is just for the fun/kicks on it for weekends

There’s a regular attendee of office hours who plays with this a lot, I can’t definitively remember his name though. I think maybe @dalekurt?

Hey

And @roth.andy

Sup

Cool, how many pi’s do i need ? raspberry pi 3 or should i go for the raspberry pi 4 ?

I have 6 3B’s. I wish they were 4s

The extra RAM is really nice. Once etcd and kubelet and whatever else you need is running there isn’t much room for anything real

Noted

Power Over Ethernet (PoE) is worth it. Much less hassle than having to figure out USB C power distribution

I went with kubeadm so I could have multi-master, but now that k3s supports multi master I would use that

from Zero to KUBECONFIG in < 1 min . Contribute to alexellis/k3sup development by creating an account on GitHub.

Thanks for the link

I’ve got 7 rock64 4gb and in the end back using 3 as masters and workers as VM with KVM (x86). I would just VM the lot next time (I tend to go in circles from full virt to metal and back)

The same price as the original when it launched in 2012.

2020-02-26

Hello all, I would like to set nologin in one of Deploy agent Pod so that no one can do kubectl exec --- Is there a way we can achieve this without having to look at k8s RBAC? I want to avoid k8s RBAC because we use EKS platform and not using RBAC extensively yet.

2020-02-27

Does anyone know how cluster-autoscaler finds out about expected taints of specific instance groups?

The terraform examples i know just add --register-with-taints=ondemand=true:NoSchedule to the kubelet args and in order to know about that, cluster autoscaler would have to scrape the instance setup script.

Does cluster-autoscaler even take node taints and pod tolerations into account?

I don’t know the answer to this, but maybe you could share what you want to accomplish?

sry, have a hard time remembering to start threads

afaik autoscaler does not auto-apply node taints from the last time I looked into it on aks clusters.

but I was not being extremely exhaustive on what I was doing either, I found that using taints for steering deployments was not super intuitive and leaned into using node affinities to force preferences for workload node pools/groups instead.

annotations seemed to carry over to autoscaled nodes just fine

answers there

The reason I want to understand the use-case is that it sounds like it might be related to working with spot instances.

Tries to move K8s Pods from on-demand to spot instances - pusher/k8s-spot-rescheduler

there’s another controller to manage the node taints - just struggling to remember what it was called

https://github.com/aws/aws-node-termination-handler is the other one I was thinking of

A Kubernetes Daemonset to gracefully handle EC2 instance shutdown - aws/aws-node-termination-handler

@Erik Osterman (Cloud Posse) So, since i didn’t want to play with webhooks (however they’re called), i decided to:

• add a taint and a label to ondemand nodes

• add a label to spot nodes (unused but is there for completeness)

Now, pods that must run on ondemand have:

• a toleration for the ondemand taint

• a nodeSelector targeting the ondemand label (except for daemonsets)

Pods that do not have these won’t schedule on ondemand.
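
The taint/label scheme above can be modelled with a much-simplified scheduling check. This only looks at NoSchedule taints with exact key/value tolerations plus nodeSelector, and the `lifecycle` label key is a made-up name for illustration; the `ondemand=true:NoSchedule` taint is the one mentioned earlier in the thread.

```python
def can_schedule(pod, node):
    """Simplified scheduler check: NoSchedule taints and nodeSelector only."""
    tolerated = {(t["key"], t.get("value")) for t in pod.get("tolerations", [])}
    for taint in node.get("taints", []):
        if taint["effect"] == "NoSchedule" and (taint["key"], taint.get("value")) not in tolerated:
            return False
    labels = node.get("labels", {})
    return all(labels.get(k) == v for k, v in pod.get("nodeSelector", {}).items())


ONDEMAND = {
    "labels": {"lifecycle": "ondemand"},  # hypothetical label key
    "taints": [{"key": "ondemand", "value": "true", "effect": "NoSchedule"}],
}
SPOT = {"labels": {"lifecycle": "spot"}, "taints": []}

TOLERATION = {"key": "ondemand", "value": "true"}
CRITICAL_POD = {"tolerations": [TOLERATION], "nodeSelector": {"lifecycle": "ondemand"}}
DAEMONSET_POD = {"tolerations": [TOLERATION]}  # no nodeSelector, runs everywhere
ORDINARY_POD = {}

if __name__ == "__main__":
    for name, pod in [("critical", CRITICAL_POD), ("daemonset", DAEMONSET_POD), ("ordinary", ORDINARY_POD)]:
        print(name, "ondemand:", can_schedule(pod, ONDEMAND), "spot:", can_schedule(pod, SPOT))
```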

(the “webhooks” i meant are called admission control)

I know about base ondemand capacity but I think that making sure critical workloads are scheduled on the ondemand nodes with a rescheduler would be “eventually consistent”.

also my ondemand nodes are 4 times smaller than the spot ones, they’re just to run critical things like cluster-autoscaler, alb ingress controller, metrics server, ingress merge (is it still necessary even?), job schedulers…

That’s a nice solution @Karoline Pauls! I like the approach and it makes a lot of sense the way you did it.

thanks

2020-02-28

Is anyone out there building up managed kube clusters, running a workload, then tearing them down as part of a deployment pipeline?

@btai

@Zachary Loeber i’m doing similar. but not just running a workload. our applications live on ephemeral clusters, when we are upgrading to a new k8s version we spin up a new cluster (i.e. 1.11 -> 1.12, CVEs) and for other things like helm3 migrations

@btai So you spin up the cluster, baseline config it, then kick off some deployment pipelines to shove code into it?

yep, spin up cluster with baseline “cluster services” which include logging daemonset, monitoring, etc. then kick off deployment pipelines to deploy our stateless applications to the new cluster. (and we do a route53 cutover once that is all done)

so do you prestage the vpcs and such?

thanks for your time btw

@Zachary Loeber the cluster and its vpc get provisioned together. During the provisioning stage, it gets vpc peered with other VPCs (rds vpc for example). I don’t mind chatting about this at all. We have a unique setup and I sometimes wish I had more peeps to bounce ideas off of. While it’s been great for doing things like CVE patches and cluster version upgrades (esp the ones that aren’t exactly push button, i.e. 1.11 -> 1.12), it definitely requires more creativity sometimes because many turnkey solutions for things we want to implement don’t usually take into account k8s clusters that aren’t long lived.