#office-hours (2022-02)

“Office Hours” are every Wednesday at 11:30 PST via Zoom. It’s open to everyone. Ask questions related to DevOps & Cloud and get answers! https://cloudposse.com/office-hours

https://cpco.io/slack-office-hours

Meeting password: sweetops

2022-02-01

Felix Torres has joined Public “Office Hours”

Rodrigo Quezada has joined Public “Office Hours”

2022-02-02

Ben Azoulay has joined Public “Office Hours”

@here office hours is starting in 30 minutes! Remember to post your questions here.

Mosh has joined Public “Office Hours”

Emile Fugulin has joined Public “Office Hours”

Yuri Lima has joined Public “Office Hours”

Isaac M has joined Public “Office Hours”

Jeremy Bouse has joined Public “Office Hours”

Erik Osterman (Cloud Posse) has joined Public “Office Hours”

ryan smith has joined Public “Office Hours”

Andy Roth has joined Public “Office Hours”

Jim Conner has joined Public “Office Hours”

Michael Jenkins has joined Public “Office Hours”

Vlad Ionescu has joined Public “Office Hours”

Andy Miguel (Cloud Posse) has joined Public “Office Hours”

Luis Juarez has joined Public “Office Hours”

Zachary Loeber has joined Public “Office Hours”

Matt Calhoun has joined Public “Office Hours”

David Hawthorne has joined Public “Office Hours”

Andre Bindewald has joined Public “Office Hours”

Justean Giger has joined Public “Office Hours”

wasim k has joined Public “Office Hours”

Shaun Wang has joined Public “Office Hours”

Marc Tamsky has joined Public “Office Hours”

Oliver Schoenborn has joined Public “Office Hours”

Mauricio Wyler has joined Public “Office Hours”

Question I have, if we have time: given the experience Cloud Posse has as an open-source-first company, what advice do you have for new startups with open source products?

Sharif N has joined Public “Office Hours”

Benjamin Smith has joined Public “Office Hours”

Tim Gourley has joined Public “Office Hours”

Matteo Migliaccio has joined Public “Office Hours”

Mohammed Almusaddar has joined Public “Office Hours”

Mayur Sinha has joined Public “Office Hours”

Hao Wang has joined Public “Office Hours”

Jim Park has joined Public “Office Hours”

links from today’s session:

• https://slack.cloudposse.com

• https://thehackernews.com/2022/01/german-court-rules-websites-embedding.html

• https://github.com/earthly/earthly

• https://github.com/chriskinsman/github-action-dashboard

• https://www.cloud.service.gov.uk

Matt Gowie has joined Public “Office Hours”

Allen Lyons has joined Public “Office Hours”

Bhavik Patel has joined Public “Office Hours”

Chocks Subramanian has joined Public “Office Hours”

Tony Scott has joined Public “Office Hours”

Rodrigo Quezada has joined Public “Office Hours”

Eric Berg has joined Public “Office Hours”

Thank you for your patience while the service team reviewed your feedback.

The team has reversed the automatic enabling of GuardDuty EKS Protection for customers, and you will need to explicitly enable EKS Protection once that is done.

For current Amazon GuardDuty customers AWS will no longer enable by default GuardDuty for EKS Protection. GuardDuty for EKS Protection is the new container security coverage for Amazon Elastic Kubernetes Service (EKS). It was introduced on January 26, 2022 and allows GuardDuty to continuously monitor EKS workloads for suspicious activity, such as API operations performed by known malicious or anonymous users, misconfigurations that can result in unauthorized access to Amazon EKS clusters, and patterns consistent with privilege-escalation techniques.

All existing GuardDuty customers that currently have EKS Protection enabled will remain in a free usage period until Monday February 7, 2022, at which time GuardDuty for EKS Protection will no longer be on, and will remain off by default. Customers can then choose to re-enable GuardDuty for EKS Protection at the time of their choosing with a few clicks in the Amazon GuardDuty console or through the APIs. All accounts that enable GuardDuty for EKS Protection will receive 30 days of free usage and an estimated spend will be available in the GuardDuty console to help with planning purposes after the free period expires. After the free usage period, GuardDuty for EKS Protection will continue to monitor EKS workloads and can be disabled at any time. All EKS Protection security findings generated between January 26, 2022 and February 7, 2022 will be available for review for the next 90 days.

Because many customers have asked for a way to opt-in to an always-on enablement for new security coverage, later this year there will be a new feature introduced in GuardDuty that will allow customers to opt in to all future service coverage of GuardDuty.

The team will also look into the way this kind of information is communicated to the contacts on the account, to make sure the relevant information is shared with the correct billing preferences on file.

Florain Drescher has joined Public “Office Hours”

guardduty_v2 on the recent log4j2 https://aws.amazon.com/blogs/security/using-aws-security-services-to-protect-against-detect-and-respond-to-the-log4j-vulnerability/

Overview In this post we will provide guidance to help customers who are responding to the recently disclosed log4j vulnerability. This covers what you can do to limit the risk of the vulnerability, how you can try to identify if you are susceptible to the issue, and then what you can do to update your […]

Jim Conner has joined Public “Office Hours”

Contribute to inception-health/otel-export-trace-action development by creating an account on GitHub.

I just got this notice of ARM support from Alert Logic…been asking for over a year.

About ARM processor – we currently support it with our MDR platform and we also support it with our remote collectors. Please see links below.

<https://docs.alertlogic.com/requirements/agent.htm>

<https://docs.alertlogic.com/prepare/remote-log-collector-linux.htm?Highlight=arm>

Daniel Akinpelu has joined Public “Office Hours”

wasim k has joined Public “Office Hours”

Engineering, DevOps & Cloud Computing

Sherif Abdel-Naby has joined Public “Office Hours”

Regarding Open Source, if it’s not Core to the business, participating in the broader Open Source community has far reaching implications for making the baseline better for everyone!

Stelios L has joined Public “Office Hours”

Carlos Abreu has joined Public “Office Hours”

2022-02-07

Does it make sense to run terraform/CDK deployments in the same pipeline as your app’s ci/cd? We use CDK and it’s inside our monorepo, but wanted…

In short yes - but only where the unit deployment can be a self contained unit. Eg Lambda or ECS based deployments.

That assumes the general infrastructure or platform already exists and is deployed, so you can deploy the app on top of it.

• static infra

• dynamic infra + app codebase

I think I know you, Alan? GDN, right?

Oh, just realised that name is familiar. Long time no see! Yes, that’s correct (GDN).

Also relates to a conversation a couple of weeks ago: https://sweetops.slack.com/archives/CHDR1EWNA/p1643223964566839?thread_ts=1643223688.329659&cid=CHDR1EWNA

What are your thoughts on having terraform code in the application repository for resources specific to the application and more static resources like databases and VPCs in their own repo?

2022-02-08

Two new cool announcements from AWS for discussion:

• https://aws.amazon.com/blogs/aws/new-for-app-runner-vpc-support/

• https://aws.amazon.com/blogs/aws/new-replicate-existing-objects-with-amazon-s3-batch-replication/

With AWS App Runner, you can quickly deploy web applications and APIs at any scale. You can start with your source code or a container image, and App Runner will fully manage all infrastructure including servers, networking, and load balancing for your application. If you want, App Runner can also configure a deployment pipeline for […]

Starting today, you can replicate existing Amazon Simple Storage Service (Amazon S3) objects and synchronize your buckets using the new Amazon S3 Batch Replication feature. Amazon S3 Replication supports several customer use cases. For example, you can use it to minimize latency by maintaining copies of your data in AWS Regions geographically closer to your […]

2022-02-09

@here office hours is starting in 30 minutes! Remember to post your questions here.

I’ll be joining a little late after I get out of my CCB meeting which is unfortunately scheduled to start at the same time

Q: what pitfalls might I encounter if I develop a feature by deploying live resources namespaced by my current git branch?

good one

very good question

btw, can you clarify what you mean by “deploying live resources”

Deploying terraform-defined infrastructure to a public cloud.

in other words, deploying stuff that’s hooked up to a credit card

Terraform module to manage AWS Budgets. Contribute to cloudposse/terraform-aws-budgets development by creating an account on GitHub.

rebuy-de/aws-nuke: Nuke a whole AWS account and delete all its resources.
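One pitfall with branch-namespaced resources is that git branch names are not always valid resource names: they can contain slashes and uppercase letters, and they can be longer than many cloud naming limits allow. Here is a minimal sketch of a sanitizer, assuming a hypothetical helper `branchNamespace` and an arbitrary 20-character cap (neither comes from the discussion above):

```javascript
// Hypothetical helper: turn a git branch name into a short, lowercase,
// hyphen-separated prefix that is safe to embed in most resource names.
// The 20-character default is an arbitrary assumption, not an AWS limit.
function branchNamespace(branch, maxLength = 20) {
  const slug = branch
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, '-') // slashes, underscores, dots become hyphens
    .replace(/^-+|-+$/g, ''); // strip leading and trailing hyphens
  if (slug.length === 0) {
    throw new Error(`branch "${branch}" has no usable characters`);
  }
  // Truncate, then trim any hyphen exposed by the cut.
  return slug.slice(0, maxLength).replace(/-+$/g, '');
}

console.log(branchNamespace('feature/JIRA-123_add-S3-bucket'));
```

Note that truncation means two long branch names sharing a prefix can map to the same namespace, which is itself a pitfall worth guarding against (for example by appending a short hash).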

Q1: is it possible to set cloudfront to cache an image only after it has responded to the client request? The normal behavior is to cache the image before it’s sent down to the client, but I’d like to delay that until after responding to the client if possible.

@matt @Yonatan Koren

I don’t think by default, but with lambda at edge you should be able to do this…

I’d ask what the use case is?

Like do they believe there’s latency in the caching operation?

I don’t think it’s possible even with Lambda@Edge; you can customize each step of the CDN flow, but you can’t change the order AFAIK

Specific CloudFront events can be used to trigger execution of a Lambda function.

Yes we’re reasonably sure the caching operation has latency.

You’re right @Yonatan Koren it’s a pull-through cache, so I don’t think you can change the order of operations

Q2: is it possible to set 2 origins (both s3 buckets) as part of a cloudfront behavior? The idea is that if a file is not present in one origin, the other origin is checked.

A failover S3 origin? Yes

Terraform module to easily provision CloudFront CDN backed by an S3 origin - terraform-aws-cloudfront-s3-cdn/main.tf at 013262a1fb9156475904ab7ca7a2f61e8534ba80 · cloudposse/terraform-aws-cloudfron…
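For reference, a failover S3 origin is expressed in CloudFront as an origin group with a primary and a secondary member. The following is a rough sketch of the `OriginGroups` portion of a distribution config as a plain JavaScript object. The function name, the origin IDs, and the choice of 403/404 as failover status codes are illustrative assumptions; the cache behavior would then target the group ID instead of a single origin.

```javascript
// Sketch only: builds the OriginGroups structure of a CloudFront
// DistributionConfig. IDs and status codes are illustrative assumptions.
function failoverOriginGroup(groupId, primaryOriginId, fallbackOriginId, statusCodes = [403, 404]) {
  return {
    Quantity: 1,
    Items: [
      {
        Id: groupId,
        // CloudFront retries against the second member when the first
        // member answers with one of these status codes.
        FailoverCriteria: {
          StatusCodes: { Quantity: statusCodes.length, Items: statusCodes },
        },
        Members: {
          Quantity: 2,
          Items: [{ OriginId: primaryOriginId }, { OriginId: fallbackOriginId }],
        },
      },
    ],
  };
}

const originGroups = failoverOriginGroup('s3-failover', 'bucket1-origin', 'bucket2-origin');
console.log(JSON.stringify(originGroups, null, 2));
```

Including 403 matters for S3 origins because S3 answers with 403 rather than 404 for a missing key when the caller is not allowed to list the bucket.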

Hi folks, just following up on this question I asked during the last call. I’m getting an AccessDenied error when I try to pull from the fallback bucket. I’ve made the fallback bucket publicly accessible, confirmed that the object being accessed exists (apparently s3 sometimes gives AccessDenied errors if the object doesn’t exist in the bucket) and also updated the lambda’s execution role to grant the lambda access to the bucket. The role has 3 policies, as shown below (bucket1 is a bucket the lambda already pulls from, bucket2 is the bucket I want to use as a fallback and which is throwing the AccessDenied error):

- the AWS managed arn:aws:iam::aws:policy/AWSXRayDaemonWriteAccess, specifically:

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": [ "xray:PutTraceSegments", "xray:PutTelemetryRecords", "xray:GetSamplingRules", "xray:GetSamplingTargets", "xray:GetSamplingStatisticSummaries" ], "Resource": [ "*" ] } ] }

- the AWS managed arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole, specifically:

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": [ "logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents" ], "Resource": "*" } ] }

- our custom policy, specifically:

{ "Version": "2012-10-17", "Statement": [ { "Action": [ "logs:*" ], "Resource": "arn:aws:logs:us-east-1:*:log-group:/aws/lambda/*:*:*", "Effect": "Allow" }, { "Action": [ "s3:GetObject" ], "Resource": [ "arn:aws:s3:::us-east-1-bucket1/*", "arn:aws:s3:::us-east-1-bucket2/*" ], "Effect": "Allow" } ] }

What else could I be missing?

Hey @Naija Ninja, there’s a policy you can add on the bucket itself to give the lambda function access to the bucket objects. Please check whether that policy exists. Public ACLs alone do not always do the trick.

@Naija Ninja check both the bucket policy (as mentioned above) and bucket ACLs

Control ownership of new objects that are uploaded to your Amazon S3 bucket and disable access control lists (ACLs) for your bucket using S3 Object Ownership.

Thanks guys, my code was using s3’s listObjects method so that’s what was unauthorized. I needed to add the s3:ListBucket action to the policy to make it work.
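A detail that often trips people up with this fix: `s3:ListBucket` is granted on the bucket ARN itself, while `s3:GetObject` is granted on the object ARNs (`/*`). A small sketch of the two statements, built as a JavaScript object; the function name is made up for illustration and the bucket names are the placeholders from the policy above:

```javascript
// Sketch: read-only S3 access for a Lambda execution role.
// ListBucket applies to the bucket, GetObject applies to objects in it.
function readOnlyBucketPolicy(bucketNames) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: ['s3:ListBucket'],
        Resource: bucketNames.map((name) => `arn:aws:s3:::${name}`),
      },
      {
        Effect: 'Allow',
        Action: ['s3:GetObject'],
        Resource: bucketNames.map((name) => `arn:aws:s3:::${name}/*`),
      },
    ],
  };
}

const policy = readOnlyBucketPolicy(['us-east-1-bucket1', 'us-east-1-bucket2']);
console.log(JSON.stringify(policy, null, 2));
```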

However, the latest problem is that the lambda returns an error when I try to set the video as the body of the response. The error is: “ERROR Validation error: The Lambda function returned an invalid json output, json is not parsable.” This is what my code does:

    const videoFile = await getVidS3(fileName);
    response.status = 200;
    response.headers['Content-Type'] = [
      { key: 'Content-Type', value: videoFile.contentType },
    ];
    response.headers['Content-Disposition'] = [
      { key: 'Content-Disposition', value: 'inline' },
    ];
    response.headers['Cache-Control'] = [
      { key: 'Cache-Control', value: 'public,max-age=1' },
    ];
    response.headers['Access-Control-Allow-Methods'] = [
      {
        key: 'Access-Control-Allow-Methods',
        value: 'GET,PUT,POST,DELETE',
      },
    ];
    response.statusDescription = 'OK';
    response.body = videoFile.video;

and I’ve tried modifying the last line to this:

    const base64Vid = videoFile.video.toString('base64');
    response.body = base64Vid;
    response.bodyEncoding = 'base64';

but the problem persists. videoFile.video is the buffer of the video returned by s3. Also worth mentioning that the videos are not hitting the 6 MB payload limit for lambda. The video I’m using to test is 873.9 KB in size.

Any ideas what this problem could be?

Hey @Naija Ninja, a couple of things, assuming the video is a binary file: binary content breaks the response body because the response is JSON.

• you will need to base64-encode the file contents in response.body and set response.Base64Encoded: true

• beware that AWS imposes a limit on how much data the Lambda function can return in a response. The current limit is 1 MB; if the whole response JSON structure is bigger than 1 MB, you will get an error

Thanks @Constantine Kurianoff It appears the size is my problem. The video itself doesn’t hit the limit, but converting it to base64 causes it to exceed the limit. Are there known ways to work around this problem?
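The arithmetic behind this is worth spelling out: base64 emits 4 characters for every 3 input bytes, so the encoded body is roughly a third larger than the file. The sketch below assumes the 1 MB figure quoted above means 1,048,576 bytes and ignores header overhead; `buildBinaryResponse` is a hypothetical helper. For a Lambda@Edge generated response the encoding flag is `bodyEncoding: 'base64'`, as in the earlier snippet.

```javascript
// Base64 output length: 4 characters per 3 input bytes, rounded up.
function base64Length(byteLength) {
  return 4 * Math.ceil(byteLength / 3);
}

// Assumption: "1 MB" is taken as 1,048,576 bytes, headers not counted.
const ASSUMED_LIMIT_BYTES = 1024 * 1024;

// Hypothetical helper: build a Lambda@Edge style response for binary
// content, refusing bodies that would exceed the assumed limit.
function buildBinaryResponse(buffer, contentType) {
  const encodedLength = base64Length(buffer.length);
  if (encodedLength > ASSUMED_LIMIT_BYTES) {
    throw new Error(
      `base64 body is ${encodedLength} bytes, over the assumed ${ASSUMED_LIMIT_BYTES}-byte limit`
    );
  }
  return {
    status: '200',
    statusDescription: 'OK',
    // Header map keys are lowercase, following the AWS examples.
    headers: { 'content-type': [{ key: 'Content-Type', value: contentType }] },
    body: buffer.toString('base64'),
    bodyEncoding: 'base64',
  };
}

// The 873.9 KB test video from the thread, taking 1 KB as 1024 bytes.
const videoBytes = Math.round(873.9 * 1024);
console.log(videoBytes, base64Length(videoBytes), ASSUMED_LIMIT_BYTES);
```

Under these assumptions the 873.9 KB file encodes to about 1.19 million characters, which is over the limit even though the raw file is under it.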

Check whether an S3 presigned URL works for your use case

https://docs.aws.amazon.com/AmazonS3/latest/userguide/ShareObjectPreSignedURL.html

Describes how to set up your objects so that you can share them with others by creating a presigned URL to download the objects.

Hmm thanks for this but it won’t be ideal in my case. I need the video bytes themselves because it’s getting played in an html <video> tag.

@Naija Ninja have you considered some of the AWS services specifically designed for video processing and streaming? I’m not familiar with them but I know they exist. Maybe they cover your use-case better than CloudFront.

Hey @Yonatan Koren thanks for the suggestion and sorry for the late response, had to switch focus to some other tasks and put this on the back burner for a bit. I’ve done some reading on other options but I’m reluctant to make sweeping changes because AWS is not my forte. I tried revisiting your suggestion for a failover s3 origin but ran into a problem with deploying new changes to the cloudformation stack. It always fails to update and I think it’s stuck in an unrecoverable state. My organization’s plan doesn’t have access to aws support so I can’t ask them to fix it. Do you have any idea how to address such a problem? I posted about it on stack overflow in case you want to get some more details.

Update There are other errors that show up after this error, but it seems clear to me (based on the timestamps, descriptions and affected resources) that they’re just failures that happen because t…

Andy Miguel (Cloud Posse) has joined Public “Office Hours”

Yonatan Koren (Cloud Posse) has joined Public “Office Hours”

Vlad Ionescu has joined Public “Office Hours”

Amer Zec has joined Public “Office Hours”

Steven Vargas has joined Public “Office Hours”

Erik Osterman (Cloud Posse) has joined Public “Office Hours”

Mike Crowe has joined Public “Office Hours”

Alexandr Vorona has joined Public “Office Hours”

Ray Myers has joined Public “Office Hours”

Isaac M has joined Public “Office Hours”

Mikey Carr has joined Public “Office Hours”

venkata mutyala has joined Public “Office Hours”

Sharif N has joined Public “Office Hours”

Zachary Loeber has joined Public “Office Hours”

Andy Roth has joined Public “Office Hours”

David Hawthorne has joined Public “Office Hours”

Mauricio Wyler has joined Public “Office Hours”

Tennison Yu has joined Public “Office Hours”

Darrin Freeman has joined Public “Office Hours”

Jim Park has joined Public “Office Hours”

Ralf Pieper has joined Public “Office Hours”

Matt Calhoun has joined Public “Office Hours”

Ben Azoulay has joined Public “Office Hours”

Steven Kalt has joined Public “Office Hours”

Guilherme Borges has joined Public “Office Hours”

Mohammed Almusaddar has joined Public “Office Hours”

Sherif Abdel-Naby has joined Public “Office Hours”

Paul Bullock has joined Public “Office Hours”

Matt Gowie has joined Public “Office Hours”

Andrew Thompson has joined Public “Office Hours”

Matteo has joined Public “Office Hours”

Isa has joined Public “Office Hours”

Andre Bindewald has joined Public “Office Hours”

Naija ninja has joined Public “Office Hours”

Bryan Dady has joined Public “Office Hours”

Marcos Soutullo has joined Public “Office Hours”

Benjamin Smith has joined Public “Office Hours”

Eric Berg has joined Public “Office Hours”

Patrick Joyce has joined Public “Office Hours”

PePe Amengual has joined Public “Office Hours”

Jeremy Bouse has joined Public “Office Hours”

Jack Louvton has joined Public “Office Hours”

Shaun Wang has joined Public “Office Hours”

Bref is a framework to write and deploy serverless PHP applications on AWS Lambda.

Andre Bindewald has joined Public “Office Hours”

stelios L has joined Public “Office Hours”

Michael Jenkins has joined Public “Office Hours”

Oh, that’s a good one:)

Oliver Schoenborn has joined Public “Office Hours”

stelios L has joined Public “Office Hours”

Arjun Venkatesh has joined Public “Office Hours”

Matteo has joined Public “Office Hours”

stelios L has joined Public “Office Hours”

A recent project that supposedly solves this: https://github.com/Pix4D/terravalet

A tool to help with some Terraform operations. Contribute to Pix4D/terravalet development by creating an account on GitHub.

However, after reading through their README… I’m not sold.

A state move bash script borrowed from @matt https://gist.github.com/jim80net/1a81ebfd687c36283046d34113dbe96e

Such bash script hate, @Matt Gowie

Hahah don’t get me wrong, I write way too much bash. But doesn’t mean I’m happy about it.

Automated refactoring for Terraform. Contribute to craftvscruft/tfrefactor development by creating an account on GitHub.

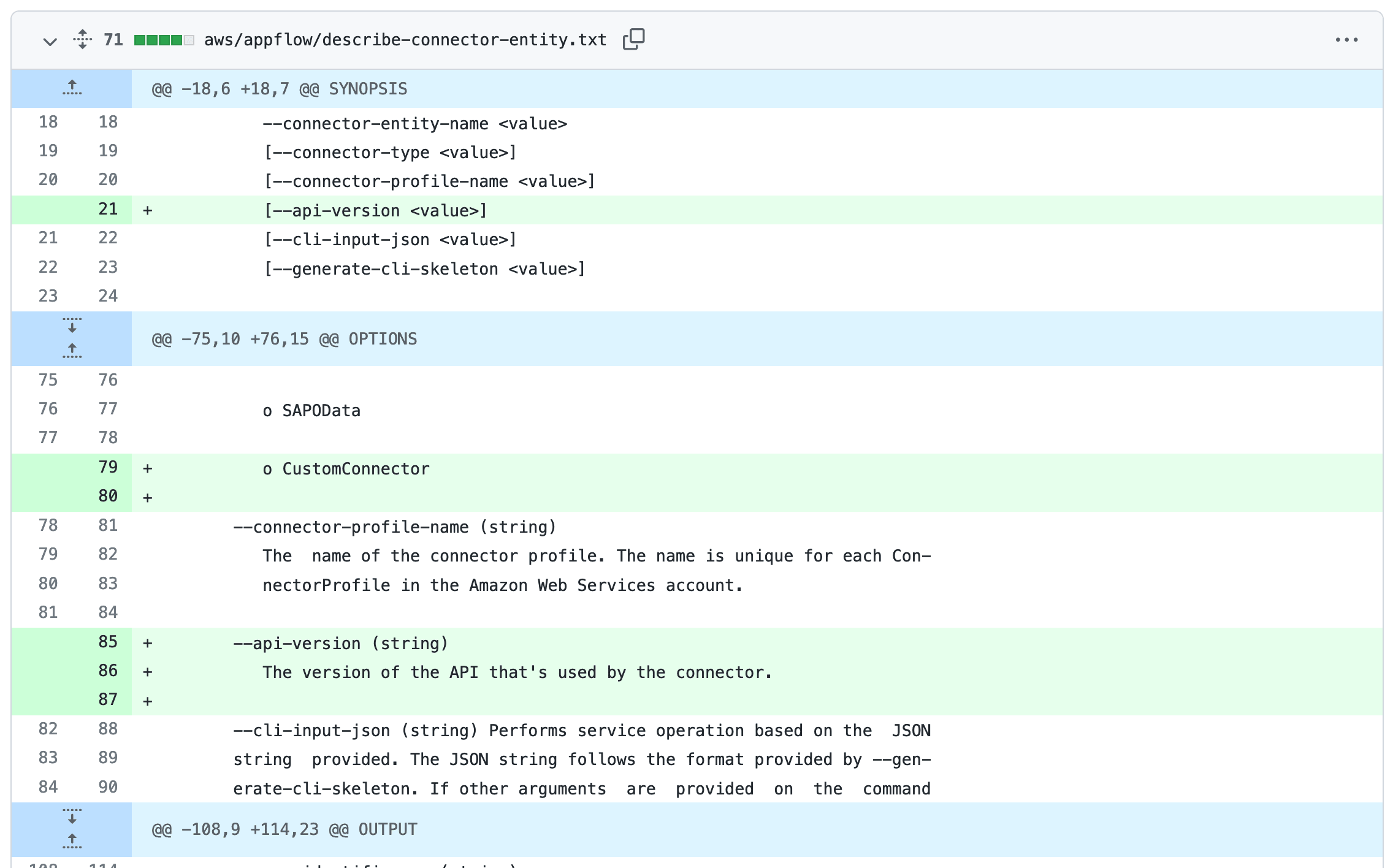

Did somebody mention this on the zoom call ? https://simonwillison.net/2022/Feb/2/help-scraping/

I’ve been experimenting with a new variant of Git scraping this week which I’m calling Help scraping. The key idea is to track changes made to CLI tools over time …

Initial thread announcing moved block with discussion on cross-statefile moved idea. https://discuss.hashicorp.com/t/request-for-feedback-config-driven-refactoring/30730/6

Thanks for pointing that out. I’ve restored the deleted branch so the doc links in the first post should work again. We’ll update the links later.

I can’t seem to find the GH issue… And if I don’t soon then I’ll create one.

Another cross-statefile move bash script, but more atmos / GitOps focused: https://gist.github.com/Gowiem/c5245cea0256598f00fd03f0ce43e5f8

2022-02-10

Has anyone found a tool that can facilitate mass migration of data from one tier of Glacier to the other?

If this is something that is going on today’s agenda, if possible please move it towards the end. I will be joining late

2022-02-11

2022-02-13

2022-02-14

2022-02-15

Slightly off-topic question (need not be an office-hours question): we have a pen test finding which states that certificate pinning should be implemented. As I understand it, there are different strategies regarding which certificates in the chain to pin, each with different effectiveness and different migration/rotation complications. What are people doing in the wild with respect to pinning for ACM-generated certificates?

I feel like this is something that should be considered optional. I get it, I’d prefer pinned certs, but it’s a big lift.
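To make the "which thing to pin" choice concrete: one common strategy is to pin a hash of the public key (SPKI) rather than the whole certificate, so the pin survives a reissue as long as the key pair stays the same. Here is a minimal Node.js sketch of computing such a pin. It uses a throwaway key pair as a stand-in for a real certificate's key, and says nothing about how ACM handles keys on renewal.

```javascript
const crypto = require('node:crypto');

// SPKI pin: base64(SHA-256(DER-encoded SubjectPublicKeyInfo)).
function spkiPin(publicKey) {
  const der = publicKey.export({ type: 'spki', format: 'der' });
  return crypto.createHash('sha256').update(der).digest('base64');
}

// Throwaway key pair, used here only so the example runs on its own.
const { publicKey } = crypto.generateKeyPairSync('ec', { namedCurve: 'prime256v1' });
const pin = spkiPin(publicKey);
console.log(`sha256/${pin}`);
```

For a real certificate in PEM form, `new crypto.X509Certificate(pem).publicKey` should give a key object that can be passed to the same function.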

2022-02-16

@here office hours is starting in 30 minutes! Remember to post your questions here.

Heard in a previous Office Hours some discussion of giving the infra deploy pipeline full admin in AWS vs fine-grained permissions that seem more secure but troublesome to manage. Unfortunately I can’t join today but if there’s a past video or other resource on the tradeoffs please link, or I’ll ask next time.

Very late talking point.

• In your centralized logging system (ELK/Loki), how do you deal with a spike of logs that overwhelms your pipeline? Do you drop logs? How valuable is each log line to your team?

Or, to rephrase: what SLA do you give to teams when it comes to logging pipelines?
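As one illustration of the "drop logs" option, here is a toy bounded buffer that discards the oldest lines when a spike exceeds its capacity and keeps a count of what it dropped, so the loss is at least visible. This is only a sketch of the idea with a made-up class name, not how ELK or Loki implement backpressure.

```javascript
// Toy load-shedding buffer: keeps at most `capacity` lines, drops the
// oldest line on overflow, and counts drops so they can be reported.
class BoundedLogBuffer {
  constructor(capacity) {
    if (!Number.isInteger(capacity) || capacity < 1) {
      throw new RangeError('capacity must be a positive integer');
    }
    this.capacity = capacity;
    this.lines = [];
    this.dropped = 0;
  }

  push(line) {
    if (this.lines.length >= this.capacity) {
      this.lines.shift(); // shed the oldest line
      this.dropped += 1;
    }
    this.lines.push(line);
  }

  // Remove and return up to `max` buffered lines, oldest first.
  drain(max = this.lines.length) {
    return this.lines.splice(0, max);
  }
}

const buffer = new BoundedLogBuffer(3);
for (let i = 1; i <= 5; i += 1) buffer.push(`line ${i}`);
console.log(buffer.drain(), buffer.dropped);
```

Whether to drop oldest, drop newest, or sample is exactly the kind of decision an SLA for the pipeline would need to state.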

Kerri Rist (Cloud Posse) has joined Public “Office Hours”

wasim k has joined Public “Office Hours”

Andy Miguel (Cloud Posse) has joined Public “Office Hours”

Christopher Picht has joined Public “Office Hours”

Ralf Pieper has joined Public “Office Hours”

Jim Park has joined Public “Office Hours”

Erik Osterman (Cloud Posse) has joined Public “Office Hours”

Vlad Ionescu has joined Public “Office Hours”

Sherif Abdel-Naby has joined Public “Office Hours”

Martin Dojcak has joined Public “Office Hours”

Ben Azoulay has joined Public “Office Hours”

Matt Gowie has joined Public “Office Hours”

dario erregue has joined Public “Office Hours”

Phil Boyd has joined Public “Office Hours”

Isaac M has joined Public “Office Hours”

Andrew Bost has joined Public “Office Hours”

Michael Padgett has joined Public “Office Hours”

Allen Lyons has joined Public “Office Hours”

Amer Zec has joined Public “Office Hours”

Houman Jafarnia has joined Public “Office Hours”

Emem has joined Public “Office Hours”

Darrin Freeman has joined Public “Office Hours”

Maged Abdelmoeti has joined Public “Office Hours”

Ben Azoulay has joined Public “Office Hours”

PePe Amengual has joined Public “Office Hours”

james has joined Public “Office Hours”

Jim Conner has joined Public “Office Hours”

I was referring to this comment regarding rumor of AWS 4.0 migration tooling from hashi:

We are planning to spend some time next week investigating new approaches to migration tooling and will follow up with the results of our investigation.

Hey y’all,

Thank you for taking the time to raise this issue, and for everyone who has reacted to it so far, or linked to it from other issues. It’s important for us to hear this kind of feedback, and the cross-linking that several folks have done really helps to collect all of this information in one place. There are some great points being made here, and we wanted to take time to address each of them and to provide more information around some of the decisions that were made in regards to the S3 bucket refactor. We recognize that this is a major change to a very popular resource, and acknowledge that a change this large was bound to cause headaches for some. Ultimately, we hope that the information provided here will help give a better idea of why we felt it was necessary to do so.

A bit of background

The aws_s3_bucket resource is one of the oldest and most used resources within the AWS provider. Since its inception, the resource has grown significantly in functionality, and therefore complexity. Under the hood, this means that a single configuration is handled by a myriad of separate API calls. This not only adds to the difficulty of maintaining the resource by way of it being a large amount of code, but also means that if a bug is inadvertently introduced anywhere along the line, the entire resource is affected.

This monolithic approach has been noted as potentially problematic by maintainers and practitioners alike for quite some time, with a number of issues being raised going back to at least 2017 (#4418, #1704, #6188, #749; all of which were referenced in our planning for this change). In addition to these types of requests, we’ve seen numerous bugs over time that were either a direct result of or exacerbated by the former structure of the resource; for example #17791.

The idea of separating the resource into several distinct/modularized resources also provides the opportunity to more quickly adapt to changes and features introduced to S3, with the added benefit of easier testing to help ensure that bugs are fewer and less disruptive.

With this information in mind, we knew that changes were needed in order to ensure the long-term viability and resilience of managing the lifecycle of S3 buckets within the provider, and to address the desires of the community at large.

Paths we considered

It’s important that we note that the path that we landed on for the 4.0 release was not the only option that we considered. In the interest of transparency, we’d like to discuss some of the other options that we considered, and why we ultimately decided against them.

Alternative Option 1: Remove a subset of S3 bucket resource arguments and create new S3 bucket related resources

We considered the more aggressive approach of removing a subset of the root-level arguments and introducing additional S3 bucket related resources to replace them. This would have forced practitioners to refactor their configurations immediately before proceeding. Due to the drastic impact this change would have, it was ultimately decided that this was not the right approach.

Alternative Option 2: Move existing S3 bucket resource to a new resource and refactor S3 bucket resource

This was an approach that was mentioned here already as a suggested alternate approach, and was something that we considered while planning for this major release. This option would imply that the existing schema would be maintained, but moved to a new resource in the provider, e.g. aws_s3_bucket_classic, while the aws_s3_bucket resource was moved to the new refactor with the separate resources that were ultimately introduced in 4.0. This would create two separate resources for managing S3 buckets in different manners, with future support/backporting for the aws_s3_bucket_classic resource ending once the major version was released.

Going this route would mean that practitioners would have needed to either adopt the new aws_s3_bucket and associated resources standards (as is needed with the path we ultimately chose) or go the route of moving all existing instances of aws_s3_bucket to aws_s3_bucket_classic in the state. The latter of which would involve the use of terraform state rm and terraform import.

Adopted Option: Deprecate subset of S3 Bucket resource arguments, marking them as Computed (read-only) until the next major milestone, and create new S3 bucket related resources

The approach we decided was the least impactful of the options given the downsides of the approaches outlined above, and after discussing the options with a number of practitioners, including large enterprise customers with significant Terraform adoption. Much like alternative option 2, and as has been noted here, this does still introduce the need to import resources and make adjustments to configurations long-term, but was intended to allow as much functionality to be retained as was possible while still introducing the necessary refactor. This approach also allowed us an opportunity that option 2 did not: the ability to leave the “deprecated” attributes in place until version 5.0.0, allowing for a longer deprecation period.

With the above in mind, we want to take the opportunity to address the use of “deprecate” here. We noted in the pinned issue detailing the changes:

for the purpose of this release, this equates to marking previously configurable arguments as read-only/computed and with a Deprecated message

This was not reflected in the blog post about the release (something we’re working to address), and we recognize that this doesn’t necessarily reflect what “deprecated” means in the software world. The thought here is that this would not break configurations, but rather that there would be no drift detection for computed attributes.

We will update the blog post to reflect the language in our pinned issue and clear up any inconsistencies.

Migration Tooling

Another consideration that we took into account whilst working on this, that has been mentioned here as well, is the idea of tooling to help aid the transition between the versions. In speaking with users/practitioners while trying to determine the “correct” course of action, the general feedback we received was that the thought of using some type of script to modify large state files, particularly those stored in Terraform Enterprise or Terraform Cloud, was an undesirable path. Manipulating state outside of Terraform has inherent risks associated with it, including loss of tracked resources, which may lead to loss of money, time, or even compromises to the security of infrastructure. In addition, migration is a two part problem; the state and the configuration. This multi-part problem would have necessitated a significantly more complex set of tooling, leading to even greater possibilities for errors. Due to the significant risk associated with it, this ultimately led to the decision to not include migration tooling.

Given the feedback we’ve received so far from the community after the release, we’re beginning to discuss whether or not this is a position that we should reconsider. It’s too early in those discussions to say what the outcome will be, but we feel it’s important to note that we are not shutting down the idea based solely on our preconceived notions. We are planning to spend some time next week investigating new approaches to migration tooling and will follow up with the results of our investigation.

Moving forward

We recognize that this was a big change and there are, understandably, que…

David Hawthorne has joined Public “Office Hours”

Mauricio Wyler has joined Public “Office Hours”

Shaun Wang has joined Public “Office Hours”

Vigneshkumar Sadasivam has joined Public “Office Hours”

Ben Smith (Cloud Posse) has joined Public “Office Hours”

Anere Faithful has joined Public “Office Hours”

Andy Roth has joined Public “Office Hours”

Michael Bottoms has joined Public “Office Hours”

Tony Scott has joined Public “Office Hours”

Yusuf has joined Public “Office Hours”

Steven Vargas has joined Public “Office Hours”

Please, has anyone had to deal with uploading and offloading child accounts? I had over 50 accounts to create on New Relic and I had to add them manually in the UI. Another downside I faced with New Relic was that when I needed to delete an account, I had to email my New Relic account officer to remove it on their end, because even after I deleted it on my side it was somehow cached.

We manage our DataDog and Opsgenie accounts with Terraform and it’s pretty helpful. Also sets up team membership and escalations etc

So we add a user to one data file and the accounts get created / deleted
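A minimal sketch of the idea behind that workflow, assuming the data file boils down to a list of user names: compare the desired list with what already exists and derive what to create and what to delete. The function name and the sample users are hypothetical; in the setup described above, Terraform does this reconciliation itself.

```python
def plan_account_changes(desired_users, existing_users):
    """Return which accounts to create and which to delete.

    desired_users:  names listed in the data file (assumed format).
    existing_users: names that currently have an account.
    """
    desired = set(desired_users)
    existing = set(existing_users)
    return {
        # In the data file but no account yet.
        "create": sorted(desired - existing),
        # Account exists but was removed from the data file.
        "delete": sorted(existing - desired),
    }


# Hypothetical example data.
changes = plan_account_changes(["alice", "bob"], ["bob", "carol"])
print(changes)
```

Removing a name from the file is what triggers the deletion, so the file stays the single source of truth.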

Parallel S3 and local filesystem execution tool.

Yusuf has joined Public “Office Hours”

Igor B has joined Public “Office Hours”

Emem has joined Public “Office Hours”

Someone dropped cycle.io in the office hours chat, but didn’t pitch it. I skimmed the landing page and I’m curious what people have to say in favor of (or against it).

I’m inclined to follow the thread. The offering looks good, but as a practitioner, their pricing looks crazy.

However, I guess their intended audience is teams that would rather spend the money to get something in place quickly and easily, particularly if there are no skilled devs/ops on the team.

2022-02-17

2022-02-22

2022-02-23

@here office hours is starting in 30 minutes! Remember to post your questions here.

(Repost, link me if previously covered): What are tradeoffs of giving the infra deploy pipeline full admin in AWS vs fine-grained permissions that seem more secure but troublesome to manage?

We did cover this on last week’s office hours

Great I’ll watch that one thanks

Kerri Rist (Cloud Posse) has joined Public “Office Hours”

Erik Osterman (Cloud Posse) has joined Public “Office Hours”

Andy Miguel (Cloud Posse) has joined Public “Office Hours”

Jim Conner has joined Public “Office Hours”

Yusuf has joined Public “Office Hours”

David Hawthorne has joined Public “Office Hours”

Jim Park has joined Public “Office Hours”

Mohammed Almusaddar has joined Public “Office Hours”

Sherif Abdel-Naby has joined Public “Office Hours”

Kevin Mahoney has joined Public “Office Hours”

Darrin Freeman has joined Public “Office Hours”

Michael Jenkins has joined Public “Office Hours”

venkata mutyala has joined Public “Office Hours”

Ralf Pieper has joined Public “Office Hours”

Guilherme Borges has joined Public “Office Hours”

Ray Myers has joined Public “Office Hours”

Eric Berg has joined Public “Office Hours”

Tim Gourley has joined Public “Office Hours”

Ken Y.y has joined Public “Office Hours”

Christopher Picht has joined Public “Office Hours”

Isaac M has joined Public “Office Hours”

Vignesh Sathasivam has joined Public “Office Hours”

Florain Drescher has joined Public “Office Hours”

The Kubernetes project is continually integrating new features, design updates, and bug fixes. The community releases new Kubernetes minor versions, such as 1.21, as generally available approximately every three months. Each minor version is supported for approximately twelve months after it’s first released.

Shaun Wang has joined Public “Office Hours”

Uwaila Adams has joined Public “Office Hours”

Uzuazoraro Etobro has joined Public “Office Hours”

Patrick Joyce has joined Public “Office Hours”

Oscar Blanco has joined Public “Office Hours”

james has joined Public “Office Hours”

Anton Shakh has joined Public “Office Hours”

@Wédney Yuri has joined Public “Office Hours”

Amer Zec has joined Public “Office Hours”

https://tech.loveholidays.com/dynamic-alert-routing-with-prometheus-and-alertmanager-f6a919edb5f8

^ This article is the closest thing to the system I built for alerting based on labels.

TL;DR Dynamically route alerts to relevant Slack team channels by labelling Kubernetes resources with team and extracting team label within…

And that’s the feature I am trying to add to KSM (kube-state-metrics) so you don’t need to do very complex joins: https://github.com/kubernetes/kube-state-metrics/pull/1689

I’d really appreciate a discussion here.
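A rough sketch of the label-based routing idea from the article summary above, assuming each alert carries a team label copied from the Kubernetes resource. The channel naming scheme, the fallback channel, and the function name are hypothetical; in a real setup this decision is made in the Alertmanager routing configuration, not in Python.

```python
DEFAULT_CHANNEL = "#alerts-unrouted"  # hypothetical fallback channel


def slack_channel_for(alert_labels, default=DEFAULT_CHANNEL):
    """Pick a Slack channel from the alert's team label.

    Alerts without a usable team label go to the default channel so
    they are not silently dropped.
    """
    team = alert_labels.get("team", "").strip()
    return f"#alerts-{team}" if team else default


# Hypothetical alerts: one labelled with a team, one without.
print(slack_channel_for({"alertname": "PodCrashLooping", "team": "payments"}))
print(slack_channel_for({"alertname": "PodCrashLooping"}))
```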

Silly project: stand up WireGuard on any cloud provider https://github.com/jbraswell/terraform-wireguard

James Corteciano has joined Public “Office Hours”

2022-02-24

2022-02-25

Let me know if I should ask this somewhere else, but: if I define a job for a GitLab pipeline in .gitlab-ci.yml with a script section, and then extend that first job in a second job that has its own script section, will the second job’s script section overwrite what was in the first job? If that doesn’t make sense, let me know.

When using extends, any property declared in the second job overrides the same property from the extended job.
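To make the override behavior concrete, here is a small Python sketch that mimics how GitLab merges an extended job: hash-like keys such as variables are merged key by key, while any other value, including the script list, is replaced by the child job’s value. The job contents are made up for illustration, and this is a simplified model of the merge, not GitLab’s own code.

```python
def merge_extends(parent, child):
    """Merge a child job over the job it extends.

    Dicts are merged recursively; any other value (including lists
    such as script) from the child replaces the parent's value.
    """
    merged = dict(parent)
    for key, value in child.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_extends(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical jobs, written as the dicts their YAML would parse to.
base_job = {
    "image": "alpine",
    "variables": {"A": "1", "B": "2"},
    "script": ["echo base"],
}
child_job = {
    "variables": {"B": "3"},
    "script": ["echo child"],
}

result = merge_extends(base_job, child_job)
print(result["script"])     # only the child's script remains
print(result["variables"])  # variables are merged, child wins on conflicts
```

So the second job’s script does replace the first job’s script entirely; the two lists are not concatenated.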

thank you

This has all the feels of an interview question

That new reference feature is pretty recent; if you are on-prem, you may need to make sure your version supports it, btw.