#terraform-aws-modules (2019-12)

Terraform Modules

Discussions related to https://github.com/terraform-aws-modules

Archive: https://archive.sweetops.com/terraform-aws-modules/

2019-12-12

I’m building out new infrastructure for a small team and making some use of the Terraform modules, which are nice building blocks

One thing I’m pondering, but don’t have an answer to at the moment, is how folk manage their fleet of nodes in ASGs created by https://github.com/cloudposse/terraform-aws-ec2-autoscale-group. In the past I’ve generally used a pattern of naming the ASG after its LC with a create_before_destroy lifecycle, so as new LCs are produced, you end up with two ASGs behind one LB for the blue/green deploy.
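A minimal sketch of that LC-named ASG pattern, assuming a classic ELB and Terraform 0.12+ syntax. The variable names, sizes, and `app` naming are hypothetical and are not taken from the Cloud Posse module:

```hcl
# Hypothetical inputs for this sketch.
variable "ami_id" {}
variable "instance_type" { default = "t3.small" }
variable "elb_name" {}
variable "subnet_ids" { type = list(string) }

resource "aws_launch_configuration" "app" {
  # name_prefix lets Terraform generate a unique name, so a replacement LC
  # can coexist with the old one while create_before_destroy runs.
  name_prefix   = "app-"
  image_id      = var.ami_id
  instance_type = var.instance_type

  lifecycle {
    create_before_destroy = true
  }
}

resource "aws_autoscaling_group" "app" {
  # Interpolating the LC name into the ASG name means any LC replacement
  # also forces a new ASG, created before the old one is destroyed.
  name                 = "${aws_launch_configuration.app.name}-asg"
  launch_configuration = aws_launch_configuration.app.name
  vpc_zone_identifier  = var.subnet_ids
  min_size             = 2
  max_size             = 4

  # Attach to the existing classic ELB; on creation, Terraform waits for
  # this many instances to be healthy in the ELB before moving on.
  load_balancers   = [var.elb_name]
  min_elb_capacity = 2

  lifecycle {
    create_before_destroy = true
  }
}
```

Behind an ALB, `target_group_arns` would take the place of `load_balancers`; the naming and lifecycle trick is the same.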

From my reading of the docs (and a bit of a poke at the code), it looks like this sort of model isn’t supported, so how are folk doing rollouts of new config?

Admittedly, I’ve generally done this behind ELBs, not ALBs/TGs, so I’m still piecing that together

And now I realise I’m probably in the wrong channel. Doh

It’s fine in here, just slow in the US atm; maybe @Erik Osterman (Cloud Posse) / @Andriy Knysh (Cloud Posse) have thoughts

or some of the other regulars

I think Cloud Posse prefers using their null-label module to standardize naming. A lot of their modules ask for three parts (namespace, stage, name) and use the null-label module behind the scenes as well.

Sounds like your use case is to create some blue/green fanciness, which in my experience doesn’t play well with IaC. It has the same “what’s the SDLC of this object” problem: you might create it with Terraform, but some scripting handles the flipping, the creation, or whatnot.

I’m not sure what you mean by “LC” in your description there though.

we did not test https://github.com/cloudposse/terraform-aws-ec2-autoscale-group with blue/green, but you can create two ASGs in Terraform and, if you use Kubernetes, assign different Kubernetes labels to the nodes so you can schedule pods on one group or the other

Terraform module to provision Auto Scaling Group and Launch Template on AWS - cloudposse/terraform-aws-ec2-autoscale-group

As for how to drain one group and switch between them, a separate script/workflow needs to be created for that

@bazbremner if you see any way to improve the module, especially for that use case, PRs are always welcome

I think it was more a question of whether there’s a reason not to do it the way @bazbremner mentioned: in particular, tying the ASG name to the launch config name, so you get a new ASG behind the same LB for blue/green, rather than mutating the current ASG with a different launch config… if that makes any sense…

Makes sense

Reasonable use-case, just not one that we’ve considered.

the resources’ names don’t matter here. You can create two launch configurations (or launch templates) and then create two ASGs using their own LC or LT
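A rough sketch of that suggestion, assuming launch templates and an existing ALB target group. The `blue`/`green` naming, the size variables, and the other inputs are hypothetical, and the cutover (draining, switching) is still left to a separate workflow:

```hcl
# Hypothetical inputs for this sketch.
variable "blue_ami_id" {}
variable "green_ami_id" {}
variable "target_group_arn" {}
variable "subnet_ids" { type = list(string) }
variable "blue_size" { default = 2 }
variable "green_size" { default = 0 }

# One launch template per color, each pinned to its own AMI.
resource "aws_launch_template" "blue" {
  name_prefix   = "app-blue-"
  image_id      = var.blue_ami_id
  instance_type = "t3.small"
}

resource "aws_launch_template" "green" {
  name_prefix   = "app-green-"
  image_id      = var.green_ami_id
  instance_type = "t3.small"
}

# Both ASGs register into the same target group; shifting traffic means
# scaling one group up and the other down via the size variables.
resource "aws_autoscaling_group" "blue" {
  name_prefix         = "app-blue-"
  vpc_zone_identifier = var.subnet_ids
  target_group_arns   = [var.target_group_arn]
  min_size            = var.blue_size
  max_size            = var.blue_size

  launch_template {
    id      = aws_launch_template.blue.id
    version = "$Latest"
  }
}

resource "aws_autoscaling_group" "green" {
  name_prefix         = "app-green-"
  vpc_zone_identifier = var.subnet_ids
  target_group_arns   = [var.target_group_arn]
  min_size            = var.green_size
  max_size            = var.green_size

  launch_template {
    id      = aws_launch_template.green.id
    version = "$Latest"
  }
}
```

Here the resource names really don’t matter: each ASG is stable, and a rollout means updating the idle color’s AMI, scaling it up, then scaling the other down.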

The ASG name hinging on the LC (or template) name forces the ASG to be replaced whenever the LC is replaced, which is the thing the module is currently missing. Not sure how that would play with folks who aren’t expecting it and are doing blue/green with the same ASG

Well, the resource name is relevant in most patterns I’ve seen, because the new launch config name and its link to the ASG name, with the appropriate lifecycle, are what allow for the seamless cutover

snap, what @joshmyers said

the module uses name-prefix https://github.com/cloudposse/terraform-aws-ec2-autoscale-group/blob/master/main.tf#L15

which will allow you to create a new LT

name or name-prefix for LT and ASG should probably be made configurable (TF vars) to support that use-case

This is the original post that describes the model: https://groups.google.com/forum/#!msg/terraform-tool/7Gdhv1OAc80/iNQ93riiLwAJ

Notably, I think controlling this via variables isn’t good enough, as the two need to change in step and that’s all internal to the module

Note that I’m used to using launch configurations rather than launch templates, which the module uses. Since I’ve not RTFMed yet, I don’t know how significant that is

Yes, it’s not a question about the modules; it’s about orchestrating the process. Whatever the workflow says, Terraform will execute, including changing names or deleting/creating resources

Aye

I mean, I asked because understanding why the module works the way it does, and how the workflow/processes around it are assumed to work (which Erik touched on), is useful to me

we use this as the base module for provisioning the node pools

since there are multiple node pools, you just cordon/drain one at a time and replace it

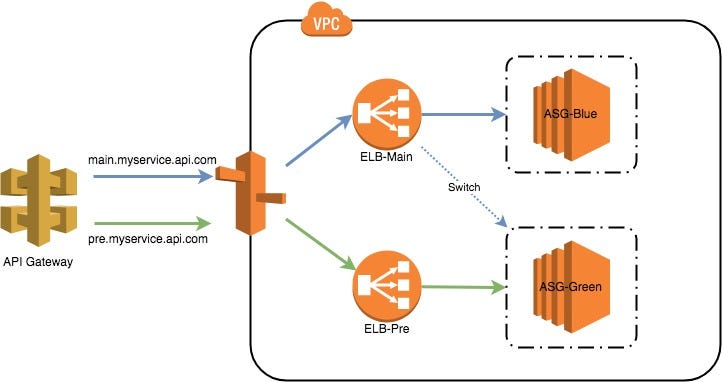

some examples with ALB https://medium.com/@acharya.naveen/blue-green-deployment-with-aws-application-load-balancer-e551cd00d2c9

Earlier this year, teams at Intuit migrated the AWS infrastructure for their web services to the Application Load Balancer (ALB) from the…

We’re currently doing something similar to the above for our ECS deployments, with canary.<service>.<domain> + main.<service>.<domain>
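A hedged sketch of host-based routing for a canary/main split on an ALB listener, assuming an AWS provider recent enough to support the `host_header` condition block. The hostnames (`service.example.com`), priorities, and variables are hypothetical placeholders, not the poster’s actual setup:

```hcl
# Hypothetical inputs for this sketch.
variable "listener_arn" {}
variable "main_target_group_arn" {}
variable "canary_target_group_arn" {}

# Requests for the canary hostname go to the canary target group.
resource "aws_lb_listener_rule" "canary" {
  listener_arn = var.listener_arn
  priority     = 10

  action {
    type             = "forward"
    target_group_arn = var.canary_target_group_arn
  }

  condition {
    host_header {
      values = ["canary.service.example.com"]
    }
  }
}

# Requests for the main hostname go to the main target group.
resource "aws_lb_listener_rule" "main" {
  listener_arn = var.listener_arn
  priority     = 20

  action {
    type             = "forward"
    target_group_arn = var.main_target_group_arn
  }

  condition {
    host_header {
      values = ["main.service.example.com"]
    }
  }
}
```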

@Alex Siegman LC == launch configuration, sorry.

not sure why my brain didn’t connect that, makes sense, thanks

@Erik Osterman (Cloud Posse) OK, good to know, thanks.

2019-12-20

Hi all, I’m currently evaluating the terraform-aws-eks module. Could someone help me understand what the intention of `resource "random_pet" "workers"` is?
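For context, one common use of `random_pet` with `keepers` is sketched below. This is a generic example, not necessarily what terraform-aws-eks does, and the `workers` resources and inputs here are hypothetical. Whenever a value in `keepers` changes, the `random_pet` resource is replaced and produces a new name, which can be fed into a dependent resource to force its replacement too:

```hcl
# Hypothetical inputs for this sketch.
variable "ami_id" {}
variable "subnet_ids" { type = list(string) }

resource "aws_launch_configuration" "workers" {
  name_prefix   = "workers-"
  image_id      = var.ami_id
  instance_type = "t3.medium"

  lifecycle {
    create_before_destroy = true
  }
}

# A new pet name is generated whenever the launch configuration name changes.
resource "random_pet" "workers" {
  length    = 2
  separator = "-"

  keepers = {
    lc_name = aws_launch_configuration.workers.name
  }

  lifecycle {
    create_before_destroy = true
  }
}

resource "aws_autoscaling_group" "workers" {
  # The pet name is part of the ASG name, so a new LC yields a new ASG.
  name_prefix          = "workers-${random_pet.workers.id}-"
  launch_configuration = aws_launch_configuration.workers.name
  vpc_zone_identifier  = var.subnet_ids
  min_size             = 1
  max_size             = 3

  lifecycle {
    create_before_destroy = true
  }
}
```

This is the same idea as naming the ASG after its LC, with a human-readable name as the indirection.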