#terraform (2018-12)

Discussions related to Terraform or Terraform Modules

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2018-12-01

Deploying cloudposse/terraform-aws-eks-cluster https://github.com/cloudposse/terraform-aws-eks-cluster/tree/master/examples/complete but running into the following errors:

module.eks_cluster.aws_security_group_rule.ingress_security_groups: aws_security_group_rule.ingress_security_groups: value of 'count' cannot be computed

module.eks_workers.module.autoscale_group.data.null_data_source.tags_as_list_of_maps: data.null_data_source.tags_as_list_of_maps: value of 'count' cannot be computed

I see that autoscale_group was updated https://github.com/cloudposse/terraform-aws-ec2-autoscale-group/pull/5 to use terraform-terraform-label which from searching the Slack history seemed to be an issue.

and eks_cluster is also failing at ingress_security_groups https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/main.tf#L69

I’m not passing in any tags or security group so the terraform-aws-eks-cluster module is just using the defaults.

Yep. I’ve been going through that.

Ok :-)

When targeting the eks_cluster module replacing:

allowed_security_groups = ["${distinct(compact(concat(var.allowed_security_groups_cluster, list(module.eks_workers.security_group_id))))}"]

with

allowed_security_groups = ["sg-09264f5790c8e28e1"] resolves the issue.

So right now I’m trying to target the eks_workers module.

How do you correctly format the tag variable?

@Andriy Knysh (Cloud Posse) we should have a Makefile in that example

tags="map('environment','operations')"

No you can pass tags just like normal

A map

Some places show it written using interpolation because it used to be that way in older versions of terraform

And that got copied and pasted everywhere

I’ll have to check it again, when we tested a few months ago, we didn’t see those errors

Passing in the tags while targeting the eks_workers module didn’t work.

Aside from the hard coding you did as a workaround, no other changes from the example?

Commenting out the null_data_source all together when targeting the eks_workers module resolves the issue.

# data "null_data_source" "tags_as_list_of_maps" {

# count = "${var.enabled == "true" ? length(keys(var.tags)) : 0}"

#

# inputs = "${map(

# "key", "${element(keys(var.tags), count.index)}",

# "value", "${element(values(var.tags), count.index)}",

# "propagate_at_launch", true

# )}"

# }

resource "aws_autoscaling_group" "default" {

count = "${var.enabled == "true" ? 1 : 0}"

name_prefix = "${format("%s%s", module.label.id, var.delimiter)}"

...

service_linked_role_arn = "${var.service_linked_role_arn}"

launch_template = {

id = "${join("", aws_launch_template.default.*.id)}"

version = "${aws_launch_template.default.latest_version}"

}

# tags = ["${data.null_data_source.tags_as_list_of_maps.*.outputs}"]

lifecycle {

create_before_destroy = true

}

}

@Erik Osterman (Cloud Posse) no I tried to pass in minimal variables and use the eks module defaults.

With the null_data_source in eks_workers commented out I had hoped the full eks module would work (I reverted the eks_cluster allowed_security_groups back to the default) but eks_cluster still errors.

module.eks_cluster.aws_security_group_rule.ingress_security_groups: aws_security_group_rule.ingress_security_groups: value of 'count' cannot be computed

Unfortunately don’t know what is causing this. Seems like something has changed in terraform recently that breaks it.

On a serious note, on AWS use kops for Kubernetes

We wrote these modules to evaluate the possibility of using EKS but consider terraform as not the right tool for the job

No automated rolling upgrades

No easy way to do drain and cordon

So from a lifecycle management perspective we only use kops with our customers

Ah. Ok. Easy enough. Thanks @Erik Osterman (Cloud Posse)

But it doesn’t help with upgrades yet either

I’ve heard lots of great things about kops so I’ll just roll with that. The goal is to deploy the statup app and test namespacing per PR.

Thanks for your help @Erik Osterman (Cloud Posse) @Andriy Knysh (Cloud Posse)

np, sorry the EKS module didn’t work for you. We tested it many times, but it was a few months ago and we didn’t touch it since then. Those count errors are really annoying. Maybe HCL 2.0 will solve those issues

Hi. I am facing some sort of issue with dns cert validation using cloudflare provider

it works perfectly fine in a different tf module

but fails in my compute module

i am not able to figure out what’s going on

@rohit what error are you receiving?

* aws_alb_listener.my_app-443: Error creating LB Listener: CertificateNotFound: Certificate 'arn:aws:acm:us-east-1:011123456789:certificate/a7f83c2e-57c4-4046-b81a-f9a6fe153ad0' not found

status code: 400, request id: 6c1234e8-f111-12e3-af9f-19f5bf9b2312

2018/12/01 11:41:44 [ERROR] root.compute.my_app: eval: *terraform.EvalSequence, err: 1 error(s) occurred:

* aws_alb_listener.my_app-443: Error creating LB Listener: CertificateNotFound: Certificate 'arn:aws:acm:us-east-1:011123456789:certificate/a7f83c2e-57c4-4046-b81a-f9a6fe153ad0' not found

status code: 400, request id: 6c1234e8-f111-12e3-af9f-19f5bf9b2312

Error: Error applying plan:

3 error(s) occurred:

* module.compute.module.my_app.cloudflare_record.my_app_cloudflare_public: 1 error(s) occurred:

* cloudflare_record.my_app_cloudflare_public: Error finding zone "crs.org": ListZones command failed: error from makeRequest: HTTP status 400: content "{\"success\":false,\"errors\":[{\"code\":6003,\"message\":\"Invalid request headers\",\"error_chain\":[{\"code\":6103,\"message\":\"Invalid format for X-Auth-Key header\"}]}],\"messages\":[],\"result\":null}"

* module.compute.module.my_app.cloudflare_record.dns_cert_validation: 1 error(s) occurred:

* cloudflare_record.dns_cert_validation: Error finding zone "crs.org": ListZones command failed: error from makeRequest: HTTP status 400: content "{\"success\":false,\"errors\":[{\"code\":6003,\"message\":\"Invalid request headers\",\"error_chain\":[{\"code\":6103,\"message\":\"Invalid format for X-Auth-Key header\"}]}],\"messages\":[],\"result\":null}"

* module.compute.module.my_app.aws_alb_listener.my_app-443: 1 error(s) occurred:

* aws_alb_listener.my_app-443: Error creating LB Listener: CertificateNotFound: Certificate 'arn:aws:acm:us-east-1:011123456789:certificate/a7f83c2e-57c4-4046-b81a-f9a6fe153ad0' not found

status code: 400, request id: 6c1234e8-f111-12e3-af9f-19f5bf9b2312

i replaced original values with dummy values but that’s the error

The certificate not found error for me has always been when I accidentally created it in one region but try to use it in a different region

i will double check

and also if DNS is not configured correctly

then AWS can’t read the record and validation fails

Seems that authentication doesn’t work to cloudflare.

i use similar code for dns validation in different module and it does work

This issue was originally opened by @JorritSalverda as hashicorp/terraform#2551. It was migrated here as part of the provider split. The original body of the issue is below. In a CloudFlare multi-u…

@rohit you sure that Cloudflare owns the zone [crs.org](http://crs.org)? Name servers point to

IN ns13.dnsmadeeasy.com. 86400

IN ns15.dnsmadeeasy.com. 86400

IN ns11.dnsmadeeasy.com. 86400

IN ns12.dnsmadeeasy.com. 86400

IN ns10.dnsmadeeasy.com. 86400

IN ns14.dnsmadeeasy.com. 86400

i replaced the original zone with a different value

kk

check if DNS works for the zone (name servers, etc.)

using nslookup ?

any tool to read the records from the zone

or it’s just what it says Invalid format for X-Auth-Key header

Do you want to request a feature or report a bug? Reporting a bug What did you do? Ran traefik in a windows container and set cloudlfare to be the dnsProvider. What did you expect to see? I expecte…

somehow it worked now

cert validation does take forever

it usually takes up to 5 minutes

yeah it does

how do you perform dns cert validation ?

Terraform module to request an ACM certificate for a domain name and create a CNAME record in the DNS zone to complete certificate validation - cloudposse/terraform-aws-acm-request-certificate

same DNS validation

basically use route53 instead of cloudflare

Yes

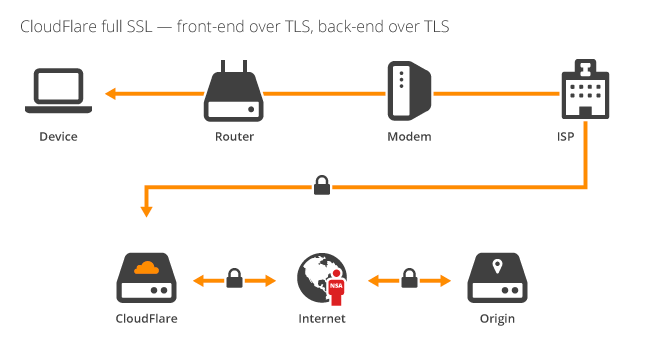

We recommend 2 types of domains

One is for infrastructure and service discovery

The other is for branded (aka vanity) domains

Host vanity domains on CloudFlare

And CNAME those to your infra domains

Ensure that Cloudflare is configured for end to end encryption

@Erik Osterman (Cloud Posse) how can i ensure that it is configured for end to end encryption ?

we do use https for all our connections and TLSv1.2

It’s a flag in their UI presumably exposed via API

Is it called Always Use HTTPS ?

Yup

I am sure it is checked but i will double check

I am on my phone so can’t screenshot it

no worries

but i didn’t understand how it is important in this context

It’s relative to our suggestion of creating 2 levels of domains

Use ACM with route53 for service discovery domain

Use Cloudflare with vanity domains

Use end to end encryption to ensure privacy

we do use route53 for internal services and use cloudflare for publicly exposed endpoints

Just red flags go up for me if using Cloudflare to validate acm certificates

because the name on the cert matches with the endpoint we have to use cloudflare to do the validation

Ok

Just curious, if we create internal alb, then then the only way to is to do email validation, correct ?

So I think that with Cloudflare things can work differently

We are using route53 with acm on internal domain for docs.cloudposse.com

Internal domain is .org

We use DNS validation

Then we use Cloudflare to cname that to the origin

I am pretty certain we didn’t need the cert name to match because I don’t think CloudFlare is passthru

Cloudflare requests the content from the origins and performs transformations on the content

Which is only possible if it first decrypts the content MITM style

E.g. compression and minificarion

It is not a simple reverse proxy

after what you said, when i think about it i am not able to remember why we had to do it this way

i will have to check with someone from OPS team

did you see my above question ? because you are on phone i will paste it again

if we create internal alb, then then the only way to is to do email validation, correct ?

Ohhhhhhhh I see

Internal alb, so non routable

Good question. I have not tried acm dns validation with internal domains, but that would make the most sense since there is no way for AWS to reach it due to the network isolation.

we currently use email validation for internal alb but was not sure if that can be automated in terraform

Perhaps there are some work arounds where you allow some kind of AWS managed vpc endpoint to reach it kind of like you can do with Private S3 buckets

For example with classic ELBs we could allow some AWS managed security group access to the origin.

Now with this being a VPC not sure if they’ve done anything to accommodate it.

Please let me know if you find a conclusive answer yes/no

will do. I am trying to find more about this or any other alternative in terraform for internal alb

Let’s start with a quick quiz: Take a look at haveibeenpwned.com (HIBP) and tell me where the traffic is encrypted between: You see HTTPS which is good so you know it’s doing crypto things in your browser, but where’s the other end of the encryption? I mean at what

@Erik Osterman (Cloud Posse) my google terraform search for anything now returns one of your modules

Lol yes we tend to do that…

that’s pretty awesome

As far as I know, there is no way to do email validation using TF. Maybe some third party tools exist

@Andriy Knysh (Cloud Posse) that’s what i thought. so what happens when i pass validation_method = "EMAIL" to the aws_acm_certificate resource

FAQs for AWS Certificate Manger

Aha you need to establish a private CA

Q: What are the benefits of using AWS Certificate Manager (ACM) and ACM Private Certificate Authority (CA)?

ACM Private CA is a managed private CA service that helps you easily and securely manage the lifecycle of your private certificates. ACM Private CA provides you a highly-available private CA service without the upfront investment and ongoing maintenance costs of operating your own private CA.

i think that would work

i am assuming there is a terraform resource to create private cert

Yea let me know!

Provides a resource to manage AWS Certificate Manager Private Certificate Authorities

That’s a lot of effort for an internal network with SSL offloading by the ALB, meaning it ends up to be HTTP in the same network anyway.

” curious, if we create internal alb, then then the only way to is to do email validation, correct ?”

That has nothing to do with it, as long as ACM can check if the CNAME resolves to the .aws-domain it will work out with creating the certificate.

Cool - are you doing this with #airship?

@maarten so if i create a record for the internal alb in route53 private hosted zone then i don’t have to do any cert validation ?

Cert validation is independent of any load balancer.

For AWS it’s just a mechanism to know that you control that domain, either through E-mail or DNS.

but i still need a cert for internal service for https, correct ?

yes

let me look it up for you

AWS Certificate Manager (ACM) does not “currently” support SSL certificates for private hosted zones.

Good to know… so your options are in fact then to

- create your own ssl authority or

- use a public domain instead

Certificate creation is outside the scope of the module, as it’s more likely to have one wildcard cert for many services

Let’s continue here Rohit, sub-threads confuse me

i noticed that when i do terraform destory it destroys all the resources but the certificate manager still has the certs that were created by terraform. Is there flag that needs to be passed for it to destroy those certs ?

That’s odd, are you 100% sure ?

yes

I saw the cert gets destroyed

maybe you can try and see if you can repeat that behaviour

trying now

Looks like it was just that one time

when i tried again everything was destroyed gracefully

i am trying to pass params to my userdata template,

data "template_file" "user-data" {

template = "${file("${path.cwd}/modules/compute/user-data.sh.tpl")}"

vars = {

app_env = "${terraform.workspace}"

region_name = "${local.aws_region}"

chef_environment_name = "${local.get_chef_environment_name}"

}

}

and in my template, i have the following

export NODE_NAME="$$(app_env)app-$$(curl --silent --show-error --retry 3 <http://169.254.169.254/latest/meta-data/instance-id>)" # this uses the EC2 instance ID as the node name

the app_env is not being evaluated

it just stays as is

is there anything wrong in my template ?

@rohit i think you need one $, not two, and curly braces

Terraform module to provision an AWS AutoScaling Group, IAM Role, and Security Group for EKS Workers - cloudposse/terraform-aws-eks-workers

Terraform module to provision an AWS AutoScaling Group, IAM Role, and Security Group for EKS Workers - cloudposse/terraform-aws-eks-workers

the format is ${var}

i tried that

export NODE_NAME="$(app_env)app-$$(curl --silent --show-error --retry 3 <http://169.254.169.254/latest/meta-data/instance-id>)" # this uses the EC2 instance ID as the node name

curly braces

yeah that was a silly mistake by me

when i updated the template and ran terraform plan followed by terraform apply the userdata on the launch template did not get updated

i am assuming it is because of the difference between tempalte vs resource

@Andriy Knysh (Cloud Posse) is this true ?

Renders a template from a file.

template_file uses the format ${var}

so i would have to wrap curl command in ${}

yes

ah wait

not sure

you use ${var} format in the template if you set the var in template_file

this $$(curl --silent --show-error --retry 3 <http://169.254.169.254/latest/meta-data/instance-id>) is not evaluated by TF I suppose

is it called when the file gets executed on the instance?

yes, the userdata gets executed on the instance

yea those formats are different and often confusing, have to always look them up

yeah. similarly, i always have to look up symlink syntax for source and destination

I am using terraform-terraform-labeland terraform-aws-modules/vpc/aws module, i noticed that even though i have name = "VPC" in terraform-terraform-label it is converting it to lowercase

and setting the Name as myapp-vpc instead of myapp-VPC

it does convert to lower case https://github.com/cloudposse/terraform-terraform-label/blob/master/main.tf#L4

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - cloudposse/terraform-terraform-label

yeah i saw that, can i know why is it being converted to lowercase?

i believe for consistency. is it a problem for you? (it does not convert tags)

yeah i was wanting to keep whatever was defined in the name if possible

Is there anything i could do to achieve this ?

i mean i could do the same thing in my module but without converting the tags to lowercase

locals {

enabled = "${var.enabled == "true" ? true : false }"

id = "${local.enabled == true ? join(var.delimiter, compact(concat(list(var.namespace, var.stage, var.name), var.attributes))) : ""}"

name = "${local.enabled == true ? format("%v", var.name) : ""}"

namespace = "${local.enabled == true ? format("%v", var.namespace) : ""}"

stage = "${local.enabled == true ? format("%v", var.stage) : ""}"

attributes = "${local.enabled == true ? format("%v", join(var.delimiter, compact(var.attributes))) : ""}"

# Merge input tags with our tags.

# Note: `Name` has a special meaning in AWS and we need to disamgiuate it by using the computed `id`

tags = "${

merge(

map(

"Name", "${local.id}",

"Namespace", "${local.namespace}",

"Stage", "${local.stage}"

), var.tags

)

}"

}

Do you have plans to do the same in your module ?

we use it in many other modules and we convert to lower case. actually, we always specify lower-case names. So for backwards compatibility we would not change it to not convert. But you might want to open a PR against our repo with added flag to specify whether to convert or not (e.g. var.convert_case) with default true

i can definitely do that

i don’t think that would take a lot of effort

i might be wrong

i guess the tricky part would be to figure out nested conditional statements. I believe nested conditional statements will become easier in terraform v0.12

i am not sure if this is a valid syntax in terraform

id = "${local.enabled == true ? (local.convert_case == true ? lower(join(var.delimiter, compact(concat(list(var.namespace, var.stage, var.name), var.attributes)))) : join(var.delimiter, compact(concat(list(var.namespace, var.stage, var.name), var.attributes))))) : ""}"

can anyone please confirm ?

more or less, but i would store the non-transformed value somewhere as a local

rather than duplicate it twice

yeah that would make more sense

how do i get access to push my git branch to your repository ?

oh, start by creating a github fork of the repo

then make your changes to the fork

from there, you can open up a PR against our upstream

sorry, i thought that i can push my feature branch directly

i opened a PR

can you paste the link

PR link ?

reviewed

Also, can you add your business use-case to the “why” of the description

i wanted to use convert_case variable but to make it match your variable naming convention i used convertcase but i took your suggestion and used i wanted to use convert_case variable but to make it match your variable naming convention i used convertcase`

i think we use underscores everywhere

if we don’t it’s because the upstream resource did not (e.g. terraform)

@Erik Osterman (Cloud Posse) thanks. Even though this was a small change it was fun working on it

yea, this will make it easier for you to contribute other changes

yea

thanks! we’ll get this merged tomorrow probably

that would be great. when do you generally push to registry ?

oh, registry picks up changes in real-time

so as soon as we merge and tag a release it will be there.

that is awesome

since it’s late, it’s best to have andriy review too

i miss things

no worries

what tool do you use to automatically push to registry?

the registry actually is just a proxy for github

all we need to do is register a module with the registry

it’s not like docker registry where you have to push

ohh i thought it was similar to docker registry

but this is better i guess, this may have it’s pros and cons

i like that there’s zero overhead as a maintainer

nothing we need to remember to do

everytime i need to push a package to rubyforge i gotta dig up my notes

i’m curious - is anyone else peeved by the TF_VAR_ prefix? I find us having to map a lot of envs. Plus, when using chamber they all get uppercased.

I want a simple cli that normalizes environment variables for terraform. it would work like the existing env cli, but support adding the TF_VAR_ prefix and auto-lower everything after it.

e.g.

tfenv terraform plan

tfenv command

this could be coupled with chamber.

chamber exec foobar -- tfenv terraform plan

(not to be confused with the rbenv cli logic)

2018-12-02

If case is the main complaint, I wonder if there’s been any discussion in making the env case insensitive in terraform core?

I’m not turning up any discussion around it, but I’m on my phone and not searching all that extensively

i know i’ve tested it for case insensitivity and it does not work

so i have a prototype of the above that i’m about to push

it supports whitelists and blacklists (regex) for security (e.g. exclude AWS_* credentials)

this is going to make interoperability between all of our components much cleaner and our documentation easier to write.

Oh, I know it’s case sensitive for sure, I mean something like a feature request to make it case insensitive so upper case works (which is more inline with env convention), without breaking backwards compatibility

Ah gotcha, yea can’t find any feature request for that

I don’t see that getting approved though…

Might be worth having the conversation at least, since they really are going against env convention as it is…

Not related to the main discussion, but still :) consider using direnv tool to automatically set env per directory. I use it with terragrunt to set correctly IAM role to assume per directory.

Do you use –terragrunt-working-dir with that? Or just change directory first?

I just change directory

never had a need to play with –terragrunt-working-dir

Ahhh ok, I only use the flag myself

yea, direnv is nice for that

my problem is that we use kops, gomplate, chamber, etc

and the TF_VAR_ stuff makes it cumbersum

chamber upper cases everything

kops should not depend on TF_VAR_ envs

so we end up duplicating a lot of envs (or reassigning them)

so i want a canonical list of normal envs that we can map to terraform

@antonbabenko though i’ve not gone deep on direnv

do you see a way to achieve the same thing in a DRY manner?

if so, i’d prefer to use direnv

basically, i’d like to normalize envs (lowercase) and add TF_VAR_ prefix without defining by hand for each env

Do kops and chamber not namespace the envs they read?

If not, I suppose where I’m going is, why not use the TF_VAR_ env in those configs? All the prefixing would be annoying, but at least the values would only be defined once

I don’t like making terraform the canonical source of truth

Oh chamber upper cases things. Blergh

Yea plus that

I guess I don’t see how to inform the different utilities which envs to use or which env namespaces/prefixes to apply per utility… I think it would require going down the road you started with a new wrapper utility with it’s own config file

I once used a shell script which converted env vars for similar reason, but it was before I discovered direnv.

I think I am going to combine what I have with direnv

Per your tip :-)

Yeah, sometimes a little copy-paste is still the simplest abstraction…

@tamsky are you also using direnv?

we added support for this yesterday

0.41.0

2018-12-03

The problem I found with using direnv previously on a large project was that users of the project started to expect a .envrc file anytime any thing could or needed to be set in env vars. Maybe worth thinking about if there is a clear cut separation between what IS in direnv and what is NOT

@Jan true - and to your point that you raised earlier with me about having a clear cut delineation of what goes where

i don’t have a “best practice” in mind yet. i need to let this simmer for a bit.

consistency is better than correctness

indeed.

@Jan going to move to #geodesic

@Andriy Knysh (Cloud Posse) Did you get a chance to review my PR ?

Is it possible to attach ASG to existing cloudwatch event using terraform ?

yes, that’s possible

are you using our autoscale group module?

here’s an example: https://github.com/cloudposse/terraform-aws-ec2-autoscale-group/blob/master/autoscaling.tf

Terraform module to provision Auto Scaling Group and Launch Template on AWS - cloudposse/terraform-aws-ec2-autoscale-group

@Jake Lundberg (HashiCorp) it was just brought to our attention that the registry is mangling html entites: https://registry.terraform.io/modules/cloudposse/ansible/null/0.4.1

while on github we see

OK, I’d open an issue in Github.

I can’t find the appropriate repo to open it against.

@Erik Osterman (Cloud Posse) i am using aws_autoscaling_group resource, so once an ASG is created i want to go and add the ASG name in cloudwatch event so that it will disassociate nodes from chef server

makes sense ?

@rohit I’m not sure on the question there

I’ve seen those disassociation scripts put in as shutdown scripts

Although that does need a clean shutdown event

Let me explain this in a better way. When autoscaling event is triggered, the userdata script gets executed and it does associate the node with the chef server. When a node in this ASG is terminated, a cloudwatch event is triggered which inturn calls a lambda function to disassociate the node from the chef server

i was planning to do this in terraform

so i am using aws_autoscaling_group resource to create ASG

OK, still not sure what the question is :D

@rohit as @Erik Osterman (Cloud Posse) pointed out, you can connect the ASG to CloudWatch alarms using Terraform https://github.com/cloudposse/terraform-aws-ec2-autoscale-group/blob/master/autoscaling.tf#L27

Terraform module to provision Auto Scaling Group and Launch Template on AWS - cloudposse/terraform-aws-ec2-autoscale-group

i want to connect the ASG to cloudwatch event rule

{

"source": [

"aws.autoscaling"

],

"detail-type": [

"EC2 Instance Terminate Successful"

],

"detail": {

"AutoScalingGroupName": [

"MY-ASG"

]

}

}

Looks like you are on the right track for that. I’m still not seeing a question or explanation of what problem you are having. Have you looked at what @Andriy Knysh (Cloud Posse) posted?

I think what i need is aws_cloudwatch_event_rule and not aws_cloudwatch_metric_alarm

i am struggling to figure out how to add my asg name to cloudwatch event rule

Events in Amazon CloudWatch Events are represented as JSON objects. For more information about JSON objects, see RFC 7159 . The following is an example event:

resource "aws_cloudwatch_event_rule" "console" {

name = "name"

description = "....."

event_pattern = <<PATTERN

{

"detail-type": [

"..."

]

,

"resources": [

"${aws_autoscaling_group.default.name}"

]

}

PATTERN

}

try this ^

Provides a CloudWatch Event Rule resource.

what i am trying to do is exactly mentioned here https://aws.amazon.com/blogs/mt/using-aws-opsworks-for-chef-automate-to-manage-ec2-instances-with-auto-scaling/

Step 7: Add a CloudWatch rule to trigger the Lambda function on instance termination

@rohit

take a look at this as well https://blog.codeship.com/cloudwatch-event-notifications-using-aws-lambda/

In this article, we will see how we can set up an AWS Lambda function to consume events from CloudWatch. By the end of this article, we will have an AWS Lambda function that will post a notification to a Slack channel. However, since the mechanism will be generic, you should be able to customize it to your use cases.

will do. Thanks

i am really struggling with this today

Maybe https://github.com/brantburnett/terraform-aws-autoscaling-route53-srv/blob/master/main.tf helps?

Manages a Route 53 DNS SRV record to refer to all servers in a set of auto scaling groups - brantburnett/terraform-aws-autoscaling-route53-srv

this is helpful

You don’t want "${join("\",\"", var.autoscaling_group_names)}" though, right

I think i will still have to add my ASG name to the cloudwatch event

i am not thinking straight today

so pardon me

Yup, you should do that

has anyone used the resource aws_mq_broker https://www.terraform.io/docs/providers/aws/r/mq_broker.html in conjunction Hashicorp vault to store the users as a list?

Provides an MQ Broker Resource

I have tried to use them together to display a list a users from Vault but I think the Terraform resource checks for its dependancies before anything is ran against the API

I have tried the following:

Serving Individual users with attributes

user = [

{

username = "${data.vault_generic_secret.amq.data["username"]}"

password = "${data.vault_generic_secret.amq.data["password"]}"

console_access = true

}

]

&

Sending a dictionary of users

user = [${data.vault_generic_secret.amq.data["users"]}]

what’s the problem you’re having?

the issue is that in order to use this resources you would have to keep user login information in version control

what I want to do is keep the user information in Vault separate of the source code

is this possible

I am currently having to manually replace the user credentials with REDACTED with every change to Terraform

I hope I am explaining my issue accurately

i am a confused because from the example, it looks like to me they are omnig from vault?

username = "${data.vault_generic_secret.amq.data["username"]}"

password = "${data.vault_generic_secret.amq.data["password"]}"

and not form source control

those are my examples of what I have tried (or close to that)

but they don not work

aha, i see

it fails before it makes an API call

ok, so what you’re trying to do looks good more or less. now we need to figure out why it’s failing.

can you share the invocation of vault_generic_secret?

which leads me to believe that it does some sort of dependency check for required attributes

well I have gone as far as checking to make sure Terraform is getting the secret which it is

but Terraform fails even before it queries Vault

I have verified this by outputting the invocation of the secret. Which works when I do not use the AMQ Resource but does not when I include the AMQ resource in the Terraform config

can you share the precise error?

lemme see if I can create a quick mock

I thought I saved my work in a branch but didn’t

ok here is the error with some trace

ok, please share the invocation of module.amq

and the invocation of data.vault_generic_secret

sorry but what do you mean by invocation? do you want to see how I am executing it our do you want the entire stack trace?

oh, yes, that’s ambiguous

i mean the HCL code

I don’t see data.vault_generic_secret

and I don’t see module.amq

wasn’t done, I keep getting distracted

this one is the root config and the former was the module I created

ok

I would explore the data structures.

Error: module.amq.aws_mq_broker.amq_broker: "user.0.password": required field is not set

this gives me a hint that perhaps the input data structure is wrong

perhaps ${data.vault_generic_secret.amq.data["users"]} is not in the right structure.

is users a list of maps?

[{ username: "foo", password: "bar"}, {username: "a", password: "b"}]

also, I have seen problem with this

amq_users = ["${data.vault_generic_secret.amq.data["users"]}"]

where in ["${...}"] the content of the ${...} interpolation returns a list.

so we get a list of lists.

terraform is supposed to flatten this, however, I have found this not the case when working with various providers.

my guess is that the authors don’t properly handle the inputs.

perhaps try this too

amq_users = "${data.vault_generic_secret.amq.data["users"]}"

thanks I’ll give that a shot

do you know if Terraform first tries to validate the resource dependancies before actually executing the resource? During the plan or pre-apply stages?

validate the resource dependancies

not from what i’ve seen. there’s very little “schema” style validation

I noticed that my change for convert_case to terraform-terraform-label defaults the value "true" but in [main.tf](http://main.tf) i am using it as boolean

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - cloudposse/terraform-terraform-label

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - cloudposse/terraform-terraform-label

so i am going to submit another PR to fix this issue

sorry about that

@rohit wait

everything is ok

you convert it to boolean in the locals

i feel something is wrong

and you need to add it

sorry, we missed it

instead of using var.convert_case i used local.convert_case

locals {

convert_case = "${var.convert_case == "true" ? true : false }"

}

add this ^

yeah that’s what i am doing now

i found this when i was trying to use it in my project

and use one locals, no need to make multiple

will do

@Andriy Knysh (Cloud Posse) i am sorry about not noticing that earlier

yea, we did not notice it either

submitted another PR

tested this time?

what is the recommended way to test it ?

run terraform plan/apply on the example https://github.com/cloudposse/terraform-terraform-label/blob/master/examples/complete/main.tf

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - cloudposse/terraform-terraform-label

Looks good to me

please run terraform fmt, lint is failing https://travis-ci.org/cloudposse/terraform-terraform-label/builds/463143725?utm_source=github_status&utm_medium=notification

didn’t realize that formatting would cause The Travis CI build to fail

pushed the change

thanks, merged

awesome

which tool do you use for secrets management ?

my 2¢, I love credstash works really well with Terraform.

if you can use KMS in AWS

is there an API for credstash ?

we use chamber https://github.com/segmentio/chamber

CLI for managing secrets. Contribute to segmentio/chamber development by creating an account on GitHub.

any thoughts about using hashicorp vault ?

Valut is really cool but is a higher barrier to start using (setting up servers) vs credstash is serverless.

that is great

what about chamber ?

chamber uses AWS Systems Manager Parameter Store also serverless.

I’m reading https://segment.com/blog/the-right-way-to-manage-secrets/ and probably would have picked chamber if it was available when I started using credstash

good to know. will read about it

I am looking for something that will play nicely with Chef

I’m looking at implementing vault at my work but that’s to cover multiple requirements not just terraform secrets.

Yea, it’s pretty sweet!

i am looking at something that is not limited to terraform secrets as well

but something that works with AWS + Chef

I use credstash for .env in our rails app, terraform secrets, and secrets in Salt. Having said that after reading more about chamber I probably would have gone with that instead.

2018-12-04

anyone here use terraform to spin up EKS/AKS/GKE and use the kubernetes/helm providers

Yes I spin up EKS and Helm as well as Concourse using Terraform @btai

@Matthew do you have RBAC enabled? if so, when you spin up your EKS cluster are you automatically running a helm install in the same terraform apply?

Yes to both of those

scenario being, running a helm install to provision the cluster with roles, role bindings, cluster role bindings, possibly cluster wide services, etc.

so im not using EKS, im using AKS so im not sure if you run into this issue

but im attempting to create some very basic clusterrolebindings

provider "kubernetes" {

host = "${module.aks_cluster.host}"

client_certificate = "${base64decode(module.aks_cluster.client_cert)}"

client_key = "${base64decode(module.aks_cluster.client_key)}"

cluster_ca_certificate = "${base64decode(module.aks_cluster.ca_cert)}"

}

Give me a moment and we can discuss

kk

anyone here have experience with terraform enterprise? is it worth the cost in your opinion?

i didnt do too much digging, but i wasnt a fan of having to have a git repo per module. they do this because they use git tags for versioning modules, but i didnt want to have that many repos

i have no clue why this wont work

-> this should fix it owners = ["137112412989"]

also you can simplify your filters

filter {

name = "name"

values = ["amzn-ami-hvm-*-x86_64-gp2"]

}

# Grab most recent linux ami

data "aws_ami" "linux_ami" {

most_recent = true

owners = ["137112412989"]

executable_users = ["self"]

filter {

name = "name"

values = ["amzn-ami-hvm-*-x86_64-gp2"]

}

}

also I’m not sure if values = ["amzn-ami-hvm-*"] is enough so I’ve updated it to values = ["amzn-ami-hvm-*-x86_64-gp2"]

cc @pericdaniel

Thank you so much!!

Seems like owners is the key component

@pericdaniel Always use owner for Amazon AMIs. Otherwise you could get anyone’s AMI with a similar name. And there are many of those. Same thing for other vendor AMIs. Here is a terraform module that handles a number of vendors https://github.com/devops-workflow/terraform-aws-ami-ids

Terraform module to lookup AMI IDs. Contribute to devops-workflow/terraform-aws-ami-ids development by creating an account on GitHub.

Thank you!!!

2018-12-05

hello! i have a problem with terraform and cloudfront, wondering if someone has seen this issue

I have a website hosted statically on S3 with Private ACL. I am using Cloudfront to serve the content

I am using an S3 origin identity, which requires an access identity resource

and I see that it creates the access identity successfully, but the problem is that it does not attach it to the cloudfront distro

when I go back to the AWS console, the access id is listed in the dropdown but not selected

i suspect the problem has to do with this section of the code:

any tips?

@inactive here is what we have and it’s working, take a look https://github.com/cloudposse/terraform-aws-cloudfront-s3-cdn/blob/master/main.tf

Terraform module to easily provision CloudFront CDN backed by an S3 origin - cloudposse/terraform-aws-cloudfront-s3-cdn

mmm… you have the same code as i do. the s3_origin_config snippet references the access_id, which I expected would set it

not sure why its not doing in my case

you can share your complete code, we’ll take a look (maybe something else is missing)

ok

@inactive try deleting this

website {

index_document = "${var.webui_root_object}"

}

it prob creates the bucket as website (need to verify if that statement is enough for that), but anyway, if it creates a website, it does not use origin access identity - cloudfront distribution just points to the public website URL

we also have this module to create CloudFront distribution for a website (which could be an S3 bucket or a custom origin) https://github.com/cloudposse/terraform-aws-cloudfront-cdn

Terraform Module that implements a CloudFront Distribution (CDN) for a custom origin. - cloudposse/terraform-aws-cloudfront-cdn

and here is how to create a website from S3 bucket https://github.com/cloudposse/terraform-aws-s3-website

Terraform Module for Creating S3 backed Websites and Route53 DNS - cloudposse/terraform-aws-s3-website

in short, CloudFront could be pointed to an S3 bucket as origin (in which case you need origin access identity) AND also to an S3 bucket as a website - they have completely diff URLs in these two cases

ok let me try that

i get this error Resource ‘aws_security_group.dbsg’ not found for variable ‘aws_security_group.dbsg.id’ even tho i created the sg… hmmm

@pericdaniel need help or found the solution?

@inactive how it goes?

i found a new solution! thank you tho!

i think i did a local and an outpiut

to make it work

Learn about the inner workings of Terraform and examine all the elements of a provider, from the documentation to the test suite. You’ll also see how to create and contribute to them.

my favorite is the first word in the first sentence

Terrafom is an amazing tool that lets you define your infrastructure as code. Under the hood it’s an incredibly powerful state machine that makes API requests and marshals resources.

ill have to watch this

Quick question to @here does the Cloudtrail Module support setting the SNS settings? and is that really needed, just have not used it….

Our terraform-aws-cloudtrail does not current have any code for that

this module created by Jamie does something similar

Terraform module for creating alarms for tracking important changes and occurances from cloudtrail. - cloudposse/terraform-aws-cloudtrail-cloudwatch-alarms

with SNS alarms

I have not personally deployed it.

@davidvasandani someone else reported the exact same EKS problem you ran into

- module.eks_workers.module.autoscale_group.data.null_data_source.tags_as_list_of_maps: data.null_data_source.tags_as_list_of_maps: value of ‘count’ cannot be computed

@patrickleet is trying it right now

maybe you can compare notes

@patrickleet has joined the channel

Good to know I’m not alone.

or let him know what you tried

kops is pretty easy/peasy right?

So easy.

I was up and running in no time and have started learning to deploy statup.

haha ok so you moved to kops

kops is much easier to manage the full SDLC of a kubernetes cluster

rolling updates, drain+cordon

yea I have a couple of kops clusters

and a gke one

ok, yea, so you know the lay of the land

I was specifically trying to play with eks using terraform and came across your modules

we rolled out EKS ~2 months ago for the same reason

these modules were the byproduct of that. @Andriy Knysh (Cloud Posse) is not sure what changed. we always leave things in a working state.

yea - I’m able to plan the vpc

which has tags calculated

which is where it’s choking

it’s not able to get the count

Same here.

haven’t actually tried applying the vpc plan and moving further though

@patrickleet I think we ran into the same issue.

With the null_data_source in eks_workers commented out I had hoped the full eks module would work (I reverted the eks_cluster allowed_security_groups back to the default) but eks_cluster still errors.

module.eks_cluster.aws_security_group_rule.ingress_security_groups: aws_security_group_rule.ingress_security_groups: value of 'count' cannot be computed

Wait.

I was able to plan the VPC just fine.

make your enabled var false

it will plan the VPC with the tags count just fine.

but then errors out when I set it to true, see the Slack link above.

I’ll take a look at the EKS module tomorrow. Not good to have it in that state :(

Is there a comprehensive gitignore file for any terraform project ?

this should be good enough https://github.com/cloudposse/terraform-null-label/blob/master/.gitignore

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - cloudposse/terraform-null-label

that’s what i was using

do you generally state the plan files somewhere if not in your repo ?

you mean to add the plan files to .gitignore?

A collection of useful .gitignore templates. Contribute to github/gitignore development by creating an account on GitHub.

i mean do you store the generated plan somewhere

if you do it on your local computer, the state is stored locally. We use S3 state backend with DynamoDB table for state locking, which is a recommended way of doing things https://github.com/cloudposse/terraform-aws-tfstate-backend

Provision an S3 bucket to store terraform.tfstate file and a DynamoDB table to lock the state file to prevent concurrent modifications and state corruption - cloudposse/terraform-aws-tfstate-backend

are you asking about the TF state or about the files that terraform plan can generate and then use for terraform apply?

i was asking about the later. when you run terraform plan --var-file="config/app.tfvars" --out=app.storage.plan and then terraform apply app.storage.plan

do you store the generated plan file somewhere or just ignore it ?

since we store TF state in S3, we don’t use the plan files, you can say we ignore them

cool

if i have tfvars inside a config dir, ex: config/app1.tfvars, config/app2.tfvars, i think including *.tfvars in gitignore will restrict both these files to be checkedin in my code repo, correct ?

maybe **/*.tfvars?

i thought *.tfvars would be enough, maybe i was wrong

should be enough

will try that

Also, what is the importance of lifecycle block here https://github.com/cloudposse/terraform-aws-tfstate-backend/blob/d7da47b6ee33511bfe99c0fdc49e9e9ac4ab88ec/main.tf#L55

Provision an S3 bucket to store terraform.tfstate file and a DynamoDB table to lock the state file to prevent concurrent modifications and state corruption - cloudposse/terraform-aws-tfstate-backend

in this case it’s not actually needed. but if you use https://github.com/cloudposse/terraform-aws-dynamodb-autoscaler, then it will help to prevent TF from recreating everything when the auto-scaler changes the read/write capacity

Terraform module to provision DynamoDB autoscaler. Contribute to cloudposse/terraform-aws-dynamodb-autoscaler development by creating an account on GitHub.

Terraform module that implements AWS DynamoDB with support for AutoScaling - cloudposse/terraform-aws-dynamodb

2018-12-06

Life cycle hooks can also be super useful for doing X when Y happens. A common case I have is when destroying a Cassandra cluster I need to unmount the ebs volumes from the instances so I can do a fast snapshot and then terminate

Nifty idea!

Need to see if I can publish that

actually this is self explanatory

provisioner "remote-exec" {

inline = [

"sudo systemctl stop cassandra",

"sudo umount /cassandra/data",

"sudo umount /cassandra/commitlog",

]

when = "destroy"

}

}

On Event terminate remote-exec umount

Also great for zero downtime changes, create before destroy

Etc

In the case you posted I would expect its to let terraform know that it doesn’t need to care about any. Changes of the values

lifecycle { ignore_changes = [“read_capacity”, “write_capacity”] }

makes sense

never use lifecycle hooks so it is new to me

In general, where are the lifecycle hooks used ?

Can be used under different conditions. A common one could be where Terraform isn’t the only thing managing a resource, or element of resources.

or if a resource could change outside of TF state like in the example with DynamoDB auto-scaler, which can update the provisioned capacity and TF would see different values and try to recreate it

Seems like this could be considered a bug…. If the table is enabled for autoscaling, then TF shouldb automatically ignore changes to provisioned capacity values…

yea, but auto-scaling is a separate AWS resource and TF would have know how to reconcile two resources, which is not easy

Oh it’s two resources? I see… Hmmm… Maybe it could be handled in one, kind of like rules in a security group have two modes, inline or attached…

@Andriy Knysh (Cloud Posse) I am happy to report that your suggestion fixed my problem, re: CloudFront

I still don’t quite understand how it’s still able to pull the default html page, since I thought that I had to explicitly define it under the index_document parameter

but it somehow works

tyvm for your help

glad it worked for you @inactive

did you deploy the S3 bucket as a website?

i assume no, since I removed the whole website {…} section

but CF doesn’t seem to care, as it serves the content without that designation

we just released new version of https://github.com/cloudposse/terraform-aws-eks-cluster

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

which fixes value of 'count' cannot be computed errors

the example here should work now in one-phase apply https://github.com/cloudposse/terraform-aws-eks-cluster/tree/master/examples/complete

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

@davidvasandani @patrickleet

Thanks @Andriy Knysh (Cloud Posse) and @Erik Osterman (Cloud Posse)

While this module works y’all still prefer kops though right?

Yea…

This is more a proof of concept or looking for folks to ask/add additional features?

Since we don’t have a story for that, we aren’t pushing it. It’s mostly there on standby pending customer request to use EKS over Kops

Then we will invest in the upgrade story.

I really like the architecture of the modules

And the way we decomposed it makes it easy to have different kinds of node pools

The upgrade story would probably use that story to spin up multiple node pools and move workloads around and then then scale down the old node pool

Almost like replicating the Kubernetes strategy for deployments and replica sets

But for auto scale groups instead

2018-12-07

is this section working for anyone ? https://github.com/cloudposse/terraform-aws-elasticsearch/blob/master/main.tf#L159

Terraform module to provision an Elasticsearch cluster with built-in integrations with Kibana and Logstash. - cloudposse/terraform-aws-elasticsearch

it sets a router53 record for me but it’s not working

dig production-kibana.infra.mytonic.com

;; QUESTION SECTION:

;production-kibana.infra.mytonic.com. IN A

;; ANSWER SECTION:

production-kibana.infra.mytost.com. 59 IN CNAME vpc-fluentd-production-elastic-43nbkjmxhatoajegdhyxekul3a.ap-southeast-1.es.amazonaws.com/_plugin/kibana/.

;; AUTHORITY SECTION:

. 86398 IN SOA a.root-servers.net. nstld.verisign-grs.com. 2018120700 1800 900 604800 86400

;; Query time: 333 msec

;; SERVER: 8.8.8.8#53(8.8.8.8)

but if I hit <http://production-kibana.infra.mytost.com> it’s not working.

I have to add <http://production-kibana.infra.mytost.com/_plugin/kibana/> manually

@Andriy Knysh (Cloud Posse)

I have a generalish terraform / geodesic question so not sure if I should ask in here or #geodesic

I’d like to spin up . 4 accounts with 4 identical vpc’s (with the exception of name and cidr) in which 3 will have kops managed k8s clusters

2 of those 3 the k8s cluster would be launched after the vpc needs to be around for some time

What I have not quite yet under stood is how the two different flows will look.

1.a) create vpc b) create resources in that vpc c) create k8s cluster with kops in existing vpc 2.a) create vpc using kops b) create resources in the vpc created by kops

for flow 1 in a geodesig way

im not sure I understand how or if it is supported to launch into and EXISTING vpc

Terraform module to create a peering connection between a backing services VPC and a VPC created by Kops - cloudposse/terraform-aws-kops-vpc-peering

terraform-aws-kops-vpc-peering - Terraform module to create a peering connection between a backing services VPC and a VPC created by Kops

So is it expected that any geodesic kops created k8s cluster will run in its own vpc?

@Jan i’ll help you

so yes, you can deploy kops cluster into an existing VPC

but we don’t do that for a few reason

you don’t want to manage CIDR overlaps for example

I know I can and how to, its the pattern I have followed

so we deploy kops into a separate VPC

mmm

Not sure I understand the rational there

and then deploy a backing-services VPC for all other stuff like Aurora, Elasticsearch, Redis etc.

then we do VPC peering

we don’t want to manage CIDR overlaps

there is no overlap

the k8s cluster uses the vpc cidr

but you can deploy kops into an existing VPC

there is no diff, but just more separation

IP’s are still managed by the dhcp optionset in the vpc

mmm

and we have TF modules that do it already

interesting

i can show you the complete flow for that (just deployed it for a client)

Alright I think I will write a TF module to do k8s via kops into an existing and make a pr

Yea I have seen the flow for creating a backing VPC + kops (k8s)vpc with peering

when we tried to deploy kops into existing VPC, we ran into a few issues, but i don’t remember exactly which ones

I recall there being weirdness and cidr overlaps if you dont use the subnet =ids and stuff

deploying into a separate VPC, did not see any issues

so for example I have some thing like this

corporate network === AWS DirectConnect ==> [shared service-vpc(k8s)] /22 cidr —> peering –> [{prod,pre-prod}-vpc(k8s)] /16 cidr

where we run shared monitoring and logging and ci/cd services in the shared-services vpc (mostly inside k8s)

k8s also has ingress rules that expose services in the peered prod and pre prod accounts tot he corp network

overhead on the corp network is just the routing of the /22

so you already using vpc peering?

I am busy setting this all up

corp network will have direct connect to a /22 vpc

the /22 with have peering to many vpcs within a /16

that /16 we will split as many times as we need, probably into /24’s

or 23’s

@Jan your question was how to deploy kops into an existing VPC?

My question was more if there is geodesic support for that flow or if i should add a module to do so

we deploy kops (from geodesic, but it’s not related) from a template, which is here https://github.com/cloudposse/geodesic/blob/master/rootfs/templates/kops/default.yaml

Geodesic is the fastest way to get up and running with a rock solid, production grade cloud platform built on top of strictly Open Source tools. https://slack.cloudposse.com/ - cloudposse/geodesic

the template deploys it into a separate vpc

Yep this I used to do having terraform render a go templated

ok I will play with a module and see where the overlap/handover is

if we want to deploy into an existing vpc using the template, it should be modified

there is no TF module for that

Yep, I will create one

TF module comes into play when we need to do vpc peering

just figured Id ask before I made one

you thinking about a TF module to deploy kops?

a new tf module to fetch vpc metadatas (maybe), and deploy k8s via kops into the existing vpc

vpc metadata would not be needed if it vpc was created in tf

for kops metadata, take a look https://github.com/cloudposse/terraform-aws-kops-metadata

Terraform module to lookup resources within a Kops cluster for easier integration with Terraform - cloudposse/terraform-aws-kops-metadata

yea so this is that flow in reverse

example here https://github.com/cloudposse/terraform-root-modules/blob/master/aws/backing-services/kops-metadata.tf

Collection of Terraform root module invocations for provisioning reference architectures - cloudposse/terraform-root-modules

or here we lookup both vpcs, kops and backing-services https://github.com/cloudposse/terraform-root-modules/blob/master/aws/eks-backing-services-peering/main.tf

Collection of Terraform root module invocations for provisioning reference architectures - cloudposse/terraform-root-modules

@Jan not sure if it answers your questions :disappointed: but what we have is 1) kops template to deploy kops into a separate VPC; 2) TF modules for VPC peering and kops metadata lookup

we played with deploying kops into an existing vpc, but abandoned it for a few reasons

@sohel2020 you have to manually add _plugin/kibana/ to <http://production-kibana.infra.mytost.com> because URL paths are not supported for CNAMEs (and [production-kibana.infra.mytost.com](http://production-kibana.infra.mytost.com) is a CNAME to the Elasticsearch URL generated by AWS)

https://stackoverflow.com/questions/9444055/using-dns-to-redirect-to-another-url-with-a-path https://webmasters.stackexchange.com/questions/102331/is-it-possible-to-have-a-cname-dns-record-point-to-a-url-with-a-path

I’m trying to redirect a domain to another via DNS. I know that using IN CNAME it’s posible. www.proof.com IN CNAME www.proof-two.com. What i need is a redirection with a path. When someone type…

I’ve registered several domains for my nieces and nephews, the idea being to create small static webpages for them, so they can say ‘look at my website!’. In terms of hosting it, I’m using expres…

even if you do it in the Route53 console, you get the error

The record set could not be saved because:

- The Value field contains invalid characters or is in an invalid format.

Yea you want to inject kops into a vpc. We don’t have that but I do like it and we have a customer that did that but without modularizing it.

I have done it in several ways in tf

I will look to make a module and contribute it

We would like to have a module for it, so that would be awesome

thanks @Jan

just a quick sanity check. when was the last time someone used the terraform-aws-vpc module… i copy pasta’d the example

module "vpc" {

source = "git::<https://github.com/cloudposse/terraform-aws-vpc.git?ref=master>"

namespace = "cp"

stage = "prod"

name = "app"

}

and it gave me Error: module "vpc": missing required argument "vpc_id"

We use it all the time

sorta funny when thats what im trying to create

perhaps the sample is messed

Last week most recently. @Andriy Knysh (Cloud Posse) any ideas?

Most current examples are in our root modules folder

Sec

@solairerove will be adding and testing all examples starting next week probably

@solairerove has joined the channel

just used it yesterday on the EKS modules

used the example that pulled the master branch

maybe im an idiot

let me go back and check

nm nothing to see here

tested this yesterday https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/examples/complete/main.tf#L36

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

i musta gotten my pasta messed up

at least it didnt take me all day

the error must have been from another module?

yeah

heres another question… https://github.com/cloudposse/terraform-aws-cloudwatch-flow-logs

Terraform module for enabling flow logs for vpc and subnets. - cloudposse/terraform-aws-cloudwatch-flow-logs

whats kinesis in there for.

shipping somewhere is suppose

Could have lambda slurp off the Kinesis stream, for example

This module was done more for checking a compliance checkbox

All logs stored in s3, but nothing immediately actionable

ahh cool. they have shipping to cwl now, was just wondering if there was a specific purpose

Yea, unfortunately not.

2018-12-08

how to know the best value for max_item_size for memcache aws_elasticache_parameter_group ?

does it depend on the instance type ?

2018-12-09

@rohit Are you hitting a problem caused by the default max item size?

@joshmyers no. i just want to know how the max_item_size works

memcached development tree. Contribute to memcached/memcached development by creating an account on GitHub.

Max item size is the length of the longest value stored. If you are serializing data structures or session data, this could get quite large. If you are just storing simple key value pairs a smaller number is probably fine. This is a hint to memcache for how to organize the data and the size of slabs for storing objects.

What kinda things are you storing in there?

we are using memcache for tomcat session storage

so basically session information

Is 1mb object storage enough for you?

I think so but i will have to check

Looks like availability_zones option is deprecated in favor of preferred_availability_zones

https://github.com/cloudposse/terraform-aws-elasticache-memcached/blob/65a0655e8bde7fb177516bbcdd394eddc8cfcc88/main.tf#L76

Terraform Module for ElastiCache Memcached Cluster - cloudposse/terraform-aws-elasticache-memcached

Could you add an issue for this?

Terraform Module for ElastiCache Memcached Cluster - cloudposse/terraform-aws-elasticache-memcached

sure can

Thanks!

I think i also know how to fix it

so will probably submit a PR sometime tomorrow

Is there a way to view what is stored in dynamodb table ? I was not able to find anything but empty table

The Dynamodb table can be viewed and modified via the DynamoDB UI. There is nothing hiding it Once a Terraform config is setup correctly to write to S3 with locking and applied successfully, it will write to that table. Utill then it will be empty

ohh ok

I am using the terraform-aws-vpc module https://github.com/terraform-aws-modules/terraform-aws-vpc

Terraform module which creates VPC resources on AWS - terraform-aws-modules/terraform-aws-vpc

is it possible to update openvpn configuration,after a vpc is created using this module ?

openvpn? <– not related to vpc

Is there a way to provide subnet information instead of cidr_blocks in resource aws_security_group ingress and egress ?

Can you share an example? Pseudo code

I am on my phone

# create efs resource for storing media

resource "aws_efs_file_system" "media" {

tags = "${merge(var.global_tags, map("Owner","${var.app_owner}"))}"

encrypted = "${var.encrypted}"

performance_mode = "${var.performance_mode}"

throughput_mode = "${var.throughput_mode}"

kms_key_id = "${var.kms_key_id}"

}

resource "aws_efs_mount_target" "media" {

count = "${length(var.aws_azs)}"

file_system_id = "${aws_efs_file_system.media.id}"

subnet_id = "${element(var.vpc_private_subnets, count.index)}"

security_groups = ["${aws_security_group.media.id}"]

depends_on = ["aws_efs_file_system.media", "aws_security_group.media"]

}

# security group for media

resource "aws_security_group" "media" {

name = "${terraform.workspace}-media"

description = "EFS"

vpc_id = "${var.vpc_id}"

lifecycle {

create_before_destroy = true

}

ingress {

from_port = "2049" # NFS

to_port = "2049"

protocol = "tcp"

cidr_blocks = ["${element(var.vpc_private_subnets,0)}","${element(var.vpc_private_subnets,1)}","${element(var.vpc_private_subnets,2)}"]

description = "vpc private subnet"

}

ingress {

from_port = "2049" # NFS

to_port = "2049"

protocol = "tcp"

cidr_blocks = ["${element(var.vpc_public_subnets,0)}","${element(var.vpc_public_subnets,1)}","${element(var.vpc_public_subnets,2)}"]

description = "vpc public subnet"

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

tags = "${merge(var.global_tags, map("Owner","${var.app_owner}"))}"

}

i am getting the following error

any reason you’re not using our module? …just b/c our goal is to make the modules rock solid, rather than manage multiple bespoke setups

It’s just that some of the parameters were not being passed in your module

for example, kms_key_id,throughput_mode

Provides a security group resource.

yes, i can but i want to know if it is possible to directly pass subnet info

Provides a security group resource.

or vpc id

You can pass cidr, security group, or prefix. VPC doesn’t make sense in this context. But I gave you an example of how to get subnets from the vpc in other thread

I don’t think we can pass subnet groups in cidr_blocks

You can pass a list of cidrs. How do you mean subnet group differently?

But it is not hard to use data to look up the cidr blocks

@Steven could you please share an example ?

I am using terraform-aws-modules/vpc/aws module

For the VPC, you are creating the subnets. So, there is no other option than providing the cidrs you want. But once the subnets have been created, you can query for them instead of hard coding them into other code.

data “aws_vpc” “vpc” { tags { Environment = “${var.environment}” } }

data “aws_subnet_ids” “private_subnet_ids” { vpc_id = “${data.aws_vpc.vpc.id}”

tags { Network = “private” } }

data “aws_subnet” “private_subnets” { count = “${length(data.aws_subnet_ids.private_subnet_ids.ids)}” id = “${data.aws_subnet_ids.private_subnet_ids.ids[count.index]}” }

Example of using data from above def:

vpc_id = “${data.aws_vpc.vpc.id}” subnets = “${data.aws_subnet_ids.private_subnet_ids.ids}” ingress_cidr = “${data.aws_subnet.private_subnets.*.cidr_block}”

@Erik Osterman (Cloud Posse) i am using cloudposse/terraform-aws-efs module https://github.com/cloudposse/terraform-aws-efs/blob/1ad219e482eba444eb31b6091ecb6827a0395644/main.tf#L38

Terraform Module to define an EFS Filesystem (aka NFS) - cloudposse/terraform-aws-efs

and i have to pass security_groups

when i execute the following code

i get the following error

any ideas why ?

2018-12-10

mmm

@rohit does it work without the aws_efs_mount_target resource? Does it create the security group?

It looks legit on first inspection (under caffeinated at the mo)

yea I would agree, nothing jumps out as being wrong

Could be something like https://github.com/hashicorp/terraform/issues/18129

Seemingly, when a validation error occurs in a resource (due to a failing ValidateFunc), terraform plan returns missing resource errors over returning the original validation error that caused the …

Also, you shouldn’t need that explicit depends_on, unless that was you testing the graph

i thought it was dependency error so added depends_on

without aws_efs_mount_target, i get the following error

* module.storage.module.kitemedia.aws_security_group.media: "ingress.0.cidr_blocks.0" must contain a valid CIDR, got error parsing: invalid CIDR address: subnet-012345d8ce0d89dbc

So that is the problem. The error when wanting to create the SG is not bubbling up through the code and the SG isn’t actually created because of above error parsing the CIDR address which is actually a subnet

and the error you are receiving instead is just saying I can’t find that SG id

fix the ^^ error with CIDR and try re running without the depends_on and with aws_efs_mount_target uncommented

i think that fixed the issue

@joshmyers thanks

also, i am not able to view output variables

Are you outputting the variables?

yes

for efs, i am now facing the following issue

* module.storage.module.kitemedia.aws_efs_mount_target.kitemedia[0]: 1 error(s) occurred:

* aws_efs_mount_target.kitemedia.0: MountTargetConflict: mount target already exists in this AZ

status code: 409, request id: 74cdca17-fc8a-11e8-bdb0-d7feddd82bcc

* module.storage.module.kitemedia.aws_efs_mount_target.kitemedia[11]: 1 error(s) occurred:

* aws_efs_mount_target.kitemedia.11: MountTargetConflict: mount target already exists in this AZ

status code: 409, request id: 7eca3ec0-fc8a-11e8-b0cb-e76fd688df15

* module.storage.module.kitemedia.aws_efs_mount_target.kitemedia[4]: 1 error(s) occurred:

* aws_efs_mount_target.kitemedia.4: MountTargetConflict: mount target already exists in this AZ

status code: 409, request id: 755597d2-fc8a-11e8-a0c5-25395ed55c14

* module.storage.module.kitemedia.aws_efs_mount_target.kitemedia[24]: 1 error(s) occurred:

What is var.aws_azs set to?

It looks like that count is way higher than I’d imagine the number of AZs available…

@joshmyers you were correct

i updated the count to use the length

` count = “${length(split(“,”, var.aws_azs))}”`

but i still get 2 errors

* module.storage.module.kitemedia.aws_efs_mount_target.kitemedia[2]: 1 error(s) occurred:

* aws_efs_mount_target.kitemedia.2: MountTargetConflict: mount target already exists in this AZ

status code: 409, request id: c11d8b5d-fc8c-11e8-a6ac-03687caf52eb

* module.storage.module.kitemedia.aws_efs_mount_target.kitemedia[0]: 1 error(s) occurred:

* aws_efs_mount_target.kitemedia.0: MountTargetConflict: mount target already exists in this AZ

status code: 409, request id: c11db25f-fc8c-11e8-adc4-b7e10e019ae2

Any reason not to declare aws_azs as a list, rather than csv?

and have they actually been created already but not written to the Terraform state?

If so, manually delete the EFS mount targets and re run TF

that worked

i am still not able to view the outputs

i have them in outputs.tf

output "kitemedia_dns_name" {

value = "${aws_efs_file_system.kitemedia.id}.efs.${var.aws_region}.amazonaws.com"

}

when i run terraform output kitemedia_dns_name

The state file either has no outputs defined, or all the defined

outputs are empty. Please define an output in your configuration

with the `output` keyword and run `terraform refresh` for it to

become available. If you are using interpolation, please verify

the interpolated value is not empty. You can use the

`terraform console` command to assist.

this is what i am seeing

Inspect the statefile - is ^^ correct and there are no outputs defined in there?

and is that output in the module that you are calling?

do i have to use fully qualified name ?

@rohit try just terraform output from the correct folder and see what it outputs

when i navigate to the correct folder, i get

The module root could not be found. There is nothing to output.

i am able to see them in the state file

but not on the command line using terraform output

@rohit again, are you outputting the value in the module, and then also in the thing which calls the module as my example above?

yeah tried that

still same issue

Without being able to see any code

¯_(ツ)_/¯

this is what i have inside [outputs.tf](http://outputs.tf) under kitemedia module

output "kitemedia_dns_name" {

value = "${aws_efs_file_system.kitemedia.id}.efs.${var.aws_region}.amazonaws.com"

}

and then this is what i have inside [outputs.tf](http://outputs.tf) that calls kitemedia module

output "kitemedia_dns_name" {

value = "${module.storage.kitemedia.kitemedia_dns_name}"

}

Where has “storage” come from in module.storage.kitemedia.kitemedia_dns_name

Please post full configs including all vars and outputs for both the module and the code calling the module in a gist

@rohit what IDE/Editor are you using?

suggest to try https://www.jetbrains.com/idea/, it has a VERY nice Terraform plugin, shows and highlights all errors, warning, and other inconsistencies like wrong names, missing vars, etc.

Capable and Ergonomic Java IDE for Enterprise Java, Scala, Kotlin and much more…

vscode is actually quite nice too - never thought I’d say that about an MS product but times are a changin’

yea, i tried it, it’s nice

Also has good TF support

i am using vscode

im using vscode too, works well enough

yep

just tried to understand where storage comes from in module.storage.kitemedia.kitemedia_dns_name as @joshmyers pointed out

It’s actually module.kitemedia.kitemedia_dns_name

Working now?

nope

i am not sure what’s wrong

can you post the full code?

i will try

i have another question

so i have the following structure,

modules/compute/app1/main.tf, modules/compute/app2/main.tf, modules/compute/app3/main.tf

i want to use an output variable from modules/compute/app1/main.tf in modules/compute/app2/main.tf

so i am writing my variable to outputs.tf

now, how do i access the variable in modules/compute/app2/main.tf ?

does this makes sense ?

it’s easy if all of those folders are modules, then you use a relative path to access the module and use its outputs, e.g. https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/examples/complete/main.tf#L62

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

I was not aware that something like this can be done

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

i am sure there are many more features like this

@Andriy Knysh (Cloud Posse) i tried what you suggested but i am still facing problems

@rohit post here your code and the problems you are having

It is hard to paste the entire code but i will try my best

In my modules/compute/app1/outputs.tf i have

output "ec2_instance_security_group" {

value = "${aws_security_group.instance.id}"

}

In my modules/compute/app2/main.tf i am trying to do something like this

data "terraform_remote_state" "instance" {

backend = "s3"

config {

bucket = "${var.namespace}-${var.stage}-terraform-state"

key = "account-settings/terraform.tfstate"

}

}

security_groups = ["${data.terraform_remote_state.instance.app1.ec2_instance_security_group}"]

i feel that i am missing something here

you did not configure your remote state, you just using ours

I looked at the state file and it is listed under

"path": [

"root",

"compute",

"app1"

],

"outputs": {

"ec2_instance_security_group": {

"sensitive": false,

"type": "string",

"value": "REDACTRED"

}

}

config {

bucket = "${var.namespace}-${var.stage}-terraform-state"

key = "account-settings/terraform.tfstate"

}

^ needs to be updated to reflect your bucket and your folder path

yes i did that

i did not wanted to paste the actual values

hmm

there is no secrets here

config {

bucket = "${var.namespace}-${var.stage}-terraform-state"

key = "account-settings/terraform.tfstate"

}

Is the output from my state file helpful ?

update and paste the code

data "terraform_remote_state" "compute" {

backend = "s3"

workspace = "${terraform.workspace}"

config {

bucket = "bucketname"

workspace_key_prefix = "tf-state"

key = "terraform.tfstate"

region = "us-east-1"

encrypt = true

}

}

is that helpful ?

if you look at the state bucket, do you see the file under terraform.tfstate?

it’s probably under one of the app subfolders

e.g. app1 or app2

the state file is under, bucketname/tf-state/eakk/terraform.tfstate

key = "tf-state/eakk/terraform.tfstate"