#terraform (2019-10)

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2019-10-01

Good morning, I’m having issues with block_device_mappings in a launch template

Terraform 0.12

    block_device_mappings {
      device_name = "/dev/xvda"

      ebs {
        volume_type = "gp2"
        volume_size = 64
      }
    }

    block_device_mappings {
      device_name = "/dev/sdb"

      ebs {
        volume_type = "io1"
        iops        = var.iops
        volume_size = var.volume_size
      }
    }

That’s resulting in this

Any idea what I am doing wrong?

what’s the issue?

terraform is adding iops to the gp2 volume

don’t know why

so maybe that’s a default

Actually everything is good

false alarm

thanks for your help

need some gurus with this. I have a PowerShell script to pull an artifact from S3 and install the application during the EC2 bootstrap process. Standalone, the PowerShell script works. When applied via the User Data property, I get the following in the Ec2ConfigLog when I remote into the instance to see what happened:

2019-10-01T16:13:54.710Z: Ec2HandleUserData: Message: Start running user scripts

2019-10-01T16:13:54.726Z: Ec2HandleUserData: Message: Could not find <runAsLocalSystem> and </runAsLocalSystem>

2019-10-01T16:13:54.726Z: Ec2HandleUserData: Message: Could not find <powershellArguments> and </powershellArguments>

2019-10-01T16:13:54.726Z: Ec2HandleUserData: Message: Could not find <persist> and </persist>

I’ve ruled out the Powershell version since v4 works with it and even base64-decoded what Terraform does and uploads when creating the resource. The target instance is a Windows Server 2012 box. Ideas appreciated.

2019-10-02

figured out my problem…the custom AMI i was using didn’t have user data script execution enabled. The little things we miss…

@here public #office-hours starting now! join us to talk shop https://zoom.us/j/508587304

Does SweetOps have any terraform module to create a Kubernetes cluster using kops?

no, we use kops directly, but we use terraform to provision backing services needed by kops

i have seen some modules out there do what you say though…

(not by us)

@Erik Osterman (Cloud Posse) Could you please give me a link?

GitHub is where people build software. More than 40 million people use GitHub to discover, fork, and contribute to over 100 million projects.

Can anyone recommend a 0.12-ready ECS service module?

I tried airship but can’t even get past terraform init unfortunately

2019-10-03

@Erik Osterman (Cloud Posse)

Many moons ago we discussed that Dockerfile is for global variables (#geodesic), .envrc is good for slightly less global variables, but shared across applications, and that for terraform only variables it should live in an auto.tfvars file.

I’ve since followed that rule but I’ve noticed the following warning:

Using a variables file to set an undeclared variable is deprecated and will

become an error in a future release. If you wish to provide certain "global"

settings to all configurations in your organization, use TF_VAR_...

environment variables to set these instead.

It must be time to move away from that approach and actually start placing Terraform variables in our .envrc with USE tfenv right?

I don’t think that’s what this means.

It means you have a variable in one of your .tfvars files that does not have a corresponding variable block:

variable "...." {

}
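To make the point above concrete, here is a minimal sketch of the pairing Terraform expects. The variable name `region`, its value, and the two file names are hypothetical; the block shows the contents of two separate files, marked by comments.

```hcl
# --- variables.tf ---
# Every key assigned in a .tfvars file needs a matching declaration like this.
variable "region" {
  type        = string
  description = "AWS region to deploy into (hypothetical example variable)"
}

# --- terraform.auto.tfvars ---
# Assigns a value to the declared variable. Without the `variable "region"`
# block above, Terraform 0.12 prints the "undeclared variable" deprecation
# warning for this assignment.
region = "eu-west-1"
```

Values that really are shared across many projects can still be supplied as `TF_VAR_region`-style environment variables, which is what the warning text suggests.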

Hi. I’m dealing with some really old terraform files that sadly don’t specify the version of the modules that were used to apply the infrastructure. Is there a way to tell that from the tf state file?

Hrmmmm that could be tricky. I can’t recall if the tfstate file persists that. Have you had a look at it?

Does anyone have a terraform script that creates a stack with prometheus and thanos?

i built a VPC pre TF v0.12 using the terraform-aws-modules/vpc/aws. I forgot to pin down the version. Anyone know the last compatible version with TF v0.11.x? Getting the following error:

Error downloading modules: Error loading modules: module vpc: Error parsing .terraform/modules/21a99daec297cf2c47674e5f63337da8/terraform-aws-modules-terraform-aws-vpc-5358041/main.tf: At 2:23: Unknown token: 2:23 IDENT max

ask in #terraform-aws-modules, the channel for https://github.com/terraform-aws-modules

Collection of Terraform AWS modules supported by the community - Terraform AWS modules

Terraform module which creates VPC resources on AWS - terraform-aws-modules/terraform-aws-vpc

any reason why this: https://github.com/cloudposse/terraform-aws-kms-key/blob/0.11/master/main.tf#L43 got deleted from the TF 0.12 version?

Terraform module to provision a KMS key with alias - cloudposse/terraform-aws-kms-key

looks like there is already a pull request for this :

Brought master branch in line with 0.11/master branch

it was added to TF 0.11 branch after it was converted to 0.12

I see so that PR should be ok to merge ?

after a few minor issues fixed, yes (commented in the PR)

awesome

Is it possible to disable the log bucket for the terraform-aws-s3-website module? It seems like it was going to be configurable but I can’t find it.

https://github.com/cloudposse/terraform-aws-s3-website/issues/21#issuecomment-420829113

module Parameters module "dev_front_end" { source = "git://github.com/cloudposse/terraform-aws-s3-website.git?ref=master"> namespace = "namespace" stage = "…

I’m not sure off the bat, however, @Andriy Knysh (Cloud Posse) is working on terraform all next week (as this week)

he’s currently working on the beanstalk modules

we can maybe get to this after that (or if you want to open a PR)

it’s easy to implement: a new var.logs_enabled and dynamic block for logging

but when it’s enabled, it still can’t be destroyed automatically w/o adding force_destroy as is done for the main bucket https://github.com/cloudposse/terraform-aws-s3-website/blob/master/main.tf#L45

Terraform Module for Creating S3 backed Websites and Route53 DNS - cloudposse/terraform-aws-s3-website

that’s probably another var.logs_force_destroy
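A rough sketch of what the suggestion above could look like, assuming a 2019-era AWS provider where `logging` is still an inline block on `aws_s3_bucket`. This is not the module's actual implementation; the bucket names and resource labels are hypothetical, and only `logs_enabled` and `logs_force_destroy` come from the discussion.

```hcl
variable "logs_enabled" {
  type        = bool
  default     = true
  description = "Set to false to skip creating the access-log bucket"
}

variable "logs_force_destroy" {
  type        = bool
  default     = false
  description = "Allow the log bucket to be destroyed even if it contains objects"
}

# The log bucket only exists when logging is enabled.
resource "aws_s3_bucket" "logs" {
  count         = var.logs_enabled ? 1 : 0
  bucket        = "example-website-logs" # hypothetical name
  acl           = "log-delivery-write"
  force_destroy = var.logs_force_destroy
}

resource "aws_s3_bucket" "website" {
  bucket = "example-website" # hypothetical name

  # Emits zero or one `logging` block depending on var.logs_enabled.
  dynamic "logging" {
    for_each = var.logs_enabled ? [1] : []
    content {
      target_bucket = aws_s3_bucket.logs[0].id
      target_prefix = "logs/"
    }
  }
}
```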

Cool! Thanks @Andriy Knysh (Cloud Posse) and @Erik Osterman (Cloud Posse) I’ll work on a PR. Sorry for not just looking at the code and realizing that a PR would resolve it.

what’s happening here -> `count = "${var.add_sns_policy != "true" && var.sns_topic_arn != "" ? 0 : 1}"` can someone explain? referring to https://github.com/cloudposse/terraform-aws-efs-cloudwatch-sns-alarms/blob/master/main.tf

Terraform module that configures CloudWatch SNS alerts for EFS - cloudposse/terraform-aws-efs-cloudwatch-sns-alarms

take a look here, https://bit.ly/1mz5q0g wikipedia article on the ternary operator
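For readers who want the expression unpacked, here is a small sketch in Terraform 0.12 syntax that splits it into named steps. The variable names come from the question; the defaults and the local names `skip_policy` and `policy_count` are made up for illustration.

```hcl
variable "add_sns_policy" {
  type    = string
  default = "false"
}

variable "sns_topic_arn" {
  type    = string
  default = ""
}

locals {
  # Comparisons bind tighter than &&, and && binds tighter than ?:, so the
  # original expression groups as (a != "true" && b != "") ? 0 : 1.
  skip_policy = var.add_sns_policy != "true" && var.sns_topic_arn != ""

  # 0 means the resource is not created; 1 means one instance is created.
  policy_count = local.skip_policy ? 0 : 1
}
```

With the defaults above, `sns_topic_arn` is empty, so `skip_policy` is false and `policy_count` is 1.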

2019-10-04

Hi guys. This morning I copied a working geodesic into a new one and got an error (403) when trying to upload tfstate to the tfstate-backend S3 bucket. Digging into this, I found a bucket policy that was not present on the other buckets. I don’t really understand where it can come from, since all the versions and image tags are pinned. Has anybody already faced such a case?

403 is auth. You’re likely trying to upload to the bucket of the old account/geodesic shell

Go into your Terraform project’s directory and run env | grep -i bucket and see what the S3 bucket is to confirm or disprove that theory.

old one:

TF_BUCKET_REGION=eu-west-3

TF_BUCKET=go-algo-dev-terraform-state

new one:

TF_BUCKET_REGION=eu-west-3

TF_BUCKET=go-algo-commons-terraform-state

Hm. Not what I thought

in fact I don’t understand how the old one didn’t have the bucket policy, which is clearly defined in aws-tfstate-backend (still, it doesn’t explain why it was blocking me, but …)

the usage was :

- start geodesic

- assume-role

- go in tfstate-backend folder

- comment s3 state backend

- init-terraform

- terraform plan/apply

- uncomment s3 backend

- re init-terraform and answer yes about uploading existing state -> 403

and the error is :

Initializing modules...

- module.tfstate_backend

- module.tfstate_backend.base_label

- module.tfstate_backend.s3_bucket_label

- module.tfstate_backend.dynamodb_table_label

Initializing the backend...

Do you want to copy existing state to the new backend?

Pre-existing state was found while migrating the previous "local" backend to the

newly configured "s3" backend. No existing state was found in the newly

configured "s3" backend. Do you want to copy this state to the new "s3"

backend? Enter "yes" to copy and "no" to start with an empty state.

Enter a value: yes

Releasing state lock. This may take a few moments...

Error copying state from the previous "local" backend to the newly configured "s3" backend:

failed to upload state: AccessDenied: Access Denied

status code: 403, request id: XXXXXXXXXXXXX, host id: xxxxxxxxxxxxxxxx

The state in the previous backend remains intact and unmodified. Please resolve

the error above and try again.

deleting bucket policy allowed upload with the same command

The state in the local tfstate file is your old bucket

I suspect it is using the same .terraform directory as the old geodesic / account?

I’ve had that a few times when I forget to reinit properly.

hmm, it should not, since this is ignored in dockerignore, no?

will have to clone it again so I will check

and also the terraform-state folder is coming from FROM cloudposse/terraform-root-modules:0.106.1 as terraform-root-modules

so there should be no way of importing a pre-existing .terraform folder unless I am missing something

Yeh wasn’t sure if you were running from /localhost or /conf

yes from /conf

ok i duplicated a second env from the original one and i have got the same symptoms (403 when uploading tf state)

the policy applied to the bucket is this one :

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "DenyIncorrectEncryptionHeader",
          "Effect": "Deny",
          "Principal": {
            "AWS": "*"
          },
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::go-algo-prod-terraform-state/*",
          "Condition": {
            "StringNotEquals": {
              "s3:x-amz-server-side-encryption": "AES256"
            }
          }
        },
        {
          "Sid": "DenyUnEncryptedObjectUploads",
          "Effect": "Deny",
          "Principal": {
            "AWS": "*"
          },
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::go-algo-prod-terraform-state/*",
          "Condition": {
            "Null": {
              "s3:x-amz-server-side-encryption": "true"
            }
          }
        }
      ]
    }

I tried removing only the 1st statement => 403, then only the 2nd one => 403, so I guess both are causing the issue

running in eu-west-3, if that makes a difference for ServerSideEncryption
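The thread does not reach a confirmed root cause. One plausible explanation is that both policy statements deny any `PutObject` that lacks the `x-amz-server-side-encryption: AES256` header, and the S3 backend only sends that header when `encrypt` is enabled. A hedged sketch of a backend block that would satisfy such a policy follows; the bucket and region are taken from the environment output above, while the `key` and `dynamodb_table` values are hypothetical.

```hcl
terraform {
  backend "s3" {
    bucket = "go-algo-commons-terraform-state"
    region = "eu-west-3"

    # Hypothetical values, adjust to the project layout.
    key            = "tfstate-backend/terraform.tfstate"
    dynamodb_table = "go-algo-commons-terraform-state-lock"

    # Ask the backend to upload state with server-side encryption, which the
    # bucket policy above requires for every PutObject call.
    encrypt = true
  }
}
```

If `encrypt` is already set in the real configuration, the cause lies elsewhere and this sketch does not apply.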

anyone knows what the syntax of container_depends_on input in cloudposse/terraform-aws-ecs-container-definition should look like? CC: @Erik Osterman (Cloud Posse) @sarkis @Andriy Knysh (Cloud Posse)

in https://docs.aws.amazon.com/AmazonECS/latest/APIReference/API_ContainerDependency.html it’s specified as containing two values: condition and containerName

The dependencies defined for container startup and shutdown. A container can contain multiple dependencies. When a dependency is defined for container startup, for container shutdown it is reversed.

so should I pass a list of maps that contains those two keys?

try to provide a list of maps with those keys

let us know if any issues

will do thanks!
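As an illustration of the "list of maps" suggestion, a call might look roughly like the sketch below. The keys `containerName` and `condition` come from the ECS API reference linked above; the container names and image are hypothetical, and the exact type the module accepts may differ between module versions.

```hcl
module "app_container" {
  source = "cloudposse/ecs-container-definition/aws"

  container_name  = "app"          # hypothetical
  container_image = "nginx:latest" # hypothetical

  # One map per dependency, using the ContainerDependency keys.
  container_depends_on = [
    {
      containerName = "sidecar" # hypothetical container in the same task
      condition     = "START"   # one of START, COMPLETE, SUCCESS, HEALTHY
    }
  ]
}
```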

I’m using something like this in the terraform-aws-ecs-container-definition module, with my tf version being 0.12.9:

    log_options = {
      awslogs-create-group  = true
      awslogs-region        = "eu-central-1"
      awslogs-groups        = "group-name"
      awslogs-stream-prefix = "ecs/service"
    }

but I get

Definition container_definitions is invalid: Error decoding JSON: json: cannot unmarshal bool into Go struct field LogConfiguration.Options of type string

I’ve tried to use (double-)quotes for the “true” and still didn’t get rid of it…

@sarkis @Andriy Knysh (Cloud Posse) ^^^

Hello, I’m looking at this module: https://github.com/cloudposse/terraform-aws-ecs-web-app/blob/master/main.tf

Terraform module that implements a web app on ECS and supports autoscaling, CI/CD, monitoring, ALB integration, and much more. - cloudposse/terraform-aws-ecs-web-app

and I’m wondering, why is there an alb_ingress definition, but it seems that there is no ALB being created?

The listener needs to be created in that module and then passed along here: https://github.com/cloudposse/terraform-aws-ecs-web-app/blob/master/main.tf#L94

~https://github.com/cloudposse/terraform-aws-ecs-alb-service-task module creates the ALB itself~ it’s been a while since I used these - see @Andriy Knysh (Cloud Posse) response below for a better explanation

ALB is created separately

take a look at this example https://github.com/cloudposse/terraform-aws-ecs-atlantis/blob/master/examples/without_authentication/main.tf#L32

Terraform module for deploying Atlantis as an ECS Task - cloudposse/terraform-aws-ecs-atlantis

So, when I try to spin down this stack, I get:

module.alb_ingress.aws_lb_target_group.default: aws_lb_target_group.default: value of 'count' cannot be computed

And, if I spin the stack up, then destroy it, I hit this error, and even running a new plan then gives the same error.

this project was applied and destroyed many times w/o those errors https://github.com/cloudposse/terraform-root-modules/tree/master/aws/ecs

Example Terraform service catalog of “root module” blueprints for provisioning reference architectures - cloudposse/terraform-root-modules

it’s for atlantis, but you can take the parts related only to ECS and test

I think the issue is that in the alb_ingress module, I’m passing in the default_target_group_arn from the ALB module into target_group_arn of the alb_ingress module…

So, I’m a little confused as to how to connect the alb_ingress module with the alb module, or if I even need to.

The ALB module has no examples and the alb_ingress module has an example of only itself being called. I think it’d be nice to have an example that shows putting these two together.

Somehow I keep getting this error:

aws_ecs_service.ignore_changes_task_definition: InvalidParameterException: The target group with targetGroupArn arn:aws:elasticloadbalancing:us-west-2::targetgroup/qa-nginx-default/b4be3dbba5e084ab does not have an associated load balancer.

Looks like the service and the ALB are being created at the same time, but really the service needs to be created after the alb.

yes

ALB needs to be created first

so either use -target, or call apply two times

(we’ll be adding examples this week when we convert all the modules to TF 0.12)

I suppose in TF 0.12 they’ll have depends_on for modules soon.

it’s not about depends_on, TF waits for the ALB to be created, BUT it does not wait for it to be in READY state

and it takes time for ALB to become ready

right

so depends_on will not help here

It turns out that starting with capital “T” solves the issue; it should be “True” not true

i’ve got a variable, "${aws_ssm_parameter.npm_token.arn}" which ends up being arn:aws:ssm:us-west-2:xxxxxxxxx:parameter/CodeBuild/npm-token-test

Is it possible to remove the -test based on if a variable is true or not?

tried this, with no luck

${var.is_test == "true" ? "${substr(aws_ssm_parameter.npm_token.arn, 0, length(aws_ssm_parameter.npm_token.arn) - 5)}" : "${aws_ssm_parameter.npm_token.arn}"}

why no luck?

you don’t need to do interpolation inside interpolation

"${var.is_test == "true" ? substr(aws_ssm_parameter.npm_token.arn, 0, length(aws_ssm_parameter.npm_token.arn) - 5) : aws_ssm_parameter.npm_token.arn}"

and what’s the provided value for var.is_test?

true

that worked, forgot I didn’t need to do the interpolation inside

thanks @Andriy Knysh (Cloud Posse)!
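For comparison, the same conditional written with Terraform 0.12 first-class expressions might look like the sketch below. It assumes `is_test` is declared as a `bool` (the thread used the 0.11-style string `"true"`); the parameter value and the local names are hypothetical.

```hcl
variable "is_test" {
  type    = bool
  default = false
}

resource "aws_ssm_parameter" "npm_token" {
  name  = "/CodeBuild/npm-token-test"
  type  = "SecureString"
  value = "placeholder" # hypothetical value
}

locals {
  npm_token_arn = aws_ssm_parameter.npm_token.arn

  # "-test" is 5 characters long, so drop the last 5 characters when is_test is set.
  npm_token_arn_effective = var.is_test ? substr(local.npm_token_arn, 0, length(local.npm_token_arn) - 5) : local.npm_token_arn
}
```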

2019-10-05

Hi, I am using a custom script to export swagger from a REST API and to create boilerplate for API Gateway import. I created a deployment resource which will trigger a redeploy once the swagger export changes. It looks something like this:

    # Create Deployment
    resource "aws_api_gateway_deployment" "restapi" {
      rest_api_id = aws_api_gateway_rest_api.restapi.id

      variables = {
        trigger = filemd5("./result.json")
      }

      lifecycle {
        create_before_destroy = true
      }
    }

For now it works as expected. I am just trying to find solution to not destroy previous deployments on new deploys. Any suggestions more than welcome.

2019-10-07

how does terraform treat ${path.module}, what value does it take? Example: file("${path.module}/hello.txt")

file("${path.module}/hello.txt") is same as file("./hello.txt") ?

if used from the same folder (project), then yes, the same

${path.module} is useful when the module is used from another external module

for example:

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

when this is used from this example https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/examples/complete/main.tf#L73 (which is in diff folder)

${path.module} still points to the module’s root, not the example’s root (even if used from the example)
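A small sketch of the difference, using a hypothetical child module `modules/greeting` that is called from a separate `examples/complete` folder. The paths and names are invented for illustration; the block shows two files, marked by comments.

```hcl
# --- modules/greeting/main.tf (hypothetical child module) ---
locals {
  # Resolved relative to this module's own directory, no matter which
  # configuration calls the module.
  greeting = file("${path.module}/hello.txt")
}

output "greeting" {
  value = local.greeting
}

# --- examples/complete/main.tf (hypothetical caller in another folder) ---
module "greeting" {
  source = "../../modules/greeting"
}

# Inside the child module, file("./hello.txt") would instead be resolved
# against the directory where terraform is run (here examples/complete),
# so the file would not be found.
```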

:zoom: Join us for “Office Hours” every Wednesday 11:30AM (PST, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is Oct 16, 2019 11:30AM.

Register for Webinar

#office-hours (our channel)

2019-10-08

Anyone able to find the Terraform Cloud CIDR?

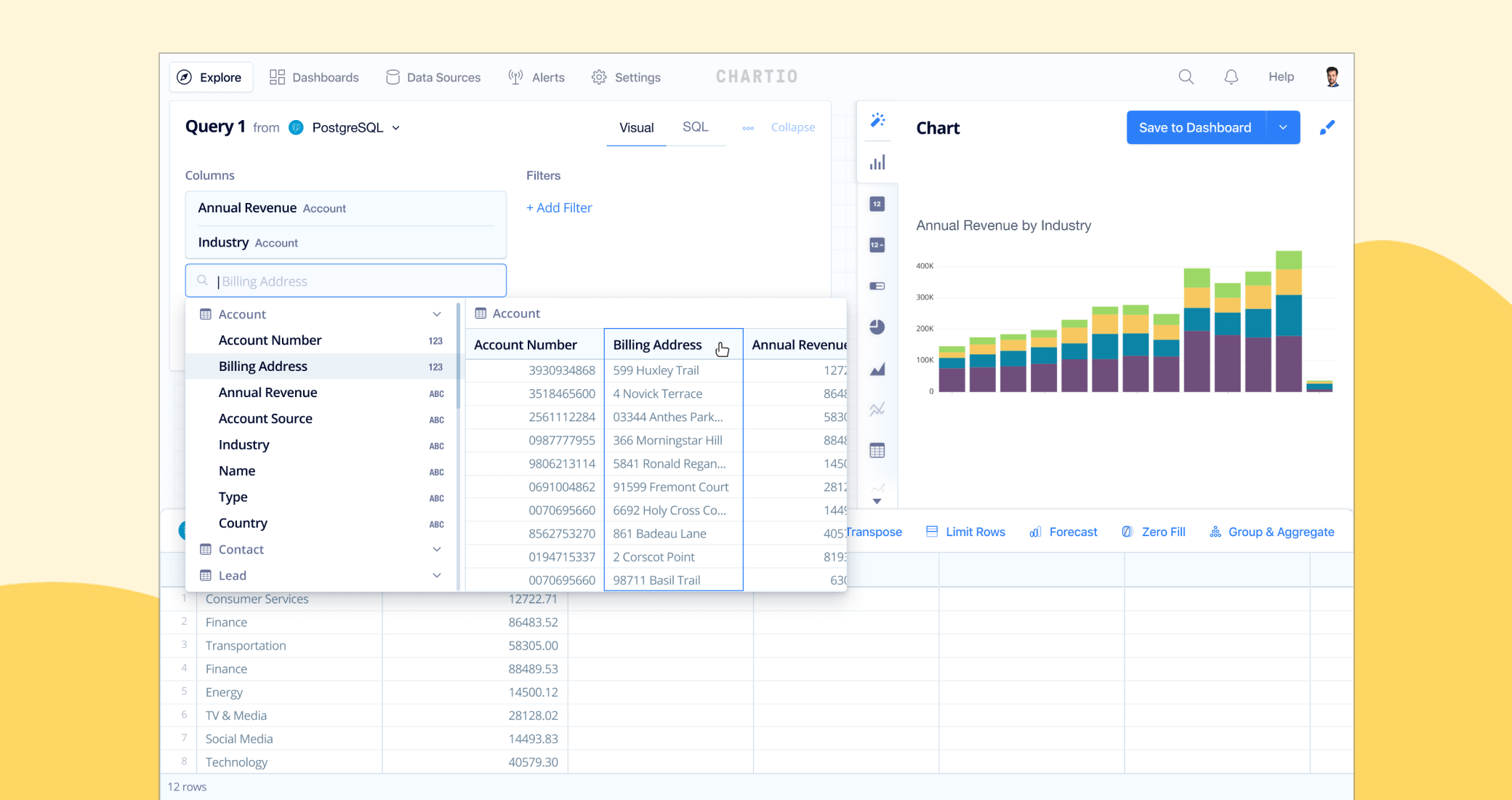

Terraform Cloud desperately needs something like this https://chartio.com/docs/data-sources/tunnel-connection/ (what chartio does)

Chartio offers two ways of connecting to your database. A direct connection is easier; you can also use our SSH Tunnel Connection.

@sarkis

what’s nice about this is you don’t even expose SSH. using autossh you establish persistent tunnels from inside your environments that connect out to chartio

Tragically enough, it isn’t that that’s the issue

our VCS is IP whitelisted

so TF Cloud can’t access our code

aha

But yes that would be a useful solution if/when I hit the roadblock of terraform private infrastructure

Though in theory it is only running against AWS Accounts

so shouldn’t be an issue

honestly, if you’re that locked down, onprem terraform cloud is what you’re going to probably need

Aye

Got a call with them tomorrow

I’ll feed back in PM about what they say

Time to get ripped off by SaaS

the trouble is though, we don’t want on prem

we want managed services

less maintenance etc. We don’t want to host it!

but you self-host VCS it sounds like

@Erik Osterman (Cloud Posse) 100% agree on the connection bit - I’m proposing something like VPC Endpoints to the team after using TFC a bit we ran into the same limitation

I can’t seem to find it with google / terraform docs

Do they provide one?

Q: How can I target a specific .tf file with terraform? I have multiple tf files in my project and I would like to destroy and apply the resources specified within one specific tf file. I’m aware I can -target the resources within the tf file but I want an easier way if that exists.

no easy way that I can think of with pure terraform.

Maybe you can put those files into separate folders?

Or read about terragrunt

Thanks @Szymon I had been reading up on Terragrunt recently

Doesn’t appear to be that way

Hey everyone, I’ve been playing around with Terraform for a couple of weeks. After making a simple POC my modules ended up similar to CloudPosse modules on a much smaller scale. I found your repos just this past weekend and am pretty impressed with them compared to other community modules. One thing I haven’t figured out yet is how to build the infrastructure in one go. It seems to me like you can build the VPC, alb, etc. all at once and then separately build the RDS due to dependency issues. I’d assume there are similar issues with much more complex architectures? I thought it was how I configured my own POC but using the CloudPosse modules there’s a similar issue. Example:

    Error: Invalid count argument

      on .terraform/modules/rds_cluster_aurora_mysql.dns_replicas/main.tf line 2, in resource "aws_route53_record" "default":
       2:   count = var.enabled ? 1 : 0

    The "count" value depends on resource attributes that cannot be determined
    until apply, so Terraform cannot predict how many instances will be created.
    To work around this, use the -target argument to first apply only the
    resources that the count depends on.

@MattyB the count error happened very often in TF 0.11, it’s much better in 0.12. We have some info about the count error here https://docs.cloudposse.com/troubleshooting/terraform-value-of-count-cannot-be-computed/
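To illustrate the kind of pattern that triggers (and avoids) this error, here is a hedged sketch. It is not taken from the module in question; the variable names and record values are hypothetical. The idea is that `count` must be computable at plan time, while other arguments may stay unknown until apply.

```hcl
variable "dns_enabled" {
  type        = bool
  default     = true
  description = "Static flag, known at plan time"
}

variable "zone_id" {
  type = string
}

variable "reader_endpoint" {
  type        = string
  description = "May come from a resource that is created in the same apply"
}

resource "aws_route53_record" "replicas" {
  # Fine: depends only on a plain input value.
  count = var.dns_enabled ? 1 : 0

  # Problematic alternative: if zone_id is fed from a resource created in the
  # same run, its value is unknown during plan and count cannot be computed:
  #   count = var.zone_id != "" ? 1 : 0

  zone_id = var.zone_id
  name    = "replicas"
  type    = "CNAME"
  ttl     = 60
  records = [var.reader_endpoint] # unknown-until-apply values are fine here
}
```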

The error itself has nothing to do with how you organize your modules and projects - it’s separate tasks/issues. Take a look at these threads https://sweetops.slack.com/archives/CB6GHNLG0/p1569434890216300 and https://sweetops.slack.com/archives/CB6GHNLG0/p1569434945216700?thread_ts=1569434890.216300&cid=CB6GHNLG0

@kj22594 take a look here, similar conversation https://sweetops.slack.com/archives/CB6GHNLG0/p1569261528160800

Get up and running quickly with one of our reference architecture using our fully automated cold-start process. - cloudposse/reference-architectures

Looking for a terraform module for an RDS instance. Configuration: SQL Server Standard 2017 (v14)

we have https://github.com/cloudposse/terraform-aws-rds, tested with MySQL and Postgres, was not tested with SQL Server (but could work, just specify the correct params - https://github.com/cloudposse/terraform-aws-rds/blob/master/variables.tf#L111)

Terraform module to provision AWS RDS instances. Contribute to cloudposse/terraform-aws-rds development by creating an account on GitHub.

hot off the press: https://registry.terraform.io/modules/cloudposse/backup/aws/0.1.0

could it be possible to pass this construct :

    lifecycle_rules = {
      id      = "test-expiration"
      enabled = true

      abort_incomplete_multipart_upload_days = 7

      expiration {
        days = 30
      }

      noncurrent_version_expiration {
        days = 30
      }
    }

to a variable with something like map(string)?

you could do it with structural types if you want to validate the input map(object({id = string, enabled = bool, etc})) or you could do it as map(any) if you don’t want to validate the input

as a general practice though i tend to try and make variables somewhat more self documenting and have things like expiration_enable abort_incomplete_multipart_upload_days, etc as separate values as it makes it easier to use the module for others

Terraform module authors and provider developers can use detailed type constraints to validate the inputs of their modules and resources.

but how do you map :

expiration {

days = 30

}

and a map(any) for example ?

since is key=value

it would be so much easier if you could attach a lifecycle policy or include a file for variable blocks

yeh ok good point - blocks are a bit of a pain in the butterfly

technically they’re a list of maps

For historical reasons, certain arguments within resource blocks can use either block or attribute syntax.

Error: Invalid value for module argument

on s3_buckets.tf line 29, in module "s3_bucket_scans":

29: lifecycle_rule = {

30: id = "test-scans-expiration",

31: enabled = true,

33: abort_incomplete_multipart_upload_days = 7,

35: expiration = {

36: days = 30

37: }

39: noncurrent_version_expiration = {

40: days = 30

41: }

42: }

The given value is not suitable for child module variable "lifecycle_rule"

defined at ../terraform-aws-s3-bucket/variables.tf:102,1-26: all map elements

must have the same type.

it did not like that

I tried this too :

lifecycle_rule {

id = var.lifecycle_rule

}

and

lifecycle_rule = <<EOT

{

#id = "test-scans-expiration"

enabled = true

abort_incomplete_multipart_upload_days = 7

expiration {

days = 30

}

noncurrent_version_expiration {

days = 30

}

}

EOT

it almost worked

@jose.amengual here’s how we provide lifecycle_rule https://github.com/cloudposse/terraform-aws-s3-website/blob/master/main.tf#L74

Terraform Module for Creating S3 backed Websites and Route53 DNS - cloudposse/terraform-aws-s3-website

but if you want to have the entire block as variable, why not declare it as object (or list of objects) with different item types including other objects

for example: https://github.com/cloudposse/terraform-aws-ec2-autoscale-group/blob/master/variables.tf#L108

Terraform module to provision Auto Scaling Group and Launch Template on AWS - cloudposse/terraform-aws-ec2-autoscale-group

    lifecycle_rule {
      id      = module.default_label.id
      enabled = var.lifecycle_rule_enabled
      prefix  = var.prefix
      tags    = module.default_label.tags

      noncurrent_version_transition {
        days          = var.noncurrent_version_transition_days
        storage_class = "GLACIER"
      }

      noncurrent_version_expiration {
        days = var.noncurrent_version_expiration_days
      }
    }

in my mind (and experience) this is a much better way to do it rather than try and pass a big object as a variable. you end up with a much easier to understand interface to your module

yes agree. The only case when you’d want to provide it as a big object var is when the entire block is optional

I prefer using a big object and leaving the usage up to the user

With dynamic blocks it seems to work very well in tf 0.12

yea, it all depends (on use case and your preferences)
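For the "big object" approach, a sketch under a few assumptions: a 2019-era AWS provider where `lifecycle_rule` is an inline block on `aws_s3_bucket`, and a hypothetical variable `lifecycle_rules` whose attribute names are invented here. It also shows why the earlier attempt failed: a `map(...)` type requires every element to have the same type, whereas an `object` type allows strings, bools and numbers side by side.

```hcl
variable "lifecycle_rules" {
  type = list(object({
    id                                     = string
    enabled                                = bool
    abort_incomplete_multipart_upload_days = number
    expiration_days                        = number
    noncurrent_version_expiration_days     = number
  }))
  default = []
}

resource "aws_s3_bucket" "scans" {
  bucket = "example-scans" # hypothetical name

  # One lifecycle_rule block is generated per element of the list; an empty
  # list generates no blocks, which makes the whole rule optional.
  dynamic "lifecycle_rule" {
    for_each = var.lifecycle_rules
    content {
      id      = lifecycle_rule.value.id
      enabled = lifecycle_rule.value.enabled

      abort_incomplete_multipart_upload_days = lifecycle_rule.value.abort_incomplete_multipart_upload_days

      expiration {
        days = lifecycle_rule.value.expiration_days
      }

      noncurrent_version_expiration {
        days = lifecycle_rule.value.noncurrent_version_expiration_days
      }
    }
  }
}

# Example value a caller could pass (mirrors the rule from the question):
#   lifecycle_rules = [{
#     id                                     = "test-expiration"
#     enabled                                = true
#     abort_incomplete_multipart_upload_days = 7
#     expiration_days                        = 30
#     noncurrent_version_expiration_days     = 30
#   }]
```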

and

since the other guy is taking too long

and

We are all on the same team

if you have time to look at the alb and kms pull requests, I would appreciate it

commented on kms

done on that, anything I can help with the terraform-aws-alb/pull/29?

ok , I like the example s3-website module, I will do something like that

does anyone know how to merge a list of maps into a single map with tf 0.12? i.e, i want:

    [
      {
        "foo": {
          "bar": 1,
          "bob": 2
        }
      },
      {
        "baz": {
          "lur": 3
        }
      }
    ]

to become:

    {
      "foo": {
        "bar": 1,
        "bob": 2
      },
      "baz": {
        "lur": 3
      }
    }

the former is the output of a for loop that groups items with the ellipsis operator

2019-10-09

output "merged_tags" {

value = merge(local.list_of_maps...)

}
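A self-contained version of that answer, using the data from the question. The local name `list_of_maps` is taken from the snippet above; the rest is a sketch.

```hcl
locals {
  # The list of single-key maps from the question.
  list_of_maps = [
    { foo = { bar = 1, bob = 2 } },
    { baz = { lur = 3 } },
  ]
}

output "merged_tags" {
  # The trailing `...` expands the list so that each element is passed to
  # merge() as a separate argument, yielding one map with keys foo and baz.
  value = merge(local.list_of_maps...)
}
```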

Oh, decomposition is supported like js

Hi @all Not sure if it is the right place to ask, but I take my chance !

Say I have 3 terraform repositories, A, B and …tadam : C! Both B & C rely on A states (mutualization of some components)

        /---- B
    A --
        \---- C

(Please note my graphical skills )

All repositories are in terraform 0.11, but I would like to update to 0.12. What would be the best way to achieve that (and avoid conflicts) ? Can I simply update B then A and C ? Or should I take care about a particular order ?

If I recall correctly, you’ll want to run terraform apply with the latest version of 0.11 which will ensure state compatibility with 0.12

then you ought to be able to upgrade the projects in any order after that.

perhaps someone in #terraform-0_12 has better suggestions

If you haven’t reviewed this, make sure you do that first.

Upgrading to Terraform v0.12
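One detail that matters when B and C read A's state: in 0.12 the `terraform_remote_state` data source exposes outputs under an `outputs` attribute, and `config` is an argument rather than a block. A sketch, assuming an S3 backend and an output named `vpc_id` in A (both hypothetical):

```hcl
data "terraform_remote_state" "a" {
  backend = "s3"

  # In 0.12 this is an argument (`config = { ... }`), not a nested block.
  config = {
    bucket = "example-terraform-state" # hypothetical
    key    = "a/terraform.tfstate"     # hypothetical
    region = "eu-west-1"               # hypothetical
  }
}

locals {
  # 0.11 style: "${data.terraform_remote_state.a.vpc_id}"
  # 0.12 style: outputs live under the `outputs` attribute.
  vpc_id = data.terraform_remote_state.a.outputs.vpc_id
}
```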

interesting patterns https://discuss.hashicorp.com/t/pattern-to-handle-optional-dynamic-blocks/2384

What’s a good way to handle optional dynamic blocks, depending on existence of map keys? Example: Producing aws_route53_record resources, where they can have either a “records” list, or an “alias” block, but not both. I’m using Terraform 0.12.6. I’m supplying the entire Route53 zone YAML as a variable, through yamldecode(): zone_id: Z987654321 records: - name: route53test-plain.example.com type: A ttl: 60 records: - 127.0.0.1 - 127.0.0.2 - name: route53test-alias.example.com type…

When did HashiCorp launch https://discuss.hashicorp.com/?

HashiCorp

It’s long overdue!

yea, they have very nice posts in there

2019-10-10

i think it’s pretty recent, June

learn.hashicorp.com is getting really good too - but most of the material is too introductory for now

Trying to reference an instance.id from different directory- erroring out

./tf

./tf/dir1/ -> (trying to use aws_instance.name.id in ->./tf/modules/cloud/myfile.tf)

./tf/envs/sandbox/ -> (running plan here)

./tf/modules/cloud -> myfile.tf

Tried adding "${aws_instance.name.id}" in outputs.tf in ./tf/dir1/ and using that value in ./tf/modules/cloud/myfile.tf. Throws a new error like

-unknown variable accessed: var.profile in:${var.profile}

How do i go about this ?

the module /tf/modules/cloud should have variables.tf and outputs.tf

@Andriy Knysh (Cloud Posse) yes those two files do exist in /tf/modules/cloud

see for example https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/examples/complete/main.tf

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

the main file that uses the module provides vars to the module

and uses its outputs in its own outputs

bit confusing, but going to look into it. Thanks for sharing those @Andriy Knysh (Cloud Posse) appreciate your help

the variables go from top level modules to low level modules

the outputs go from low level modules to top level modules
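The flow described above can be sketched with the directory names from the question. This assumes `tf/dir1` is itself called as a module from `tf/envs/sandbox`, which the thread does not state; the block shows several files, marked by comments, and the AMI value is hypothetical.

```hcl
# --- tf/dir1/main.tf and outputs.tf: the module that owns the instance ---
resource "aws_instance" "name" {
  ami           = "ami-00000000" # hypothetical
  instance_type = "t3.micro"
}

output "instance_id" {
  value = aws_instance.name.id
}

# --- tf/modules/cloud/variables.tf: inputs the module expects ---
# A module can only read var.X if it declares X itself, which is what the
# "unknown variable accessed: var.profile" error points at.
variable "profile" {
  type = string
}

variable "instance_id" {
  type = string
}

# --- tf/envs/sandbox/main.tf: the root module wires the two together ---
# (the root module needs its own `variable "profile"` declaration as well)
module "dir1" {
  source = "../../dir1"
}

module "cloud" {
  source      = "../../modules/cloud"
  profile     = var.profile
  instance_id = module.dir1.instance_id
}
```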

@Andriy Knysh (Cloud Posse) @Erik Osterman (Cloud Posse) https://github.com/cloudposse/terraform-aws-ecs-alb-service-task/pull/34

Breaking Change what As of AWS provider 2.22, multiple load_balancer configs are supported; see https://www.terraform.io/docs/providers/aws/r/ecs_service.html. why We have a use …

thanks @johncblandii we’ll review

Guys and Gals, is there a TF resource to control AWS SSO?

I can’t seem to find it

i don’t think there is even a public API for AWS SSO, is there?

Hi guys, is anyone familiar with IAM roles and instance profiles? I have a case like this: I would like to create an instance profile with a suitable policy to allow access to an ECR repo (including downloading images from ECR). Then I attach that instance profile to a launch configuration to spin up an instance. The reason I mention a policy for ECR is that I would like to set up aws-credential-helper on the instance to use with Docker (cred-helper) when it launches, so that when the instance wants to pull an image from ECR, it won’t need AWS credentials on the host itself. I would like to put all of that in Terraform as well. Any help would be much appreciated.

@Phuc see this example on how to create instance profile https://github.com/cloudposse/terraform-aws-eks-workers/blob/master/main.tf#L69

Terraform module to provision an AWS AutoScaling Group, IAM Role, and Security Group for EKS Workers - cloudposse/terraform-aws-eks-workers

Hi aknysh

the main point I'm focused on is whether an instance launched by a launch configuration with an instance profile contains AWS credentials to access ECR yet

it's kind of complex in my case. I've tried a lot, but the only way that has worked for me so far is when ~/.aws/credentials exists on the host.

not sure what the issue is, but you create a role, assign the required permissions to the role (ECR etc.), add an assume_role document for the EC2 service to assume the role, and finally create an instance profile from the role

then you use the instance profile in launch configuration or launch template
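
A minimal sketch of the steps described above (role, ECR permissions, EC2 assume-role document, instance profile, launch configuration). The resource names, the ami_id variable, the instance type, and the choice of the AWS-managed AmazonEC2ContainerRegistryReadOnly policy are assumptions for illustration and are not taken from the thread:

```hcl
variable "ami_id" {
  type        = string
  description = "Hypothetical AMI with Docker and the ECR credential helper installed"
}

# Trust policy: lets the EC2 service assume the role
data "aws_iam_policy_document" "assume_role" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "ecr_pull" {
  name               = "ecr-pull"
  assume_role_policy = data.aws_iam_policy_document.assume_role.json
}

# Read-only ECR access (assumed to be enough for docker pull)
resource "aws_iam_role_policy_attachment" "ecr_read_only" {
  role       = aws_iam_role.ecr_pull.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
}

resource "aws_iam_instance_profile" "ecr_pull" {
  name = "ecr-pull"
  role = aws_iam_role.ecr_pull.name
}

resource "aws_launch_configuration" "app" {
  name_prefix          = "app-"
  image_id             = var.ami_id
  instance_type        = "t3.micro"
  iam_instance_profile = aws_iam_instance_profile.ecr_pull.name
}
```

With this in place the instance gets temporary credentials from the instance metadata service, so no ~/.aws/credentials file should be needed on the host.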

Do you know how aws-cred-helper and docker login work together?

Terraform module to provision Auto Scaling Group and Launch Template on AWS - cloudposse/terraform-aws-ec2-autoscale-group

https://github.com/awslabs/amazon-ecr-credential-helper. I want to achieve the same result but with IAM only

Automatically gets credentials for Amazon ECR on docker push/docker pull - awslabs/amazon-ecr-credential-helper

https://github.com/awslabs/amazon-ecr-credential-helper#prerequisites says it works with all standard credential locations, including IAM roles (which will be used in case of EC2 instance profile)

so once you have an instance profile on EC2 where the amazon-ecr-credential-helper is running, the instance profile (role) will be used automatically

yeah, I was thinking that too

try to figure if there is any missing policy for the iam role

2019-10-11

I'm trying to apply a certificate generated by ACM with DNS validation, using the cloudposse module terraform-aws-acm-request-certificate. The certificate is applied to the load balancer in an Elastic Beanstalk application. The cert gets created and applied to the load balancer before it's validated, the EB config fails, and then the app is left in a failed state, preventing further configuration. To fix this I added an aws_acm_certificate_validation resource to the module and changed the arn output to aws_acm_certificate_validation.cert.certificate_arn. This seems to have fixed my problem.

That looks right to me :) The other solution would have been to force a dependency between the validation resource and the ALB
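
For anyone following along, a rough sketch of the fix described above, assuming a single domain and a Route 53 zone. The variable names and the record resource are illustrative and are not the module's actual code. The [0] indexing assumes the AWS provider 2.x behaviour, where domain_validation_options is a list:

```hcl
variable "domain_name" {
  type = string
}

variable "zone_id" {
  type = string
}

resource "aws_acm_certificate" "cert" {
  domain_name       = var.domain_name
  validation_method = "DNS"
}

# DNS record that proves ownership of the domain to ACM
resource "aws_route53_record" "validation" {
  zone_id = var.zone_id
  name    = aws_acm_certificate.cert.domain_validation_options[0].resource_record_name
  type    = aws_acm_certificate.cert.domain_validation_options[0].resource_record_type
  records = [aws_acm_certificate.cert.domain_validation_options[0].resource_record_value]
  ttl     = 60
}

# Blocks until ACM reports the certificate as issued
resource "aws_acm_certificate_validation" "cert" {
  certificate_arn         = aws_acm_certificate.cert.arn
  validation_record_fqdns = [aws_route53_record.validation.fqdn]
}

# Consumers that read this output implicitly wait for validation to finish
output "arn" {
  value = aws_acm_certificate_validation.cert.certificate_arn
}
```

Because the output references the validation resource rather than the certificate itself, anything that consumes the ARN (such as the load balancer config) is ordered after validation completes.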

Using this

source = "cloudposse/vpc-peering-multi-account/aws"

version = "0.5.0"

And getting this error.

Error: Missing resource instance key

on .terraform/modules/vpc_peering_cross_account_devdata/cloudposse-terraform-aws-vpc-peering-multi-account-3cf3f60/accepter.tf line 96, in locals:

96: accepter_aws_route_table_ids = "${distinct(sort(data.aws_route_tables.accepter.ids))}"

Because data.aws_route_tables.accepter has "count" set, its attributes must be

accessed on specific instances.

For example, to correlate with indices of a referring resource, use:

data.aws_route_tables.accepter[count.index]

any help is appreciated.

the module has not been converted to TF 0.12 yet, but you are using 0.12, which has a stricter type system

FWIW. I actually just commented this out and proceeded and seem to have my VPC peering working …

# Lookup accepter route tables

data "aws_route_tables" "accepter" {

# count = "${local.count}"

So commenting out the count

got me further down the road with the module and Terraform 0.12.

Thanks.

2019-10-12

Hi everyone, I'm helping a friend with Azure and it's quite different from AWS. I'd much appreciate it if someone could tell me what the scope of an azurerm_resource_group normally is, or should be, best-practice-wise.

It's unclear to me whether I should fit all resources of one project in there, or group them by type (DNS-related, network, etc.). Thanks.

We use dedicated resource groups for different use cases.

Would you like to give me an example ?

or multiple, an example per different use-case ..

a) use a HPC resource group for HPC workload incl. network, storage, vms, limits, admin permissions b) SAP resource group for SAP workload incl. network, storage, blob storage, vms, databases. c) NLP processing for the implementation of NLP services d) HUB resource group which includes the HUB VPN endpoint and shared services like domain controllers.

gotcha, thanks

2019-10-13

Hi all, I have a question about the Terraform Registry: the NEXT button does not work on this page: https://registry.terraform.io/browse/modules?offset=109&provider=aws and why does the registry only show at most 109 modules?

Even if I use curl "https://registry.terraform.io/v1/modules?limit=100&offset=200&provider=aws" the results are the same as before.

perhaps that’s just the end of registered aws modules?

oh hmm… the registry home page says there are 1198 aws modules…

Hi @loren, it seems like all providers have the same issue.

yeah, maybe look for or open an issue on the terraform github

possibly related: https://github.com/hashicorp/terraform/issues/22380

I was implementing the pagination logic client side in a PowerShell module when I noticed there is a pagination error. The returned offset in the meta is not updated over 115. As you can see, the r…

Thanks

2019-10-14

:zoom: Join us for “Office Hours” every Wednesday 11:30AM (PST, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is Oct 23, 2019 11:30AM.

Register for Webinar

#office-hours (our channel)

Hello all, quick question: has anyone set up an option group for a MySQL DB in RDS with Terraform?

Terraform module to provision AWS RDS instances. Contribute to cloudposse/terraform-aws-rds development by creating an account on GitHub.

Hey @Andriy Knysh (Cloud Posse), saw your comment on https://github.com/terraform-providers/terraform-provider-aws/issues/3963 for the cp EBS module. I'm using it and I see it's not trying to apply the Name tag, but I'm still running into that Service:AmazonCloudFormation, Message:No updates are to be performed. + Environment tag update failed. error when I do an apply. It looks like the Namespace tag isn't applying each time. Have you seen that before?

we deleted the Namespace tag from elasticbeanstalk-environment (latest release)

it was a problem as well, similar to the Name tag

in fact, AWS checks if tags contain anything with the string Name in it

probably using regex

(and now they/we have two problems https://blog.codinghorror.com/regular-expressions-now-you-have-two-problems/)

a blog by Jeff Atwood on programming and human factors

Ahaaa 4 days ago — I’m just the tiniest bit behind the curve. Awesome — Thank you! That module is great stuff. Allowing me to bootstrap this project quite easily!

@Andriy Knysh (Cloud Posse) Similar to the EBS environment module’s issue: The application resource module has a similar problem, it just doesn’t cause an error. It causes a change to that resource to be made on each apply. Put up a PR to fix: https://github.com/cloudposse/terraform-aws-elastic-beanstalk-application/pull/17

Including the "Namespace" tag to the beanstalk-application resource required a change for each apply as the tag is never applied. Removing that tag causes the beanstalk-application to avo…

thanks @Matt Gowie, merged. Wanted to do it, but since it does not cause an error and updating an EBS app takes a few secs, never got to it

Glad to help out! Would like to do more where I can since these EBS modules were such a huge help.

2019-10-15

has anyone else had that same dependency issue with https://github.com/cloudposse/terraform-aws-acm-request-certificate

Terraform module to request an ACM certificate for a domain name and create a CNAME record in the DNS zone to complete certificate validation - cloudposse/terraform-aws-acm-request-certificate

https://github.com/Flaconi/terraform-aws-alb-redirect - For everyone who deals with quite a lot of 302/301 redirects like apex -> www and want to solve it outside of nginx or s3, here you go  Next release will have optional vpc creation.

HTTP 301 and 302 redirects made simple utilising an ALB and listener rules. - Flaconi/terraform-aws-alb-redirect

quick question about https://github.com/cloudposse/terraform-aws-rds/

is it still viable to contribute to the 0.11 branch?

having an issue where i’d like to not have the module create its own security group and would be good to have that optional if possible

branch 0.11/master is for TF 0.11

Terraform module to provision AWS RDS instances. Contribute to cloudposse/terraform-aws-rds development by creating an account on GitHub.

fork from it and open a PR

we’ll merge it into 0.11/master branch

@Andriy Knysh (Cloud Posse) cool sounds good

Hey folks, what is the community approach to running SQL scripts against a new RDS cluster? remote-exec seems not great since it requires that the SG opens up to the calling machine.

I see https://github.com/fvinas/tf_rds_bootstrap — But that seems old. Wondering if my google-fu is not finding the right tool for this job.

A terraform module to provide a simple AWS RDS database bootstrap (typically running SQL code to create users, DB schema, …) - fvinas/tf_rds_bootstrap

@Matt Gowie There is a Mysql and Postgres provider which can create roles inside the RDS, something for you ?

Yeah, possibly. Mind sending me a link?

A provider for MySQL Server.

Thanks Maarten — Will check those out.

yet another PR https://github.com/cloudposse/terraform-aws-rds-cluster/pull/57 Aurora RDS backtrack support

Adding RDS Backtrack support

thanks @jose.amengual, commented on the PR

added the changes but I'm having an issue with make readme

curl --retry 3 --retry-delay 5 --fail -sSL -o /Users/jamengual/github/terraform-aws-rds-cluster/build-harness/vendor/terraform-docs https://github.com/segmentio/terraform-docs/releases/download/v0.4.5/terraform-docs-v0.4.5-darwin-amd64 && chmod +x /Users/jamengual/github/terraform-aws-rds-cluster/build-harness/vendor/terraform-docs

make: gomplate: No such file or directory

make: *** [readme/build] Error 1

I upgraded my mac to Catalina, it could be related to that

did you run

make init

make readme/deps

make readme

yes

well, I installed it by hand with brew install gomplate

and it worked

2019-10-16

Hello, I have written this aws_autoscaling_policy:

resource "aws_autoscaling_policy" "web" {

name = "banking-web"

policy_type = "TargetTrackingScaling"

autoscaling_group_name = "${aws_autoscaling_group.web.name}"

target_tracking_configuration {

customized_metric_specification {

metric_dimension {

name = "LoadBalancer"

value = "${aws_lb.banking.arn_suffix}"

}

metric_name = "TargetResponseTime"

namespace = "AWS/ApplicationELB"

statistic = "Average"

}

target_value = 0.400

}

}

but I'm looking to modify this value with this type of scaling policy:

Hi everybody,

I have the following list variable in Terraform 0.11.x in terraform.tfvars defined:

mymaps = [

{

name = "john"

path = "/some/dir"

},

{

name = "pete"

path = "/some/other/dir"

}

]

Now in my module's main.tf I want to extend this variable and store it as a local.

The logic is something like this:

if mymaps[count.index]["name"] == "john"

mymaps[count.index]["newkey"] = "somevalue"

endif

In other words, if any element’s name key inside the list has a value of john, add another key/val pair to the john dict.

The resulting local should look like this

mymaps = [

{

name = "john"

path = "/some/dir"

newkey = "somevalue"

},

{

name = "pete"

path = "/some/other/dir"

}

]

Is this somehow possible with null_resource (as they have the count feature) and locals?
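
One possible approach, as a sketch only: it relies on for expressions, which arrived in Terraform 0.12, so it does not apply to 0.11.x as asked. The local names john_extras and mymaps_extended are made up for illustration:

```hcl
variable "mymaps" {
  type = list(map(string))
}

locals {
  # Hypothetical extra key/value pairs to add to the matching element
  john_extras = {
    newkey = "somevalue"
  }

  mymaps_extended = [
    for m in var.mymaps : merge(
      m,
      # The filtered for expression yields the extras only when the name
      # matches and an empty object otherwise, so merge() leaves the other
      # elements unchanged.
      { for k, v in local.john_extras : k => v if m["name"] == "john" }
    )
  ]
}

output "mymaps_extended" {
  value = local.mymaps_extended
}
```

The filter inside the inner for expression is used instead of a conditional so that both cases produce a value merge() accepts without any type juggling.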

@here could people thumbs up this on GitHub so we can get CloudWatch anomaly detection metrics in terraform?

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or "me to…

Community Note Please vote on this pull request by adding a reaction to the original pull request comment to help the community and maintainers prioritize this request Please do not leave "…

@here public #office-hours starting now! join us to talk shop

https://zoom.us/j/508587304

I have a general terraform layout question. When I first started with terraform and got an 'understanding' of modules, I created a module called 'bootstrap'. This module includes the creation of acm certs, route53 zones, iam users/roles, s3 buckets, firehose, cloudfront oai. I now realize that modules should probably be smaller than this. What I want to know is: for people who bootstrap new aws accounts using TF, do you have a bunch of smaller modules (acm, route53, firehose, etc.) and then, for one-off things like some iam roles/users, just include those resources along with the modules in a terraform file?

for example:

module {}

module {}

resource "iam_user" {}

@Brij S take a look at these threads on similar topics, might be of some help to you to understand how we do that

https://sweetops.slack.com/archives/CB6GHNLG0/p1569434890216300

https://sweetops.slack.com/archives/CB6GHNLG0/p1569261528160800

@kj22594 take a look here, similar conversation https://sweetops.slack.com/archives/CB6GHNLG0/p1569261528160800

Hi guys,

Do any of you have experience maintaining SaaS environments? What I mean is separate dev, test, and prod environments for every customer.

In my case, those environments are very similar, at least the core part, which includes, vnet, web apps in Azure, VM, storage… All those components are currently written as modules, but what I’m thinking about is to create one more module on top of it, called e.g. myplatform-core. The reason why I want to do that is instead of copying and pasting puzzles of modules between environments, I could simply create env just by creating/importing my myplatform-core module and passing some vars like name, location, some scaling properties.

Any thoughts about it, is it good or bad idea in your opinion?

I appreciate your input.
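
To make the idea concrete, a sketch of what such a wrapper could look like. Every path, module name, variable, and output below is hypothetical; the thread does not show the actual modules:

```hcl
# --- environments/customer-a-prod/main.tf (hypothetical path) ---
# Each environment only instantiates the wrapper and passes a few values.
module "platform" {
  source = "../../modules/myplatform-core"

  name                   = "customer-a-prod"
  location               = "westeurope"
  web_app_instance_count = 2
}

# --- modules/myplatform-core/main.tf (hypothetical path) ---
# The wrapper wires the existing building-block modules together once.
variable "name" {
  type = string
}

variable "location" {
  type = string
}

variable "web_app_instance_count" {
  type    = number
  default = 1
}

module "network" {
  source   = "../vnet" # assumed existing module
  name     = var.name
  location = var.location
}

module "web_app" {
  source         = "../web-app" # assumed existing module
  name           = var.name
  location       = var.location
  instance_count = var.web_app_instance_count
  subnet_id      = module.network.subnet_id # assumed output of the vnet module
}
```

The trade-off is the usual one: less copy and paste per customer, but every difference between customers has to be expressed as a variable on the wrapper.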

is there any reason why this module is not upgraded to 0.12 ? https://github.com/cloudposse/terraform-aws-ecs-alb-service-task can I have a stab at it ?

Terraform module which implements an ECS service which exposes a web service via ALB. - cloudposse/terraform-aws-ecs-alb-service-task

This module was a very specific use case, I don’t think anyone would have an issue with you taking a stab at it, would need to 0.12 all the things eventually!

we use it a lot. It is pretty opinionated, so I would like to add a few more options to make it a bit more flexible

we have 100+ modules to upgrade to TF 0.12

this one is in the line, will be updated in the next week

is in the line to be updated next week ? really ?

well, if that is the case then it will save me some work

but for real @Andriy Knysh (Cloud Posse) is scheduled for next week ?

yes

is this xmas?

haha

@jose.amengual @Andriy Knysh (Cloud Posse) has been tearing it up

he just converted all the beanstalk modules and dependencies

then converted jenkins

added terratest to all of it

jenkins by the way is a real beast!

our main holdup is that we're only releasing terraform 0.12 modules that have automated terratests

this is the only way we can continue to keep the scale we have of modules

I totally agree and yes I saw that jenkins module

is huge

hey everyone, I have maybe a simple question, but whenever I am running a terraform plan with https://github.com/cloudposse/terraform-aws-elastic-beanstalk-environment?ref=master it is always updating, even after it is applied. Can someone point me in the right direction?

- setting {

- name = "EnvironmentType" -> null

- namespace = "aws:elasticbeanstalk:environment" -> null

- value = "LoadBalanced" -> null

}

+ setting {

+ name = "EnvironmentType"

+ namespace = "aws:elasticbeanstalk:environment"

+ value = "LoadBalanced"

}

- setting {

- name = "HealthCheckPath" -> null

- namespace = "aws:elasticbeanstalk:environment:process:default" -> null

- value = "/healthz" -> null

}

+ setting {

+ name = "HealthCheckPath"

+ namespace = "aws:elasticbeanstalk:environment:process:default"

+ value = "/healthz"

}

- setting {

- name = "HealthStreamingEnabled" -> null

- namespace = "aws:elasticbeanstalk:cloudwatch:logs:health" -> null

- value = "false" -> null

}

+ setting {

+ name = "HealthStreamingEnabled"

+ namespace = "aws:elasticbeanstalk:cloudwatch:logs:health"

+ value = "false"

}

Terraform module to provision an AWS Elastic Beanstalk Environment - cloudposse/terraform-aws-elastic-beanstalk-environment

unfortunately, there is no right direction here. There are many bugs in the aws provider related to how EB environment handles settings

the new version tries to recreate all 100% of the settings regardless of how you arrange them

nice

so everyone has to live with this

0.11 version at least tried to recreate only those settings not related to the type of environment you built

gotcha

they have to fix those bugs in the provider

there are many others

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or "me to…

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or "me to…

that was going on for months and still same issues

ahhh

thank you for the detailed explanation!

that’s why we have to do this in our tests for Jenkins which runs on EB https://github.com/cloudposse/terraform-aws-jenkins/blob/master/test/src/examples_complete_test.go#L29

Terraform module to build Docker image with Jenkins, save it to an ECR repo, and deploy to Elastic Beanstalk running Docker stack - cloudposse/terraform-aws-jenkins

(the issue with dynamic blocks in settings)

at least solved by applying twice

but that does not solve the issue with TF trying to recreate 100% of settings regardless if they are static or defined in dynamic blocks

at least I did not find a solution

ping me if you find anything

hmmm interesting

thanks for all the links!

and will do

Hey everyone, thanks so much for your work.

New to EKS (moving from IBM) but am curious about subnetting with the CP eks-cluster module (and dependencies - vpc, subnets, workers, etc) and understanding how it works.

If we assign maximum size cidr_block of 10.0.0.0/16 to vpc then we will get:

- 3x 'public' (private) subnets 10.0.[0|32|64].0/19, one per AZ? These contain any public ALB/NLB's internal IP? What if we provision a private LoadBalancer K8s Service? I will test

- 3x 'private' subnets 10.0.[96|128|160].0/19, one per AZ? These will be used by the k8s worker node ASGs.

- Cluster seems to use 172.x.y.z/? (RFC1918 somewhere) for the K8s Pod IPs and for the K8s Service IPs. This makes me think we are doing IPIP in the cluster.

- The remaining 10.0.192.0/18 is free for us to use, say with separate non-K8s ASGs or perhaps SaaS VPC endpoints (RDS/MQ/etc.) that we want in the same VPC?

Is this all correct? Whilst #1 above seems like a large range for a few services, it's not like IP addresses are scarce. We haven't added Calico yet.

we have this working/tested example of EKS cluster with workers https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/examples/complete/main.tf

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

as you can see, it uses the VPC module and subnets module to create VPC and subnets

there are many ways of creating subnets in a VPC

https://github.com/cloudposse/terraform-aws-dynamic-subnets is one opinionated approach which creates one public and one private subnet per AZ that you provide (we use that almost everywhere with kops/k8s)

Terraform module for public and private subnets provisioning in existing VPC - cloudposse/terraform-aws-dynamic-subnets

in the example we use 2 AZs https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/examples/complete/fixtures.us-east-2.tfvars#L3

the test shows what CIDRs we are getting for the public and private subnets https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/test/src/examples_complete_test.go#L42

you can divide your VPC into as many subnets as you want/need

it’s not related to the EKS module, which just accepts subnet IDs regardless of how you create them

we have a few modules for subnets, all of them divide a VPC differently https://github.com/cloudposse?utf8=%E2%9C%93&q=subnets&type=&language=

Yea, definitely no one-size-fits all strategy for subnets

It’s one of the more opinionated areas, especially in larger organizations

Pick what makes sense for your needs but have a look at our different subnet module that implement different strategies to slice and dice subnets

oh wow, awesome, I'll dive through the different subnets and tests above. Thanks so much for the response. A+++

2019-10-17

How do you set the var-file for Terraform on the CLI?

For instance

export TF_VAR_name=oscar

export TF_VAR_FILE=environments/oscar/terraform.tfvars == -var-file=environments/oscar/terraform.tfvars

Input variables are parameters for Terraform modules. This page covers configuration syntax for variables.

Thanks but.. not quite

I wasn’t quite clear enough

I meant as an ENV variable

like in the examples above

you can't. Terraform would see TF_VAR_FILE as a variable called FILE

Yup. Just an example of what it might be called in case anyone has come across it.

@oscar maybe use a yaml config instead?

locals {

env = yamldecode(file("${terraform.workspace}.yaml"))

}

or instead of terraform.workspace, use any TF_VAR_…

@oscar since you’re using geodesic + tfenv, you can do TF_CLI_PLAN_VAR_FILE=foobar.tfvars

or if you want to do stock terraform, you can do

TF_CLI_ARGS_plan=-var-file=foobar.tfvars

Terraform uses environment variables to configure various aspects of its behavior.

Can’t seem to find documentation on doing this

Hi, how can I read a list in Terraform from a file? https://github.com/cloudposse/terraform-aws-ecs-container-definition/blob/master/examples/string_env_vars/main.tf I'd like to pass ENV variables from a file, e.g. env.tpl:

[

{

name = "string_var"

value = "123"

},

{

name = "another_string_var"

value = "true"

},

{

name = "yet_another_string_var"

value = "false"

},

]

data "template_file" "policy" {

template = "${file("ci.env.json")}"

}

environment = data.template_file.policy.rendered

Terraform module to generate well-formed JSON documents (container definitions) that are passed to the aws_ecs_task_definition Terraform resource - cloudposse/terraform-aws-ecs-container-definition
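
A possible way to do this in Terraform 0.12, sketched under the assumption that the file is valid JSON (a list of name/value objects) rather than HCL. The rendered attribute of template_file is always a string, so it cannot be used directly where a list is expected; jsondecode turns the file content into a real list:

```hcl
locals {
  # ci.env.json is assumed to look like:
  # [{"name": "string_var", "value": "123"}, {"name": "another_string_var", "value": "true"}]
  container_environment = jsondecode(file("${path.module}/ci.env.json"))
}

# The decoded list can then be passed wherever a list of
# {name, value} objects is expected, for example:
#   environment = local.container_environment
output "container_environment" {
  value = local.container_environment
}
```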

Hi folks, if you have a service exposing an API documented using OpenAPI and are thinking of creating a terraform provider for it, you might find the following plugin useful:

Link to the repo: https://github.com/dikhan/terraform-provider-openapi

The OpenAPI Terraform Provider dynamically configures itself at runtime with the resources exposed by the service provider (defined in an OpenAPI document, formerly known as swagger file).

OpenAPI Terraform Provider that configures itself at runtime with the resources exposed by the service provider (defined in a swagger file) - dikhan/terraform-provider-openapi

2019-10-18

Hey folks, I keep struggling with the idea of bootstrapping an RDS database with a user, schema, etc. after it is initially created. I can use local-exec to invoke psql with sql scripts, but that kind of stinks, and there seems to be heavy lifting involved to use something like the OS Ansible Provider (https://github.com/radekg/terraform-provisioner-ansible). What I'm trying to get at: is there a better way? I'd like a way to easily bootstrap on RDS instance or cluster creation and run migrations appropriately.

Marrying Ansible with Terraform 0.12.x. Contribute to radekg/terraform-provisioner-ansible development by creating an account on GitHub.

Have you looked into the postgresql provider? https://www.terraform.io/docs/providers/postgresql/index.html. This will work better than local-exec.

A provider for PostgreSQL Server.

@sarkis It doesn’t seem to allow me to run SQL files against the remote DB or am I missing that functionality?

i wouldn’t do this with terraform, the provider allows you to setup user, database and schema

any sql past that like init or migration should not be in TF imo
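
A rough sketch of the part the postgresql provider can cover (a role and a database). The variable names and the role/database names are placeholders; superuser = false is set because the RDS master user is not a true superuser:

```hcl
variable "db_host" {
  type = string
}

variable "db_master_username" {
  type = string
}

variable "db_master_password" {
  type = string
}

variable "app_password" {
  type = string
}

provider "postgresql" {
  host      = var.db_host
  port      = 5432
  username  = var.db_master_username
  password  = var.db_master_password
  sslmode   = "require"
  superuser = false
}

# Application login role
resource "postgresql_role" "app" {
  name     = "app"
  login    = true
  password = var.app_password
}

# Application database owned by that role
resource "postgresql_database" "app" {
  name  = "app"
  owner = postgresql_role.app.name
}
```

Note that the machine running terraform still needs network access to the database for this provider to connect, so the security group concern raised for remote-exec applies here too.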

Yeah, I’m going the ansible route.

Thanks for weighing in @sarkis — just confirming I shouldn’t go that route is great.

i think that would work better in that ansible may help in making that stuff idempotent, there could be potentially bad things happening if you keep applying sql every time tf applies

via local-exec - i’m not certain how you can easily achieve this .. i haven’t looked at the ansible provisioner, but i assume that is possible… basically you want to only run the sql once right?

Yeah, I need a way to query a database_version column and then run migration files off of that. It's easy to do that in Ansible. Just being new to TF I didn't know where to draw the line. Everyone says TF is not for the actual provisioning of resources, so this makes sense.

@Matt Gowie how do you plan to deploy the actual software ? Is it docker orchestrated ?

2019-10-19

I must be blind - can’t find anywhere in the terraform docs how to add an IP to a listener target group with target_type set to ip for a network load balancer
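
For what it's worth, a sketch of how this is usually done: the aws_lb_target_group_attachment resource takes the IP address as target_id when the target group's target_type is ip. The names, port, and the example IP below are placeholders:

```hcl
variable "vpc_id" {
  type = string
}

resource "aws_lb_target_group" "ip" {
  name        = "nlb-ip-targets"
  port        = 443
  protocol    = "TCP"
  target_type = "ip"
  vpc_id      = var.vpc_id
}

# With target_type = "ip", target_id is an IP address instead of an instance ID
resource "aws_lb_target_group_attachment" "ip" {
  target_group_arn = aws_lb_target_group.ip.arn
  target_id        = "10.0.1.25" # placeholder address inside the VPC
  port             = 443
}
```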

2019-10-20

hi, I get “An argument named “tags” is not expected here” when trying to use tags in “aws_eks_cluster”

according to the docs it is supported, not sure how to debug/further investigate what’s going on… any tip?

I’m using the latest TF version, and the syntax is simply

tags = {

Name = "k8s_test_masters"

}

@Andrea Do you use aws provider version >=2.31 ?

Hi @github140 I was on 2.29 actually…

I removed it and run “terraform init”, and I got

provider.aws: version = "~> 2.30"

but no 2.31, and I still get the “tags” error…

hold on a sec, I’ve updated to 2.33 and the tags are now showing up in the TF plan command

thanks so much!!

can I just ask how you knew that at least version 2.31 was needed please?

this might help me the next time I fall into a similar issue..

@Andrea weekly check https://github.com/terraform-providers/terraform-provider-aws/blob/master/CHANGELOG.md

Terraform AWS provider. Contribute to terraform-providers/terraform-provider-aws development by creating an account on GitHub.
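
To avoid being surprised by an older cached provider, the version can be pinned. A small sketch in the 0.12 style of the time (the version argument inside the provider block); 2.33 is simply the version that worked in this thread, and the variables are placeholders:

```hcl
variable "region" {
  type = string
}

variable "cluster_role_arn" {
  type = string
}

variable "subnet_ids" {
  type = list(string)
}

provider "aws" {
  # Require a provider release that supports tags on aws_eks_cluster
  version = "~> 2.33"
  region  = var.region
}

resource "aws_eks_cluster" "test" {
  name     = "k8s-test"
  role_arn = var.cluster_role_arn

  vpc_config {
    subnet_ids = var.subnet_ids
  }

  tags = {
    Name = "k8s_test_masters"
  }
}
```

After changing the constraint, terraform init -upgrade fetches the newer provider.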

fair enough thanks @github140!

hey there, how can I iterate over two levels? I want to get values from a list inside another list, something like count = length(var.main_domains) and count_alias = length(var.main_domains[count.index].aliases)

https://github.com/hashicorp/hcl2/blob/master/hcl/hclsyntax/spec.md#splat-operators tuple[*].foo.bar[0] is approximately equivalent to [for v in tuple: v.foo.bar[0]]

Former temporary home for experimental new version of HCL - hashicorp/hcl2
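
Besides the splat operator, a common 0.12 pattern for two levels is a nested for expression wrapped in flatten, which gives one flat list that count can index. A sketch, assuming each element of main_domains has a name and a list of aliases (the name attribute is an assumption; only aliases appears in the question):

```hcl
variable "main_domains" {
  type = list(object({
    name    = string
    aliases = list(string)
  }))
}

locals {
  # One element per (domain, alias) pair
  domain_aliases = flatten([
    for d in var.main_domains : [
      for a in d.aliases : {
        domain = d.name
        alias  = a
      }
    ]
  ])
}

# A resource could then use count = length(local.domain_aliases)
# and read local.domain_aliases[count.index].alias
output "alias_count" {
  value = length(local.domain_aliases)
}
```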

2019-10-21

:zoom: Join us for “Office Hours” every Wednesday 11:30AM (PST, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is Oct 30, 2019 11:30AM.

Register for Webinar

#office-hours (our channel)

I’m interested in hearing about some directory structure being used to manage terraform projects.

Hi guys, has anyone tried updating the ECS module to attach multiple target groups and add an additional port in the task definition in Terraform?

we are working on upgrading the ECS modules to TF 0.12. If you want these new features added, please open an issue in the repo and describe the required changes in more detail

2019-10-22

Anyone using the terraform-aws-sns-lambda-notify-slack module? It doesn’t seem to include any outputs which makes it tricky to integrate with the things we want to feed events from. Opened https://github.com/cloudposse/terraform-aws-sns-lambda-notify-slack/issues/8

Hi, this module looks helpful to get slack notifications hooked up, however it's missing some outputs to make it easy to wire into other resources. My current use case is configuring a cloudwat…

This module is a wrapper around another module

If you are blocked, you might want to use the upstream module directly

did you guys see this?

Thanks for sharing

Anyone know a way to allow configuring S3 bucket variables for transitions and support not including those transitions?

For example, I have the following module resource:

resource "aws_s3_bucket" "media_bucket" {

bucket = module.label.id

region = var.region

acl = "private"

force_destroy = true

tags = module.label.tags

versioning {

enabled = var.versioning_enabled

}

lifecycle_rule {

id = "${module.label.id}-lifecycle"

enabled = var.enable_lifecycle

tags = module.label.tags

transition {

days = var.transition_to_standard_ia_days

storage_class = "STANDARD_IA"

}

transition {

days = var.transition_to_glacier_days

storage_class = "GLACIER"

}

expiration {

days = var.expiry_days

}

}

}

Is there a way that I can pass values to the vars expiry_days or transition_to_* and have them not apply. I want to be able to turn those transitions on for some environments, but keep them off for others.

use dynamic blocks for transition and expiration with condition to check those variables (if the vars are present, enable the dynamic blocks), similar to https://github.com/cloudposse/terraform-aws-ec2-autoscale-group/blob/master/main.tf#L39

Terraform module to provision Auto Scaling Group and Launch Template on AWS - cloudposse/terraform-aws-ec2-autoscale-group
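
A trimmed sketch of that suggestion applied to the bucket above. It assumes the variables default to null when a transition or expiration should be skipped; bucket_name stands in for the label module used in the original:

```hcl
variable "bucket_name" {
  type = string
}

variable "transition_to_standard_ia_days" {
  type    = number
  default = null
}

variable "transition_to_glacier_days" {
  type    = number
  default = null
}

variable "expiry_days" {
  type    = number
  default = null
}

resource "aws_s3_bucket" "media_bucket" {
  bucket = var.bucket_name
  acl    = "private"

  lifecycle_rule {
    id      = "${var.bucket_name}-lifecycle"
    enabled = true

    # Each block is generated only when its variable is set
    dynamic "transition" {
      for_each = var.transition_to_standard_ia_days == null ? [] : [var.transition_to_standard_ia_days]
      content {
        days          = transition.value
        storage_class = "STANDARD_IA"
      }
    }

    dynamic "transition" {
      for_each = var.transition_to_glacier_days == null ? [] : [var.transition_to_glacier_days]
      content {
        days          = transition.value
        storage_class = "GLACIER"
      }
    }

    dynamic "expiration" {
      for_each = var.expiry_days == null ? [] : [var.expiry_days]
      content {
        days = expiration.value
      }
    }
  }
}
```

If every variable is null the rule ends up with no actions, which S3 will likely reject, so in that case the whole lifecycle_rule would need to be made dynamic as well (or disabled another way).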

Good stuff @Andriy Knysh (Cloud Posse) — Will check that out. Thanks!

2019-10-23

hi guys, does anyone have a real working out of the box openvpn aws terraform module?

if not i’ll make one and share

Terraform module which creates OpenVPN on AWS. Contribute to terraform-community-modules/tf_aws_openvpn development by creating an account on GitHub.

A sample terraform setup for OpenVPN using Let’s Encrypt and Certbot to generate certificates - lmammino/terraform-openvpn

Hi, I haven’t tried them. but look like what you’re looking for.

Thanks!

@Andriy Knysh (Cloud Posse) as I mentioned to eric in office hours, sourcegraph reached out to me and I am building an LSIF indexer for terraform

basically it will allow web browser based code navigation for terraform

sounds interesting, thanks for sharing

hey all, thanks for open sourcing your terraform examples/modules. theyre a huge help.

i’m trying to use terraform-multi-az but just had a question.. how do i define the cidr i want to use? it’s a little unclear to me what this is doing:

    locals {
      public_cidr_block  = cidrsubnet(module.vpc.vpc_cidr_block, 1, 0)
      private_cidr_block = cidrsubnet(module.vpc.vpc_cidr_block, 1, 1)
    }

are you talking about https://github.com/cloudposse/terraform-aws-multi-az-subnets ?

Terraform module for multi-AZ public and private subnets provisioning - cloudposse/terraform-aws-multi-az-subnets

so it takes the cidr from the vpc module and then splits it up using the https://www.terraform.io/docs/configuration/functions/cidrsubnet.html function for private/public. The resulting cidr blocks are then broken up further when the subnets are actually created. https://github.com/cloudposse/terraform-aws-multi-az-subnets/blob/master/private.tf#L17-L21

The cidrsubnet function calculates a subnet address within a given IP network address prefix.

Terraform module for multi-AZ public and private subnets provisioning - cloudposse/terraform-aws-multi-az-subnets
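To make the split concrete, here is a small sketch with a literal VPC CIDR. The 10.0.0.0/16 range and the newbits value of 2 in the last line are assumptions chosen for illustration; the module derives its own values from its inputs.

```hcl
locals {
  # Assumed VPC CIDR, standing in for module.vpc.vpc_cidr_block.
  vpc_cidr = "10.0.0.0/16"

  # newbits = 1 adds one bit to the prefix, halving the VPC range.
  public_cidr_block  = cidrsubnet(local.vpc_cidr, 1, 0) # "10.0.0.0/17"
  private_cidr_block = cidrsubnet(local.vpc_cidr, 1, 1) # "10.0.128.0/17"

  # Each half can then be carved up again, for example per availability zone.
  # Adding 2 more bits gives four /19 blocks; netnum 1 selects the second one.
  second_public_subnet = cidrsubnet(local.public_cidr_block, 2, 1) # "10.0.32.0/19"
}
```

So the CIDR you control is the one passed to the vpc module; the subnet ranges follow from it.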

Yes Andrew. Ok let me take a look

is there a complete example somewhere showing how i’d use these to build a vpc from start to finish with everything – vpc, subnets, route tables, security groups, transit gateway attachment, transit gateway route tables, etc? it’s confusing to me how i’d use multiple modules together

By design, we’ve taken a different approach than many modules in the community.

We design our modules to be highly composable and solve one thing.

For example, with subnets, there are so many possible subnet strategies, that we didn’t overload the VPC module with that.

instead, we created (3) modules that implement various strategies

for example, our experience is there’s no “one size fits all” for organizations in how they want to subnet. it’s one of the more opinionated areas.

today, we don’t have a transit gateway module

but we have many vpc peering modules

it seems that using modules is the way to go, but i’m having trouble understanding how i can adapt my old stuff that’s written as individual resources and leverage all the work you guys have already done with modules

there might not be a clear path. it might mean taking one piece at a time and redoing it using modules.

There’s an example here - https://github.com/cloudposse/terraform-aws-multi-az-subnets/tree/master/examples/complete

Terraform module for multi-AZ public and private subnets provisioning - cloudposse/terraform-aws-multi-az-subnets

and that makes sense now regarding the cidr, i thought that the multi-az-subnets was meant to bringup the full vpc but its just for the subnets

As for adapting your old stuff, which is individual resources, to modules: generally you’d want to recreate things using modules where possible. While you can import/mv your existing terraform state to match the modules, it’s a lot of work and rarely ends well.

oh, yeah, i’m not talking about existing infra that’s running. not worried about state.

thanks.. will look through the examples some more.

almost all our modules (that have been converted to TF 0.12) have working examples with automated tests on a real AWS account, and many of them use vpc and subnets modules. Examples:

Terraform module to provision an AWS Elastic Beanstalk Environment - cloudposse/terraform-aws-elastic-beanstalk-environment

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

Terraform module to provision an Elastic MapReduce (EMR) cluster on AWS - cloudposse/terraform-aws-emr-cluster

thanks, yeah once you linked the first example i found a bunch of them

thanks sir

The EKS cluster is actually what I was trying to create. The only thing I need to add is a Route53 resolver / rules

2019-10-24

I am having a hard time retrieving an AWS Cognito pool id. data.aws_cognito_user_pools.pool[*].id seems to return the pool name instead. Has anyone run into this?

[*] ?

Try .ids instead of .id

Wasn’t working, but I found the solution. Need to wrap ids in tolist() and then it works. (This is TF 0.12)
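A short sketch of that fix, assuming Terraform 0.12 and a single pool matching the name. The variable user_pool_name is hypothetical and only there to keep the example self-contained.

```hcl
# Hypothetical variable holding the user pool's name.
variable "user_pool_name" {
  type = string
}

data "aws_cognito_user_pools" "pool" {
  name = var.user_pool_name
}

locals {
  # `ids` is a set of strings, and sets cannot be indexed directly,
  # so convert it to a list before taking the first element.
  user_pool_id = tolist(data.aws_cognito_user_pools.pool.ids)[0]
}
```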

Uhhh! Nice

2019-10-25

Playing around with https://github.com/cloudposse/terraform-aws-cloudfront-s3-cdn and getting a 502 when testing it out. My understanding before reading AWS documentation was that I should be able to access the assets through the given cloudfront domain so d1234.cloudfront.net/{s3DirPath} or the alias cdn.example.com/{s3DirPath}. The s3DirPath is static/app/img/favicon.jpg so d1234.cloudfront.net/static/app/img/favicon.png should work

Terraform module to easily provision CloudFront CDN backed by an S3 origin - cloudposse/terraform-aws-cloudfront-s3-cdn

After reading the AWS documentation https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/GettingStarted.html it seems like my s3 bucket needs to be less restrictive?

Get started with these basic steps to start delivering your content using CloudFront.

have you looked at this example https://github.com/cloudposse/terraform-aws-cloudfront-s3-cdn/tree/master/examples/complete ?

Terraform module to easily provision CloudFront CDN backed by an S3 origin - cloudposse/terraform-aws-cloudfront-s3-cdn

also

The s3DirPath is static/app/img/favicon.jpg so d1234.cloudfront.net/static/app/img/favicon.png should work

check the file extensions, you have jpg and png for the same file

also, in this module https://github.com/cloudposse/terraform-aws-cloudfront-s3-cdn, the S3 bucket is not in the website mode

if you need an S3 website with CloudFront CDN, do something like this https://github.com/cloudposse/terraform-root-modules/blob/master/aws/docs/main.tf

Example Terraform service catalog of “root module” blueprints for provisioning reference architectures - cloudposse/terraform-root-modules

i was typing the paths, not copy pasting. it’s jpg

must be the website mode. thanks!

website mode and not website mode - you should be able to get your files anyway. Take a look at the example above

in website mode, the bucket must have public read access

in normal mode, it does not
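For the website-mode case, public read access could look roughly like the sketch below. It assumes the inline website block that the AWS provider offered at the time; the bucket name and resource names are made up for illustration and are not taken from the linked root module.

```hcl
# Hypothetical bucket configured as an S3 website.
resource "aws_s3_bucket" "site" {
  bucket = "example-site-bucket"

  website {
    index_document = "index.html"
  }
}

# Policy document allowing anyone to read objects in the bucket.
data "aws_iam_policy_document" "public_read" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.site.arn}/*"]

    principals {
      type        = "AWS"
      identifiers = ["*"]
    }
  }
}

resource "aws_s3_bucket_policy" "public_read" {
  bucket = aws_s3_bucket.site.id
  policy = data.aws_iam_policy_document.public_read.json
}
```

In normal (non-website) mode the bucket can stay private, with access granted only to CloudFront.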

Is there a terraform provider for terraform cloud? I can’t find one

The Terraform Enterprise provider is used to interact with the many resources supported by Terraform Enterprise. The provider needs to be configured with the proper credentials before it can be used.

But that is enterprise

I could tell next week after our tf cloud demo

I think the client is the same

Endpoint is different

TFE and cloud are the same, except one can be self-hosted and the other one is SaaS

2019-10-26

Erik I use tf cloud.

tfe is the provider & remote is the backend

Tfe == tfc

Ok great! Do you know if one can use their tfe provider with tfc without upgrading?

Not sure how you mean.

The tfe provider requires no changes to be used with tfc

I mean upgrading from a free tier

Free tfc to the 2nd or 3rd tfc tier? No, don’t believe so. Just a SaaS package upgrade

Tfc to tfe though does require changes to the host name in the provider config

Yes, I think it is the same client with a different hostname (since you host it yourself), and you will have to migrate modules and such, but I guess hashicorp will do that for you
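As a rough sketch of what that means in practice, the tfe provider block differs only in its hostname. The variable tfe_token and the self-hosted hostname in the comment are placeholders, not values from this discussion.

```hcl
# Hypothetical variable holding an API token for the target installation.
variable "tfe_token" {
  type = string
}

provider "tfe" {
  # Terraform Cloud (SaaS) endpoint. For a self-hosted Terraform Enterprise
  # install, this would be your own hostname, e.g. "tfe.example.com".
  hostname = "app.terraform.io"
  token    = var.tfe_token
}
```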

2019-10-27

2019-10-28

:zoom: Join us for “Office Hours” every Wednesday 11:30AM (PST, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is Nov 06, 2019 11:30AM.

Register for Webinar

#office-hours (our channel)


I remember someone here writing a “serverless” module wholly in terraform, but now I can’t find it… Anyone have that link?

Terraform module which creates an ECS Service, IAM roles, Scaling, ALB listener rules.. Fargate & AWSVPC compatible - blinkist/terraform-aws-airship-ecs-service

api gateway + lambda?

pretty much, i can’t remember who here posted the module they were working on

i’ll try the sweetops archive

@Maxim Mironenko (Cloud Posse) is working to break down the archive by month

it will make it easier to find things.

it’s all good, i like the one page per channel, lets find-in-page do its thing

I love you @Andriy Knysh (Cloud Posse) https://github.com/cloudposse/terraform-aws-ecs-alb-service-task you made my day!!!!!!!!!!! 0.12 ready..

Terraform module which implements an ECS service which exposes a web service via ALB. - cloudposse/terraform-aws-ecs-alb-service-task

props to @Andriy Knysh (Cloud Posse) !

he’ll have the rest finished anyday now (today or tomorrow)

Thanks @jose.amengual

amazing, thanks so much

found it, thank you sweetops archive! https://github.com/rms1000watt/serverless-tf

Serverless but in Terraform . Contribute to rms1000watt/serverless-tf development by creating an account on GitHub.

aha! by @rms1000watt

Team, may I have a working terraform module for AWS Fargate with ALB?

Terraform module which implements an ECS service which exposes a web service via ALB. - cloudposse/terraform-aws-ecs-alb-service-task

Thank you Pepe,

np

2019-10-29

guys I have a bit of an issue with terraform-aws-modules/eks/aws

I have a cluster up and running, but when I ssh into a pod, localhost:10255 is unreachable

[root@ip-10-2-201-138 metricbeat]# curl localhost:10255

curl: (7) Failed connect to localhost:10255; Connection refused

does anyone know how to enable this port at the kubernetes level?

if anyone is interested, this is the issue https://github.com/awslabs/amazon-eks-ami/issues/128

Port 10255 is no longer open by default and it was not marked as release…

What happened: I have an EKS 1.10 cluster with worker nodes running 1.10.3 and everything is great. I decided to create a new worker group today with the 1.10.11 ami. Everything is great except it …

ask in #terraform-aws-modules channel

How to override ECS task role when using terraform in code build? I am trying to set profile on backend config but it’s not picking up the profile

please provide more info, what modules are you using, share some code

I am using my custom AWS code with partial backend init

With terragrunt

It gets stuck exactly here

Terraform AWS provider. Contribute to terraform-providers/terraform-provider-aws development by creating an account on GitHub.