#terraform (2019-11)

Discussions related to Terraform or Terraform Modules


Archive: https://archive.sweetops.com/terraform/

2019-11-01

Hey guys, quick question, in case anyone has ever come across this: “Is there any way we can make our S3 bucket private and then have CloudFront provide a URL through which the content of this private S3 bucket can be viewed?”

I hope this link helps: https://aws.amazon.com/blogs/security/how-to-use-bucket-policies-and-apply-defense-in-depth-to-help-secure-your-amazon-s3-data/

Amazon S3 provides comprehensive security and compliance capabilities that meet even the most stringent regulatory requirements. It gives you flexibility in the way you manage data for cost optimization, access control, and compliance. However, because the service is flexible, a user could accidentally configure buckets in a manner that is not secure. For example, let’s […]
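A minimal sketch of the pattern commonly used for this at the time: keep the bucket private and make a CloudFront origin access identity (OAI) the only principal allowed to read it. This assumes AWS provider 2.x/3.x-era resources; var.bucket_name, the "site" labels and the index.html root object are hypothetical. Newer provider versions also support origin access control, which AWS now recommends over OAI.

```hcl
variable "bucket_name" {
  type        = string
  description = "Hypothetical name for the private bucket"
}

# Private bucket: no public ACL grants, no public policy.
resource "aws_s3_bucket" "site" {
  bucket = var.bucket_name
}

# Safety net: refuse any public ACLs or public bucket policies.
resource "aws_s3_bucket_public_access_block" "site" {
  bucket                  = aws_s3_bucket.site.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Identity CloudFront uses when it fetches objects from S3.
resource "aws_cloudfront_origin_access_identity" "site" {
  comment = "Access to ${var.bucket_name}"
}

# Only the OAI may read objects (a specific principal, so not "public").
data "aws_iam_policy_document" "site" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.site.arn}/*"]

    principals {
      type        = "AWS"
      identifiers = [aws_cloudfront_origin_access_identity.site.iam_arn]
    }
  }
}

resource "aws_s3_bucket_policy" "site" {
  bucket = aws_s3_bucket.site.id
  policy = data.aws_iam_policy_document.site.json
}

resource "aws_cloudfront_distribution" "site" {
  enabled             = true
  default_root_object = "index.html" # assumes the bucket has an index.html

  origin {
    domain_name = aws_s3_bucket.site.bucket_regional_domain_name
    origin_id   = "s3-${var.bucket_name}"

    s3_origin_config {
      origin_access_identity = aws_cloudfront_origin_access_identity.site.cloudfront_access_identity_path
    }
  }

  default_cache_behavior {
    target_origin_id       = "s3-${var.bucket_name}"
    viewer_protocol_policy = "redirect-to-https"
    allowed_methods        = ["GET", "HEAD"]
    cached_methods         = ["GET", "HEAD"]

    forwarded_values {
      query_string = false
      cookies {
        forward = "none"
      }
    }
  }

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }

  # Serve on the default *.cloudfront.net domain.
  viewer_certificate {
    cloudfront_default_certificate = true
  }
}

output "url" {
  value = "https://${aws_cloudfront_distribution.site.domain_name}"
}
```

Requests straight to the S3 URL are denied, while the CloudFront URL works because CloudFront signs its origin requests as the OAI.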

acm certs DNS validation with assume role


here’s what I’m trying to do: create a cert in the dev account with a subject alternative name (SAN) in the “vanity” domains account

and validate both with DNS validation

here’s the problem I’m getting: Access Denied!

I run tf apply from my ops profile, which is a trusted entity in both my dev and domains accounts

You need two separate aws providers

Is that how you have it setup?

I straight up copy / pasted the provider blocks used in the https://github.com/cloudposse/terraform-aws-vpc-peering-multi-account/blob/master/requester.tf#L29

Terraform module to provision a VPC peering across multiple VPCs in different accounts by using multiple providers - cloudposse/terraform-aws-vpc-peering-multi-account

yes! thanks @Igor

here’s a gist with the least possible stuff; the acm parts are straight from tf docs

on looking at the vpc-peering module above, I notice there’s no profile attr, just the assume_role - I wonder if that’s my issue.

the Access Denied error indicates that the role assumed in the dev account does not have permission to access the Route 53 record in the domains account.

but what I’d expect is that the domains role is assumed to validate that record, because the second provider alias is used.

aws_route53_record.cert_validation_alt1 doesn’t have provider attribute set

I assume you want that to be aws.route53 as well

I can’t tell from the gist what your code is trying to do

Oh, I see, you’re using a SAN. I have never tried that before – does AWS allow multiple cert-validations on the same cert?

I also don’t see you using the aws_acm_certificate_validation resource

aws_route53_record.cert_validation_alt1 doesn’t have provider…

yep @Igor that could be it!

you’re right I left that off of the gist; copy paste fail. updating now to better show the intent.
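A minimal sketch of the two-provider layout discussed in this thread, assuming Terraform 0.12 and that the dev domain's validation record goes into a zone the dev role can write to. All variable names (dev_role_arn, domains_role_arn, dev_domain, vanity_domain, dev_zone_id, vanity_zone_id) and the region are hypothetical. Each validation record is created with the provider of the account that owns its zone, and aws_acm_certificate_validation waits for both.

```hcl
# Hypothetical inputs.
variable "dev_role_arn" { type = string }
variable "domains_role_arn" { type = string }
variable "dev_domain" { type = string }    # record goes in a dev-account zone
variable "vanity_domain" { type = string } # SAN in the domains account
variable "dev_zone_id" { type = string }
variable "vanity_zone_id" { type = string }

# Default provider: the dev account, which owns the certificate.
provider "aws" {
  region = "us-east-1"
  assume_role {
    role_arn = var.dev_role_arn
  }
}

# Aliased provider: the domains account, which owns the vanity zone.
provider "aws" {
  alias  = "route53"
  region = "us-east-1"
  assume_role {
    role_arn = var.domains_role_arn
  }
}

resource "aws_acm_certificate" "cert" {
  domain_name               = var.dev_domain
  subject_alternative_names = [var.vanity_domain]
  validation_method         = "DNS"
}

locals {
  # Validation options keyed by domain, so their order does not matter.
  dvo = {
    for o in aws_acm_certificate.cert.domain_validation_options : o.domain_name => o
  }
}

resource "aws_route53_record" "cert_validation" {
  zone_id = var.dev_zone_id
  name    = local.dvo[var.dev_domain].resource_record_name
  type    = local.dvo[var.dev_domain].resource_record_type
  records = [local.dvo[var.dev_domain].resource_record_value]
  ttl     = 60
}

resource "aws_route53_record" "cert_validation_alt1" {
  provider = aws.route53 # the attribute missing from the gist
  zone_id  = var.vanity_zone_id
  name     = local.dvo[var.vanity_domain].resource_record_name
  type     = local.dvo[var.vanity_domain].resource_record_type
  records  = [local.dvo[var.vanity_domain].resource_record_value]
  ttl      = 60
}

# Waits until validation completes for both names on the certificate.
resource "aws_acm_certificate_validation" "cert" {
  certificate_arn = aws_acm_certificate.cert.arn
  validation_record_fqdns = [
    aws_route53_record.cert_validation.fqdn,
    aws_route53_record.cert_validation_alt1.fqdn,
  ]
}
```

Keying the validation options by domain name avoids relying on their order, which matters because domain_validation_options changed from a list to a set between AWS provider 2.x and 3.x.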

2019-11-02

Interesting read on why the Terraform providers for Kubernetes and Helm are less than ideal… see the comment by the Pulumi developer. https://www.reddit.com/r/devops/comments/awy81c/managing_helm_releases_terraform_helmsman/

Hey everyone, We are continuing to move more of our stack to Kube, specifically GKE, and have gone through a few evaloutions as to how we handle…

(It’s also a somewhat older post. Some of this may have been addressed in later releases of the providers and 0.12.)

2019-11-03

I renamed a folder, and now terraform is creating a new resource from that folder. How do I fix this in the state?

How do you use this folder in terraform? Module?

If it’s a module, the path should not matter as long as the module name stays the same

Actually, this folder stores a terragrunt file

Oh, so it is terragrunt. I haven’t used it, so I won’t be able to help
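One likely reason the rename matters with terragrunt (an assumption about this particular setup): a common root terragrunt.hcl derives the state key from the folder path via path_relative_to_include(), so a renamed folder points at a new, empty state and everything is planned as new. A sketch of such a config, with a hypothetical bucket and region; copying the existing state object to the new key, or renaming the folder back, is the usual way out. For a plain Terraform module rename, terraform state mv (or a moved block in Terraform 1.1 and later) updates the addresses instead.

```hcl
# Root terragrunt.hcl (hypothetical bucket and region).
remote_state {
  backend = "s3"
  config = {
    bucket = "example-terraform-state"
    # Built from the child folder's path relative to this file,
    # e.g. "prod/vpc/terraform.tfstate". Renaming "vpc" changes the key.
    key     = "${path_relative_to_include()}/terraform.tfstate"
    region  = "us-east-1"
    encrypt = true
  }
}
```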

In case anyone else gets a requirement to convert TitleCaseText to kebab-case-text in Terraform, here you go:

lower(join("-", flatten(regexall("([A-Z][a-z]*)", "ThisIsTitleCaseStuff"))))

I don’t think I’ll ever need to do that again, but it felt a shame to waste
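The same expression broken into steps, with the intermediate values as comments (traced by hand, so worth confirming in terraform console). The variable name is hypothetical.

```hcl
variable "title_case" {
  type    = string
  default = "ThisIsTitleCaseStuff"
}

locals {
  # regexall with one capture group returns one list per match:
  #   [["This"], ["Is"], ["Title"], ["Case"], ["Stuff"]]
  # flatten turns that into ["This", "Is", "Title", "Case", "Stuff"].
  words = flatten(regexall("([A-Z][a-z]*)", var.title_case))

  # "This-Is-Title-Case-Stuff" -> "this-is-title-case-stuff"
  kebab = lower(join("-", local.words))
}

output "kebab" {
  value = local.kebab
}
```

Anything the pattern does not match is dropped: a leading lowercase word and digits disappear ("myValue2" becomes "value"), and acronyms split letter by letter ("HTTPServer" becomes "h-t-t-p-server").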

Has anyone seen any good resources for using terratest (examples etc.), ideally tied into a CI/CD pipeline?

@Bruce check out these repos: https://github.com/search?q=topic%3Ahcl2+org%3Acloudposse+fork%3Atrue


They all use terratest with ci/cd (#codefresh)

basically, every terraform 0.12 (HCL2) module we have has terratest

Thanks @Erik Osterman (Cloud Posse)

2019-11-04

Join us for “Office Hours” every Wednesday 11:30AM (PST, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is Nov 13, 2019 11:30AM.


#office-hours (our channel)


hey everyone, couple questions about the EKS module. https://github.com/cloudposse/terraform-aws-eks-workers

- The default ‘use latest image’ filter and data source doesn’t seem to work for me. Using a var.region value of "ap-southeast-2" results in 1.11 nodes with a 1.14 cluster (I set the cluster version to 1.14). Any ideas? This works: aws ssm get-parameter --name /aws/service/eks/optimized-ami/1.14/amazon-linux-2/recommended/image_id --region ap-southeast-2 --query "Parameter.Value" --output text

- I think we will generally set AMIs, and in doing so I see the launch template has been updated, but the new version is not the default and the nodes aren’t rolling automatically (updated in place, dummy cluster). Is this expected behaviour? If I run terraform destroy -target=workerpool1 followed by an apply, I get the newer node version.

- Will PR a custom configmap template once I get the cluster going.

- Have egress from an alpine pod to 8.8.8.8:53 but don’t seem to have DNS in the cluster. Using multi-AZ subnets and tagging private (nodes), public (nlb/alb), shared, owned.

Terraform module to provision an AWS AutoScaling Group, IAM Role, and Security Group for EKS Workers - cloudposse/terraform-aws-eks-workers

2019-11-05

data "aws_ssm_parameter" "eks_ami" {

name = "/aws/service/eks/optimized-ami/${var.kubernetes_version}/amazon-linux-2/recommended/image_id"

}

module "eks_workers_1" {

source = "git::https://github.com/cloudposse/terraform-aws-eks-workers.git?ref=tags/0.10.0"

use_custom_image_id = true

image_id = data.aws_ssm_parameter.eks_ami.value

oh good thinking, sorry I didn’t look at other data sources.

howdy all… I’m trying to use cidrsubnets() to take a /16 and chop it up into /24s for my subnets…

however, I think that I may need to hardcode the cidr blocks I want to use for my subnets

because:

A: I don't know if I'll need to add more subnets or AZs in the future

B: I don't think that cidrsubnets() will accurately chop up the cidr blocks into my subnets consistently

is there a better way to go about this other than hard coding the /24s I want to assign to certain subnets?

I’m guessing there is some magic I’m missing with cidrsubnets(var.vpc_cidr_block, N, length(var.aws_azs)) or something like that

take a look at our subnet modules

Terraform module for multi-AZ public and private subnets provisioning - cloudposse/terraform-aws-multi-az-subnets

This one looks interesting

Yes, we use that one all the time

we factor in a “max subnets” fudge factor

so it will slice and dice it based on the max

but allocate the number you need now
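A sketch of one way to read the “max subnets” idea (not the module’s actual code): fix the new-bits size, derive each subnet’s network number from its AZ’s index, and offset each tier by a reserved block. The /16, the AZ list and max_subnets = 16 are hypothetical.

```hcl
variable "vpc_cidr_block" {
  type    = string
  default = "10.0.0.0/16"
}

variable "azs" {
  type    = list(string)
  default = ["ap-southeast-2a", "ap-southeast-2b", "ap-southeast-2c"]
}

locals {
  # Hypothetical headroom: room for up to 16 subnets per tier.
  max_subnets = 16

  # /16 + 8 new bits = /24 subnets.
  newbits = 8

  # Private tier uses network numbers 0..15, public tier 16..31.
  # An AZ's index fixes its network number, so appending an AZ later
  # does not shift the CIDRs of the existing subnets.
  private_cidrs = [for i, az in var.azs : cidrsubnet(var.vpc_cidr_block, local.newbits, i)]
  public_cidrs  = [for i, az in var.azs : cidrsubnet(var.vpc_cidr_block, local.newbits, i + local.max_subnets)]
}

output "private_cidrs" {
  value = local.private_cidrs # 10.0.0.0/24, 10.0.1.0/24, 10.0.2.0/24
}

output "public_cidrs" {
  value = local.public_cidrs # 10.0.16.0/24, 10.0.17.0/24, 10.0.18.0/24
}
```

Reordering the AZ list, or removing an AZ from the middle of it, would still shift CIDRs, so treating the list as append-only keeps the allocation stable.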

Hey team, is there a way to pass the assumed role credentials for the provider into a null_resource local-exec provisioner?

@Erik Osterman (Cloud Posse) have you ever done anything like this?

my gut tells me it should just work

the environment variables are inherited

ah, but we assume before we call terraform

i’m guessing you’re assuming in terraform

then no-go

assuming in terraform

Uhhh!

painful

not sure how you’ll work around that one

fwiw, we assume role with vault

then call terraform

yeah, right now I’m assuming a role with a provider block that uses the session token I have in my local machine’s env

so I’m assuming it uses my local environment variables and not those assumed in terraform?

@Callum Robertson look what @Andriy Knysh (Cloud Posse) just did

what Update provisioner "local-exec": Optionally install external packages (AWS CLI and kubectl) if the worstation that runs terraform plan/apply does not have them installed Optionally…

for getting assumed roles to work in a local-exec

aws_cli_assume_role_arn=${var.aws_cli_assume_role_arn}

aws_cli_assume_role_session_name=${var.aws_cli_assume_role_session_name}

if [[ -n "$aws_cli_assume_role_arn" && -n "$aws_cli_assume_role_session_name" ]] ; then

echo 'Assuming role ${var.aws_cli_assume_role_arn} ...'

mkdir -p ${local.external_packages_install_path}

cd ${local.external_packages_install_path}

curl -L https://github.com/stedolan/jq/releases/download/jq-${var.jq_version}/jq-linux64 -o jq

chmod +x ./jq

source <(aws --output json sts assume-role --role-arn "$aws_cli_assume_role_arn" --role-session-name "$aws_cli_assume_role_session_name" | jq -r '.Credentials | @sh "export AWS_SESSION_TOKEN=\(.SessionToken)\nexport AWS_ACCESS_KEY_ID=\(.AccessKeyId)\nexport AWS_SECRET_ACCESS_KEY=\(.SecretAccessKey) "')

echo 'Assumed role ${var.aws_cli_assume_role_arn}'

fi

we’ve been testing the EKS modules on Terraform Cloud, and needed to add two things:

- Install external packages, e.g. the AWS CLI and kubectl, since those are not present on the TF Cloud Ubuntu workers

- If we have a multi-account structure with users in the identity account, but want to deploy to e.g. the staging account, we provide the access key of an IAM user from the identity account and the ARN of the role to assume to access the staging account. TF itself assumes that role, and the AWS CLI also assumes it (see above) to get the kubeconfig from the EKS cluster and then run kubectl to provision the auth configmap

epic work @Andriy Knysh (Cloud Posse)!

I’ll test this out over the weekend and report

the PR has been merged to master

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or "me to…

Found this, sounds like an awesome idea

environment {

AWS_ACCESS_KEY_ID = "${data.aws_caller_identity.current.access_key}"

AWS_SECRET_ACCESS_KEY = "${data.aws_caller_identity.current.secret_key}"

}

clever

didn’t know they were available like that

they’re not right now =[

Hoping HashiCorp reviews the pull request and gets it added in

looks like the secrets get persisted in the statefile however, so will need some workaround on that

what are you trying to solve? … using local exec

aws s3 sync

for bucket objects

aha

yea, i’ve had that pain before

Looks like I’ll have to keep this separate from my tf configuration

and put it into a build pipeline of sorts

do you strictly need to use aws s3 sync?

maybe just a stage that jenkins runs

e.g. non deterministic list of files

yeah, it’s just a sprawling directory of web assets

from memory, the TF resource for bucket uploads works with a single object (this was a while ago)

sooooo

what about using local exec to build a list of files?

then use raw terraform to upload them

e.g. call find

could you give me an example?
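A sketch of the “list the files, then upload with plain Terraform” idea, swapping the find-via-local-exec step for the built-in fileset() function (available from Terraform 0.12.8, so it may postdate some setups). The variable names, the ./public path and the small MIME map are hypothetical; in AWS provider 4.x and later the resource is named aws_s3_object.

```hcl
variable "bucket_name" {
  type = string
}

variable "assets_dir" {
  type    = string
  default = "./public" # hypothetical path to the web assets
}

locals {
  # Minimal extension -> Content-Type map; extend as needed.
  mime_types = {
    html = "text/html"
    css  = "text/css"
    js   = "application/javascript"
    json = "application/json"
    png  = "image/png"
    svg  = "image/svg+xml"
  }
}

# One S3 object per file found under assets_dir (recursive glob, files only).
resource "aws_s3_bucket_object" "assets" {
  for_each = fileset(var.assets_dir, "**")

  bucket = var.bucket_name
  key    = each.value
  source = "${var.assets_dir}/${each.value}"

  # Re-upload only when the file content changes.
  etag = filemd5("${var.assets_dir}/${each.value}")

  # Look up the type from the last dot-separated part of the file name.
  content_type = lookup(
    local.mime_types,
    element(split(".", each.value), length(split(".", each.value)) - 1),
    "application/octet-stream"
  )
}
```

Unlike aws s3 sync without --delete, files removed from the directory are also deleted from the bucket, because they drop out of the for_each set. A very large directory means one resource per file, which slows down plans.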

You could try using local-exec to build an aws config profile with the assume role config, then pass the --profile option to aws-cli?

See credential_source = Environment… https://docs.aws.amazon.com/cli/latest/topic/config-vars.html#using-aws-iam-roles
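A sketch of that profile-based approach, assuming the credentials Terraform runs with (for example the ones aws-vault exports) are present as environment variables: write an AWS CLI config whose profile uses credential_source = Environment, then point the local-exec at it through AWS_CONFIG_FILE. The file path, the "deploy" profile name, the ./public directory and the variables are hypothetical.

```hcl
variable "role_arn" {
  type = string
}

variable "bucket_name" {
  type = string
}

# AWS CLI config: profile "deploy" assumes role_arn using whatever
# credentials are in the environment Terraform was started with.
resource "local_file" "aws_cli_config" {
  filename = "${path.module}/.aws-cli-config"
  content  = <<-EOT
    [profile deploy]
    role_arn = ${var.role_arn}
    credential_source = Environment
  EOT
}

resource "null_resource" "sync_assets" {
  # Re-run on every apply; a hash of the assets would be more precise.
  triggers = {
    always = timestamp()
  }

  provisioner "local-exec" {
    command = "aws s3 sync ./public s3://${var.bucket_name} --profile deploy"

    # Added on top of the environment inherited from Terraform.
    environment = {
      AWS_CONFIG_FILE = local_file.aws_cli_config.filename
    }
  }
}
```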

this is what I do to assume a role:

aws-vault exec PROFILE -- terraform apply -var 'region=us-east-2' -var-file=staging.tfvars

in my local machine

providers.conf

provider "aws" {

region = var.region

# Comment this if you are using aws-vault locally

assume_role {

role_arn = var.role_arn

}

}

Jenkins, with an instance profile, uses plain terraform

the role arn is passed as a var

pretty easy
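Instead of commenting the assume_role block in and out, it can be made conditional with a dynamic block. A sketch, assuming Terraform 0.12 or later, which allows dynamic blocks inside provider blocks: passing -var role_arn=... from Jenkins adds the block, while leaving the default empty string (as when running under aws-vault locally) omits it.

```hcl
variable "region" {
  type = string
}

# Empty string means "no role to assume" (e.g. running under aws-vault).
variable "role_arn" {
  type    = string
  default = ""
}

provider "aws" {
  region = var.region

  # Zero or one assume_role blocks, depending on whether a role ARN is set.
  dynamic "assume_role" {
    for_each = var.role_arn == "" ? [] : [var.role_arn]
    content {
      role_arn = assume_role.value
    }
  }
}
```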

yeah, I’m using AWS-VAULT as well but right now it assumes security account credentials with MFA to get the state file and lock in the state bucket

I’ve just made this a stage that my Jenkins host runs with its instance profile, as you suggested @jose.amengual

Thanks all!

2019-11-06

Hi, how can I pass environment variables from a file using https://github.com/cloudposse/terraform-aws-ecs-container-definition

Terraform module to generate well-formed JSON documents (container definitions) that are passed to the aws_ecs_task_definition Terraform resource - cloudposse/terraform-aws-ecs-container-definition
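A sketch of one way to feed environment variables from a file, assuming a flat JSON file and that the module’s environment input takes a list of name/value objects (check variables.tf of the version you pin). The env.json file, container name and image are hypothetical.

```hcl
locals {
  # Hypothetical env.json next to this file: {"LOG_LEVEL": "info", "PORT": "8080"}
  env_map = jsondecode(file("${path.module}/env.json"))

  # Convert the map into the list of {name, value} objects used by ECS
  # container definitions; sorting the keys keeps the diff stable.
  env_list = [
    for k in sort(keys(local.env_map)) : {
      name  = k
      value = tostring(local.env_map[k])
    }
  ]
}

module "container_definition" {
  # Pin a release tag in practice instead of master.
  source = "git::https://github.com/cloudposse/terraform-aws-ecs-container-definition.git?ref=master"

  container_name  = "app"          # hypothetical
  container_image = "nginx:latest" # hypothetical
  environment     = local.env_list # input name assumed; confirm in variables.tf
}
```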

I’ve managed to deploy multiple task definitions and services to an AWS ECS service using terraform, but now I’m stumped at simple service discovery. I don’t want to use service discovery through Route53, as this seems overkill for now when the deployed services (docker containers) exist on the same host ECS EC2 instance. Does anyone have any recommendations beyond using docker inspect and grep to discover the subnet IP address?

Consul?

Istio? AppMesh? Consul is really good though, I would check that out.

has anyone gotten terraform 0.12 syntax checking and highlighting to work with VSCode?

SweetOps is a collaborative DevOps community. We welcome engineers from around the world of all skill levels, backgrounds, and experience to join us! This is the best place to talk shop, ask questions, solicit feedback, and work together as a community to build sweet infrastructure.

not sure if helpful

also, there’s someone in this channel that has been working on something for vscode/HCL2 I think

thanks @loren!

Hello, does anyone know of a VS Code extension that works well with v0.12 syntax?

but i can’t remember who it was

https://github.com/mauve/vscode-terraform/issues/157#issuecomment-547690010 I’ve had it working for a couple of weeks. It’s stable but doesn’t have 100% functionality

Hi! Is there any plans to implement hcl2 support?

crud, i grabbed the wrong link. it’s the next reply

What’s up? @Erik Osterman (Cloud Posse)

has anyone gotten terraform 0.12 syntax checking and highlighting to work with VSCode?

@MattyB I will fix most of the issues; I’ve been busy preparing for the release of the Terraform provider for Azure DevOps

Is this the same provider Microsoft is working on? https://github.com/microsoft/terraform-provider-azuredevops

Terraform provider for Azure DevOps. Contribute to microsoft/terraform-provider-azuredevops development by creating an account on GitHub.

No worries. It does what I need. Thanks!

Does anybody here use Azure DevOps? Just curious

I used to. No complaints.

I use it as well and have been pretty happy with it

hi @Andriy Knysh (Cloud Posse) @Igor Rodionov I’ve been trying to set up an EKS cluster using this module to set up node groups https://github.com/cloudposse/terraform-aws-eks-workers. How can I set a list of labels on the kube nodes? Thank you in advance


by list of labels you mean tagging?


tags attached only to ec2 instances

Good timing, we tried doing this but the nodes wouldn’t join the cluster.

# FIXME #

bootstrap_extra_args = "--node-labels=example.com/worker-pool=01,example.com/worker-pool-colour=blue"

We don’t have SSH yet, so it’s commented out until we can debug

it could be done using the three variables https://github.com/cloudposse/terraform-aws-eks-workers/blob/master/userdata.tpl


Come and read 90 days of AWS EKS in Production on Kubedex.com. The number one site to Discover, Compare and Share Kubernetes Applications.

@vitaly.markov @kskewes thanks for testing the modules

it would be super nice if we could add your changes to kubelet params to the test https://github.com/cloudposse/terraform-aws-eks-cluster/tree/master/examples/complete


and here in the automatic Terratest we have Go code to wait for all worker nodes to join the cluster https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/test/src/examples_complete_test.go#L81


which was tested many times

but we did not test it with node labels

would be nice to add it to the test and test automatically

TestExamplesComplete 2019-11-06T18:55:51Z command.go:53: Running command terraform with args [output -no-color eks_cluster_security_group_name]

TestExamplesComplete 2019-11-06T18:55:51Z command.go:121: eg-test-eks-cluster

Waiting for worker nodes to join the EKS cluster

Worker Node ip-172-16-100-7.us-east-2.compute.internal has joined the EKS cluster at 2019-11-06 18:56:05 +0000 UTC

Worker Node ip-172-16-147-3.us-east-2.compute.internal has joined the EKS cluster at 2019-11-06 18:56:06 +0000 UTC

All worker nodes have joined the EKS cluster

@Andriy Knysh (Cloud Posse) I’ve found another bug (I think): by default terraform creates a control plane with the Kubernetes version specified in the tf vars, but the node group is provisioned with the default version provided by the AMI, currently 1.11

I specify another variable eks_worker_ami_name_filter = "amazon-eks-node-${var.kubernetes_version}*" to use correct ami

+ kubectl version --short

Client Version: v1.16.0

Server Version: v1.14.6-eks-5047ed

+ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-172-16-109-48.eu-central-1.compute.internal Ready <none> 12m v1.11.10-eks-17cd81

ip-172-16-116-136.eu-central-1.compute.internal Ready <none> 99m v1.11.10-eks-17cd81

ip-172-16-143-206.eu-central-1.compute.internal Ready <none> 99m v1.11.10-eks-17cd81

ip-172-16-157-119.eu-central-1.compute.internal Ready <none> 12m v1.11.10-eks-17cd81

I think EKS currently supports 1.14 only

and you can set it here https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/main.tf#L101


oh you are talking about worker nodes?

your changes would be good

but still I think EKS supports 1.14 only (last time I checked)

is most_recent not working to select the latest version? https://github.com/cloudposse/terraform-aws-eks-workers/blob/master/main.tf#L141


and you can always use your own AMI https://github.com/cloudposse/terraform-aws-eks-workers/blob/master/main.tf#L182

is most_recent not working to select the latest version?

yeap, it doesn’t work correctly

Correct. It does not. I haven’t tested suggested change above, we pin AMI.
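A sketch of pinning the Kubernetes version in the AMI lookup itself, assuming the EKS-optimized Amazon Linux 2 naming scheme (amazon-eks-node-VERSION-vDATE) and the Amazon EKS AMI owner account used in most commercial regions. One plausible reason for the 1.11 nodes: most_recent only picks the newest match by creation date, so if the name filter does not constrain the version, the result depends on whatever the pattern happens to match.

```hcl
variable "kubernetes_version" {
  type    = string
  default = "1.14"
}

# most_recent sorts *all* matching AMIs by creation date, so the
# version has to be part of the name filter.
data "aws_ami" "eks_worker" {
  most_recent = true
  owners      = ["602401143452"] # Amazon EKS AMI account (most commercial regions)

  filter {
    name   = "name"
    values = ["amazon-eks-node-${var.kubernetes_version}-v*"]
  }
}

output "eks_worker_ami_id" {
  value = data.aws_ami.eks_worker.id
}
```

This is the same idea as the eks_worker_ami_name_filter override above; the SSM parameter lookup shown earlier in the channel is an alternative that lets AWS pick the recommended image per version.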

Just utilised custom security groups, so glad this is available in workers module.

@Andriy Knysh (Cloud Posse) I’ve opened a PR https://github.com/cloudposse/terraform-aws-eks-workers/pull/27 that solves the issue with extra kubelet args

I've faced with issue passing node labels to bootstrap.sh, the bootstrap cannot completed when we pass extra kubelet args as an argument, and thus node won't join to the kubernetes cluster….

How is everyone breaking up their Terraform stack for an ‘environment’? We have split ours out by provider (aws/gitlab/etc), but with a migration to AWS I’m looking at breaking up our AWS config within an environment. This means multiple state files, some copies into variables files, and a dependency order for standing up the whole environment, but it does limit the blast radius for changes. We put multiple state files into the same S3 bucket using the CP s3 backend module (thank you!). Very rough tree output in thread.

$ tree

. (production)

├── ap-southeast-2

│ ├── eks

│ │ ├── eks.tf

│ │ ├── manifests

│ │ │ └── example-stg-ap-southeast-2-eks-01-cluster-kubeconfig

│ │ ├── provider.tf -> ../provider.tf

│ │ ├── variables-env.tf -> ../../variables-env.tf

│ │ └── variables.tf -> ../variables.tf

│ ├── gpu.tf

│ ├── managed_services

│ │ ├── aurora.tf

│ │ ├── elasticache.tf

│ │ ├── mq.tf

│ │ ├── provider.tf -> ../provider.tf

│ │ ├── s3.tf

│ │ ├── variables-env.tf -> ../../variables-env.tf

│ │ └── variables.tf -> ../variables.tf

│ ├── provider.tf

│ ├── variables.tf

│ └── vpc

│ ├── provider.tf -> ../provider.tf

│ ├── subnets.tf

│ ├── variables-env.tf -> ../../variables-env.tf

│ ├── variables.tf -> ../variables.tf

│ └── vpc.tf

├── us-west-2

│ ├── eks

│ │ ├── eks.tf

<snip>

└── variables-env.tf

Note we are a linux + macOS shop and use symlinks in terraform and ansible already.

we do it this way

var.environment = var.stage

a region = a variable

modules are created by functional pieces/aws products or product groups

ECS task module, ECS cluster module, ALB module, Beanstalk module

and every module takes var.environment, var.region as an argument from a .tfvars file

so no matter what region, the module will just work

if you are doing multi-region, it is IMPERATIVE that you use a var.name that contains the region (this is always thinking from the point of view of using the cloudposse module structure)

otherwise things like IAM roles will collide, because IAM is a global service

use regional S3 state buckets if you are going multi-region
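A minimal sketch of region-aware naming for a global resource, assuming cloudposse-style namespace/stage/name inputs; the example values in the comments are hypothetical. Overloading stage with the region (stage = "stg-ap-southeast-2", as mentioned in this thread) achieves the same thing. Keep an eye on IAM’s 64-character limit for role names once the region is part of the name.

```hcl
variable "namespace" { type = string } # e.g. "eg"
variable "stage" { type = string }     # e.g. "prod"
variable "region" { type = string }    # e.g. "ap-southeast-2"
variable "name" { type = string }      # e.g. "app"

locals {
  # IAM is global, so names must differ per region or a second
  # region's apply collides with the first.
  full_name = join("-", [var.namespace, var.stage, var.region, var.name])
}

data "aws_iam_policy_document" "ecs_assume" {
  statement {
    actions = ["sts:AssumeRole"]
    principals {
      type        = "Service"
      identifiers = ["ecs-tasks.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "task" {
  name               = "${local.full_name}-task" # e.g. eg-prod-ap-southeast-2-app-task
  assume_role_policy = data.aws_iam_policy_document.ecs_assume.json
}
```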

Cheers, I forgot to say we have modules in a separate git repo.

This structure is for within a region, and is done to minimise blast radius when running terraform apply.

The individual files like mq.tf instantiate the mq module plus whatever else it needs.

ok, cool, so similar to what we have

We overload stage with region to achieve that. I guess name would work as well.

we like to have a flat module structure, where there are no subfolders and many TF files floating around

Eg. stage = "stg-ap-southeast-2"

cool

Flat structure is nice for referencing everything like global variables hah.

Have you ever merged two state files? If we wanted to collapse again, that could be an option. Same for splitting out, I guess.

never

I will never try to do that

I think at that point I will prepare to do a destroy and add the resources to the other file and do an apply

Yeah. Pretty gnarly. Redeploy better if possible.

Think I’ll continue splitting environment into multiple directories to limit blast. Symlink around some vars.

Does it make sense to put the whole stack into a module and then use that module in test/uat/prod environments?

It depends

I guess if you are comfortable with having dbs, albs, instances, containers and a bunch of other stuff in one single terraform, it’s fine

you kinda need to think in terms of how your team will interact with that piece of code too

I will still separate deployment from infra but I’ll have db, alb and RDS in one module

Hi guys, quick question. Is it required to create an ECS cluster first before you can deploy an ECS service using the Fargate launch type on that cluster?

what is required ?

ECS cluster I mean

yes

sorry for clumsy grammar

so let say

ecs cluster, service, task, task definition

we provision ecs cluster with terraform

in that order

is there any specific option for ecs cluster ?

as we only plan to use the Fargate option for the ECS service later on

when I look at ECS clusters, they say the ECS cluster has instances

is not on the cluster level

Terraform module which implements an ECS service which exposes a web service via ALB. - cloudposse/terraform-aws-ecs-alb-service-task

we don’t want to create instances and use them as the cluster

Fargate can only run with 16 gb of memory and 10 gb of disk

no EBS mounts

if you need more, then you need to use the EC2 type and manage your instances

ya

but for easy scaling and handling traffic going up and down, is Fargate enough?

depends on your needs, but yes, it is easier

One important thing I need to ask

and also 3x more expensive than the EC2 type

it’s like a slimmed-down version of k8s running pods

is there any way we can use terraform to provision an ECS service without a specific image? Is it mandatory to have the image in ECR first?

no, it’s not

you can deploy a service that does not work

then deploy a new task def and it will work

oh I see

or a service with a correct task def but empty ECR repo

the reason why I ask those question

is because

we’re planning to do a CI/CD step for infra.

the flow is: vpc -> everything including the ecs cluster -> ecs service

do you think that can be achieved?

yes

almost forgot

have you ever had issues updating the ECS service itself?

my issue is that the ECS service name and the container name sometimes make terraform get stuck on reapply as well. Something related to lambda_live_look_up on the old task definition

no , I have not seen that

we do not use tf to deploy a new task def

we use ecs-deploy python script (google it)

ya

we follow that tool too

but you know what is big issue about ecs-deploy ?

it’s based on the container image URL, for ex: 1233452.aws.dk..zzz/test:aaa

so what happen when we want to apply new image: 1233452.aws.dk..zzz/exam1:aaa

it stuck forever.
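A sketch of the vpc -> ecs cluster -> ecs service flow for Fargate discussed above, assuming Terraform 0.12 and hypothetical names and sizes. The cluster is just a name (no instances to manage), the service can start from a placeholder image or point at a still-empty ECR repo, and ignore_changes on task_definition keeps Terraform from reverting revisions that an external tool such as ecs-deploy registers later.

```hcl
variable "subnet_ids" { type = list(string) }
variable "security_group_ids" { type = list(string) }
variable "execution_role_arn" { type = string } # needed for private ECR pulls and logs

variable "image" {
  type = string
  # Placeholder; CI/CD replaces it with the real ECR image later.
  default = "nginx:latest"
}

# Fargate-only cluster: no EC2 capacity to manage.
resource "aws_ecs_cluster" "this" {
  name = "example"
}

resource "aws_ecs_task_definition" "app" {
  family                   = "app"
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc" # required for Fargate
  cpu                      = "256"
  memory                   = "512"
  execution_role_arn       = var.execution_role_arn

  container_definitions = jsonencode([
    {
      name         = "app"
      image        = var.image
      essential    = true
      portMappings = [{ containerPort = 80, protocol = "tcp" }]
    }
  ])
}

resource "aws_ecs_service" "app" {
  name            = "app"
  cluster         = aws_ecs_cluster.this.id
  task_definition = aws_ecs_task_definition.app.arn
  launch_type     = "FARGATE"
  desired_count   = 1

  network_configuration {
    subnets          = var.subnet_ids
    security_groups  = var.security_group_ids
    assign_public_ip = false
  }

  # New revisions come from the deploy tool, not from Terraform.
  lifecycle {
    ignore_changes = [task_definition]
  }
}
```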

2019-11-07

Hi everyone

I have a burning question on terraform <> AWS

resource "aws_kinesis_analytics_application" "app" {

name = var.analytics_app_name

tags = local.tags

// TODO: need to make inputs & outputs dynamic -- cater for cases of multiple columns, e.t.c.

// inputs {

// name_prefix = ""

// "schema" {

// "record_columns" {

// name = ""

// sql_type = ""

// }

// "record_format" {}

// }

// }

// outputs {

// name = ""

// "schema" {}

// }

}

Here’s my quandary - number of columns is not fixed

The issue here is how to accommodate different inputs (having different column types or numbers, etc)

use dynamic blocks https://www.terraform.io/docs/configuration/expressions.html#dynamic-blocks

The Terraform language allows the use of expressions to access data exported by resources and to transform and combine that data to produce other values.

Terraform module to provision Auto Scaling Group and Launch Template on AWS - cloudposse/terraform-aws-ec2-autoscale-group
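A sketch of the dynamic-block approach for a variable number of columns, assuming a Kinesis stream input with JSON records. The stream, role and example columns are hypothetical, and the attribute names follow the AWS provider docs for aws_kinesis_analytics_application, so verify them against your provider version. The outputs block can be generated the same way.

```hcl
variable "analytics_app_name" { type = string }
variable "stream_arn" { type = string } # hypothetical input stream
variable "role_arn" { type = string }   # role Kinesis Analytics assumes to read it

# Any number of columns, supplied per application.
variable "columns" {
  type = list(object({
    name     = string
    sql_type = string
    mapping  = string # JSONPath into each record
  }))
  default = [
    { name = "ticker", sql_type = "VARCHAR(8)", mapping = "$.ticker" },
    { name = "price", sql_type = "DOUBLE", mapping = "$.price" },
  ]
}

resource "aws_kinesis_analytics_application" "app" {
  name = var.analytics_app_name

  inputs {
    name_prefix = "SOURCE_SQL_STREAM"

    kinesis_stream {
      resource_arn = var.stream_arn
      role_arn     = var.role_arn
    }

    schema {
      # One record_columns block per element of var.columns.
      dynamic "record_columns" {
        for_each = var.columns
        content {
          name     = record_columns.value.name
          sql_type = record_columns.value.sql_type
          mapping  = record_columns.value.mapping
        }
      }

      record_format {
        mapping_parameters {
          json {
            record_row_path = "$"
          }
        }
      }
    }
  }
}
```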

hi guys, does the cloudposse ECS cluster module come with a draining Lambda function as well?

what module exactly ?

@Erik Osterman (Cloud Posse) thanks

Terraform Provider for AzureDevOps. Contribute to ellisdon-oss/terraform-provider-azuredevops development by creating an account on GitHub.

@Jake Lundberg (HashiCorp) maybe something for you…

I believe you were saying a lot of your customers were asking about integration of terraform cloud with azure devops

@Julio Tain Sueiras has something for you

@Erik Osterman (Cloud Posse) in this case it is different: A) https://www.hashicorp.com/blog/announcing-azure-devops-support-for-terraform-cloud-and-enterprise/ and B) the one that Jake mentioned is probably TF Cloud hooking into Azure DevOps Repos

Today we’re pleased to announce Azure DevOps Services support for HashiCorp Terraform Cloud and HashiCorp Terraform Enterprise. This support includes the ability to link your Terra…

whereas the provider’s purpose is to manage all components of Azure DevOps with Terraform

when I have a parameter like this:

// Add environment variables.

environment {

variables {

SLACK_URL = "${var.slack_url}"

}

}

How can I parameterize it?

the value of “variables” needs to be some kind of dynamic map

with any number of key/value pairs

When I try:

// Add environment variables.

environment {

variables {

"${var.env_vars}"

}

}

… where var.env_vars is a map … I get an error

key '"${var.env_vars}"' expected start of object ('{') or assignment ('=')

Actually, I think this solved my issue: https://github.com/hashicorp/terraform/issues/10437#issuecomment-263903770

Hi there, I'm trying to create a module which has a map variable as parameter and use it as parameter to create a resource. As map can be dynamic, I want to don't need to specify any of map…
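In Terraform 0.12, variables inside the environment block is an argument that takes a map expression, not a nested block, so a whole map variable can be assigned to it. A sketch, with a hypothetical function name, role, runtime and zip path; merge() adds fixed keys such as SLACK_URL to a caller-supplied map.

```hcl
variable "slack_url" { type = string }
variable "lambda_role_arn" { type = string } # hypothetical

# Any number of extra key/value pairs.
variable "env_vars" {
  type    = map(string)
  default = {}
}

resource "aws_lambda_function" "notifier" {
  function_name = "notifier" # hypothetical
  role          = var.lambda_role_arn
  handler       = "app.handler"
  runtime       = "python3.12"                # hypothetical; use a supported runtime
  filename      = "${path.module}/lambda.zip" # hypothetical build artifact

  environment {
    # An argument (=), so any map-valued expression works here.
    variables = merge(var.env_vars, { SLACK_URL = var.slack_url })
  }
}
```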

I’ve found another non-obvious behavior: when we create two or more node groups (https://github.com/cloudposse/terraform-aws-eks-workers), traffic between pods in different node groups is not allowed due to an SG misconfiguration.

So podA in ng-1 cannot send requests to podB in ng-2


Nice find @vitaly.markov thanks for testing again. It’s not a misconfiguration, we just didn’t think about that case when created the modules


but maybe we can use the additional SGs to connect the two worker groups together https://github.com/cloudposse/terraform-aws-eks-workers/blob/master/variables.tf#L410


by adding the SG from group 1 to the additional SGs of group 2, and vice versa

or we can even place all of the groups into just one SG https://github.com/cloudposse/terraform-aws-eks-workers/blob/master/variables.tf#L398


Mm. I didn’t think about the example. For blue green node pools we created our own security group and put all pools in it and then specified in master. This way there was no chicken egg and reliance on pool module output to construct sg members.

Happy to share this if useful? Would be much nicer with for_each support for modules.

yea that’s a good solution - create a separate SG, put all worker pools into it, and then connect to the master cluster
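A sketch of the shared security group approach, assuming each worker pool can be given one extra security group. The group name is hypothetical, and the rules the control plane needs are left out. The self-referencing rule is what lets pods in different node groups reach each other.

```hcl
variable "vpc_id" { type = string }

# One SG that every worker pool joins, created up front so it exists
# even when a blue/green pool is disabled (no chicken-and-egg on outputs).
resource "aws_security_group" "workers_shared" {
  name        = "eks-workers-shared" # hypothetical
  description = "Shared SG for all EKS worker pools"
  vpc_id      = var.vpc_id
}

# Members of the group may talk to each other on any port and protocol.
resource "aws_security_group_rule" "workers_self" {
  type              = "ingress"
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  self              = true
  security_group_id = aws_security_group.workers_shared.id
}

# Terraform removes AWS's default allow-all egress rule, so add it back.
resource "aws_security_group_rule" "workers_egress" {
  type              = "egress"
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
  security_group_id = aws_security_group.workers_shared.id
}
```

Each worker module then receives this group’s ID through its additional-security-groups input; the exact variable name depends on the module version, so check its variables.tf.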

@kskewes please share, it would be worth adding to the examples

@kskewes I wasted time before I found the root cause; I used to create EKS using eksctl, so I didn’t expect this behavior

I think would be better to add an example to repo

Me too mate. Spent day or so tracking down. When back at computer will post. We can slim it down for repo.

Our eks.tf file, with:

- vpc and subnets from remote state (we tend to use lots of state files to limit blast radius).

- single security group for all nodes so nodes and their pods can talk to each other and no chicken/egg with blue/green/etc. Note we left out a couple rules from module’s SG.

- blue/green worker pools.

- worker pool per AZ (no rebalancing and we need to manage AZ fault domain).

- static AMI selection (deterministic). Note, we are dangerously close to character limits (<64 or 63?) for things like role ARNs, etc.

Workflow is to:

- terraform apply (blue pool)

- …. update locals (ie: green pool ami and enable)

- terraform apply (deploys green pool alongside blue pool)

- … drain.

- …. update locals (ie: blue pool ami and disable)

- terraform apply (deletes blue pool, leaving green only)

Please sing out if you have any questions or suggestions. I’ll open a PR for a custom configmap location soon (not written yet, but we want it).

I think the evolution of this could be even simpler. Always keep blue and green “online”. Only change the properties of blue and green with terraform. Automate the rest entirely using a combination of taints and cluster autoscaler. The cluster autoscaler supports scaling down to 0.

So basically you can taint all the nodes in blue or green and that would trigger a graceful migration of pods to one or the other

Then Kubernetes will take care of scaling down the inactive pool to zero.

```shell
kubectl taint nodes -l flavor=blue dedicated:PreferNoSchedule
```

This would then scale down the blue pool

@oscar you might dig this

That’s a great idea. My colleague has done an internal PR to start using the cluster autoscaler, and we’ll discuss this. We’re new to EKS, so it’s a bit of a lift and shift.

Thanks!

For ref, we do this:

```hcl
module "eks_workers_1" {
  source = "git::https://github.com/cloudposse/terraform-aws-eks-workers.git?ref=tags/0.10.0"

  use_custom_image_id = true
  image_id            = data.aws_ssm_parameter.eks_ami.value

  namespace = var.namespace
  stage     = var.stage
  name      = "1"
  ...
  allowed_security_groups = [module.eks_workers_2.security_group_id, module.eks_workers_3.security_group_id]
  ...
}
```

@oscar I tried to use allowed_security_groups and it broke the whole SG setup. I’ll create an issue later.

I’ve not verified it ‘works’, but it certainly doesn’t ‘break’ on terraform apply

It’s been like this for some time

Thanks, Oscar. The problem for us with per-pool security groups is that if a color is disabled, its security group doesn’t exist and Terraform complains. This way we create a single group up front.

My teammate has done a great job with the cluster autoscaler, so we’ll end up adopting Erik’s suggestion soon. Thanks for that!

Dude check out what was just announced! https://github.com/aws/aws-node-termination-handler

A Kubernetes DaemonSet to gracefully handle EC2 Spot Instance interruptions. - aws/aws-node-termination-handler

This might make things even easier

NOTE:

If a termination notice is received for an instance that’s running on the cluster, the termination handler begins a multi-step cordon and drain process for the node.

it’s not spot specific

Yeah, we want to roll out PDBs (PodDisruptionBudgets) everywhere so we don’t take down our apps by draining too fast.
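If the PDBs end up being managed from Terraform rather than from Helm or kubectl, the Kubernetes provider has a `kubernetes_pod_disruption_budget` resource. Here is a minimal sketch. The name, namespace and `app = "web"` label are hypothetical, and the provider is assumed to be already configured against the cluster.

```hcl
# Keep at most one "web" pod unavailable during voluntary disruptions
# such as a node drain. Names and labels are hypothetical.
resource "kubernetes_pod_disruption_budget" "web" {
  metadata {
    name      = "web-pdb"
    namespace = "default"
  }

  spec {
    # Accepts an absolute number or a percentage, passed as a string.
    max_unavailable = "1"

    selector {
      match_labels = {
        app = "web"
      }
    }
  }
}
```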

Thanks for sharing, though; we’ve been chatting about it in-house since we saw it.

There is a Microsoft effort for a terraform provider for azure devops. Would be good to consolidate efforts https://github.com/microsoft/terraform-provider-azuredevops

Terraform provider for Azure DevOps. Contribute to microsoft/terraform-provider-azuredevops development by creating an account on GitHub.

2019-11-08

So, I’m trying to write an AWS Lambda module that can automatically add DataDog Lambda layers to the caller’s lambda…

I don’t think I can do terraform data lookup on the Lambda layer in DataDog’s account, so I’m trying to write a python script for my own external data lookup

```hcl
data "external" "layers" {
  program = ["python3", "${path.module}/scripts/layers.py"]

  query = {
    runtime = "${var.runtime}"
  }
}
```

But what I’m having an issue with right now is how Python interprets the “query” parameter inside my program. The docs say it’s a JSON object… but depending on how I read it, it seems to come in as a set() or an io.TextIOWrapper.
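For reference, here is the external data source protocol. Terraform writes `query` to the program's stdin as a single JSON object whose values are all strings. It expects the program to print a single JSON object of string values on stdout, which becomes `result`. `sys.stdin` itself is an io.TextIOWrapper, so in Python the query is parsed with `json.load(sys.stdin)`, which returns a plain dict. The sketch below inlines the script through `python3 -c` only to keep the example in one file. The suffix logic is a hypothetical stand-in for the real Datadog lookup, and the syntax assumes Terraform 0.12.

```hcl
variable "runtime" {
  type    = string
  default = "python3.7"
}

locals {
  # Python program run by the external data source (hypothetical lookup logic).
  layers_script = <<-EOT
    import json, sys
    query = json.load(sys.stdin)  # a dict, e.g. {"runtime": "python3.7"}
    suffix = query["runtime"].replace(".", "")
    # Output must be a flat JSON object with string values only.
    print(json.dumps({"arn_suffix": suffix}))
  EOT
}

data "external" "layers" {
  program = ["python3", "-c", local.layers_script]

  query = {
    runtime = var.runtime
  }
}

output "arn_suffix" {
  value = data.external.layers.result["arn_suffix"] # "python37" for the default runtime
}
```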

Did HashiCorp change the font on the docs site?

If you mean the general revamp and adding search then yes

Not seeing any search - am I blind? haha

yeah, the fonts changed too – and I don’t like them!

Oh, I thought something looked different

so, out of familiarity, I liked the old “default” font, but the new one feels quite readable to me.

do you know anyone at HashiCorp to complain to?

I hope the search is better

I can’t find a way to format a map as a string; example below:

input: { purpose = "ci_worker", lifecycle = "Ec2Spot" }

output: "purpose=ci_worker,lifecycle=Ec2Spot"

Any ideas?

yes, i’ve done this before in 0.11 syntax

- use null_resource with count to create a list of key=value pairs

- use join with a splat to aggregate them with ","

Example: https://github.com/cloudposse/reference-architectures/blob/master/modules/export-env/main.tf

Get up and running quickly with one of our reference architecture using our fully automated cold-start process. - cloudposse/reference-architectures

Awesome, thanks a lot


maybe there’s a more elegant way in HCL2
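In HCL2 (Terraform 0.12), a for expression plus join does this without null_resource. This is a minimal sketch; `var.labels` is a hypothetical variable holding the example map. A for expression iterates a map in lexical key order, so the output order differs from the order in the literal.

```hcl
variable "labels" {
  type = map(string)
  default = {
    purpose   = "ci_worker"
    lifecycle = "Ec2Spot"
  }
}

locals {
  # One "key=value" string per entry, then joined with commas.
  labels_string = join(",", [for k, v in var.labels : "${k}=${v}"])
}

output "labels_string" {
  value = local.labels_string # "lifecycle=Ec2Spot,purpose=ci_worker"
}
```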

I think I’m getting bit by a really strange error: https://github.com/hashicorp/terraform/issues/16856

Terraform said that a local list variable (which elements have interpolations) is not a list Terraform Version Terraform v0.11.1 + provider.aws v1.5.0 + provider.template v1.0.0 Terraform Configura…

Yep. No workaround I know of in tf 0.11. Upgrade to tf 0.12


yeah, I’m trying to determine if the user has set “var.enable_datadog” to “true”; If they have then:

layers = [ list(var.layers, local.dd_layer) ]

else:

layers = [ var.layers ]

```hcl
layers = [
  "${var.enable_datadog ? "${local.dd_layer}:${data.external.layers.result["arn_suffix"]}" : var.layers }"
]
```

Error: module.lambda.module.lambda.aws_lambda_function.lambda: layers: should be a list

even when I remove the local, I get the same error:

```hcl
layers = [
  "${var.enable_datadog ? data.external.layers.result["arn_suffix"] : var.layers }"
]
```

so for some reason, data.external along with locals that are not strings seem to be the culprits here
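In Terraform 0.12, both arms of a conditional must have the same type. Wrapping the whole expression in `["${...}"]` nests the result inside another list. Here is a sketch of the intended logic in which both arms produce list(string). `var.dd_layer_arn` is a hypothetical input holding the full Datadog layer ARN, which could be built, for example, from `local.dd_layer` and the external data result.

```hcl
variable "enable_datadog" {
  type    = bool
  default = false
}

variable "layers" {
  type    = list(string)
  default = []
}

variable "dd_layer_arn" {
  type        = string
  description = "Full ARN of the Datadog Lambda layer (hypothetical input)"
  default     = ""
}

locals {
  # Both arms are list(string), so no extra [] around the expression.
  lambda_layers = var.enable_datadog ? concat(var.layers, [var.dd_layer_arn]) : var.layers
}

output "lambda_layers" {
  value = local.lambda_layers
}
```

On the function itself this would then be `layers = local.lambda_layers`.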

Hi guys: I’m using https://github.com/cloudposse/terraform-aws-s3-log-storage and created a bucket that is receiving logs, but I can’t download or copy them, not from the root account or anything.

This module creates an S3 bucket suitable for receiving logs from other AWS services such as S3, CloudFront, and CloudTrail - cloudposse/terraform-aws-s3-log-storage

I tried setting acl = ""


but I got

Error: Error putting S3 ACL: MissingSecurityHeader: Your request was missing a required header

status code: 400, request id: 594BF7171170FCA0, host id: N+nVSWtyZ4S9+XMfo4d57W1LuuyPZWSI3y357Li0hUD46eBFRmEUt9c0z8iGssCE3iVsVbs11BU=

I guess the only thing that can write to it is the flow logs

The policy that TF is applying:

```
    policy = jsonencode(
      {
        Statement = [
          {
            Action    = "s3:PutObject"
            Condition = {
              StringEquals = {
                s3:x-amz-acl = "bucket-owner-full-control"
              }
            }
            Effect    = "Allow"
            Principal = {
              AWS = "arn:aws:iam::127311923021:root"
            }
            Resource  = "arn:aws:s3:::globalaccelerator-logs/*"
            Sid       = "AWSLogDeliveryWrite"
          },
        ]
        Version   = "2012-10-17"
      }
    )
    region        = "us-east-1"
    request_payer = "BucketOwner"
    tags          = {
      "Attributes" = "logs"
      "Name"       = "globalaccelerator-logs"
      "Namespace"  = "hds"
      "Stage"      = "stage"
    }

    lifecycle_rule {
      abort_incomplete_multipart_upload_days = 0
      enabled                                = false
      id                                     = "globalaccelerator-logs"
      tags                                   = {}

      expiration {
        days                         = 90
        expired_object_delete_marker = false
      }

      noncurrent_version_expiration {
        days = 90
      }

      noncurrent_version_transition {
        days          = 30
        storage_class = "GLACIER"
      }

      transition {
        days          = 30
        storage_class = "STANDARD_IA"
      }
      transition {
        days          = 60
        storage_class = "GLACIER"
      }
    }

    server_side_encryption_configuration {
      rule {
        apply_server_side_encryption_by_default {
          sse_algorithm = "aws:kms"
        }
      }
    }

    versioning {
      enabled    = true
      mfa_delete = false
    }
  }
```

the resource part

```
  ~ resource "aws_s3_bucket" "default" {
      - acl                         = "log-delivery-write" -> null
        arn                         = "arn:aws:s3:::globalaccelerator-logs"
        bucket                      = "globalaccelerator-logs"
        bucket_domain_name          = "globalaccelerator-logs.s3.amazonaws.com"
        bucket_regional_domain_name = "globalaccelerator-logs.s3.amazonaws.com"
        force_destroy               = false
        hosted_zone_id              = "Z3AQBSTGFYJSTF"
        id                          = "globalaccelerator-logs"
```

I can’t even modify the ACL

anyone have any ideas ?

@Igor Rodionov

Reading

it is driving me nuts…

ACL:

```json
{
  "Owner": {
    "DisplayName": "pepe-aws",
    "ID": "234234234242334234234222423423422424242434424224243"
  },
  "Grants": [
    {
      "Grantee": {
        "DisplayName": "pepe-aws",
        "ID": "234234234242334234234222423423422424242434424224243",
        "Type": "CanonicalUser"
      },
      "Permission": "FULL_CONTROL"
    },
    {
      "Grantee": {
        "Type": "Group",
        "URI": "http://acs.amazonaws.com/groups/s3/LogDelivery"
      },
      "Permission": "WRITE"
    },
    {
      "Grantee": {
        "Type": "Group",
        "URI": "http://acs.amazonaws.com/groups/s3/LogDelivery"
      },
      "Permission": "READ_ACP"
    }
  ]
}
```

To create the bucket I used:

```hcl
# Policy for S3 bucket for Global Accelerator flow logs
data "aws_iam_policy_document" "default" {
  statement {
    sid = "AWSLogDeliveryWrite"

    principals {
      type = "AWS"
      identifiers = [
        data.aws_elb_service_account.default.arn,
        "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
      ]
    }

    effect = "Allow"
    actions = [
      "s3:PutObject",
      "s3:Get*"
    ]
    resources = [
      "arn:aws:s3:::${module.s3_log_label.id}/*",
    ]

    condition {
      test     = "StringEquals"
      variable = "s3:x-amz-acl"
      values = [
        "bucket-owner-full-control",
      ]
    }
  }
}

# S3 bucket for Global Accelerator flow logs
module "s3_bucket" {
  source                 = "git::https://github.com/cloudposse/terraform-aws-s3-log-storage.git?ref=tags/0.6.0"
  namespace              = var.namespace
  stage                  = var.stage
  name                   = var.name
  delimiter              = var.delimiter
  attributes             = [module.s3_log_label.attributes]
  tags                   = var.tags
  region                 = var.region
  policy                 = data.aws_iam_policy_document.default.json
  versioning_enabled     = "true"
  lifecycle_rule_enabled = "false"
  sse_algorithm          = "aws:kms"
  #acl = ""
}
```

one very weird thing is that the KMS key used to encrypt the objects is unknown to me

maybe since I do not have access to the key, I can’t decrypt the objects

We do not have any account with ID

399586xxxxx

I guess that ID comes from GlobalAccelerator or something like that

@jose.amengual here is an example of using the module in another module https://github.com/cloudposse/terraform-aws-cloudfront-s3-cdn/blob/master/main.tf#L80

Terraform module to easily provision CloudFront CDN backed by an S3 origin - cloudposse/terraform-aws-cloudfront-s3-cdn

usage example https://github.com/cloudposse/terraform-aws-cloudfront-s3-cdn/blob/master/examples/complete/main.tf


maybe ^ will help

what value did you set here https://github.com/cloudposse/terraform-aws-s3-log-storage/blob/master/variables.tf#L115 ?


So after some research :

I never had a problem creating the bucket

but I made one mistake

I used :

sse_algorithm = "aws:kms"

without passing a key

and that somehow chose a kms from who knows where and my objects are all encrypted with a key that I don’t own

that is not the module doing that, that is the API or terraform doing it

my guess is that the objects are encrypted using the origin’s KMS key?

like the AWS log delivery service’s?

I have no clue

I want to test creating buckets this way

by hand and see what happens

the flow logs were successfully written to the bucket, which is why I thought it was weird that it can encrypt but not decrypt the objects

I created another bucket using default AES-256 server-side encryption (SSE-S3, S3-managed keys), and the flow logs were correctly written to the bucket and I was able to download them without any issues, all using the same module but with just the default encryption

so I tried earlier a new bucket same module using sse_algorithm = "aws:kms"

not passing a custom key and it used the default kms key

so I have no clue WTH

the other thing I could try is to use Terraform 0.11 with the old version of the module and try again

maybe it’s a provider bug

I think it could be related to this :

After you enable default encryption for a bucket, the following encryption behavior applies:

There is no change to the encryption of the objects that existed in the bucket before default encryption was enabled.

When you upload objects after enabling default encryption:

If your PUT request headers don't include encryption information, Amazon S3 uses the bucket's default encryption settings to encrypt the objects.

If your PUT request headers include encryption information, Amazon S3 uses the encryption information from the PUT request to encrypt objects before storing them in Amazon S3.

If you use the SSE-KMS option for your default encryption configuration, you are subject to the RPS (requests per second) limits of AWS KMS. For more information about AWS KMS limits and how to request a limit increase, see AWS KMS limits.
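Based on the test above, where default AES256 (SSE-S3) encryption produced downloadable logs, here is a sketch of the bucket encryption block as a plain resource (AWS provider 2.x inline syntax). The bucket name is taken from the plan output. If SSE-KMS is required, the commented lines show pointing it at a customer-managed key you control. That key's policy would presumably also need to allow the log delivery service; this is not verified in this thread.

```hcl
resource "aws_s3_bucket" "flow_logs" {
  bucket = "globalaccelerator-logs"
  acl    = "log-delivery-write"

  versioning {
    enabled = true
  }

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        # SSE-S3: S3-managed keys, readable by anyone granted s3:GetObject.
        sse_algorithm = "AES256"

        # SSE-KMS alternative with a customer-managed key (hypothetical resource):
        # sse_algorithm     = "aws:kms"
        # kms_master_key_id = aws_kms_key.logs.arn
      }
    }
  }
}
```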

2019-11-09

2019-11-10

I’ve opened a PR with a hint to avoid another unexpected behaviour: mismatched kubelet versions between the control plane and node groups https://github.com/cloudposse/terraform-aws-eks-cluster/pull/32

Unfortunately, most_recent variable does not work as expected. Enforce usage of eks_worker_ami_name_filter variable to set the right kubernetes version for EKS workers, otherwise will be used the f…
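One way to keep worker AMIs in step with the control plane is AWS's public SSM parameter for the recommended EKS-optimized AMI, which is keyed by Kubernetes version. This is an alternative to the name filter, not what the PR does. It appears to be what `data.aws_ssm_parameter.eks_ami` in the eks_workers module example earlier uses. The sketch assumes Amazon Linux 2 workers. `var.kubernetes_version` is a hypothetical input that should match the cluster's version.

```hcl
variable "kubernetes_version" {
  type    = string
  default = "1.14"
}

# AWS publishes the recommended EKS-optimized AMI per Kubernetes version in SSM.
data "aws_ssm_parameter" "eks_ami" {
  name = "/aws/service/eks/optimized-ami/${var.kubernetes_version}/amazon-linux-2/recommended/image_id"
}

locals {
  # Feed this into the worker pool, e.g. image_id with use_custom_image_id = true.
  eks_worker_ami = data.aws_ssm_parameter.eks_ami.value
}
```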

I need to stand up AWS Directory Service. I would love to maintain the users and groups as configuration as code with gitops. Anybody done that before?

there’s no aws api for it, i don’t think. you might need to roll your own thing on lambda or ec2 that leverages an ldap library

That was my other thought. To do it with LDAP or some Microsoft tool.

We’ve had some luck with the openldap package and python-ldap

2019-11-11

Static analysis powered security scanner for your terraform code - liamg/tfsec

Hi, is it possible to read locals from a file in Terraform?

Sorta

You can load a json file into a local

Or yaml

The jsondecode function decodes a JSON string into a representation of its value.

The yamldecode function decodes a YAML string into a representation of its value.
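For example, a minimal sketch that loads a YAML file into locals. The file name and its keys are hypothetical, and yamldecode needs a recent 0.12 release. `jsondecode(file(...))` works the same way for JSON.

```hcl
locals {
  # settings.yaml is a hypothetical file next to this module, e.g.:
  #   region: us-east-1
  #   instance_types: [t3.small, t3.medium]
  settings = yamldecode(file("${path.module}/settings.yaml"))

  region         = local.settings.region
  instance_types = local.settings.instance_types
}
```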

:zoom: Join us for “Office Hours” every Wednesday 11:30AM (PST, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is Nov 20, 2019 11:30AM.


#office-hours (our channel)


One of my discomforts with Terraform has been the paucity of testing and verification… It looks like HashiCorp got the memo; this just in from Berlin: https://speakerdeck.com/joatmon08/test-driven-development-tdd-for-infrastructure

Originally presented at 2019 O’Reilly Velocity (Berlin). In software development, test-driven development (TDD) is the process of writing tests and then developing functionality to pass the tests. Let’s explore methods of adapting and applying TDD to configuring and deploying infrastructure-as-code. Repository here: https://github.com/joatmon08/tdd-infrastructure

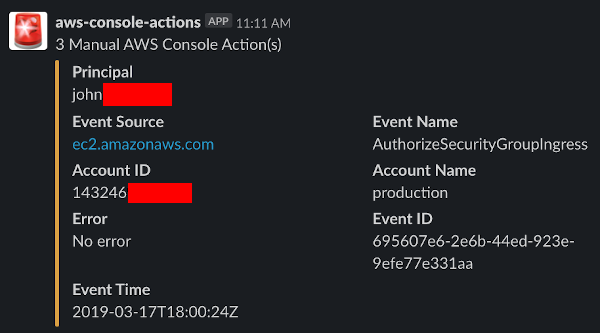

Yeah, using conftest to validate outputs from terraform plan is slick.


@kskewes are you using conftest at work?

2019-11-12

Any pointers on best practices for creating multiple AWS console IAM users with Terraform?
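Nobody answered this in the thread, so here is one common pattern, sketched under several assumptions:

- users come from a set, using resource for_each (Terraform 0.12.6+);
- console passwords come from aws_iam_user_login_profile, which requires a PGP key and returns only the encrypted password;
- permissions are attached to a group rather than to individual users.

The user names, group name and attached managed policy are hypothetical choices.

```hcl
variable "console_users" {
  type    = set(string)
  default = ["alice", "bob"] # hypothetical user names
}

variable "pgp_key" {
  type        = string
  description = "\"keybase:<username>\" or a base64-encoded PGP public key"
}

resource "aws_iam_user" "console" {
  for_each      = var.console_users
  name          = each.value
  force_destroy = true
}

# Initial console password, encrypted with the PGP key; must be changed at first login.
resource "aws_iam_user_login_profile" "console" {
  for_each                = aws_iam_user.console
  user                    = each.value.name
  pgp_key                 = var.pgp_key
  password_reset_required = true
}

# Grant permissions through a group instead of per-user policies.
resource "aws_iam_group" "console" {
  name = "console-users"
}

resource "aws_iam_group_membership" "console" {
  name  = "console-users-membership"
  group = aws_iam_group.console.name
  users = [for u in aws_iam_user.console : u.name]
}

resource "aws_iam_group_policy_attachment" "read_only" {
  group      = aws_iam_group.console.name
  policy_arn = "arn:aws:iam::aws:policy/ReadOnlyAccess"
}

output "encrypted_passwords" {
  value = { for name, p in aws_iam_user_login_profile.console : name => p.encrypted_password }
}
```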

We as a team are evaluating Terraform Enterprise vs. open-source Terraform. Does anyone see added value in the Enterprise version?

are you talking about TF Cloud or TF Enterprise (on-prem installation)? https://www.terraform.io/docs/cloud/index.html#note-about-product-names

Terraform Cloud is an application that helps teams use Terraform to provision infrastructure.

TF Cloud, to start with. That’s what HashiCorp is pushing lately.

we see these features in TF Cloud that are very useful:

- Automatic state management - you don’t have to setup S3 bucket and DynamoDB for TF state backend

- atlantis-like functionality included (run plan on pull request) - you don’t have to provision atlantis if you want GitOps

- Security and access control management - you create teams and assign permissions to the teams (which can run plan, apply, destroy, etc.), and then add users to the teams

and you can use workspaces

and they are the recommended way to use Cloud

not that you can’t use it in OSS but they are not recommended for multi-team repos etc

We have an official demo on Friday.

The bigger issue I have with TF Cloud is it requires me to give them Access Keys with essentially admin access. Which means I need to trust them to keep those keys secure… Whereas with Atlantis I can run it in an account and the security of it is my responsibility.

Thanks for the inputs @Andriy Knysh (Cloud Posse) @jose.amengual, appreciate that! I am using terragrunt for S3 state management, with terragrunt plan/apply tied into Jenkins, but security and access management is only done through IAM roles. I look forward to the demo on Friday to see more of TF Cloud.

If you don’t trust them, then you buy TF Enterprise, which is self-hosted.

Yes giving them the access keys is the biggest concern

All the rest is ok

Not sure if buying tf enterprise is worth it

You have to pay for it plus for its hosting

exactly

In that case, might be better to use open source terraform and atlantis

For cost and security reasons

yes

or use a self-hosted GitHub Actions runner

if you use github

I guess we just have to wait for the news that tf cloud was hacked and access keys compromised :)

Internal players are the biggest concern

that is a good point

Hello Everyone. I’m having an issue with ACM and I’m hoping someone knows why this is happening or how to troubleshoot further. I’m creating an LB config with an HTTPS listener and with the following module configuration:

```hcl
module "cert" {
  source = "git::https://github.com/cloudposse/terraform-aws-acm-request-certificate.git?ref=0.4.0"

  zone_name                         = local.zone
  domain_name                       = local.origin_dns
  subject_alternative_names         = local.origin_san
  ttl                               = "300"
  process_domain_validation_options = true
  wait_for_certificate_issued       = true
}
```

Environment:

- Terraform: v0.12.13

- local.zone is: nonprod.mydomain.com

- local.origin_dns is: origin.nonprod.mydomain.com

- local.origin_san is: ["main.dev.mydomain.com", "origin.dev.mydomain.com", "main.qa.mydomain.com", "origin.qa.mydomain.com", "main.uat.mydomain.com", "origin.uat.mydomain.com"]

Issue 1: First run: everything is created when I look in the portal, but the TF output says:

Error: 6 errors occurred:

* missing main.dev.mydomain.com DNS validation record: _654c27fb72c292a9967470a6a4c10216.main.dev.mydomain.com

* missing origin.qa.mydomain.com DNS validation record: _88c3a0c95150482d39451905c3703883.origin.qa.mydomain.com

* missing main.uat.mydomain.com DNS validation record: _f5a5dd6ef183a5104c5e65cc301a27d0.main.uat.mydomain.com

* missing origin.uat.mydomain.com DNS validation record: _2527e37bcae7b44a3d28db696f258b84.origin.uat.mydomain.com

* missing main.qa.mydomain.com DNS validation record: _c1f13019befd352bcee1787e92f43a0b.main.qa.mydomain.com

* missing origin.dev.mydomain.com DNS validation record: _b2eda108e906975e5775231a8b92dcbf.origin.dev.mydomain.com

Issue 2: Second+ run: I am getting a Cycle error and all of the records:

Error: Cycle: module.cert.aws_route53_record.default (destroy deposed 0aea8e5c), module.cert.aws_route53_record.default (destroy deposed 0eee4568), module.cert.aws_route53_record.default (destroy deposed 8317334a), module.cert.aws_route53_record.default (destroy deposed cfd8b435), module.cert.aws_route53_record.default (destroy deposed 8741e753), module.cert.aws_route53_record.default (destroy deposed 44a3ff11), module.cert.aws_acm_certificate.default (destroy deposed 312e6bad), aws_lb_listener.front_end_https, module.cert.aws_route53_record.default (destroy deposed bad2ac93)

anyone have any ideas? thanks in advance!

Hrmmmm! so looks like you’re using the HCL2 version of the module

that one i know fixed a bunch of problems we had with tf-0.11

yeah, i’ve tried many adjustments to it, and all roads lead back to the above “errors”

I haven’t seen this one before…

have you tried (for debugging) to reduce the configuration to the smallest working one?

then go up from there?

resources are created on run 1, but it errors out, Cycle error with destroy deposed from the lifecycle on the cert

yeah, i’ve done a debug TF with just the module and variables involved.. no joy

i’m going to see if i can increase the create timeout on validation by adding this to the aws_acm_certificate_validation resource:

```hcl
timeouts {
  create = "60m"
}
```

looks like that’s an option, not sure if it’ll work.. i already had to increase retries and decrease parallelism.. this thing makes a slew of route53 API calls

moment of truth…

getting all the “Creation complete after 42s” and whatnot… waiting for the error at the end.

no joy:

Error: 6 errors occurred:

* missing origin.uat.mydomain.com DNS validation record: _2527e37bcae7b44a3d28db696f258b84.origin.uat.mydomain.com

* missing main.qa.mydomain.com DNS validation record: _c1f13019befd352bcee1787e92f43a0b.main.qa.mydomain.com

* missing origin.dev.mydomain.com DNS validation record: _b2eda108e906975e5775231a8b92dcbf.origin.dev.mydomain.com

* missing main.uat.mydomain.com DNS validation record: _f5a5dd6ef183a5104c5e65cc301a27d0.main.uat.mydomain.com

* missing origin.qa.mydomain.com DNS validation record: _88c3a0c95150482d39451905c3703883.origin.qa.mydomain.com

* missing main.dev.mydomain.com DNS validation record: _654c27fb72c292a9967470a6a4c10216.main.dev.mydomain.com

all the records were successfully created, tho

@John H Patton did you look at the example https://github.com/cloudposse/terraform-aws-acm-request-certificate/blob/master/examples/complete

Terraform module to request an ACM certificate for a domain name and create a CNAME record in the DNS zone to complete certificate validation - cloudposse/terraform-aws-acm-request-certificate

it gets provisioned on AWS and tested with terratest https://github.com/cloudposse/terraform-aws-acm-request-certificate/blob/master/test/src/examples_complete_test.go


yeah, i have looked at so much terraform all over the place, those examples included… i’ll revisit that, however… perhaps i’ve missed something

yeah, i have… the equivalent for me would be the following tfvars:

```hcl
region                            = "us-east-2"
namespace                         = "na"
stage                             = "nonprod"
name                              = "nonprod-zone"
parent_zone_name                  = "nonprod.mydomain.com"
validation_method                 = "DNS"
ttl                               = "300"
process_domain_validation_options = true
wait_for_certificate_issued       = true
```

mydomain is a placeholder, of course

and my san is different

let me try using wildcards… haven’t tried that yet

oh, so the module works only for star subdomains in SAN

domain.com and *.domain.com will have exactly the same DNS validation records generated by TF

a non-wildcard subdomain will have its own, different DNS validation record

the module does not create multiple DNS validation records

because of the many different issues we had with that before

domain.com and *.domain.com will have exactly the same DNS validation records generated - this is how AWS works

so, how would I create a cert with the following:

- CN: origin.nonprod.mydomain.com

- SAN: origin.dev.mydomain.com, origin.qa.mydomain.com, origin.uat.mydomain.com

i mean, i see the validation records being created correctly, and in the portal the cert is in the issued state

but, i can’t seem to use the cert

as for the many DNS records… yeah, i’ve run into that issue already… seems to be a rate limit on route53 that causes issues, i needed to reduce the parallelism to 2

i think i’m going to need to create the cert separate from the rest of this infra

multi-level subdomains are not supported in a wild-card cert

you have to do something like https://www.sslshopper.com/article-ssl-certificates-for-multi-level-subdomains.html

if you use the module, you’ll have to request many wildcard certificates, one for each subdomain DNS zone

well, i’m not sure how to make the hardcoded domains in the SAN work right… it’s driving me nuts.. been fighting this for over a week

I figured it out, finally! This is an array of an array:

```hcl
distinct_domain_names = distinct(concat([var.domain_name], [for s in var.subject_alternative_names : replace(s, "*.", "")]))
```

It outputs:

```
distinct_domain_names = [
  [
    "main.nonprod.mydomain.com",
    "origin.nonprod.mydomain.com",
  ],
]
```

This needed to be flattened, then I can work with it in other variables/data sources correctly

```hcl
distinct_domain_names = flatten(distinct(concat([var.domain_name], [for s in var.subject_alternative_names : replace(s, "*.", "")])))
```
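A small refinement, offered as a suggestion. Typing the inputs rejects an accidentally nested argument at plan time. Running distinct after flatten, rather than before, removes duplicates that only appear once nested lists are merged. Here is a sketch; the defaults are the placeholder names from this thread.

```hcl
variable "domain_name" {
  type    = string
  default = "origin.nonprod.mydomain.com"
}

variable "subject_alternative_names" {
  type    = list(string)
  default = ["*.origin.nonprod.mydomain.com", "main.nonprod.mydomain.com"]
}

locals {
  # Strip "*." so apex and wildcard map to the same name, flatten, then dedupe.
  distinct_domain_names = distinct(flatten(concat(
    [var.domain_name],
    [for s in var.subject_alternative_names : replace(s, "*.", "")]
  )))
}

output "distinct_domain_names" {
  # ["origin.nonprod.mydomain.com", "main.nonprod.mydomain.com"]
  value = local.distinct_domain_names
}
```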

that took entirely way too long to figure out

hello there

can anyone tell me how to use https://www.terraform.io/docs/providers/aws/d/autoscaling_groups.html to select an ASG based on its tags?

Provides a list of Autoscaling Groups within a specific region.

Specifically, I’m confused on the filters:

```hcl
filter {
  name   = "key"
  values = ["Team"]
}

filter {
  name   = "value"
  values = ["Pets"]
}
```

so, what I’m thinking is:

```hcl
filter {
  name   = "key"
  values = ["tag:Name"]
}

filter {
  name   = "value"
  values = ["my-name-tag-value"]
}
```

I don’t think so

```hcl
filter {
  name   = "tag:Name"
  values = ["hello"]
}

filter {
  values = ["value"]
  name   = "tag:my-name-tag-value"
}
```

there

so I need two filter blocks?

no, that depends on your tags

if with one tag you can find what you need

then you just need only one

So, how do I fetch or specify the value of the “Name” tag?

* data.aws_autoscaling_groups.default: data.aws_autoscaling_groups.default: Error fetching Autoscaling Groups: ValidationError: Filter type tag:Name is not correct. Allowed Filter types are: auto-scaling-group key value propagate-at-launch

Like so:

```hcl
filter {
  name   = "tag:Name"
  values = ["thevalue"]
}
```


name - (Required) The name of the filter. The valid values are: auto-scaling-group, key, value, and propagate-at-launch.

You can’t use name = "tag:Name"

the tag Name has a capital N

by the way

Error: Error refreshing state: 1 error occurred:

* data.aws_autoscaling_groups.default: 1 error occurred:

* data.aws_autoscaling_groups.default: data.aws_autoscaling_groups.default: Error fetching Autoscaling Groups: ValidationError: Filter type tag:Name is not correct. Allowed Filter types are: auto-scaling-group key value propagate-at-launch

ah, i see.. this is a slightly different mechanism.. one moment…

does it have a Name tag?

some resources allow for tag{} lookup filters

```hcl
filter {
  name   = "key"
  values = ["tag:Name"]
}

filter {
  name   = "value"
  values = ["thevalue"]
}
```

not all

even when I use this as a filter, I get nothing returned:

```hcl
filter {
  name   = "key"
  values = ["tag:Name"]
}

filter {
  name   = "value"
  values = ["*"]
}
```

ah-hah.. here’s the thing… ASGs have funky tags:

```hcl
tag {
  key                 = "Name"
  value               = "somename"
  propagate_at_launch = true
}
```

that’s what you’re matching against

if the tag:Name doesn’t work for the key, try:

```hcl
filter {
  name   = "key"
  values = ["Name"]
}

filter {
  name   = "value"
  values = ["thevalue"]
}
```

```hcl
data "aws_autoscaling_groups" "default" {
  filter {
    name   = "key"
    values = ["Name"]
  }

  filter {
    name   = "value"
    values = ["*"]
  }

  filter {
    name   = "propagate-at-launch"
    values = ["true"]
  }
}
```

can anyone test this on their own ASGs?

I’m getting nothing back on all these variations

what version of TF are you using? what version of the aws provider?

I found this from back in 2017: https://github.com/terraform-providers/terraform-provider-aws/issues/1909

Feature Request Add arns as an output in addition to names in https://www.terraform.io/docs/providers/aws/d/autoscaling_groups.html Example usage: data "aws_autoscaling_groups" "beta…

TF 0.11

AWS provider ~> 1.0

that might have been it

I’ll try with latest AWS provider now

yeah, I’m using aws ~> 2.35

give it a shot

Alright, I’m on 2.35 now

but im still not getting anything returned from the data lookup

are you sure you are running against the right AWS account and region?

When using aws_autoscaling_group as a data source, I expect to be able to filter autoscaling groups similar to how I can filter other resource (i.e. aws_ami). Terraform Version Any Affected Resourc…

account and region are fine, my state is finding everything else

this is also my first time using this data lookup

I got it to work, using only one filter
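Here is a sketch of a tag lookup that stays within the filter names the data source accepts (auto-scaling-group, key, value, propagate-at-launch), for anyone landing here later. It assumes the underlying DescribeTags call ANDs multiple filters and matches values literally. That would explain why `tag:Name` was rejected and why `values = ["*"]` returned nothing. The tag value is a placeholder, and the thread doesn't show which single filter ended up working.

```hcl
data "aws_autoscaling_groups" "by_name_tag" {
  # Tag key, as written in the ASG's tag { key = "Name" ... } block.
  filter {
    name   = "key"
    values = ["Name"]
  }

  # Tag value to match (placeholder).
  filter {
    name   = "value"
    values = ["my-name-tag-value"]
  }
}

output "asg_names" {
  value = data.aws_autoscaling_groups.by_name_tag.names
}
```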

nice!!!

congrats

thanks for taking a look

does that seem right?

2019-11-13

Good morning, has anyone run into this?

```
Initializing provider plugins...
- Checking for available provider plugins...

Error verifying checksum for provider "okta"
```

Terraform v0.12.13 Linux somevm0 4.15.0-66-generic #75-Ubuntu SMP Tue Oct 1 0509 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

Hrmmmm no haven’t seen that one.

maybe blow away your .terraform cache directory and try again?

Thanks… I blew both cache dirs away ~/.terraform.d and .terraform Same result.

Adding debug to the terraform Run I see this:

```
2019/11/13 14:23:05 [TRACE] HTTP client GET request to https://releases.hashicorp.com/terraform-provider-okta/3.0.0/terraform-provider-okta_3.0.0_SHA256SUMS
2019/11/13 14:23:05 [ERROR] error fetching checksums from "https://releases.hashicorp.com/terraform-provider-okta/3.0.0/terraform-provider-okta_3.0.0_SHA256SUMS": 403 Forbidden
```

possibly an upstream problem?

maybe try pinning to an earlier release of the provider

version = "~> 1.0.0"

```
No provider "okta" plugins meet the constraint "~> 1.0.0".

The version constraint is derived from the "version" argument within the
provider "okta" block in configuration. Child modules may also apply
provider version constraints. To view the provider versions requested by each
module in the current configuration, run "terraform providers".

To proceed, the version constraints for this provider must be relaxed by
either adjusting or removing the "version" argument in the provider blocks
throughout the configuration.

Error: no suitable version is available
```

perhaps I need to harass hashicorp

https://releases.hashicorp.com/ This shows no sign of okta.

https://github.com/terraform-providers/terraform-provider-okta/issues/4

So I cloned the repo and built it and installed locally and got it working.

Should articulate/terraform-provider-okta be the canonical version? I would propose either removing this or working with the articulate folks to give them access to this repository. Thanks.

mismatch between ~/.terraform.d/plugins/[okta_provider_file] and the terraform state checksum of this file?

I have some mangled state locally and am moving it to TF Cloud so manual state management…yayyyy.

```
-/+ module.eks_cluster.aws_eks_cluster.default (new resource required)
      id:  "mycluster" => <computed> (forces new resource)
      arn: "arn:aws:eks:us-west-2:xxxxxxx:cluster/mycluster" => <computed>
```

^ This is a concern. Normally I wouldn’t sweat a new resource, but if this destroys the EKS cluster, there goes my week restoring everything. So my question is: will this really delete the resource if the id is exactly the same as the live instance?

This is using https://github.com/cloudposse/terraform-aws-eks-cluster.git?ref=0.4.0 as the module source.

Yes, whatever is causing your new ID to be generated will force a new cluster.

I don’t think there’s a way to avoid that

fwiw, @Andriy Knysh (Cloud Posse) is working on tf cloud support in our EKS modules

we have some PRs for this open now that will get merged by EOD

what Update provisioner "local-exec": Optionally install external packages (AWS CLI and kubectl) if the workstation that runs terraform plan/apply does not have them installed Optionally…

I pretty much knew the answer, but had to sanity check. The thing is… the id shouldn’t be new. It should be exactly the same.

well lookie there…kctl is a great addition. we do that separately right now

Hi there! We’re using this module for EKS https://github.com/cloudposse/terraform-aws-eks-cluster, and currently trying to setup the mapping for IAM roles to k8s service accounts. According to https://docs.aws.amazon.com/eks/latest/userguide/enable-iam-roles-for-service-accounts.html, we need to grab the OIDC issuer URL from the EKS cluster and add it to the aws_iam_openid_connect_provider resource.

Has anyone come across this? Unfortunately we can’t evaluate the eks_cluster_id output variable from the EKS cluster module into a data lookup for the aws_eks_cluster data source. Perhaps we add an output to the EKS module for the identity attribute of aws_eks_cluster? Happy to do a PR for it.

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

The IAM roles for service accounts feature is available on new Amazon EKS Kubernetes version 1.14 clusters, and clusters that were updated to versions 1.14 or 1.13 on or after September 3rd, 2019. Existing clusters can update to version 1.13 or 1.14 to take advantage of this feature. For more information, see

if you’re using .NET on EKS beware of this: https://github.com/aws/aws-sdk-net/issues/1413

I'm trying to get a .NET Core app to work with EKS new support for IAM for Service Accounts. I've followed these instructions . This app is reading from an SQS queue and was working previou…

also make sure you add a thumbprint to the openid connect provider:

resource "aws_iam_openid_connect_provider" "this" {
  client_id_list = ["sts.amazonaws.com"]
  thumbprint_list = [
    "9e99a48a9960b14926bb7f3b02e22da2b0ab7280" # https://github.com/terraform-providers/terraform-provider-aws/issues/10104#issuecomment-545264374
  ]
  url = "${aws_eks_cluster.this.identity.0.oidc.0.issuer}"
}

these are the things that I ran into over the last week dealing with IAM for service accounts
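Once the OIDC provider exists, a role is mapped to a service account through the role's trust policy. Below is a minimal sketch. It assumes the aws_iam_openid_connect_provider.this resource from the snippet above and a hypothetical my-app service account in the default namespace. The role name is also hypothetical.

```hcl
# Trust policy letting one Kubernetes service account assume this role via the cluster's OIDC provider
data "aws_iam_policy_document" "irsa_assume_role" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [aws_iam_openid_connect_provider.this.arn]
    }

    condition {
      test = "StringEquals"
      # Condition key is the issuer host/path (no https:// scheme) followed by ":sub"
      variable = "${replace(aws_iam_openid_connect_provider.this.url, "https://", "")}:sub"
      # Hypothetical namespace "default" and service account "my-app"
      values = ["system:serviceaccount:default:my-app"]
    }
  }
}

resource "aws_iam_role" "my_app" {
  name               = "my-app-irsa" # hypothetical role name
  assume_role_policy = data.aws_iam_policy_document.irsa_assume_role.json
}
```

The service account then needs the eks.amazonaws.com/role-arn annotation set to this role's ARN. Pods that use the service account can then pick up web identity credentials.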

Thanks for the heads up! Luckily no .net, but could be similar risks with some of the other pods we’re running

Unfortunately we can’t do something similar to "${aws_eks_cluster.this.identity.0.oidc.0.issuer}" to get the oidc issuer URL as we’re using this module, which doesn’t have this as an output https://github.com/cloudposse/terraform-aws-eks-cluster

is the cluster id output the cluster name?

we don’t use the module unfortunately so i don’t know

right - you said it wasn’t up above

I’ve had some success using the aws_arn data source to parse names out of ARNs:

Parses an ARN into its constituent parts.

but ideally it would be an output from the eks module
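Here is a minimal sketch of that ARN-parsing approach. It assumes the module exposes the cluster ARN as an output named eks_cluster_arn; check the module's outputs.tf, since that name is an assumption. For EKS, the resource part of the ARN looks like cluster/mycluster, so the name is the second path segment.

```hcl
# Parse the cluster ARN (assumed module output name: eks_cluster_arn)
data "aws_arn" "eks_cluster" {
  arn = module.eks_cluster.eks_cluster_arn
}

locals {
  # e.g. arn:aws:eks:us-west-2:111111111111:cluster/mycluster -> resource = "cluster/mycluster"
  eks_cluster_name = element(split("/", data.aws_arn.eks_cluster.resource), 1)
}
```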

Yea, one of the outputs of the module is the EKS cluster name. With this we could use the aws_eks_cluster datasource like you’ve done above… however, it doesn’t seem like we can do an indirect variable reference like with eval in bash.

Ideally would want to be able to have something like "${data.aws_eks_cluster.${module.eks_cluster_01.eks_cluster_id}.identity.0.oidc.0.issuer}"

Thanks for the suggestions though @Chris Fowles! Good to have warnings that the IAM Roles to svc accounts isn’t the magic bullet we were expecting it to be

Anyone have a good article/blog/post/etc. on directory structures for more complex architectures? I’ve gone through a few blogs and threads on reddit, but haven’t quite understood how to break mine down to be as simple as theirs. I have a lot of variables & modules compared to others. Maybe part of it is on me. For my PoC (proof of concept, but PoS might be more like it) I’m declaring quite a few variables, env vars for example, and making quite a few module references. If I want to use the same architecture for all of my environments, it’s all fine and dandy because I can just have another tfvars file, but that doesn’t seem like a great idea. Thanks for the feedback.

I know there’s also #terragrunt and #geodesic but I’m not at that point yet. Just trying to build a solid foundation in terraform for right now.

This is one way. https://charity.wtf/2016/03/30/terraform-vpc-and-why-you-want-a-tfstate-file-per-env/ We do something similar but split an env into multiple directories: VPC, EKS, services, etc.

How to blow up your entire infrastructure with this one great trick! Or, how you can isolate the blast radius of terraform explosions by using a separate state file per environment.
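Here is a minimal sketch of the per-env, per-component layout described above, assuming an S3 backend. The bucket, keys, region, directory names, and the module "vpc" inside dev/vpc are all hypothetical. Each directory keeps its own state file. Downstream components read upstream outputs through terraform_remote_state rather than sharing one big state.

```hcl
# dev/vpc/main.tf: own state file for the VPC component of the dev env
terraform {
  backend "s3" {
    bucket = "example-terraform-state" # hypothetical bucket
    key    = "dev/vpc/terraform.tfstate"
    region = "us-west-2"
  }
}

# Assumes a module "vpc" is declared in this directory
output "vpc_id" {
  value = module.vpc.vpc_id
}

# dev/eks/main.tf: reads the VPC component's outputs from its separate state
data "terraform_remote_state" "vpc" {
  backend = "s3"
  config = {
    bucket = "example-terraform-state"
    key    = "dev/vpc/terraform.tfstate"
    region = "us-west-2"
  }
}

locals {
  vpc_id = data.terraform_remote_state.vpc.outputs.vpc_id
}
```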

Have you read Terraform: Up and Running 2nd Edition? It’s pretty much the book on how to organize your Terraform code.

Not yet but it’s on my to do list. I’ll move it higher up on the priority list

@email PRs are welcome

A wild PR appeared! https://github.com/cloudposse/terraform-aws-eks-cluster/pull/34

Add the OIDC Provider output to the module, to enable module consumers to link IAM roles to service accounts, as described here. Note, updating the readme seemed to fail on MacOS, so not updated yet.

it should be similar to https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/outputs.tf#L36


and calculated similar to https://github.com/cloudposse/terraform-aws-eks-cluster/blob/master/auth.tf#L33

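Here is a sketch of what such an output could look like. It assumes two things:
- The module's cluster resource is aws_eks_cluster.default, created with count. The plan output earlier in the channel shows that resource address.
- The AWS provider version in use already exports the identity attribute.

The output name eks_cluster_identity_oidc_issuer is hypothetical, and the PR may name it differently.

```hcl
# outputs.tf (sketch): expose the cluster's OIDC issuer URL
output "eks_cluster_identity_oidc_issuer" {
  description = "OIDC identity issuer URL of the EKS cluster"
  # Full splat gives an empty list when count = 0, so join() returns "" instead of erroring
  value = join("", aws_eks_cluster.default[*].identity[0].oidc[0].issuer)
}
```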

not sure I understand this

Yea, one of the outputs of the module is the EKS cluster name. With this we _could_ use with the `aws_eks_cluster` datasource like you've done above… however doesn't seem like we can do an indirect variable reference like with `eval` in bash

since the module outputs cluster name, you can use https://www.terraform.io/docs/providers/aws/d/eks_cluster.html to read all other attributes

Retrieve information about an EKS Cluster

Correct me if I’m wrong, but I think going down that path, we’d have to have something like this right? "${data.aws_eks_cluster.${module.eks_cluster_01.eks_cluster_id}.identity.0.oidc.0.issuer}"

I.e. evaluate the cluster name, and then use the cluster name as part of the data lookup against aws_eks_cluster?

the example here https://www.terraform.io/docs/providers/aws/d/eks_cluster.html shows exactly what you need to do

module "eks_cluster" {
  source                       = "../../"
  namespace                    = var.namespace
  stage                        = var.stage
  name                         = var.name
  attributes                   = var.attributes
  tags                         = var.tags
  region                       = var.region
  vpc_id                       = module.vpc.vpc_id
  subnet_ids                   = module.subnets.public_subnet_ids
  kubernetes_version           = var.kubernetes_version
  kubeconfig_path              = var.kubeconfig_path
  local_exec_interpreter       = var.local_exec_interpreter
  configmap_auth_template_file = var.configmap_auth_template_file
  configmap_auth_file          = var.configmap_auth_file
  workers_role_arns            = [module.eks_workers.workers_role_arn]
  workers_security_group_ids   = [module.eks_workers.security_group_id]
}

data "aws_eks_cluster" "example" {
  name = module.eks_cluster.eks_cluster_id
}

locals {
  oidc_issuer = data.aws_eks_cluster.example.identity.0.oidc.0.issuer
}

Of course! Didn’t think to create the data source first and then get the value from that. This is exactly what we need.

Need more coffee…

Thanks heaps for the help @Andriy Knysh (Cloud Posse)
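To close the loop on the original goal, here is a minimal sketch that feeds local.oidc_issuer from the example above into an aws_iam_openid_connect_provider. The resource name eks is hypothetical. The thumbprint is the one shared earlier in this thread; verify it for your region rather than taking it as given.

```hcl
# OIDC provider built from the issuer URL read via the aws_eks_cluster data source
resource "aws_iam_openid_connect_provider" "eks" {
  url            = local.oidc_issuer
  client_id_list = ["sts.amazonaws.com"]
  # Thumbprint copied from earlier in this thread; verify before relying on it
  thumbprint_list = ["9e99a48a9960b14926bb7f3b02e22da2b0ab7280"]
}
```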

2019-11-14

any ECS users here? we’re considering a migration into ECS (from vanilla docker on EC2) but I couldn’t find much in the way of existing ECS modules in the module directory

does it play well with Terraform?

yep

I’m using it for managing our task definitions and services

and is pretty simple and straightforward
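For anyone weighing the same move, here is a minimal sketch of a task definition plus service managed with plain Terraform 0.12 resources, not the cloudposse module. It assumes Fargate, which comes up further down. Every name, image, and CPU/memory size is a hypothetical placeholder, as are the subnet_ids and security_group_id variables.

```hcl
variable "subnet_ids" {
  type = list(string) # hypothetical: subnets for the tasks
}

variable "security_group_id" {
  type = string # hypothetical: security group for the tasks
}

resource "aws_ecs_cluster" "this" {
  name = "example" # hypothetical cluster name
}

resource "aws_ecs_task_definition" "app" {
  family                   = "app"
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc" # required for Fargate
  cpu                      = "256"
  memory                   = "512"

  container_definitions = jsonencode([
    {
      name         = "app"
      image        = "nginx:1.17" # placeholder public image
      essential    = true
      portMappings = [{ containerPort = 80 }]
    }
  ])
}

resource "aws_ecs_service" "app" {
  name            = "app"
  cluster         = aws_ecs_cluster.this.id
  task_definition = aws_ecs_task_definition.app.arn
  desired_count   = 2
  launch_type     = "FARGATE"

  network_configuration {
    subnets         = var.subnet_ids
    security_groups = [var.security_group_id]
  }
}
```

Pulling from ECR or shipping logs to CloudWatch would also need an execution_role_arn on the task definition. Private subnets need a NAT gateway to pull public images.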

Great to hear, thanks

https://github.com/cloudposse/terraform-aws-ecs-web-app using it right now. Super simple to set up

Terraform module that implements a web app on ECS and supports autoscaling, CI/CD, monitoring, ALB integration, and much more. - cloudposse/terraform-aws-ecs-web-app

Nice!


working example here https://github.com/cloudposse/terraform-aws-ecs-web-app/tree/master/examples/complete


Terratest for the example (it provisions the example on real AWS account) https://github.com/cloudposse/terraform-aws-ecs-web-app/blob/master/test/src/examples_complete_test.go


We use ECS for 90% of our apps (Fargate specifically) and it works great.

Hi, sometimes when Terraform has to destroy and recreate an ECS service I get the following error: InvalidParameterException: Unable to Start a service that is still Draining. What causes it and how can I fix that?

Is there a way to show the output of terraform plan from a plan file? In 0.11 you just did terraform show but in 0.12 I’m just getting resource names without changes

maybe terraform apply -auto-approve=false $PLANFILE < /dev/null

I’m trying to create a custom domain with API Gateway, but I am using count to create two types of R53 records. I’m using count for api_gateway as a conditional, which I would also like to use for the R53 records: