#terraform (2020-05)

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2020-05-01

Anyone take the HashiCorp Certified Terraform Associate exam yet?

It may have been asked before, but I’m new to checking out these awesome modules.

I have an environment/account already set up (out of my control), i.e. VPC, IGW, subnets, etc. are already created.

Is it possible to use a module like terraform-aws-elastic-beanstalk-environment and not create all the extras, or would I have to import everything?

no need to create those resources again

you can do Data lookups

based on tags
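A minimal sketch of such a tag-based lookup, assuming the existing VPC carries a unique `Name` tag and its private subnets a `Tier` tag (both tag keys and values are hypothetical). `aws_subnets` is the data source in newer AWS provider releases; 2020-era providers used `aws_subnet_ids` for the same job.

```hcl
# Hypothetical tags: use whatever uniquely identifies the existing resources.
data "aws_vpc" "existing" {
  tags = {
    Name = "shared-vpc"
  }
}

# All subnets in that VPC that are tagged as private.
data "aws_subnets" "private" {
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.existing.id]
  }

  tags = {
    Tier = "private"
  }
}

# These IDs can be passed to a module instead of creating new networking.
output "vpc_id" {
  value = data.aws_vpc.existing.id
}

output "private_subnet_ids" {
  value = data.aws_subnets.private.ids
}
```

Only enough tags to make the match unique are needed; the singular `aws_vpc` lookup fails if more than one VPC matches.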

that sounds like it could be easier.

like that

I’m going to have to lookup a lot of tags..

thanks

you need to define enough to find it

not all

if it has a tag that is unique, then that is it

Hi everyone. A team at work has been developing their entire infrastructure in one single CloudFormation template file, which is now over 4000 lines of spaghetti. I helped them fix a bunch of cyclic dependencies, and now I want to rewrite the CFT into TF modules. It mainly has a lot of inline Lambdas, Glue jobs, catalogs, tables, CW alarms and events, and many S3 buckets.

This infrastructure is live in production in five environments

I’m seeking advice on the best approach to move the infrastructure I have developed into Terraform.

What about the existing S3 buckets that hold TBs of data? Should I do a TF import on the S3 buckets? Or deploy a parallel environment with TF and delete the CFT elements manually, since I can’t delete the CFT stack?
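A hedged sketch of the import route for one bucket, with a hypothetical bucket name. In the Terraform 0.12 era the import was a CLI command; Terraform 1.5 and later can also declare it in configuration with an `import` block. `prevent_destroy` is added as a guard because of the data volume.

```hcl
# Hypothetical bucket name; replace with the real bucket managed by CloudFormation.
resource "aws_s3_bucket" "data" {
  bucket = "my-existing-data-bucket"

  # Refuse any plan that would destroy a bucket holding TBs of data.
  lifecycle {
    prevent_destroy = true
  }
}

# Terraform 0.12-era CLI equivalent:
#   terraform import aws_s3_bucket.data my-existing-data-bucket
#
# Terraform 1.5+ can declare the import in configuration instead:
import {
  to = aws_s3_bucket.data
  id = "my-existing-data-bucket"
}
```

On the CloudFormation side, one commonly used approach is to set `DeletionPolicy: Retain` on the bucket before removing it from the template, so the stack lets go of it without deleting the data.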

I will use terraformer

CLI tool to generate terraform files from existing infrastructure (reverse Terraform). Infrastructure to Code - GoogleCloudPlatform/terraformer

we recently used it to import some stuff

worked very well

we just changed the resources names a bit

Noice

I will take a look at this.

I will say, creating each resource bit by bit will be better; using modules reduces the implementation time

but it is possible to do it with this

@jose.amengual thanks for your advice

np

+1 for Terraformer; we’re using it to move parts of the current infrastructure, which was manually deployed by the previous lead, into Terraform modules.

what about cdk? seems neat, gives you full benefit of a real programming language

it is going to take you a REALLY long time to do

It’s a mountain of a task to climb :)

Has anyone seen this?

security_groups = [
  + "sg-015133333333d473b",
  + "sg-05294444444432970",
  + "sg-0a35555555553ea35",
  + "sg-0c8a33333dbca389b",
  + "sg-022222229ccf66e24",
  + "terraform-20200502011517105000000003",
]

The data lookup it comes from is this:

data "aws_security_groups" "atlantis" {
  filter {
    name   = "group-name"
    values = ["hds-${var.environment}-atlantis-service"]
  }

  provider = aws.primary
}

Weird, it looks like a timestamp to me. What happens if you apply it?

I don’t know why it is inside the = [ ] list

it complains that it is expecting an sg- value

never seen such thing before

Taint the resource?

If you can’t cure it, kill it lol

lol

it was created by another TF

is there, it works

I can add it manually in the UI

data.aws_security_groups.atlantis.id

returns the terraform-2222

data.aws_security_groups.atlantis.ids.* returns the id

stupid

it should not return anything

you mean you could see the id in the AWS UI??

I mean it should give you some error, since it is the aws_security_groups data source and not an “aws_security_group” resource

very subtle difference

# atlantis
data "aws_security_groups" "atlantis" {
  filter {
    name   = "group-name"
    values = ["hds-${var.environment}-atlantis-service"]
  }

  provider = aws.primary
}

resource "aws_security_group" "ods-purge-lambda-us-east-2" {
  name        = "hds-${var.environment}-ods-purge-us-east-2"
  description = "Used in lambda script"
  vpc_id      = local.vpc_id
  tags        = local.complete_tags

  provider = aws.primary
}

locals {
  sg-atlantis            = join("", data.aws_security_groups.atlantis.ids.*)
  sg-ods-purge-us-east-2 = join("", aws_security_group.ods-purge-lambda-us-east-2.id.*)
}

aha! now i get it

you see how easy it is to make a mistake?

WTH is that terraform-XXXX???
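A likely explanation, offered tentatively: on the plural `aws_security_groups` data source, `id` is only a placeholder Terraform generates for the data source itself (which would account for the `terraform-2020…` value), while the matching group IDs are in `ids`. The sketch below reuses the names from the snippet above and shows two alternatives (pick one); the singular `aws_security_group` data source errors unless exactly one group matches, and its `id` is the real `sg-…` ID.

```hcl
# Plural data source: `ids` is the list of matching sg-... IDs.
# Its own `id` attribute is NOT a security group ID.
data "aws_security_groups" "atlantis" {
  filter {
    name   = "group-name"
    values = ["hds-${var.environment}-atlantis-service"]
  }

  provider = aws.primary
}

# Singular alternative: fails unless exactly one group matches,
# and here `id` really is the sg-... ID.
data "aws_security_group" "atlantis_single" {
  filter {
    name   = "group-name"
    values = ["hds-${var.environment}-atlantis-service"]
  }

  provider = aws.primary
}

locals {
  # Either expression yields a list of real security group IDs.
  atlantis_sg_ids_from_plural   = data.aws_security_groups.atlantis.ids
  atlantis_sg_ids_from_singular = [data.aws_security_group.atlantis_single.id]
}
```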

2020-05-02

2020-05-03

Hello, I have a newbie-type question. Is there a way to force rebuilding a specific resource? Basically, using “terraform taint” on resources other than EC2 instances, such as an AWS Kinesis stream or DynamoDB table, etc. (My current workaround is changing the resource name, which usually forces the rebuild; however, it is not very practical.)

well, if you remove the resource and add it again, that does it too

you can do a count argument and enable and disable it

yeah, that’s a better option than renaming, on one hand. On the other hand, it requires more steps. In the setup I have in mind (automated testing), it actually might be better.

nvm, got it working with terraform taint actually

I’m not so familiar with taint

2020-05-04

You can also use ‘untaint’ for times when terraform gives up on a resource or gets confused and wants to destroy it, even though it turned out ok
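A small sketch of the commands discussed, against a hypothetical Kinesis stream; `taint` works on any managed resource type, not only EC2 instances. The `-replace` flag is a later addition (Terraform 0.15.2+) that does the same thing in one step.

```hcl
# Hypothetical stream used in automated tests.
resource "aws_kinesis_stream" "test" {
  name        = "integration-test-stream"
  shard_count = 1
}

# Mark for destroy-and-recreate on the next apply:
#   terraform taint aws_kinesis_stream.test
# Cancel that if the resource turned out fine:
#   terraform untaint aws_kinesis_stream.test
#
# Terraform 0.15.2 and later, one-step form:
#   terraform apply -replace="aws_kinesis_stream.test"
```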

If a terraform module (module UP) is updated, is there a way for atlantis or something else to rerun a terraform plan for a module (module DOWN) that depends on UP ?

personally, we version all our modules, and use dependabot to update the version refs with a pr…

interesting so dependabot will notice that the module UP has changed and somehow find all modules that depend on UP and submit a PR to them to update the git reference tag in the source which will trigger atlantis’ terraform plan comment ?

if i understand that correctly… how do you configure dependabot to do this ?

if it’s a public project, and tf 0.11, then the free dependabot service works great

if you need tf 0.12, we’ve built a fork and a github-action

could you link this ?

I’m surprised you folks haven’t blogged about this.

Terraform module that deploys a lambda function which will publish open pull requests to Slack - plus3it/terraform-aws-codecommit-pr-reminders

(note that’s a read-only github token, please don’t flay me)

haha i was just wondering about that. might be good to comment that inline

here’s the repo for the gh-action, https://github.com/plus3it/dependabot-terraform-action/

Github action for running dependabot on terraform repositories with HCL 2.0 - plus3it/dependabot-terraform-action

and our fork of dependabot-core, which the gh-action uses, https://github.com/plus3it/dependabot-core

The core logic behind Dependabot’s update PR creation - plus3it/dependabot-core

hopefully dependabot will eventually get around to merging tf012 support upstream… https://github.com/dependabot/dependabot-core/pull/1388

Fixes #1176 I opted for both hcl2json and terraform-config-inspect. hcl2json for terragrunt and terraform-config-inspect for tf 0.12 I wanted to go with terraform-config-inspect for both, but it di…

Join us for “Office Hours” every Wednesday 11:30AM (PDT, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is May 13, 2020 11:30AM.

Register for Webinar

#office-hours (our channel)

Hoping someone can help: I’m curious how you would use the import command to import an existing resource into TFC if I’m using TFC to trigger plan/apply rather than the CLI?

@Mr.Devops You can still use import to update your TFC state from your local environment AFAIK. Once you update it once from your local environment then it will be in your state and TFC should just know it’s there.
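A minimal sketch of that local-import approach, with hypothetical organization and workspace names. With the `remote` backend configured locally, `terraform import` runs on your machine (so provider credentials typically need to be available locally) but writes into the TFC workspace state. Terraform 1.1+ offers a `cloud` block as the successor to this backend.

```hcl
terraform {
  backend "remote" {
    organization = "example-org" # hypothetical

    workspaces {
      name = "example-workspace" # hypothetical
    }
  }
}

# The resource block being imported must already exist in the configuration.
resource "aws_s3_bucket" "example" {
  bucket = "existing-bucket-name" # hypothetical
}

# After `terraform init` with this backend and a TFC API token configured:
#   terraform import aws_s3_bucket.example existing-bucket-name
```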

ah ok i will look into this thx @Matt Gowie

2020-05-05

HashiCorp Consul Service for Azure (HCS) Affected May 5, 16:23 UTC Investigating - We are currently experiencing a potential disruption of service to the HashiCorp Consul Service for Azure (HCS). HCS Cluster creations are currently failing. Teams are currently working to identify a solution and will update as soon as information is available.

HashiCorp Services’s Status Page - HashiCorp Consul Service for Azure (HCS) Affected.

Hi I’m using CloudPosse’s terraform-aws-eks-cluster module, how do I decide whether to instantiate the worker nodes with their terraform-aws-eks-node-group module vs their terraform-aws-eks-workers modules? The node-group approach seems to use what is intended for EKS, whereas workers module uses autoscaling group. Am I correct that node-group module is the way to go?

there are pros and cons with managed node groups

also, the workers module was created long, long before managed node groups were supported

I would start with managed node groups until you find a use-case that doesn’t work for you

for example, if you need to run docker-in-docker for Jenkins, then the managed node groups won’t work well for that and you should use the workers module.

Keep in mind that a Kubernetes cluster can have any number of node pools

and you can mix and match node pools from different modules concurrently in the same cluster

you can have a Fargate node pool alongside a managed node pool, alongside an ASG workers node pool
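A minimal sketch of mixing pools in one cluster, using the plain AWS provider resources rather than the Cloud Posse modules (whose inputs are not reproduced here). All variables are hypothetical stand-ins for values the cluster module would output.

```hcl
variable "cluster_name" {
  type = string
}

variable "node_role_arn" {
  type = string
}

variable "fargate_pod_role_arn" {
  type = string
}

variable "private_subnet_ids" {
  type = list(string)
}

# Managed node group: EKS owns the ASG and the node lifecycle.
resource "aws_eks_node_group" "managed" {
  cluster_name    = var.cluster_name
  node_group_name = "managed-default"
  node_role_arn   = var.node_role_arn
  subnet_ids      = var.private_subnet_ids

  scaling_config {
    desired_size = 2
    min_size     = 1
    max_size     = 4
  }
}

# Fargate profile in the same cluster: pods in the "batch" namespace run on Fargate.
resource "aws_eks_fargate_profile" "batch" {
  cluster_name           = var.cluster_name
  fargate_profile_name   = "batch"
  pod_execution_role_arn = var.fargate_pod_role_arn
  subnet_ids             = var.private_subnet_ids

  selector {
    namespace = "batch"
  }
}
```

A self-managed ASG worker pool (e.g. for docker-in-docker, as discussed below) can be added next to these against the same cluster.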

@Erik Osterman (Cloud Posse) Would you mind elaborating why dind won’t work well with managed node groups? I use dind and was thinking of moving my nodes to managed.

@Jeremy G (Cloud Posse)

@s_slack EKS disables the Docker bridge network by default, and Managed Node Groups do not allow you to turn it back on. This is a problem if your Dockerfile includes a RUN command that installs a package, for example, as it will not have internet connectivity. Another issue for us is that you cannot configure Managed Node Groups to launch nodes with a Kubernetes taint on startup, which we wanted in order to keep non-whitelisted applications from running on our spot instances.

What happened: We utilize docker-in-docker configuration to build our images from CI within our dev cluster. Because docker0 has been disabled, internal routing from the inner container can no long…

Perfect. Much appreciated. @Jeremy G (Cloud Posse) @Erik Osterman (Cloud Posse)

Hi. I am hoping someone can help me with an interesting problem. I am trying to use the data lifecycle manager to create snapshots of the root volume of my instance but the root ebs volume needs to be tagged. Terraform doesn’t have a way to add tags to the root_block_device of the aws_instance. I tried to use the data.aws_ebs_volume to find the ebs volume that is created but I can’t figure out how to use that to tag it. The resource.aws_ebs_volume doesn’t seem to have a way to reference the id from the data.aws_ebs_volume which means that I can’t import the volume either. Hope that makes sense.

@breanngielissen Can you tag via the AWS CLI? If you can then you can use the local-exec provisioner to tag it.

resource "null_resource" "tag_aws_ebs_volume" {
  provisioner "local-exec" {
    command = <<EOF
$YOUR_AWS_CLI_CODE_TO_TAG
EOF
  }
}
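A more concrete version of that provisioner, as a sketch: the instance, AMI ID and tag key/value are hypothetical, and the command is the standard `aws ec2 create-tags` CLI call. It reads the root volume ID from the instance's `root_block_device` attribute. Worth checking against your provider version: `aws_instance` also has a `volume_tags` argument, and newer provider releases accept `tags` inside `root_block_device`, either of which may remove the need for the CLI call.

```hcl
# Hypothetical instance; the AMI ID is a placeholder.
resource "aws_instance" "app" {
  ami           = "ami-00000000000000000"
  instance_type = "t3.micro"
}

# Tag the root volume so a Data Lifecycle Manager policy can target it.
resource "null_resource" "tag_root_volume" {
  # Re-run the tagging whenever the root volume changes.
  triggers = {
    volume_id = aws_instance.app.root_block_device[0].volume_id
  }

  provisioner "local-exec" {
    command = "aws ec2 create-tags --resources ${aws_instance.app.root_block_device[0].volume_id} --tags Key=Snapshot,Value=daily"
  }
}
```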

@Matt Gowie That worked perfectly. Thank you!

@breanngielissen Glad to hear it!

2020-05-06

anyone know of a terraform module for opengrok or a similar code search app that can be run in aws ?

their docker container description says to use the standalone one …

i was looking into ECS using its docker, but it might be better to use an EC2 instance with userdata to setup opengrok, and keep code on an EFS

or just use an EBS

so then the setup would be…

• EC2

• userdata to retrieve ssh key, configure opengrok, clone all github org’s repos

• EBS / EFS for storage of github org repos

• ALB with a target group

• route53 record

• ACM cert

curious to hear other people’s thoughts
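A rough sketch of the EC2 + EBS + target-group part of that list. Assumptions: a hypothetical `opengrok-userdata.sh` bootstrap script, OpenGrok's web UI on port 8080, and an existing ALB target group passed in by ARN; the ALB, Route 53 record and ACM certificate are left out.

```hcl
variable "ami_id" {
  type = string
}

variable "subnet_id" {
  type = string
}

variable "target_group_arn" {
  type = string
}

resource "aws_instance" "opengrok" {
  ami           = var.ami_id
  instance_type = "t3.large"
  subnet_id     = var.subnet_id

  # Hypothetical script: fetch the SSH key, clone the org's repos, configure OpenGrok.
  user_data = file("${path.module}/opengrok-userdata.sh")
}

# Separate EBS volume for the cloned repos, in the instance's AZ.
resource "aws_ebs_volume" "repos" {
  availability_zone = aws_instance.opengrok.availability_zone
  size              = 200
}

resource "aws_volume_attachment" "repos" {
  device_name = "/dev/sdf"
  volume_id   = aws_ebs_volume.repos.id
  instance_id = aws_instance.opengrok.id
}

# Register the instance with the ALB's target group.
resource "aws_lb_target_group_attachment" "opengrok" {
  target_group_arn = var.target_group_arn
  target_id        = aws_instance.opengrok.id
  port             = 8080
}
```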

Can Sentinel be used to enforce policies on IAM roles? For example, don’t grant IAM roles related to networking to a user? I searched through the documentation but couldn’t find any examples related to IAM.

It’d be nice if this module allowed additional IAM permissions: https://github.com/cloudposse/terraform-aws-emr-cluster

Terraform module to provision an Elastic MapReduce (EMR) cluster on AWS - cloudposse/terraform-aws-emr-cluster

ooo let me take a look at this

it looks like it creates an IAM role https://github.com/cloudposse/terraform-aws-emr-cluster/blob/6444ee4f2a48d3d41c2499914ab8b882e454b11c/main.tf#L280

you can use a data source to grab the IAM role that it creates and then use an aws_iam_role_policy_attachment to attach a new policy to it, outside of its module reference.

Yea, this is our typical pattern - provision the role, expose it, then let the caller attach policies

it would be nice if those modules that are already creating the iam role(s) would also output their respective arns. then you wouldn’t need to use a data source to get the arn

@RB ya I’d consider it a “bug” if they don’t

Using a data source shouldn’t be needed.

because in order to use bootstrap actions, the instance needs permissions to download the file
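A sketch of the attach-from-outside pattern for that bootstrap case. The role name and bucket are hypothetical; if the module exposes the role name as an output, that output can replace the data source lookup.

```hcl
# Hypothetical name of the EC2 role the EMR module created.
data "aws_iam_role" "emr_ec2" {
  name = "my-emr-cluster-ec2"
}

# Read access to a hypothetical bucket holding the bootstrap scripts.
data "aws_iam_policy_document" "bootstrap_read" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::my-bootstrap-bucket/*"]
  }
}

resource "aws_iam_policy" "bootstrap_read" {
  name   = "emr-bootstrap-read"
  policy = data.aws_iam_policy_document.bootstrap_read.json
}

# Attach the extra permissions without modifying the module.
resource "aws_iam_role_policy_attachment" "bootstrap_read" {
  role       = data.aws_iam_role.emr_ec2.name
  policy_arn = aws_iam_policy.bootstrap_read.arn
}
```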

Hi guys, is there any example that creates a Global Accelerator with an ALB as the endpoint?

I think I found it
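For anyone else looking, a minimal sketch with an ALB endpoint: the ALB ARN is a hypothetical input, and the endpoint group defaults to the provider's region, so the ALB is assumed to live there.

```hcl
# Hypothetical: ARN of an existing ALB in the provider's region.
variable "alb_arn" {
  type = string
}

resource "aws_globalaccelerator_accelerator" "this" {
  name            = "example-accelerator"
  ip_address_type = "IPV4"
  enabled         = true
}

resource "aws_globalaccelerator_listener" "https" {
  accelerator_arn = aws_globalaccelerator_accelerator.this.id
  protocol        = "TCP"

  port_range {
    from_port = 443
    to_port   = 443
  }
}

# The ALB is registered as the endpoint of this listener's endpoint group.
resource "aws_globalaccelerator_endpoint_group" "alb" {
  listener_arn = aws_globalaccelerator_listener.https.id

  endpoint_configuration {
    endpoint_id = var.alb_arn
    weight      = 100
  }
}
```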

2020-05-07

Hi All - I would like to set up a simple AWS EKS cluster with 1 master and 2 worker nodes. Which set of Terraform files do I need to use? Please guide. I have been trying to figure it out and ended up bringing up something I could not access from my local machine.

Have you seen our EKS cluster module? It has a good example to get you started

yes, but it has a lot of things in it: there are some .tf files in the root folder, and under examples the ‘complete’ folder also has a lot of files. I’m not sure from which folder I need to run “terraform apply”, or which variables file I need to fill in with my details.

Hey, I’m thinking about how to properly manage resources created by AWS with Terraform. One example is the S3 bucket which is created for Elastic Beanstalk (elasticbeanstalk-<region>-<account_id>). I would like to add cross-region replication and encryption for this bucket due to compliancy reasons. Any ideas?

here better

this is for the Security Hub PCI standard, which requires S3 buckets to have cross-region replication and encryption

cross account or cross region ?

cross region

on one account

so then you do not need the account id in the name in that case ?

we did team-nameofthing-region

we added the regions to everything

we even duplicated similar IAM roles for that

because IAM is a global service

but resources are not

which makes things very difficult to troubleshoot in case of access issues and such

and you need to keep in mind that some resources like ALBs can’t have names longer than 32 characters

so it is better to keep it short
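One way to enforce that limit, sketched with hypothetical naming inputs that follow the team-thing-region convention above: truncate to 32 characters, then trim any hyphen left at either end, since ALB names may not start or end with one.

```hcl
variable "team" {
  type    = string
  default = "platform"
}

variable "thing" {
  type    = string
  default = "internal-api"
}

variable "region" {
  type    = string
  default = "us-east-1"
}

locals {
  full_name = "${var.team}-${var.thing}-${var.region}"

  # ALB names: max 32 characters, alphanumerics and hyphens, no leading/trailing hyphen.
  alb_name = trim(substr(local.full_name, 0, min(32, length(local.full_name))), "-")
}

output "alb_name" {
  value = local.alb_name
}
```

Truncation can make two long names collide, so a validation that fails early may be preferable when uniqueness matters.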

the bucket is created by AWS automatically like that

and I haven’t found a place where I could specify the bucket for Beanstalk myself

mmm I do not know much about EB

is this for a multi region setup ?

Hi. I’m using TF modules and wondering if anyone has a hack to conditionally enable/disable one based on a variable.

currently, the module has to support it, by accepting a variable that is used in the count/for_each expression on every resource in the module

Terraform module which creates VPC resources on AWS - terraform-aws-modules/terraform-aws-vpc

follow the create_vpc value through the module .tf files…
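The pattern reduced to a single resource, as a sketch; the `create` variable and the queue are hypothetical, and every resource in such a module repeats the same `count` expression.

```hcl
variable "create" {
  description = "Set to false to make this module create nothing"
  type        = bool
  default     = true
}

resource "aws_sqs_queue" "this" {
  count = var.create ? 1 : 0
  name  = "example-queue" # hypothetical
}

output "queue_url" {
  # join() over the splat returns "" when the queue was not created.
  value = join("", aws_sqs_queue.this.*.id)
}
```

The caller then toggles the whole module with something like `create = var.enable_queue` in its module block.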

thanks. i’ll take a look.

terraform core is working on module-level count/for_each support. it is in-scope for tf 0.13… https://github.com/hashicorp/terraform/issues/17519#issuecomment-605003408
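Once that landed (it did ship in Terraform 0.13), the toggle moves to the module block itself. A sketch with the VPC module linked above; `enable_vpc` is a hypothetical variable. Note that a module must not contain its own provider blocks to be used with `count`.

```hcl
variable "enable_vpc" {
  type    = bool
  default = true
}

# Terraform 0.13+: count directly on a module block.
module "vpc" {
  source = "terraform-aws-modules/vpc/aws"
  count  = var.enable_vpc ? 1 : 0

  name = "example"
  cidr = "10.0.0.0/16"
}

output "vpc_id" {
  # With count, module.vpc is a list of instances; try() yields null when it is empty.
  value = try(module.vpc[0].vpc_id, null)
}
```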

Is it possible to dynamically select map variable, e.g? Currently I am doing this: vars.tf locals { map1 = { name1 = "foo" name2 = "bar" } } main.tf module "x1" { sour…

I managed to deploy an EKS cluster using Terraform, with basic things running. However, when I try to connect from the local machine I ran Terraform from, I get the error message below: An error occurred (AccessDenied) when calling the AssumeRole operation: User: arnxxxxxxx:user/xxxx is not authorized to perform: sts:AssumeRole on resource: arnxxxxxxx:user/xxxx

I am using the same AWS access key and secret key to deploy the EKS cluster with Terraform, but when I try to connect from the same machine with the same AWS config I get this error message. Any help would be really appreciated.

Is that error from running kubectl?

What does your kubeconfig look like? It’s probably in ~/.kube/config. (Don’t paste the whole config in here; we don’t need to see your cluster address or certificate)

If the access keys on your local system already have permissions to connect to EKS, make sure your kubeconfig doesn’t have a redundant role assumption in the args:

args:
  ...
  - "--role"
  - "<role-arn>"

Actually, yes: I have added my role again, the same one I am using to connect to EKS. I did this with the command:

aws eks --region us-east-1 update-kubeconfig --name test-xxx --role-arn arn:aws:iam::xxxx:user/xxx

I did this because when I checked the kubeconfig file, it had the new IAM role created by Terraform but not mine, the one I use on my local machine. That’s the only reason I assumed I couldn’t connect, so I added it. So you mean that if I don’t add it, I should be able to connect?

It works if I don’t add the redundant role. Thanks @David Scott for your help. It really saved me a lot of time.

Glad I could help!

One silly question: I use terraform_state_backend. I was on version 0.16.0, and when I try to upgrade to version 0.17.0 it tells me something about Provider configuration not present because of null_data_source. I don’t know how to solve that conflict and I can’t run destroy :rolling_on_the_floor_laughing: …so is it safe to just remove all the entries of type "null_data_source" from terraform.tfstate? That’s the only way I’ve found to make it work, or rather, to stop that error message from showing. I don’t know if it actually works; I’ve only gone as far as terraform plan.

All right! I found that going back to 0.16.0 and running terraform destroy -target=the_troublemaker, then terraform plan will do it…after that, upgrading to 0.17.0 didn’t give me any problems

yea, we’ve seen that before as well. The problem is the explicitly defined providers in modules, e.g. https://github.com/cloudposse/terraform-null-label/blob/0.13.0/versions.tf#L5

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - cloudposse/terraform-null-label

if you define providers in modules, then you can’t just remove it from the code or rename it

TF will not be able to destroy it w/o the original provider present

label module 0.16.0 does not have it defined anymore

I’m refactoring some Terraform modules and am getting: Error: Provider configuration not present To work with module.my_module.some_resource.resource_name its original provider configuration at m…

(the moral of the story: try not to define providers in sub-modules; provide the providers from top-level modules. In most cases, they are inherited from top-level modules automatically)

this is very true….I just hit this issue with the github webhooks module

I’m dealing with the following: different customers, different Terraform projects across different GitHub repositories, some in the customer’s GitHub org and some in mine. The different projects can have information in common, like my SSH keys, e-mail addresses and whitelisted IPs. One idea that came up was: why not put those semi-static vars in a private git Terraform module and distribute them that way? I’m personally afraid this module will end up like the Windows registry, but I have no valid alternative either. I’m curious to know what everyone’s take is on this.

I heard of that solution from a colleague who worked on a project with a customer where they did that. I didn’t go down that route, because the things that were shared were things that didn’t change much, like project ids (this is for GCP). I simply hardcoded the outputs from various runs into the vars as I ran the environment from start to finish.

But I can see the central terraform module as either a God Class or as a glorified parameter store.
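For comparison, the shared-values idea might look roughly like this: a module with no resources, only outputs, pinned by git tag in each consumer. The repo URL, tag, output names and `var.admin_sg_id` are all hypothetical, and the two halves live in different repos.

```hcl
# --- Shared repo (hypothetical): outputs.tf of a values-only module ---
output "allowed_cidrs" {
  description = "Office/VPN ranges allowed to reach admin endpoints"
  value       = ["203.0.113.0/24", "198.51.100.0/24"]
}

output "ops_email" {
  value = "ops@example.com"
}

# --- Each customer project ---
module "common" {
  # Pin a tag so changes to shared values roll out deliberately.
  source = "git::ssh://git@github.com/example-org/terraform-common-values.git?ref=v1.0.0"
}

resource "aws_security_group_rule" "admin_ssh" {
  type              = "ingress"
  from_port         = 22
  to_port           = 22
  protocol          = "tcp"
  cidr_blocks       = module.common.allowed_cidrs
  security_group_id = var.admin_sg_id # hypothetical
}
```

Pinning by tag is what keeps it closer to a versioned parameter store than a shared registry that changes under everyone.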

@Andriy Knysh (Cloud Posse) Does that mean you folks at CP will eventually be removing your usage of providers (like GH provider for GH webhooks) in your modules?

if they are still in some modules, yes, need to be removed. We tried to clean it up in all modules converted to TF 0.12

if you see providers in modules, let us know

I think this is the only one that I know of. I hit issues with it with the codepipeline module on a project. And I know @jose.amengual had troubles with it a week or two back.

Terraform module to provision webhooks on a set of GitHub repositories - cloudposse/terraform-github-repository-webhooks

yea, that one will trigger changes across many modules

yes that is the one, I had that problem

@Andriy Knysh (Cloud Posse) Yeah… I could see that being a PITA. Y’all doing a good job with versioning should keep it from being too bad, I would hope?

yes versioning will help not to break everything (if people don’t pin to master )

I bet there are a few million doing that lol

Ah yes, hopefully people listen to you folks and don’t pin master. But if they do then they’re outta luck.

¯\_(ツ)_/¯

I think the examples in the README should be pinned to the latest version

not to master

yes they should (the latest version always changes though)
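What a pinned README example could look like, using the label module and the 0.16.0 tag mentioned earlier in the channel; the `namespace`, `stage` and `name` values are placeholders.

```hcl
# Pinned to a release tag rather than master, so upgrades are explicit.
module "label" {
  source = "git::https://github.com/cloudposse/terraform-null-label.git?ref=tags/0.16.0"

  namespace = "eg"
  stage     = "prod"
  name      = "app"
}
```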

Sounds like a good automation task for release:

- Build README with new tag as input, README example is updated to use new tag

- Tag repo

- Push repo

but codefresh will have to inject that, right ?

Does anyone know if it’s possible to create an AWS Client VPN completely with Terraform? It seems like some resources are missing from the provider, such as route tables and authorizations.

correct

I just went through this a few months back

Route Tables and authorizations need to be done manually

or over CLI

ok thank you, I’ve been going crazy wondering why I stopped where I did when writing Terraform to create this a few weeks back

now it makes sense

I was surprised too since without those, nothing works!!!!!

lol right? let’s just give them half of what they need! One more quick question: do you have to manually associate the security groups with the networks you associate with the VPN as well?

I don’t see a way to do that with that resource either
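For later readers, hedged: newer AWS provider releases did add resources for the missing pieces, `aws_ec2_client_vpn_authorization_rule` and `aws_ec2_client_vpn_route`, and security groups can be set via `security_group_ids` (with `vpc_id`) on the endpoint resource; check your provider version. A sketch with hypothetical endpoint/subnet IDs and CIDR:

```hcl
variable "client_vpn_endpoint_id" {
  type = string
}

variable "subnet_id" {
  type = string
}

resource "aws_ec2_client_vpn_network_association" "this" {
  client_vpn_endpoint_id = var.client_vpn_endpoint_id
  subnet_id              = var.subnet_id
}

# Authorization: allow all VPN users to reach the VPC CIDR (hypothetical range).
resource "aws_ec2_client_vpn_authorization_rule" "vpc" {
  client_vpn_endpoint_id = var.client_vpn_endpoint_id
  target_network_cidr    = "10.0.0.0/16"
  authorize_all_groups   = true
}

# Route table entry: send internet-bound traffic through the associated subnet.
resource "aws_ec2_client_vpn_route" "internet" {
  client_vpn_endpoint_id = var.client_vpn_endpoint_id
  destination_cidr_block = "0.0.0.0/0"
  target_vpc_subnet_id   = aws_ec2_client_vpn_network_association.this.subnet_id
}
```

For the internet route to carry traffic, a matching authorization rule for 0.0.0.0/0 is needed as well.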

We are working internally to update the community VS Code extension to fully support Terraform 0.12 syntax and use our Language Server by default. A new version will be shipping later this year with the updates.

Question for VSCode folks — Is it that much better than Atom?

I’ve switched text editors a few times over the years from emacs => Sublime => Atom. Now I’m finally considering another switch to VSCode cause people love it so much, but I’m not sure if I get the hype.

its pretty good. I made the switch and love it. I’ve even slowly been forgetting about pycharm when working on Python and just defaulting to vscode

I’d recommend just installing it and checking it out. I can give you a list of good starter extensions to grab too, if you want

and my settings.json file with a bunch of customizations, just so you can see how it all works

Those are some nice offers — makes this more attractive… Yeah, I’ll happily take you up on that.

sure give me about 20 mins, on a call until about 5pm cst

Thanks man!

i find vscode significantly faster than atom. or did when i switched away from atom several years ago…

@Matt Gowie sorry about that, completely forgot about you. I forgot I went through and commented my settings file as well the other day, so that may help you understand some of it too. https://gist.github.com/afmsavage/1bf0241472f74fa21112d4d3698bcb80

Awesome — Thanks @Tony

terraform registry now exposes the # of downloads per module. 1 more metric for vetting open source modules.

nice! thanks for sharing

@loren I’m working with the guys on concurrent development toward a unified goal. Right now the HashiCorp one is more stable with a lot fewer features, and mine is less stable but has more experimental features (this is explained in the repo)

Thank you for all your work! Been using your language server for quite a while. Brilliant work!

@Matt Gowie https://github.com/cloudposse/terraform-aws-ssm-parameter-store/releases/tag/0.2.0 (thanks for your contribution)

Terraform module to populate AWS Systems Manager (SSM) Parameter Store with values from Terraform. Works great with Chamber. - cloudposse/terraform-aws-ssm-parameter-store

Thanks @Matt Gowie and @Andriy Knysh (Cloud Posse) - definitely appreciate the 0.12 upgrade!

Any EKS + Spotinst + Terraform integration around here? Is it possible to do everything within Terraform? I’m POC-ing it right now through the portal (where it basically drains all your nodes to their nodes), but I’m curious how that would work with my existing Terraform state

I have it all within Terraform, but not as a single run. I can’t figure out how best to reinstate the same number of nodes, so I swap out to an on-demand scaling group first, drain to it, then swap the spot ASGs back in

ugh. not a fan of that.

Yeah :(

2020-05-08

I have a pretty broad and general question about modules and module composition. In a previous role I built out a multi account / multi region AWS architecture very much following the terragrunt methodology. i.e a single repo that defined what modules were live in what accounts/regions based on directory structure and git tagged modules

In a new role I have a clean slate. Introducing Terraform to the organisation and using Terraform Cloud. Terraform Cloud supports private module registry where a repo of the form terraform-<provider>-<name> can be automatically published as a module when a git tag is pushed

Historically I am used to working with a big monorepo where all modules reside. A huge advantage of this is easier module composition. i.e a module called service_iam could include dozens of other IAM helper modules from the same repo

I suppose I am having a bit of trouble in my head figuring out what my new approach should be. I want to avoid code duplication and also a spaghetti of modules referring to other modules at specific versions

Theres a question in there somewhere…

Has anyone else made this “transition”? I don’t think I want to end up with a repo per module, as that has management/operational costs

Should I just create registry modules that have many many sub modules nested inside?

@conzymaher If you want to lean away from doing a module per repo and you’re looking to use the registry, then I’d check out the modules from the terraform-aws-modules GH org. The terraform-aws-iam one is great. They use the multiple-modules-in-one-repo pattern, and it works well in my experience.

Terraform module which creates IAM resources on AWS - terraform-aws-modules/terraform-aws-iam

I’m a big fan of those modules. None of them have a dependency on each other, though. But there’s nothing to stop that

Thanks for the pointer. I hadn’t considered looking at some of the other modules in that repo. The atlantis module is basically the model I want. A “top level” module that uses other modules that are outside of that module, but can also use optional sub modules
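A sketch of how that shape can be consumed from a Terraform Cloud private registry; the organization, module name, submodule path and version are hypothetical. The `//` syntax addresses a nested submodule of a published module, and inside the repo the top-level module can compose its own submodules by relative path, so they always version together.

```hcl
# Top-level module from a hypothetical private registry.
module "service_iam" {
  source  = "app.terraform.io/example-org/iam/aws"
  version = "1.2.0"
}

# A nested submodule of the same published module, via the // subdirectory syntax.
module "service_role" {
  source  = "app.terraform.io/example-org/iam/aws//modules/service-role"
  version = "1.2.0"
}

# Inside the module repo itself, composition uses relative paths (no version pins):
#   module "policy" {
#     source = "./modules/policy"
#   }
```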

Np. Glad you found one that is what you’re looking for!

Cloud Posse folks, do you have any plans to publish a Terraform module for deploying a typical Lambda function? I can’t find anything on your GitHub account

we have a few very specific modules that deploy lambdas for very specific tasks https://github.com/cloudposse?q=lambda-&type=&language=

Yeah, I get it… that’s what I’m doing. I was just thinking it would be nice to have one module for a generic Lambda; I know it’s (almost) straightforward, but there are a few corner cases that modules usually take care of
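For reference, the "(almost) straightforward" baseline as a sketch: a single-file Python handler zipped with the `archive_file` data source (from the hashicorp/archive provider), an execution role, and the function. File paths, names and the runtime are hypothetical choices.

```hcl
# Hypothetical single-file handler next to this configuration.
data "archive_file" "lambda" {
  type        = "zip"
  source_file = "${path.module}/handler.py"
  output_path = "${path.module}/build/handler.zip"
}

data "aws_iam_policy_document" "assume" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "lambda" {
  name               = "example-lambda"
  assume_role_policy = data.aws_iam_policy_document.assume.json
}

# Basic CloudWatch Logs permissions.
resource "aws_iam_role_policy_attachment" "logs" {
  role       = aws_iam_role.lambda.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

resource "aws_lambda_function" "this" {
  function_name = "example"
  role          = aws_iam_role.lambda.arn
  handler       = "handler.lambda_handler"
  runtime       = "python3.12"
  filename      = data.archive_file.lambda.output_path

  # Redeploy only when the zipped code actually changes.
  source_code_hash = data.archive_file.lambda.output_base64sha256
}
```

The corner cases a module would add on top are things like packaging third-party dependencies, log group retention, and VPC attachment.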

your contribution to the open-source community would be very welcome @x80486

@randomy has several awesome modules for managing lambdas

(we have no plans right now to publish a module for generic lambda workflows)

I’ve heard good things about https://github.com/claranet/terraform-aws-lambda

Terraform module for AWS Lambda functions. Contribute to claranet/terraform-aws-lambda development by creating an account on GitHub.

but haven’t used it

Thanks. https://github.com/raymondbutcher/terraform-aws-lambda-builder is better than the Claranet one IMO (I made both)

Terraform module to build Lambda functions in Lambda or CodeBuild - raymondbutcher/terraform-aws-lambda-builder

Has anyone used https://github.com/liamg/tfsec and had it actually find legit security vulnerabilities? I’m skeptical.

Static analysis powered security scanner for your terraform code - liamg/tfsec

ya, i use that currently in atlantis

What’re your thoughts? Has it caught any serious gotchas for you?

it’s nice and i believe it has some overlap with checkov and tflint

but it does find certain things the others dont.

we really need a nice comparison between linters and their rules

So I’m noticing that we’re having issues w/ our child modules when folks remove them from their root module due to having a provider block in the child module. We currently use the provider block to setup the following for aws:

assume_role {
  role_arn = var.workspace_iam_roles[terraform.workspace]
}

Is there a way to set up assume_role for the child module so we can test it without the provider block then as to not have missing provider error messages like the following?:

To work with module.kms.aws_kms_key.this its original provider configuration
at module.kms.provider.aws is required, but it has been removed. This occurs
when a provider configuration is removed while objects created by that
provider still exist in the state. Re-add the provider configuration to
destroy module.kms.aws_kms_key.this, after which you can remove the provider
configuration again.

Or a cleaner pattern to follow?

TIA

we always setup the provider credentials in the root module, using multiple providers with aliases if there are multiple credentials (e.g. different roles). we then pass the provider alias to the module that needs it
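A sketch of that layout, moving the `assume_role` from the question into the root module. The `./modules/kms` path and the second role variable are hypothetical; a test fixture is just another root module, so it can carry the same provider blocks while the child module stays provider-free.

```hcl
variable "workspace_iam_roles" {
  type = map(string)
}

variable "shared_services_role_arn" {
  type = string # hypothetical second role
}

# Default provider: child modules inherit this automatically.
provider "aws" {
  region = "us-east-1"

  assume_role {
    role_arn = var.workspace_iam_roles[terraform.workspace]
  }
}

# Aliased provider for a different role.
provider "aws" {
  alias  = "shared"
  region = "us-east-1"

  assume_role {
    role_arn = var.shared_services_role_arn
  }
}

# Child module with no provider block: uses the default provider.
module "kms" {
  source = "./modules/kms"
}

# Same child module, explicitly handed the aliased provider.
module "kms_shared" {
  source = "./modules/kms"

  providers = {
    aws = aws.shared
  }
}
```

Because the provider configuration lives in the root, removing a module block later no longer strands its resources without a provider.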

Modules allow multiple resources to be grouped together and encapsulated.

We compose our modules as well and run tests against them for that larger piece of infrastructure they build. I’m just wondering how I could continue testing the child module w/o having a way to setup some necessary config. We use kitchen-terraform currently. Maybe it entails working w/ that to test the child module w/o baking in a provider block which causes headaches down the road.

ya, this issue is coming up more and more often. we’ve (@Andriy Knysh (Cloud Posse)) fought a few issues in the past week

yeah, i think you’d have to setup the credential through kitchen-terraform

At least we’re in agreement - cause after re-reading the docs - I see the issue w/ nested providers - but now need to figure out how we can still test independently our child modules w/o those provider blocks.

I’m refactoring some Terraform modules and am getting: Error: Provider configuration not present To work with module.my_module.some_resource.resource_name its original provider configuration at m…

see the thread below that message

we also have multi-provider modules, for cross-account actions (e.g. vpc peering). in that case you must have a provider block in the module. but we only define the alias. the caller then passes the aliased providers they define to each aliased provider in the module
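A sketch of that alias-only pattern for a cross-account peering module. The module path `./modules/pcx`, the alias names, the account IDs and the VPC IDs are all hypothetical placeholders, and it assumes both VPCs are in the same region (otherwise `peer_region` would also be needed).

```hcl
# --- modules/pcx/main.tf (hypothetical child module) ---
# Proxy provider blocks: they only declare the aliases the module expects;
# no region, credentials or assume_role live here.
provider "aws" {
  alias = "requester"
}

provider "aws" {
  alias = "accepter"
}

variable "requester_vpc_id" {
  type = string
}

variable "accepter_vpc_id" {
  type = string
}

data "aws_caller_identity" "accepter" {
  provider = aws.accepter
}

resource "aws_vpc_peering_connection" "this" {
  provider      = aws.requester
  vpc_id        = var.requester_vpc_id
  peer_vpc_id   = var.accepter_vpc_id
  peer_owner_id = data.aws_caller_identity.accepter.account_id
}

resource "aws_vpc_peering_connection_accepter" "this" {
  provider                  = aws.accepter
  vpc_peering_connection_id = aws_vpc_peering_connection.this.id
  auto_accept               = true
}

# --- root module (the caller owns the real configurations) ---
provider "aws" {
  alias  = "dev"
  region = "us-east-1"
  assume_role {
    role_arn = "arn:aws:iam::111111111111:role/terraform" # placeholder
  }
}

provider "aws" {
  alias  = "shared"
  region = "us-east-1"
  assume_role {
    role_arn = "arn:aws:iam::222222222222:role/terraform" # placeholder
  }
}

module "pcx" {
  source = "./modules/pcx"

  # Map each alias the module declares to a provider defined here.
  providers = {
    aws.requester = aws.dev
    aws.accepter  = aws.shared
  }

  requester_vpc_id = "vpc-11111111" # placeholder IDs
  accepter_vpc_id  = "vpc-22222222"
}
```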

and the setup in the test config (though in this case it is vpc peering in the same account)… https://github.com/plus3it/terraform-aws-tardigrade-pcx/blob/master/tests/create_pcx/main.tf#L38-L41

Cool. I’ll take a look at all these. Hopefully there’s a way forward that reduces the issues we see when our dev teams remove child modules with their own provider blocks, but still allows us to test the child modules and the composed repos independently

Thanks @loren @Erik Osterman (Cloud Posse) !!

You don’t specify providers in child modules. This is logically correct since your submodules don’t need to know or deal with how they are being provisioned.

You specify providers in root modules

Or in the test fixtures

Those could have different providers with different roles or credentials

Or for different regions or accounts

Right. It’s mainly now how to remove the provider blocks (which I see from the other thread that Erik posted - you guys have been removing from all your modules) and continue to have testing ability for these child modules as well.

I’m only going to add that I personally avoid mapping providers into modules as much as humanly possible

Every time you have to do this I’d ask yourself if you are doing the right thing or not.

sometimes it is simply unavoidable

sorry, I’m late to the party and likely missed the point, but all this self-isolation made me feel like proclaiming some stuff out loud…

right, in almost all cases you don’t need to specify providers in child modules, nor map providers from the top-level modules

just define them in root modules or test fixtures, they are inherited automatically
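For the testing question, a minimal sketch of a test fixture (for example, the directory kitchen-terraform is pointed at) that acts as the root module: it owns the provider configuration and the child module simply inherits it. The fixture path, the `test_role_arn` variable and the `key_arn` output are assumptions, not part of any module mentioned above.

```hcl
# test/fixtures/kms/main.tf (hypothetical fixture path)
variable "test_role_arn" {
  type = string # role the test run assumes, supplied by the test harness
}

# The fixture is a root module, so the provider block belongs here.
provider "aws" {
  region = "us-east-1"

  assume_role {
    role_arn = var.test_role_arn
  }
}

# The child module under test has no provider block of its own;
# it inherits the default aws provider configured above.
module "kms" {
  source = "../../.."
}

# Expose something for the test assertions to inspect
# (assumes the module defines a key_arn output).
output "key_arn" {
  value = module.kms.key_arn
}
```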

Anyone have a CLI or quick way to trigger a Terraform Cloud run? I can cobble together a REST call, but just checking. I have an Azure DevOps pipeline running Packer and want it to trigger a Terraform run that updates the SSM parameters for the AMI images when it’s done.

2020-05-09

I’m sure someone out there may have thought about this, but it would be nice if Terraform could output its graph to Lucidchart (third-party integration). Feature request for HashiCorp?

I suppose if you can figure out how to transform graphviz language into a csv you can simply import that into lucidchart (https://lucidchart.zendesk.com/hc/en-us/articles/115003866723-Process-Diagram-Import-from-CSV)

Use Lucidchart’s CSV Import for Process Diagrams to create flowcharts, swim lanes, and process diagrams quickly from your data. This tutorial will walk you through the steps of formatting your data…

though it is an interesting challenge I’ll leave that task up to you to figure out

thx @Zachary Loeber

2020-05-11

:zoom: Join us for “Office Hours” every Wednesday 11:30AM (PST, GMT-7) via Zoom.

This is an opportunity to ask us questions on terraform and get to know others in the community on a more personal level. Next one is May 20, 2020 11:30AM.

Register for Webinar

#office-hours (our channel)


Hello,

Do folks feel workspaces serve a purpose while using Terraform open source? I personally have found any other variable, such as environment, sufficient to distinguish between different environments. It may also be that I haven’t fully understood the purpose of workspaces in Terraform. Any advice/insights appreciated.

i don’t find workspaces that useful. i’d much rather have module references with an environment argument, and an environment directory holding those module references
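A sketch of that directory-per-environment layout, where each environment is a thin root module made only of module references. The module paths, the `environment` argument and the `vpc_id` output are hypothetical.

```hcl
# environments/prod/main.tf (hypothetical layout): one directory per
# environment, each a thin root module referencing shared modules.
module "network" {
  source      = "../../modules/network" # hypothetical shared module
  environment = "prod"
  cidr_block  = "10.10.0.0/16"
}

module "app" {
  source      = "../../modules/app" # hypothetical shared module
  environment = "prod"
  vpc_id      = module.network.vpc_id # assumes the network module outputs vpc_id
}
```

environments/staging/main.tf would repeat the same references with different arguments, which is exactly where the drift discussed below can creep in.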

We’ve (cloudposse) switched over to using workspaces. previously we would provision a lot of S3 state backends, but that process is tedious and difficult to automate using pure terraform

I’m not too familiar with workspaces, but how does it work with Atlantis? Do you guys still have a repo per environment, or, because you use workspaces, do you now have one repo and multiple workspaces? @Erik Osterman (Cloud Posse)

we now use a flat project structure. we define a root module exactly once. we don’t import it anywhere. we define a terraform varfile for each environment. we don’t use the hierarchical folder structure (e.g. aws/us-east-2/prod/eks/) and instead just have projects/eks with files like projects/eks/conf/prod.tfvars

this enforces that we have the identical architecture in every environment and do not need to copy and paste changes across folders. what I don’t like about the multiple folders is that architectures drift between the folders without human oversight.
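A minimal sketch of that flat layout: the root module is defined once, and environments differ only by workspace and varfile. The variable names and values are illustrative assumptions.

```hcl
# projects/eks/variables.tf: the root module is defined exactly once.
variable "stage" {
  type = string
}

variable "region" {
  type = string
}

variable "node_instance_type" {
  type    = string
  default = "t3.medium"
}

provider "aws" {
  region = var.region
}

# projects/eks/conf/prod.tfvars would then hold only the differences, e.g.:
#   stage              = "prod"
#   region             = "us-east-2"
#   node_instance_type = "m5.large"
#
# and every environment is planned from the same code:
#   terraform workspace select prod
#   terraform plan -var-file=conf/prod.tfvars
```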


you guys have a repo per environment still or due to using workspaces you are now have one repo and multiple workspaces ?

@jose.amengual: yes, we used to, but now have abandoned that approach.

cool, so we are using the same approach

we adopted that approach before gitops was a thing. it proved to not be very gitops friendly and changes were left behind leading to drift. we moved to the flat folder architecture this year in all new engagements.

almost; we do not have a conf/ subfolder, we have it all in the root

for us, it has worked well. the main reason we ended up with one repo was multi-region deployments; if we decided to split by env we could end up with an exponential number of repos

Terraform state is supposed to help you with infrastructure drift, but with so many repos or folders, who solves the problem of configuration drift when using multi-folder or per-env repos?

pretty much impossible

I’d like what you describe, @Erik Osterman (Cloud Posse), to be a decent norm, but the returns diminish fast when you try to do all this rigorously with only one or two envs, and requirements keep popping up that make them differ in the end for various reasons.

For the things which I do repeat in a very identical fashion I could probably use workspaces.

I have (pretty much) a structure such as:

    infra/logical_grouping/myprefix-env-stack1
    infra/logical_grouping/myprefix-env-stack2
    infra/logical_grouping/myprefix-env-stack2-substack

where the stacks with an extra suffix (like -substack) in many cases use remote state data from the “parent” stacks, sometimes several of them. Where it makes more sense not to tie things so closely, the information sharing is done via regular data sources instead.
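The two ways of sharing information between stacks look roughly like this. The bucket, state key, tag value and the parent's `vpc_id` output are hypothetical.

```hcl
# Option 1: read the parent stack's outputs from its remote state.
data "terraform_remote_state" "stack2" {
  backend = "s3"

  config = {
    bucket = "example-tfstate" # hypothetical bucket
    key    = "logical_grouping/myprefix-env-stack2/terraform.tfstate"
    region = "us-east-1"
  }
}

# Option 2: look the resource up directly, coupling only to a tag.
data "aws_vpc" "stack2" {
  tags = {
    Name = "myprefix-env-stack2" # hypothetical tag value
  }
}

resource "aws_security_group" "substack" {
  name = "myprefix-env-stack2-substack"

  # Either source works; option 1 assumes the parent exports vpc_id.
  vpc_id = data.terraform_remote_state.stack2.outputs.vpc_id
  # vpc_id = data.aws_vpc.stack2.id
}
```

Option 2 keeps the substack independent of how the parent's state is stored; option 1 makes the dependency on the parent's outputs explicit.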

I’ve made the mistake of putting too many things in the same “stack” way too many times. I’ve learned to separate early now.

(While also learning to become really proficient with terraform state mv.)

@mfridh just to be clear, what I’m describing still breaks terraform state apart so it’s not a terralith. we also have taken this a step further, so we have a single YAML config that describes the entire environment and all dependencies. so as @joshmyers was pointing out in the #terragrunt channel, we don’t have this problem anymore with hundreds of config fragments all over the place.

the key here is the terraform state is stored in workspaces, the desired state is stored in the YAML configuration, and the declarative configuration of infrastructure is in pure terraform HCL2 + modules.

Here’s a hint as to what that looks like: https://archive.sweetops.com/aws/2020/04/#ebb3d28d-3753-417c-a88a-7775f8df633c

but we’ve progressed beyond this point.

SweetOps Slack archive of #aws for April, 2020.  Discussion related to Amazon Web Services (AWS)


All config fragments are in a single repo, but still a lot of them…multi region definitely doesn’t help that

@Erik Osterman (Cloud Posse) not using the https://github.com/cloudposse/testing.cloudposse.co approach still?

Example Terraform Reference Architecture that implements a Geodesic Module for an Automated Testing Organization in AWS - cloudposse/testing.cloudposse.co

Does the use of workspaces mean your states are all in the same s3 bucket? Last time I tried it, I found this to be problematic. (Can’t remember the exact issue/limitation)

@joshmyers using geodesic (and still using that repo), but the 1-repo-per-account proved not very friendly for pipeline style automation

@randomy yes, all in one bucket, but with IAM policies restricting access based on roles

note, workspaces get their own path within the bucket so it’s easy to restrict.
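A sketch of how that layout falls out of the S3 backend settings. The bucket, table and prefix values are placeholders. With `workspace_key_prefix` set, each non-default workspace's state is stored under `<prefix>/<workspace>/<key>`, which is the path an IAM policy can be scoped to.

```hcl
terraform {
  backend "s3" {
    bucket               = "example-tfstate"       # hypothetical bucket
    key                  = "eks/terraform.tfstate" # one key per project
    region               = "us-east-1"
    dynamodb_table       = "example-tfstate-lock"  # hypothetical lock table
    workspace_key_prefix = "workspaces"
  }
}

# State for the "prod" workspace then lives at:
#   workspaces/prod/eks/terraform.tfstate
# so a prod-only role can be limited to:
#   arn:aws:s3:::example-tfstate/workspaces/prod/*
```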

are they any different to the usual environment variable? Could do all states in same s3 bucket without workspaces, right?

@joshmyers, yes, you’re correct. Could implement “workspace” like functionality without actually using the workspaces feature just by manipulating the backend settings for S3 during initialization.

I don’t know what the advantage would be to doing so

That’s what my Makefile does. No advantage over workspaces I guess but to be fair, workspaces didn’t exist when I started to do this ;).

I like that yaml defined ordering you linked to Erik. Looks legit. Although I’m not sure how you actually implement it… variant reads it and runs various targets in order?

That’s one thing to consider…. I’m also considering Atlantis. But not sure if that would actually be a good idea for practical reasons or just “for show”.

has anyone ever created a ClientVPN configuration using Terraform to call CloudFormation templates? Or even if anyone has created a ClientVPN config in Cloudformation you might be able to help. I am getting this error when trying to create routes via cloudformation.

Error: ROLLBACK_COMPLETE: ["The following resource(s) failed to create: [alphaRoute]. . Rollback requested by user." "Property validation failure: [Encountered unsupported properties in {/}: [TargetVPCSubnetId]]"]

Code:

    ---
    Resources:
      alphaRoute:
        Properties:
          ClientVpnEndpointId: "${aws_ec2_client_vpn_endpoint.alpha-clientvpn.id}"
          Description: alpha-Route-01
          DestinationCidrBlock: 172.31.32.0/20
          TargetVPCSubnetId: subnet-5c4a7916
        Type: AWS::EC2::ClientVpnRoute

I’m using terraform aws_ec2_client_vpn_endpoint to create CVPN and aws_cloudformation_stack to add routes

I can make the exact resource in the console without issue
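The validation error most likely comes from the property's casing: CloudFormation spells it `TargetVpcSubnetId`, not `TargetVPCSubnetId`. The target subnet also has to already be associated with the endpoint. A sketch of the stack with that spelling, assuming the endpoint resource from the question; the association resource and stack name are hypothetical.

```hcl
# The route's target subnet must already be a target network of the endpoint.
resource "aws_ec2_client_vpn_network_association" "alpha" {
  client_vpn_endpoint_id = aws_ec2_client_vpn_endpoint.alpha-clientvpn.id
  subnet_id              = "subnet-5c4a7916"
}

resource "aws_cloudformation_stack" "alpha_routes" {
  name       = "alpha-clientvpn-routes" # hypothetical stack name
  depends_on = [aws_ec2_client_vpn_network_association.alpha]

  # <<- strips the common leading indentation, so the YAML stays valid.
  template_body = <<-EOT
    Resources:
      alphaRoute:
        Type: AWS::EC2::ClientVpnRoute
        Properties:
          ClientVpnEndpointId: "${aws_ec2_client_vpn_endpoint.alpha-clientvpn.id}"
          Description: alpha-Route-01
          DestinationCidrBlock: 172.31.32.0/20
          TargetVpcSubnetId: subnet-5c4a7916
  EOT
}
```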

I have a bash command that outputs a list in yaml format. I use yq to put that list into a file (each line is a value). There are about 2700 items in the file. How can I get that list from a file into a terraform variable? The only other approach I see is to do some magic to get a plain list into Terraform list variable file. Basically a *.txt -> *.tf transformation.

Generate a variables file. Json works fine.

Terraform module plugin concept for external data integration - frimik/tf_module_plugin_example

That’s from days of yonder before Terraform had proper provider plugin support.

Another alternative in your case, if you don’t want to pass through files on disk is to run that bash command as an external data source script: https://www.terraform.io/docs/providers/external/data_source.html

Executes an external program that implements a data source.

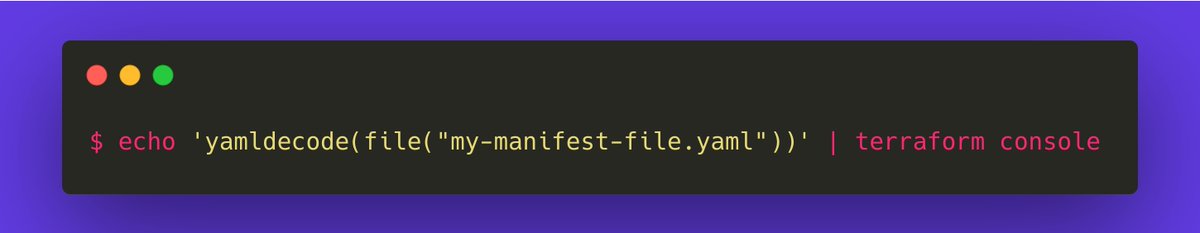

Have you considered using yamldecode(file(...)) with a local?

The yamldecode function decodes a YAML string into a representation of its value.

We use this pattern and it works well.
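A sketch of the yamldecode approach, plus a plain-text variant for a file with one value per line. The file names `items.yaml` and `items.txt` are hypothetical stand-ins for whatever the yq step writes.

```hcl
locals {
  # Option 1: the file is a YAML sequence, e.g. "- value-1\n- value-2".
  items_from_yaml = yamldecode(file("${path.module}/items.yaml"))

  # Option 2: plain text with one value per line; compact() drops the
  # empty string left behind by a trailing newline.
  items_from_text = compact(split("\n", file("${path.module}/items.txt")))
}

output "item_counts" {
  value = {
    yaml = length(local.items_from_yaml)
    text = length(local.items_from_text)
  }
}
```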

I’ll look into yamldecode. I’m also wondering if I could have just used a data source for the initial load of values.

if you can output to json instead, you can write <foo>.auto.tfvars.json to your tf directory, and terraform will read it automatically and assign the tfvars

Input variables are parameters for Terraform modules. This page covers configuration syntax for variables.

Terraform also automatically loads a number of variable definitions files if they are present:

- Files named exactly terraform.tfvars or terraform.tfvars.json.
- Any files with names ending in .auto.tfvars or .auto.tfvars.json.
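A sketch of the .auto.tfvars.json route: declare the variable once and let the bash/yq step write the JSON file next to it. The variable name `items` and the file name are assumptions.

```hcl
# variables.tf: declare the list once.
variable "items" {
  type = list(string)
}

# items.auto.tfvars.json (hypothetical name) is written by the bash/yq step
# and loaded automatically; its content would look like:
#   { "items": ["value-1", "value-2", "value-3"] }

output "item_count" {
  value = length(var.items)
}
```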

i’ve also used terragrunt to do this kind of pre-processing

or maybe try pretf, https://github.com/raymondbutcher/pretf

Generate Terraform code with Python. Contribute to raymondbutcher/pretf development by creating an account on GitHub.

here’s an example of using terragrunt and hcl string templating to massage some input into an auto.tfvars file… i think .auto.tfvars.json is better, but you can pick your own poison… https://github.com/gruntwork-io/terragrunt/issues/1121#issuecomment-610529140

Is there any way to disable the addition of a comment when using generate blocks? I was attempting to write an .auto.tfvars.json file, and it is written fine, but terragrunt injects a comment into …

Thanks @loren Those are awesome options.

Merge Issue With Terraform Maps

    locals {
      default_settings = {
        topics                          = ["infrastructure-as-code", "terraform"]
        gitignore_template              = "Terraform"
        has_issues                      = false
        has_projects                    = false
        auto_init                       = true
        default_branch                  = "master"
        allow_merge_commit              = false
        allow_squash_merge              = true
        allow_rebase_merge              = false
        archived                        = false
        template                        = false
        enforce_admins                  = false
        dismiss_stale_reviews           = true
        require_code_owner_reviews      = true
        required_approving_review_count = 1
        is_template                     = false
        delete_branch_on_merge          = true
      }
    }

Ok… this is what I’m trying to do

Default settings above… new item below

    repos = {
      terraform-aws-foobar = {
        description  = ""
        repo_creator = "willy.wonka"
        settings     = merge(local.default_settings, {})
      }
    }

But when I add some map key values that I want to override, they don’t seem to get picked up, even though with merge the last argument should win for simple (not deep) merges.

    repos = {
      terraform-aws-foobar = {
        description  = ""
        repo_creator = "willy.wonka"
        settings = merge(
          local.default_settings,
          { topics = ["terraform", "productivity", "github-templates"] },
          { is_template = true }
        )
      }
    }

Any ideas before I go to devops.stackexchange.com or terraform community?

what is posted should work, i think. do you have an error? or an output displaying exactly what is not working?

It just doesn’t seem to pick up the “override” values from the is_template = true when the default at the top was false. No changes detected. Applying my new topics also doesn’t get picked up. This doesn’t align with my understanding of merge in hcl2

i do exactly this quite a bit, and it does indeed work. hence, we need to see what you are seeing

try creating a reproduction case with your locals and just an output so we can see what the merge produces

Well a good example would be running terraform console on this

I just created a new repo. I added topics to it, like you see above. The new topics I’m overriding don’t even show

    terraform-devops-datadog = {
      description  = "Datadog configuration and setup managed centrally through this"
      repo_creator = "me"
      settings = merge(local.default_settings, {
        topics = ["observability"]
      })
    }

and when i run it, it doesn’t show topics at all in the properties list

Yeah, so I just ran console against a specific one that has the topics override; it shows topics as empty, not taking it at all

this item

    devops-stream-analytics = {
      description  = "foobar"
      repo_creator = "sheldon.hull"
      settings = merge(local.default_settings, {
        topics = [
          "analytics",
          "telemetry",
          "azure"
        ]
      })
    }

Here

    > github_repository.repo["devops-stream-analytics"]
    {
      "allow_merge_commit" = false
      "allow_rebase_merge" = false
      "allow_squash_merge" = true
      "archived" = false
      "auto_init" = true
      "default_branch" = "master"
      "delete_branch_on_merge" = true
      "description" = "foobar"
      "etag" = "foobar"
      "full_name" = "foobar"
      "git_clone_url" = "foobar"
      "gitignore_template" = "Terraform"
      "has_downloads" = false
      "has_issues" = false
      "has_projects" = false
      "has_wiki" = false
      "homepage_url" = ""
      "html_url" = "https://github.com/foobar"
      "http_clone_url" = "https://github.com/foobar"
      "id" = "devops-stream-analytics"
      "is_template" = false
      "name" = "devops-stream-analytics"
      "node_id" = "MDEwOlJlcG9zaXRvcnkyNTQ3NTEwMzc="
      "private" = true
      "ssh_clone_url" = "git@github.com:foobar.git"
      "svn_url" = "https://github.com/foobar"
      "template" = []
      "topics" = [] <------ this should be overridden by my logic?
    }

see the last item. It’s empty. Not sure why my merge syntax would fail as you see above.

looks fine to me?

    $ cat main.tf
    locals {
      default_settings = {
        topics                          = ["infrastructure-as-code", "terraform"]
        gitignore_template              = "Terraform"
        has_issues                      = false
        has_projects                    = false
        auto_init                       = true
        default_branch                  = "master"
        allow_merge_commit              = false
        allow_squash_merge              = true
        allow_rebase_merge              = false
        archived                        = false
        template                        = false
        enforce_admins                  = false
        dismiss_stale_reviews           = true
        require_code_owner_reviews      = true
        required_approving_review_count = 1
        is_template                     = false
        delete_branch_on_merge          = true
      }
    }

    locals {
      repos = {
        devops-stream-analytics = {
          description  = "foobar"
          repo_creator = "sheldon.hull"
          settings = merge(local.default_settings, {
            topics = [
              "analytics",
              "telemetry",
              "azure"
            ]
          })
        }
      }
    }

    output "repos" {
      value = local.repos
    }

    $ terraform apply

    Apply complete! Resources: 0 added, 0 changed, 0 destroyed.

    Outputs:

    repos = {
      "devops-stream-analytics" = {
        "description" = "foobar"
        "repo_creator" = "sheldon.hull"
        "settings" = {
          "allow_merge_commit" = false
          "allow_rebase_merge" = false
          "allow_squash_merge" = true
          "archived" = false
          "auto_init" = true
          "default_branch" = "master"
          "delete_branch_on_merge" = true
          "dismiss_stale_reviews" = true
          "enforce_admins" = false
          "gitignore_template" = "Terraform"
          "has_issues" = false
          "has_projects" = false
          "is_template" = false
          "require_code_owner_reviews" = true
          "required_approving_review_count" = 1
          "template" = false
          "topics" = [
            "analytics",
            "telemetry",
            "azure",
          ]
        }
      }
    }

hmm. What terraform version are you running?

$ terraform -version

Terraform v0.12.24
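Since the same merge evaluates correctly as a plain output, one plausible cause is that `github_repository.repo` never passes the merged settings through to its arguments. This is an assumption, because the resource block isn't shown in the thread. A sketch of a for_each resource that wires them explicitly:

```hcl
resource "github_repository" "repo" {
  for_each = local.repos

  name        = each.key
  description = each.value.description
  private     = true

  # Each merged setting has to be mapped to an argument explicitly;
  # a key that only exists in local.repos never reaches GitHub.
  topics                 = each.value.settings.topics
  is_template            = each.value.settings.is_template
  has_issues             = each.value.settings.has_issues
  has_projects           = each.value.settings.has_projects
  auto_init              = each.value.settings.auto_init
  gitignore_template     = each.value.settings.gitignore_template
  allow_merge_commit     = each.value.settings.allow_merge_commit
  allow_squash_merge     = each.value.settings.allow_squash_merge
  allow_rebase_merge     = each.value.settings.allow_rebase_merge
  delete_branch_on_merge = each.value.settings.delete_branch_on_merge
}
```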

2020-05-12

More terraform thinking out loud. I’ve been reading some of the cloudposse repos just getting a feel for how other organisations do terraform. The scale at my organization is small (handful of engineers and we will have at most 5 aws accounts across one maybe 2 regions) In the past I have handled all IAM in a single “root” module

This has its pros and cons

I’ve noticed in some cloudposse examples IAM resources are created alongside other resources. e.g an ECS service and the role it uses may be defined together. this is massively convenient

But it’s easier to end up with issues like: Team A creates a role called “pipeline_foo” and Team B (in another IAM state) creates a role called “pipeline_foo”

They have no indication until the apply phase fails that this is an issue

As I move towards Terraform Cloud I also see the advantage of having a single IAM state / workspace

As just that state can be delegated to a team that manage IAM

Anyone have strong feelings on either approach?

Personally I prefer to have unique IAM resources for each module, as it’s an easily repeatable and self-contained solution. IMO the label module helps to avoid naming collisions

encourage them to use name_prefix instead of name?
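A sketch of the name_prefix suggestion: Terraform appends a generated unique suffix, so two states using the same prefix don't collide at apply time. The prefix and the ECS tasks trust policy are illustrative.

```hcl
# Trust policy letting ECS tasks assume the role (illustrative).
data "aws_iam_policy_document" "ecs_tasks_assume" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ecs-tasks.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "pipeline" {
  # Terraform appends a unique suffix, e.g. "pipeline_foo-2020...".
  name_prefix        = "pipeline_foo-"
  assume_role_policy = data.aws_iam_policy_document.ecs_tasks_assume.json
}
```

The trade-off is that the final name is only known after apply, so anything outside Terraform that references the role has to read it from outputs.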

ask them what they do when they deploy the same tf module more than once?

This is not an issue with modules having hardcoded names. The “submodules” are very configurable, but the root module in this case defines a very specific configuration and should only be deployed once per account

My worry is that if IAM resources are created in dozens of states it could become very hard to reason about the state of IAM in an account

I hope that makes sense

@vFondevilla That is an interesting solution. Although for example if that module defines a simple barebones ecs_task_execution_role and you deploy 20 copies of that module

well alright, but that was the scenario presented

You end up with 20 ecs_task_execution_role_some_id with identical policies attached? That always felt a bit wrong to me. If they have different policies great but just having many copies of identical IAM resources seems redundant

iam policies have no costs. multiple instances of a policy with the same permissions is worth it to us, for the flexibility of a self-contained module. i suppose we tend to reason about the service we are creating. not the state of an aws service as a whole

That is interesting. Its great to talk about this stuff. Historically I have had a very “service centric” split of state

Yes, that’s the situation in my accounts. Each ECS service has its own IAM execution role managed by the terraform module. This enables us to repeatedly deploy the module in one of the multiple aws accounts we’re using

While it can be easier to reason about / delegate responsibility, it does also lead to a lot of remote state hell and dependency hell

e.g. I need to update the vpc state to add a security group, and then I need to use remote state or data sources in my ecs state to refer to that security group

Maybe I should relax my service per state thinking

we do separate IAM for humans into its own module and control it centrally. but IAM for services goes into the service module

and move towards an “application” or “logical grouping of resources” per state

Yes for IAM for humans I tend to just create a module that uses lots of the modules in https://github.com/terraform-aws-modules/terraform-aws-iam

Terraform module which creates IAM resources on AWS - terraform-aws-modules/terraform-aws-iam

And that is deployed in a single account

Thanks for rubber ducking this. Do you ever end up with the opposite problem to me? i.e. because IAM roles are defined alongside the service, can it be difficult, for example, to allow access to another principal? Because it’s created in another module and you can have chicken-and-egg problems?

Or would a scenario like that be moved out to another state

I’m probably overthinking this a bit. It’s a greenfield project, so I’m trying not to make any decisions that will shoot me in the foot in 12 months

I’m trying to avoid state dependencies just to simplify my life and reduce the steep learning curve for the rest of the team, so sometimes, instead of referencing the remote state managed via another module, I’m using data sources as inputs for the modules. In our case it’s working great with a team that didn’t know anything about Terraform before I joined.

the thing that shoots us in the foot is when we do use iam resources (or most anything else, really) managed in a different state. when we keep all the resources contained in the services modules, it stays clean

Do you mean you use data sources for most module inputs @vFondevilla something like this?

    data "aws_vpc" "default" {
      default = true
    }

    data "aws_subnet_ids" "all" {
      vpc_id = data.aws_vpc.default.id
    }

    module "db" {
      vpc_id  = data.aws_vpc.default.id
      subnets = data.aws_subnet_ids.all.ids
      #.......
    }

yep

Sounds good @loren I will definitely try out this approach. I think it will save me some pain going forward

Much easier than explaining to someone with barely any experience with Terraform modules how to use remote state and its possible ramifications. It’s pretty manual when deploying new modules (as you have to change the data sources and/or variables of the module), but in our case it’s working well.

Yeah I find data sources a cleaner solution than remote state where possible. Nothing worse than the “output plumbing” required when you need a new output from a nested module for example

Hey guys, I’m having an issue after adding a VPC to my Terraform (0.12) config; before, I was using the default VPC to extract info like subnet IDs etc. Error: Invalid for_each argument

on resource.tf line 63, in resource “aws_efs_mount_target” “efs”: 63: for_each = toset(module.vpc-1.private_subnets)

The “for_each” value depends on resource attributes that cannot be determined until apply, so Terraform cannot predict how many instances will be created. To work around this, use the -target argument to first apply only the resources that the for_each depends on. How can I solve this?

You can try this

for_each = toset(compact(module.vpc-1.private_subnets))

this gives the same error: Error: Invalid for_each argument

on resource.tf line 63, in resource “aws_efs_mount_target” “efs”: 63: for_each = toset(compact(module.vpc-1.private_subnets))

The “for_each” value depends on resource attributes that cannot be determined until apply, so Terraform cannot predict how many instances will be created. To work around this, use the -target argument to first apply only the resources that the for_each depends on.
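One commonly used workaround, sketched under assumptions: for_each needs its keys known at plan time, but (as far as I know, in 0.12) the values may still be unknown. So the map can be keyed by something static, such as the AZ list passed to the VPC module, with the subnet ID as the value. `local.private_subnet_azs` and the EFS file system are hypothetical. The other option is the one the error itself suggests: run `terraform apply -target=module.vpc-1` first.

```hcl
locals {
  # Hypothetical: the same AZ list passed to module "vpc-1" as its azs
  # input, so these keys are known at plan time.
  private_subnet_azs = ["us-east-1a", "us-east-1b"]
}

resource "aws_efs_file_system" "efs" {
  creation_token = "example-efs" # hypothetical
}

resource "aws_efs_mount_target" "efs" {
  # Keys come from a static list; values (subnet IDs) may stay unknown
  # until apply. Assumes the module's private_subnets output follows
  # the same order as its azs input.
  for_each = {
    for idx, az in local.private_subnet_azs : az => module.vpc-1.private_subnets[idx]
  }

  file_system_id = aws_efs_file_system.efs.id
  subnet_id      = each.value
}
```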

Hello everyone

I am using the cloudposse module for VPC peering and I’m having an issue. Can anyone help me with it ASAP? Thank you

@maharjanaabhusan please post your actual question so that someone can answer

Error: Invalid count argument

on .terraform/modules/vpc_peering-1/main.tf line 62, in resource “aws_route” “requestor”: 62: count = var.enabled ? length(distinct(sort(data.aws_route_tables.requestor.0.ids))) * length(data.aws_vpc.acceptor.0.cidr_block_associations) : 0

The “count” value depends on resource attributes that cannot be determined until apply, so Terraform cannot predict how many instances will be created. To work around this, use the -target argument to first apply only the resources that the count depends on.

    module "vpc_peering-1" {
      source           = "git://github.com/cloudposse/terraform-aws-vpc-peering.git?ref=master"
      namespace        = "eg"
      stage            = "test-1"
      name             = "peering-1"
      requestor_vpc_id = module.vpc1.vpc_id
      acceptor_vpc_id  = module.vpc3.vpc_id
    }

Terraform module to create a peering connection between two VPCs in the same AWS account. - cloudposse/terraform-aws-vpc-peering

Have you already created routes in your VPC? The count depends on the output of:

- data.aws_route_tables.requestor.0.ids

- data.aws_vpc.acceptor.0.cidr_block_associations


I am using cloudposse’s AWS VPC module.

try to use https://github.com/cloudposse/terraform-aws-vpc-peering/blob/master/examples/complete/main.tf


they create

• vpc

• subnets

• peering

probably you are missing subnets creation

RTFM

requestor_vpc_id = module.vpc1.vpc_id

acceptor_vpc_id = module.vpc3.vpc_id

are these VPCs created?

Yes

I think @maharjanaabhusan and I have the same problem

Does anyone here have a Terraform example for provisioning a forecast monitor in DataDog? Not sure it’s possible with the current provider, couldn’t find any examples for this.

forecast metric and a monitor on that metric ?

usually that is how it works

yeah, I think I got it

an example would be nice though. . . for that provider

@Matt do you still need help with that one?

@Julio Tain Sueiras think I have it now

need to test tomorrow

it’s basically just a variant of a query alert
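A sketch of a forecast monitor as a query alert. The metric, threshold, message and notification handle are hypothetical. The `forecast()` argument list is an assumption based on Datadog's forecast monitor docs; the safest source for the exact query string is the one the Datadog monitor editor generates.

```hcl
resource "datadog_monitor" "disk_forecast" {
  name    = "Disk usage forecast to exceed 90% within a week" # hypothetical
  type    = "query alert"
  message = "Disk on {{host.name}} is forecast to fill up. @slack-ops" # hypothetical handle

  # Hedged: copy the exact forecast() query from the Datadog UI.
  query = "max(next_1w):forecast(avg:system.disk.in_use{*} by {host}, 'linear', 1, interval='60m', history='1w', model='default') >= 0.9"
}
```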

2020-05-13

v0.12.25 NOTES: backend/s3: Region validation now automatically supports the new af-south-1 (Africa (Cape Town)) region. For AWS operations to work in the new region, the region must be explicitly enabled as outlined in the AWS Documentation. When the region is not enabled, the Terraform S3 Backend will return errors during credential validation (e.g. error validating provider credentials:…

Learn how to enable and disable AWS Regions.

Does anyone know of a terraform plan review tool? Something like GitHub Pull Requests but for Terraform Plan? I know Atlantis will comment on a PR with the plan and allow review and what not, but I would love a tool that I can push a plan to it and then discuss that plan with my team.

Push plan to Jira?

Hahah that strikes a chord. I do PM / Architecture consulting for one client and I use Jira too much as it is. I think that’d be my nightmare.

I’m going to guess after my quick google search that there is no such tool. Which is interesting to me… Too many ideas, not enough time.

You get that with terraform cloud

Use your ci platform to report the plan as a test result on the pr in Github?

the ability to comment on the plan

Huh, I thought about it and looked at my TF Cloud project for one client. It doesn’t go to the discussion level that I wanted, but maybe that solves enough of the problem. I just haven’t used it enough.

I was hoping to see something that has the ability to comment on a line by line change in a plan so I can explain / discuss the specific results of resource change to non-ops team members before we move it forward.

That’s an interesting idea. Atlantis had to work around Terraform plans hitting the max character length in GitHub comments by splitting up the plan into multiple comments. Sticking the plan output into a file in the pull request would get around the comment length limitation, and allow for inline commenting.

I have a to-do item to implement terraform in GitHub Actions in my org. When I get around to that task I’ll see if I can add the plan to the pull request as a file when the PR is opened.

Allowing the CI/CD process to change the contents of a commit or add a commit poses its own challenges, but we already have to deal with it using @lerna/version

I guess you could have a dedicated repo for plan artifacts and commit to that via automation and open pull request and all. That sounds like what you need, but dang the house of cards starts getting higher. How about just the review in page and then fail fast and fix if an issue :-)

you could fork atlantis and add what you need

time to learn golang

Took my first swing at some Go terraform-tfe SDK stuff today to create runs from Azure DevOps/CLI. Learned a bunch, including finding a much more mature project with great examples. Might fork and modify it a bit. Looks like with this go-tfe based project you can easily run Terraform Cloud actions from GitHub Actions now. Super cool!

I’m going to modify this probably to accept command line args for my first PR on a go project https://github.com/kvrhdn/tfe-run

GitHub Action to create a new run on Terraform Cloud - kvrhdn/tfe-run

slick


2020-05-14

Hi All, I’m looking for a solution for managing/creating multiple AWS Route53 zones/records. Any suggestions?

What’s the problem? Terraform’s AWS R53 resources work as advertised.

    resource "aws_route53_zone" "main" {
      count = length(var.domains) > 0 ? length(var.domains) : 0
      name  = element(var.domains, count.index)
    }

for CNAME record, how can i create for specific domain?

    resource "aws_route53_record" "www" {
      count   = length(aws_route53_zone.main)
      zone_id = element(aws_route53_zone.main.*.zone_id, count.index)
      name    = "www"
      type    = "CNAME"
      ttl     = "300"
      records = ["test.example.com"]
    }

don’t know if I understand specifically but: count and index are blunt instruments and often will fail you when you try to implement more complex use cases

my use cases is, i’m working on creating a poc with route53 using terraform.

I had to create multiple domains in route53 and take care of operation tasks like adding new/updating/deleting records

i haven’t tried with map and for_each. i will give a try

yeah, for_each is better. count would be a disaster there, it would likely want to delete and recreate your zones when you change var.domains
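A sketch of the for_each version, keyed by domain name. Adding or removing one domain then only touches that key instead of shifting indexes. The domains, the record map shape and the variable names are hypothetical.

```hcl
variable "domains" {
  type    = set(string)
  default = ["example.com", "example.org"] # hypothetical
}

# One entry per record, keyed by a stable name rather than a list index.
variable "cname_records" {
  type = map(object({
    zone   = string
    name   = string
    target = string
  }))
  default = {
    "www.example.com" = { zone = "example.com", name = "www", target = "test.example.com" }
  }
}

resource "aws_route53_zone" "main" {
  for_each = var.domains
  name     = each.value
}

resource "aws_route53_record" "cname" {
  for_each = var.cname_records

  # Look the zone up by its domain key instead of by count.index.
  zone_id = aws_route53_zone.main[each.value.zone].zone_id
  name    = each.value.name
  type    = "CNAME"
  ttl     = 300
  records = [each.value.target]
}
```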

Thank you, i will give a try and come back

Hey y’all, I did something really dumb and I’m still a bit too green at Terraform to understand how to resolve it. I’m keeping multiple state workspaces in S3 with a DynamoDB lock table. I wanted to purge one of the workspaces and rebuild things from scratch. I didn’t have any resources outstanding, so I blindly deleted the state file directly from S3. Now I can’t rebuild it, I suspect because the lock table expects it to exist. Is there any way to get back to a blank slate from here so that I can start over for this particular workspace?

go into dynamodb and clear the lock table…

Okay, thank you. I haven’t worked with DynamoDB yet and was worried I might break something else by clearing it. I’m especially cautious because I’ve already dug myself into a deep enough hole.

nah, much more likely to break something on the s3 side. the dynamodb table is very lightweight

if you haven’t already, highly recommend turning on versioning for any s3 bucket you use for tfstate
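A minimal sketch of a versioned state bucket plus the lock table the S3 backend uses. The bucket and table names are hypothetical, and `aws_s3_bucket_versioning` assumes AWS provider v4 or later (older providers used an inline `versioning { enabled = true }` block on `aws_s3_bucket`).

```hcl
# Hypothetical bucket name; S3 bucket names are globally unique.
resource "aws_s3_bucket" "tfstate" {
  bucket = "example-org-terraform-state"
}

# Keep every previous version of each state object, so an accidental
# delete only adds a delete marker and the prior version can be restored.
resource "aws_s3_bucket_versioning" "tfstate" {
  bucket = aws_s3_bucket.tfstate.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Lock table for the S3 backend: it must have a string hash key named LockID.
resource "aws_dynamodb_table" "tflock" {
  name         = "example-terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```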

yeah, as soon as I realized what was going on, I wished that I had.

you might have a copy of the tfstate locally, from your last apply

check the .terraform directory wherever you ran terraform from

I wish. It was a new terraform script that I had only applied in Jenkins.

ah, well, jenkins. there’s the problem

I think I actually have my script working fine at this point, but I ran taint locally (which was on 0.12.25) and it bumped the Terraform version recorded in the state file. I can only automatically install up to 0.12.24 on Jenkins at the moment. :x

Oof. Yeah, might also want to add a terraform version constraint…

we do this in all our root modules:

terraform {
  required_version = "0.12.24"
}

pin the version, then update it intentionally as a routine task
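A sketch of extending the same pin to providers, assuming Terraform 0.12 syntax (the `name = "version"` shorthand inside `required_providers`). The AWS provider version shown is illustrative only.

```hcl
terraform {
  # Exact pin: laptops and CI must all run this Terraform version, so a
  # newer local binary is rejected instead of bumping the version recorded
  # in the shared state file.
  required_version = "0.12.24"

  required_providers {
    # Illustrative version number; pin whatever release you have tested.
    aws = "2.61.0"
  }
}
```

With both pinned, upgrading becomes a deliberate commit that changes these strings, which matches the "update it intentionally as a routine task" approach.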

Does anyone have thoughts on how to scale Terraform when working with thousands of internal customers? There would be about 10 concurrent requests at a time. There’s a talk Uber did where they said DevOps tools don’t scale when you’re dealing with 100k servers, so there’s some upper limit. What would you say the limit is for Terraform? Is there a way to wrap Terraform in an API call, so an application platform could pass a few parameters to a Terraform module and have it render the Terraform?

I don’t know if you could do this, but maybe you could fork Atlantis (runatlantis.io) and change it to receive a regular REST request instead of a webhook payload.

That’s an idea. I remember there is a company that created a service catalog for Terraform templates. I couldn’t find the company, but I did run into this when I did a Google search for it. https://www.hashicorp.com/products/terraform/self-service-infrastructure/

Enable users to easily provision infrastructure on-demand with a library of approved infrastructure. Increase IT ops productivity and developer speed with self-service provisioning

It doesn’t go into details on how the self-service part works.

Self-service = pay for nomad and terraform enterprise

If Terraform Enterprise can provide self-service, that would be a plus if you can afford it. For larger companies, it would pay for itself in not having engineers reinvent the wheel.

my old team did some work on addressing scaling issues on one of the largest terraform enterprise installations deployed - i’d say if you’re getting to that size you want to divide the scope of what an individual deployment of TFE is addressing - maybe broken down by business units or some other logical boundary

there is an upper limit and it gets ugly when things go wrong at that limit

so i’m a bit confused here… where is the concern about scaling?

when working with thousands of internal customers

or

when you’re dealing with 100k servers, so there’s some upper limit.

And I think they are different problems to optimize for.

If you have thousands of internal customers (e.g. developers), I still would imagine it scales well, if:

• massively bifurcated terraform state across projects and AWS accounts

• teams have their own projects, aws accounts

• teams are < 20 developers on average

then i really don’t see how terraform would have technical scaling challenges e.g. by hitting AWS rate limits.
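One way to picture "bifurcated state" is a small root module per team and component, each with its own state key. A minimal sketch, where the bucket, key layout, region, and table name are all hypothetical:

```hcl
terraform {
  backend "s3" {
    bucket         = "example-org-terraform-state"                  # hypothetical
    key            = "team-payments/prod/network/terraform.tfstate" # one key per team/env/component
    region         = "us-east-1"
    dynamodb_table = "example-terraform-locks"                      # hypothetical
    encrypt        = true
  }
}
```

Backend blocks cannot use variables, so if many root modules share one template, the per-team values are usually supplied at init time through partial configuration (`terraform init -backend-config=...`) instead of being hard-coded.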

terraform itself, yes, but terraform enterprise (as in the server) can hit scaling issues at high usage

aha, gotcha - i could see that with something like terraform enterprise.

and (at least when we were working on it a while back - older versions) did not scale out well

i imagine that they’ve implemented a lot of lessons learned in deploying the new terraform cloud platform

but with standard CI/CD systems that can fan out to workers, I see it as less of a problem.

yeh - as long as you can scale your worker fleet then it should be easier

the practical problem I see is enforcing policies at that scale.

i’m in a bit of a quandary there between opa and sentinel - i really really like opa

Ya, same. OPA is where I’d want to put my investment.

but then going against the TFE ecosystem

i mean if you’re not going to use TFE as your runner it makes sense

I was also thinking of scaling in terms of management and implementation. For example, if a core team of, say, 10 developers is writing code for thousands of internal teams, each with their own accounts/projects, that doesn’t scale well. So the urge is to create some system with a GUI where end users can go to request their infrastructure, with Terraform underneath. What I don’t get is how to use Terraform once you scale beyond what manually writing code in a GitOps fashion can handle.

generally i’d say you want to scale out to teams via code rather than a gui

but i realise that that’s not always a possible reality for some enterprises

somewhat embarrassed to admit i built a demo PoC for basically this before it existed: https://www.terraform.io/docs/cloud/integrations/service-now/index.html

ServiceNow integration to enable your users to order Terraform-built infrastructure from ServiceNow

I was reading about that. It looks pretty cool. I think what the company I’m working with now wants to do is integrate Terraform with their own infrastructure request toolchain.

that’s something that i’ve seen asked for a lot - i’ve worked on projects to build it a couple of times. i understand why it’s a reality - i just don’t think i’d ever want to have to request infrastructure in that way

as an engineer on the other side of the gui i’d probably hate it

but that’s possibly because i’ve always had the privilege of not being blocked by systems of approval like that

enterprise realities kind of suck

which is why i don’t work in enterprise

i know realistically that a system to request from a service catalogue like that is 1000x better than what a lot of enterprises are dealing with today

@Erik Osterman (Cloud Posse) can you expand on this: https://sweetops.slack.com/archives/CB6GHNLG0/p1589502664287900?thread_ts=1589496149.282000&cid=CB6GHNLG0

but then going against the TFE ecosystem

I have read about OPA, and every time I go to the website it looks too good to be true.

I keep thinking about how I’m going to somehow get charged, or screwed for lack of support or something.

2020-05-15

find . -name '*.tf' -exec sed -i 's/\(= \+\)"${\([^{}]\+\)}"/\1\2/' {} +
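The one-liner above appears to target Terraform 0.11-style "interpolation-only" strings, where an attribute value is nothing but a single `"${...}"`. A sketch of the before/after it produces; the variable and bucket resource are hypothetical, chosen only for illustration:

```hcl
variable "bucket_name" {
  type = string
}

resource "aws_s3_bucket" "example" {
  # Terraform 0.11 style, which the sed pattern matches:
  #   bucket = "${var.bucket_name}"
  # Terraform 0.12+ style after the rewrite:
  bucket = var.bucket_name
}
```

The pattern requires the closing `}` to be followed immediately by `"` and forbids braces inside, so mixed strings such as `"${var.name}-suffix"` and nested expressions are left alone. Terraform 0.12 also ships a `terraform 0.12upgrade` command for this kind of migration.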

has anyone used this and have any opinions about it? https://github.com/liatrio/aws-accounts-terraform

Contribute to liatrio/aws-accounts-terraform development by creating an account on GitHub.

or is control tower to be preferred?

Hi all, I have my kubeconfig as a Terraform local value after the EKS deployment. When I try to run the command “echo ${local.kubeconfig} > ~/.kube/config” using the null_resource command option, I get a “command not found” error, I guess because the multi-line local.kubeconfig value gets substituted into the command. I need help on how to run this command. Right now I am leaving Terraform and running “terraform output > ~/.kube/config”. Any help achieving this with null_resource or any other Terraform resource?

I ended up outputting my kubeconfig(s) with local_file and storing them back in source control. I reference them in null_resource with --kubeconfig=${local_file.kubeconfig.filename}. It’s not the most elegant solution, but neither is using null_resource for kubectl apply.
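A minimal sketch of the local_file approach in a single run. It assumes the rendered kubeconfig is available as a string (passed in here as a hypothetical `kubeconfig` variable instead of `local.kubeconfig`); the output filename and the `kubectl get nodes` command are placeholders.

```hcl
# Assumption: the rendered kubeconfig string from the EKS deployment.
variable "kubeconfig" {
  type = string
}

# Write the multi-line kubeconfig to disk instead of echoing it through a
# shell, which avoids the newline/quoting problems with echo.
resource "local_file" "kubeconfig" {
  content         = var.kubeconfig
  filename        = "${path.module}/kubeconfig_generated" # hypothetical path
  file_permission = "0600"
}

resource "null_resource" "kubectl" {
  # Re-run the provisioner whenever the kubeconfig content changes.
  triggers = {
    kubeconfig_sha1 = sha1(var.kubeconfig)
  }

  provisioner "local-exec" {
    # Referencing local_file.kubeconfig makes Terraform create the file first.
    command = "kubectl --kubeconfig=${local_file.kubeconfig.filename} get nodes"
  }
}
```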

Thanks for the reply. Can this be achieved in a single terraform run, i.e. generating the kubeconfig and copying it to the config path? Also, can we use --kubeconfig=${local_file.kubeconfig.filename} like this inside null resources?

Please ignore my previous message; I understood your answer. Thanks for the help. I will try it and get back if there are any issues.

Question: how am I supposed to run the tests? In a container, or does calling the Makefile work? I’m trying to upgrade the IAM module, but first I want to know how you guys are writing tests for the other modules.

Keeping my eyes on this. You can see some stuff on this in some repos, but I haven’t had the capacity to dive into tests yet.

2020-05-16

I am trying to get a Kubernetes pod IP. I have launched a Kubernetes deployment with Terraform, but I don’t see the pod’s IP address in the terraform.tfstate file. I need to use that POD IP address to bring another POD. How do I get the IP of a Kubernetes pod launched by Terraform? Any help would be great!

I’d really appreciate any help on this.

I’m not sure you would use the pod IP for that.

What do you mean by:

I need to use that POD IP address to bring another POD

In Kubernetes, pods get assigned ephemeral IP addresses that change when a pod is moved, restarted, or scaled. Communicating with a pod by its IP would not be a good experience. Instead, Kubernetes relies on DNS names and Kubernetes Service resources.

A good place to start to understand this is: http://www.youtube.com/watch?v=0Omvgd7Hg1I&t=10m0s

The whole talk is great but the relevant part is at 10 minutes in.

Actually, I need the new pod to get some information from that pod. Anyway, let me check the DNS config; as you say, that would be the appropriate approach.

I will go over this video.

One basic question: I have never tried DNS config. Is there a default DNS config we can use, or do we need a DNS server configured in the K8s cluster and then set a DNS name for a particular pod?

BTW, thank you for the response, I really appreciate it.

A good place to start to understand DNS and services in Kubernetes is: https://kubernetes.io/docs/concepts/services-networking/dns-pod-service

If your deployments (and the pods they create) are in the same namespace, then once you’ve created the service, you’ll be able to reach the pods through it using just the host part of its DNS name, i.e. the service name.

Hmm, I get it. It really makes sense to go with a Service.

Say I have a Ghost blog in namespace my-blog and a Percona DB server in my-blog - I’d create a Service of type ClusterIP for the database server named db and my Ghost blog could access it with the host name db

I was trying out a raw approach here; this looks like a complete solution.

If I were to deploy the DB server into another namespace, I could reference it with its FQDN: db.other-namespace.svc.cluster.local
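A minimal sketch of the `db` Service from the Ghost/Percona example, written with the Terraform Kubernetes provider. It assumes the `my-blog` namespace already exists, that the DB pods carry a hypothetical `app = "percona"` label, and that the server listens on the MySQL default port 3306.

```hcl
resource "kubernetes_service" "db" {
  metadata {
    name      = "db"      # other pods in my-blog can use the host name "db"
    namespace = "my-blog" # elsewhere: db.my-blog.svc.cluster.local
  }

  spec {
    type = "ClusterIP"

    # Hypothetical label; it must match the labels on the DB pods.
    selector = {
      app = "percona"
    }

    port {
      port        = 3306
      target_port = 3306
    }
  }
}
```

Because clients connect to the Service name rather than a pod IP, the DB pods can be restarted or rescheduled without the Ghost configuration changing.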

Another fantastic talk to watch to understand how all these Kubernetes resources interact is this video: https://www.youtube.com/watch?v=90kZRyPcRZw

OK, that sounds clear. For now I can go with the same namespace, which should be fine for my case and makes it simple for me to refer to.

Thanks Tim, I guess these details will really help me get started in these areas, which are new to me as a k8s beginner.

Does anyone have a Lambda for provisioning the databases inside an RDS Postgres instance? The problem we’re trying to solve is creating the databases (e.g. grafana, keycloak, and vault) on the RDS cluster without direct access, since it’s in a private VPC. In this case we do not have #atlantis or VPN connectivity to the VPC. Looking for a solution we can use in pure Terraform.

mm we do have lambdas that interact with the db but not to create them

usually we create from a snapshot

can you just create a snapshot with the schema and whatever data you need and put it on a s3 bucket ?

or share the snapshot
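A minimal sketch of the snapshot route for a Postgres instance, assuming a snapshot that already contains the grafana, keycloak, and vault databases exists and is shared with the account. The identifiers, instance class, and variables are hypothetical. The `s3_import` alternative discussed next only applies to MySQL backups, so it would not cover Postgres; an Aurora cluster would use `aws_rds_cluster`, which also accepts `snapshot_identifier`.

```hcl
variable "seed_snapshot_id" {
  description = "Hypothetical: snapshot that already contains the app databases"
  type        = string
}

variable "db_subnet_group_name" {
  description = "Hypothetical: existing subnet group in the private VPC"
  type        = string
}

resource "aws_db_instance" "app" {
  identifier                = "app-postgres" # hypothetical
  instance_class            = "db.t3.medium" # hypothetical
  snapshot_identifier       = var.seed_snapshot_id
  db_subnet_group_name      = var.db_subnet_group_name
  publicly_accessible       = false
  final_snapshot_identifier = "app-postgres-final" # hypothetical

  # engine, allocated_storage and the master username come from the snapshot.

  lifecycle {
    # Changing snapshot_identifier later would force a replacement,
    # so ignore it after the initial restore.
    ignore_changes = [snapshot_identifier]
  }
}
```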

Ohh that is an interesting idea. The s3 import option.

@Jeremy G (Cloud Posse)

The only downside is that it needs to be in the Percona XtraBackup format, but I haven’t looked at it yet.