#terraform (2021-08)

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2021-08-01

Good evening everyone. I’m new here and hopefully you don’t mind a really noobish question but i’m trying to add my lambda functions dynamically to the api gateway integration like so:

// need to do it dynamically somehow but it won't let me assign the key name with variable

dynamic "integrations" {

for_each = module.lambdas

content {

"ANY /hello-world" = {

lambda_arn = module.lambdas["hello-world"].lambda_function_arn

payload_format_version = "2.0"

timeout_milliseconds = 12000

}

}

}

// this works

// integrations = {

// "ANY /hello-world" = {

// lambda_arn = module.lambdas["hello-world"].lambda_function_arn

// payload_format_version = "2.0"

// timeout_milliseconds = 12000

// }

// }

Any help on how to achieve this in tf would be awesome!

dynamic "integrations" {

for_each = module.lambdas

content {

(integrations.values.lambda_function_name) = {

lambda_arn = integrations.values.lambda_function_arn

payload_format_version = "2.0"

timeout_milliseconds = 12000

}

}

}

The keys in a map must be strings; they can be left unquoted if they are a valid identifier, but must be quoted otherwise. You can use a non-literal string expression as a key by wrapping it in parentheses, like (var.business_unit_tag_name) = "SRE".

damn you are good sir! thank you! I’ve come up with a workaround but this is much cleaner. My workaround was:

integrations = {

for lambda in module.lambdas : lambda.function_name_iterator => {

lambda_arn = lambda.lambda_function_arn

payload_format_version = "2.0"

timeout_milliseconds = 12000

}

}

i had to export a “fake” variable from my module where i added the “ANY /” in front of the function name

you can add/format any prefix/suffix/variable to (integrations.values.lambda_function_name) , it’s a standard terraform interpolation
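
For reference, a minimal sketch of the for-expression approach with the "ANY /" prefix built inline, so no extra "fake" output is needed from the module. It assumes module.lambdas is declared with for_each keyed by function name (as module.lambdas["hello-world"] suggests) and that integrations is a plain map argument; the local name is illustrative.

```hcl
locals {
  # One route per Lambda module instance, e.g. "ANY /hello-world".
  # Assumption: the for_each key of module.lambdas is the route name.
  integrations = {
    for name, lambda in module.lambdas : "ANY /${name}" => {
      lambda_arn             = lambda.lambda_function_arn
      payload_format_version = "2.0"
      timeout_milliseconds   = 12000
    }
  }
}
```

local.integrations can then be passed as integrations = local.integrations.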

Hi All we did a webinar on intro to IAC and Terraform in the weekend if anyone is interested: https://www.youtube.com/watch?v=2keKHXtvY5c

Is there a way to perform a deep merge like following:

locals {

a = { foo = { age = 12, items = [1] } }

b = { foo = { age = 12, items = [2] }, bar = { age = 4, items = [3] } }

c = { bar = { age = 4, items = [] } }

# desired output

out = { foo = { age = 12, items = [1,2] }, bar = { age = 4, items = [3] } }

}

I have many maps of objects. The objects with same key are identical except for one list field. I want to merge all items with the same key, except concat the list field.
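
One possible approach, sketched under the assumption that every object has the same shape and that items is the only list field: collect all keys, merge the objects per key, then overwrite items with the concatenation. The names inputs and all_keys are made up for the example.

```hcl
locals {
  a = { foo = { age = 12, items = [1] } }
  b = { foo = { age = 12, items = [2] }, bar = { age = 4, items = [3] } }
  c = { bar = { age = 4, items = [] } }

  # Hypothetical helper: the maps to combine, in priority order.
  inputs = [local.a, local.b, local.c]

  # Every key that appears in any input map.
  all_keys = distinct(flatten([for m in local.inputs : keys(m)]))

  out = {
    for k in local.all_keys : k => merge(
      # Scalar fields: later maps win, as with a plain merge().
      merge([for m in local.inputs : m[k] if contains(keys(m), k)]...),
      # List field: concatenate across every map that has this key.
      { items = flatten([for m in local.inputs : m[k].items if contains(keys(m), k)]) }
    )
  }
}
```

This only goes one level deep; a generic recursive deep merge is not something the built-in functions provide.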

2021-08-02

Hi all. Have a question about working with provider aliases. In my case I have several accounts in AWS:

provider "aws" {

region = var.region

}

provider "aws" {

region = "eu-west-2"

alias = "eu-west-2"

}

provider "aws" {

region = "eu-central-1"

alias = "eu-central-1"

}

I want to create the same shared WAF rules in these accounts. Could I use for_each somehow to call a module that creates these rules, iterating over the different provider aliases?

I don’t believe you can currently loop with a for_each for provider aliases due to it being a meta-argument but you can consume the module you’re talking of like so:

module "waf_rules_euc1" {

source = "./modules/waf-rules"

providers = {

aws = aws.eu-central-1

}

myvar = "value"

}

- repeat for regions
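
Spelled out for both regions, a sketch of what "repeat for regions" looks like. It assumes the ./modules/waf-rules path from the message above; the module names are illustrative. Note that an alias is a plain identifier and is referenced as aws.<alias>.

```hcl
provider "aws" {
  alias  = "eu-west-2"
  region = "eu-west-2"
}

provider "aws" {
  alias  = "eu-central-1"
  region = "eu-central-1"
}

# One module block per provider alias; the providers map is static,
# so it cannot be driven by for_each.
module "waf_rules_euw2" {
  source = "./modules/waf-rules"

  providers = {
    aws = aws.eu-west-2
  }
}

module "waf_rules_euc1" {
  source = "./modules/waf-rules"

  providers = {
    aws = aws.eu-central-1
  }
}
```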

Hi all, I’m using the terraform-aws-ecs-alb-service-task module and running into a bit of an issue; I’ve set deployment_controller_type to CODE_DEPLOY and using the blue/green deployment method - when Code Deploy diligently switches to the green autoscaling group, the next run of the module deletes/recreates the ecs service because it’s trying to put back the blue target group (or both)… Has anyone tried to run this setup? I can make a PR to ignore changes to load balancers, but if you look at the module it’s going to become an immediate nightmare to support the 3 different ignore combinations. Any advice greatly appreciated.
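
To illustrate the "ignore changes to load balancers" idea outside the module, here is a rough sketch on a bare aws_ecs_service. All variable names and values are hypothetical, and this is not how the module is written. Because ignore_changes must be a static list, a module needs a separate resource per combination, which is the support burden mentioned above.

```hcl
variable "ecs_cluster_arn" {
  type = string
}

variable "task_definition_arn" {
  type = string
}

variable "blue_target_group_arn" {
  type = string
}

resource "aws_ecs_service" "blue_green" {
  name            = "example" # hypothetical service name
  cluster         = var.ecs_cluster_arn
  task_definition = var.task_definition_arn
  desired_count   = 1

  deployment_controller {
    type = "CODE_DEPLOY"
  }

  load_balancer {
    target_group_arn = var.blue_target_group_arn
    container_name   = "app" # hypothetical container name
    container_port   = 80
  }

  lifecycle {
    # CodeDeploy swaps target groups and task definitions out of band,
    # so Terraform should not try to put the original values back.
    ignore_changes = [load_balancer, task_definition]
  }
}
```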

Hi, just asking again https://github.com/cloudposse/terraform-aws-cloudfront-cdn for this module. Is there a plan in the roadmap for users to be able to modify default_cache_behavior ?

Terraform Module that implements a CloudFront Distribution (CDN) for a custom origin. - GitHub - cloudposse/terraform-aws-cloudfront-cdn: Terraform Module that implements a CloudFront Distribution…

anyone can create a PR to add features/flexibility

there is no roadmap for features in community modules

although the cache behavior already has variables and a dynamic block, so it seems to be pretty flexible

Oh, alright. Thanks @jose.amengual

2021-08-03

Hello folks, I’m facing this issue when trying to deploy https://github.com/cloudposse/terraform-aws-elasticsearch using all other modules that this one requires. I’ll link the files I have in the thread

│ Error: Error creating ElasticSearch domain: ValidationException: You must specify exactly two subnets because you've set zone count to two.

│

│ with module.elasticsearch.aws_elasticsearch_domain.default[0],

│ on modules/elasticsearch/main.tf line 100, in resource "aws_elasticsearch_domain" "default":

│ 100: resource "aws_elasticsearch_domain" "default" {

│

╵

╷

│ Error: Error creating Security Group: InvalidGroup.Duplicate: The security group 'elastic-test-es-test' already exists for VPC 'vpc-0fbda4f1d6105a68c'

│ status code: 400, request id: 6ad8d766-7954-49d5-b257-8b2213d1f8ec

│

│ with module.vpc.module.security_group.aws_security_group.default[0],

│ on modules/sg-cp/main.tf line 28, in resource "aws_security_group" "default":

│ 28: resource "aws_security_group" "default" {

They’re basically CloudPosse’s modules.

@Grubhold the error you’re getting spells it out — When ES is deployed it needs a certain number of subnets to account for the number of nodes that it has.

So for —

dynamic "vpc_options" {

for_each = var.vpc_enabled ? [true] : []

content {

security_group_ids = [join("", aws_security_group.default.*.id)]

subnet_ids = var.subnet_ids

}

}

Your var.subnet_ids needs to include more subnet IDs.
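
A small sketch of keeping the subnet list in step with the zone count. The variable names are hypothetical, and it assumes the list holds one subnet per availability zone.

```hcl
variable "private_subnet_ids" {
  type        = list(string)
  description = "Hypothetical: private subnet IDs, one per availability zone"
}

variable "es_zone_count" {
  type        = number
  default     = 2
  description = "Hypothetical: number of zones the ES domain spans"
}

locals {
  # Take exactly as many subnets as zones.
  # slice() fails at plan time if the list is shorter than the zone count.
  es_subnet_ids = slice(var.private_subnet_ids, 0, var.es_zone_count)
}
```

local.es_subnet_ids would then be what gets passed as subnet_ids.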

Thank you so much @Matt Gowie for pointing that out, it’s interesting not to notice this for a whole day

No problem — Glad that worked out for ya.

Hello folks, I have a question regarding terraform-aws-eks-cluster https://github.com/cloudposse/terraform-aws-eks-cluster/blame/master/README.md#L100

The readme contains two statements that seem contradicting but maybe I’m just not getting it. It states:

The KUBECONFIG file is the most reliable [method], […]

and a few lines below:

At the moment, the exec option appears to be the most reliable method, […]

Terraform module for provisioning an EKS cluster. Contribute to cloudposse/terraform-aws-eks-cluster development by creating an account on GitHub.

I believe @Jeremy G (Cloud Posse) recently updated that documentation… There was a big re-work he just did to support the 2.x k8s provider I’m pretty sure. Easy for something to pass through the cracks.

You may look for his PR (which should be recent) and see what changed to try and figure out the answer to this one.

PR to correct would be much appreciated!

I can only guess which one is correct (exec method), but as both statements have been added in the same PR, it’s not obvious which one is more true.

I’m also a bit hesitant to start working on a PR because there seems to be some tooling involved in the readme file creation.

Ah that is indeed confusing then…

Since Jeremy isn’t around… @Andriy Knysh (Cloud Posse) do you know which of the two options Jeremy meant as the recommended way forward?

I would imagine it’s exec, but now I’m confused considering they’re both mentioned in the same PR.

@Ben @Matt Gowie The README needs revision, as you note. Please read the updated Release Notes for v0.42.0. Fixing the README will take a PR, but I was able to update the Release Notes easily.

This release resumes compatibility with v0.39.0 and adds new features: Releases and builds on PR #116 by providing the create_eks_service_role option that allows you to disable the automatic creat…

Good stuff — Thanks Jeremy.

The short answer is “use data_auth if you can, kubeconfig for importing things into Terraform state, and exec_auth if it works and data_auth doesn’t.”

Great, thank you!

2021-08-04

Hello folks, is there a workaround for the current security group module where using it with another module such as Elasticsearch it yells that xyz security group already exists? This PR seems to address this but its not yet merged.

│ Error: Error creating Security Group: InvalidGroup.Duplicate: The security group 'logger-test-es-test' already exists for VPC 'vpc-0e868046c92d7bb2a'

│ status code: 400, request id: be88d8b8-1ed0-43ac-8246-e37265782098

│

│ with module.vpc.module.security_group.aws_security_group.default[0],

│ on modules/sg-cp/main.tf line 28, in resource "aws_security_group" "default":

│ 28: resource "aws_security_group" "default" {

what Input use_name_prefix replaced with create_before_destroy. Previously, create_before_destroy was always set to true but of course that fails if you are not using a name prefix, because the na…

My security group module taken from CloudPosse’s

After making var.security_group_enabled as false that error went away.

But this time it gave me this error

use attributes: add different attributes for Elasticsearch and the other modules you use with it

the issue is that all modules, when provided with the same context (e.g. namespace, environment, stage, name), generate the same names for the SGs that they create

adding attributes will make all generated IDs different
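
A sketch of the effect, using the cloudposse/label/null module directly with made-up values: the two labels share the same context and differ only in attributes, so their generated IDs differ.

```hcl
module "es_label" {
  source = "cloudposse/label/null"

  # Hypothetical context values shared by both labels.
  namespace = "eg"
  stage     = "test"
  name      = "logger"

  attributes = ["es"]
}

module "vpc_label" {
  source = "cloudposse/label/null"

  namespace = "eg"
  stage     = "test"
  name      = "logger"

  attributes = ["vpc"]
}

# The attribute is appended to the ID, so these no longer collide.
output "es_id" {
  value = module.es_label.id
}

output "vpc_id" {
  value = module.vpc_label.id
}
```

The same attributes input can be set on the Elasticsearch module and on the other modules that share the context.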

Oh wow, indeed all contexts in all module folders are the same. And I just found out that var.attributes is left without a reference in variables.tf

many modules have flags to create the SG or not, you can create your own and provide to the modules

I wanted to use CP’s Security Group module as well for everything. But I think maybe for the time being I can just use my own simple security group configs instead of CP’s until I get to fully understand how its functioning.

And provide it to the modules as you say

cc: @Jeremy G (Cloud Posse)

@Erik Osterman (Cloud Posse) nothing really to do with our SG module, more, as Andriy said, about how we handle naming of SGs.

If I understood correctly you’re suggesting to use my own custom SGs but maybe follow the naming conventions you have set up in your SGs?

Also, thank you so much for sparing time to answer my rather newbie questions. But I’m loving your modules and it has been teaching me a lot!

Hi folks, I want to include the snippet below in my ECS task definition for the Datadog container, but the problem is that the volume argument is not supported. Can anyone give me advice? Thank you.

mount_points = [

{

containerPath = "/var/run/docker.sock"

sourceVolume = "docker_sock"

readOnly = true

},

{

containerPath = "/host/sys/fs/cgroup"

sourceVolume = "cgroup"

readOnly = true

},

{

containerPath = "/host/proc"

sourceVolume = "proc"

readOnly = true

}

]

volumes = [

{

host_path = "/var/run/docker.sock"

name = "docker_sock"

docker_volume_configuration = []

},

{

host_path = "/proc/"

name = "proc"

docker_volume_configuration = []

},

{

host_path = "/sys/fs/cgroup/"

name = "cgroup"

docker_volume_configuration = []

}

]

BTW: I’m using this module https://github.com/cloudposse/terraform-aws-ecs-container-definition/blob/master/main.tf

Terraform module to generate well-formed JSON documents (container definitions) that are passed to the aws_ecs_task_definition Terraform resource - terraform-aws-ecs-container-definition/main.tf a…

@Gerald — If you’ve got the code then put it up on PR and mention it in #pr-reviews. We’d be happy to take a look at it.

Thanks Matt will do it

possible we can add this line here?

dynamic "volume" {

for_each = var.volumes

content {

name = volume.value.name

host_path = lookup(volume.value, "host_path", null)

dynamic "docker_volume_configuration" {

for_each = lookup(volume.value, "docker_volume_configuration", [])

content {

autoprovision = lookup(docker_volume_configuration.value, "autoprovision", null)

driver = lookup(docker_volume_configuration.value, "driver", null)

driver_opts = lookup(docker_volume_configuration.value, "driver_opts", null)

labels = lookup(docker_volume_configuration.value, "labels", null)

scope = lookup(docker_volume_configuration.value, "scope", null)

}

}

}

}

v1.0.4 (August 04, 2021) BUG FIXES: backend/consul: Fix a bug where the state value may be too large for consul to accept (#28838). cli: Fixed a crashing bug with some edge-cases when reporting syntax errors that happen to be reported at the position of a newline. (#29048) …

The logic in e680211 to determine whether a given state is small enough to fit in a single KV entry in Consul is buggy: because we are using the Transaction API we are base64 encoding it so the pay…

Because our snippet generator is trying to select whole lines to include in the snippet, it has some edge cases for odd situations where the relevant source range starts or ends directly at a newli…

Hello i’m trying to create a proper policy for the cluster-autoscaler service account (aws eks) and I need the ASG ARNs. Any ideas on how I can get them from the eks_node_group module? I can only get the ASG name from the node_group “resource” attribute, which I can use to get the ARN from the aws_autoscaling_group data source. Unfortunately this will be known only after apply. Do the more experienced folks have a decent workaround or a better way to do this?

Trying to use cloudposse/terraform-aws-ec2-instance-group and it may be lack of sleep but I can’t figure out how to get it to not generate spurious SSH keys if I’m using an existing key?

If I pass in ssh_key_pair , I’ll want to specify generate_ssh_key_pair as false, but if I do that ssh_key_pair module goes “Oh, well we’re using an existing file then”, and then plan dies with

│ Error: Invalid function argument

│

│ on .terraform\modules\worker_tenants.worker_tenant.ssh_key_pair\main.tf line 19, in resource "aws_key_pair" "imported":

│ 19: public_key = file(local.public_key_filename)

│ ├────────────────

│ │ local.public_key_filename is "C:/projects/terraform-network-worker/app-dev-worker-worker-arrow.pub"

│

│ Invalid value for "path" parameter: no file exists at

Sounds like a potential bug in the module? PR to fix would be welcome!

Should be there already, horribly done. But there

what If you're passing in a keypair name, don't generate one, and done try to load a local file that doesn't exist why You want to use an existing keypair You don't want plan to …

Can workaround the error by passing true to generate_ssh_key_pair but then I got an extra keypair for every instance group being generated.

2021-08-05

is there an easy way to get TF to ignore data resource changes (see below)

data "tls_certificate" "eks_oidc_cert" {

url = aws_eks_cluster.eks.identity.0.oidc.0.issuer

}

~ id = "2021-08-05 16:09:43.671261128 +0000 UTC" -> "2021-08-05 16:09:58.401961984 +0000 UTC"

Hey folks, wondering if anyone has what they consider a good, or definitive, resource on how to do a major version upgrade of an RDS database with Terraform?

Do it entirely by hand and then remediate your TF state after. I’m mostly serious. Terraform is not good at changing state in a sensible way

^ that’s how I have usually done DB upgrades

There is a flag to do an immediate apply but if the upgrade takes a while then I imagine the odds of Terraform timing out are high

It’s not in terraform, but I really liked this article… https://engineering.theblueground.com/blog/zero-downtime-postgres-migration-done-right/

A step by step guide to migrate your Postgres databases in production environments with zero downtime

ugh I could have really used that a month ago

Hoping someone can give an update on this issue with the terraform aws ses module. Any idea when we can get a fix in? I think ideally the resource would be configurable by an input variable similar to how the iam_permissions is configurable. https://github.com/cloudposse/terraform-aws-ses/issues/40

Found a bug? Maybe our Slack Community can help. Describe the Bug I believe this line resources = [join("", aws_ses_domain_identity.ses_domain.*.arn)] prevent from sending email to outsid…

@Eric Alford I’m assuming you just need the ability to associate additional domains with that SES sender? That seems like an easy one to wire in a new variable for. You can give it a shot and put up a PR and we’ll be happy to take a look at it.

I am using https://github.com/cloudposse/terraform-aws-alb and https://github.com/cloudposse/terraform-aws-alb-ingress. How do I set the instance targets for the default target group?

Terraform module to provision a standard ALB for HTTP/HTTP traffic - GitHub - cloudposse/terraform-aws-alb: Terraform module to provision a standard ALB for HTTP/HTTP traffic

Terraform module to provision an HTTP style ingress rule based on hostname and path for an ALB using target groups - GitHub - cloudposse/terraform-aws-alb-ingress: Terraform module to provision an …

Depends on whether you’re attaching EC2 (ASG), ECS, or lambdas

For ECS it’s done in the ECS service definition itself, in the load_balancer block: https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/ecs_service

Just with EC2

2021-08-06

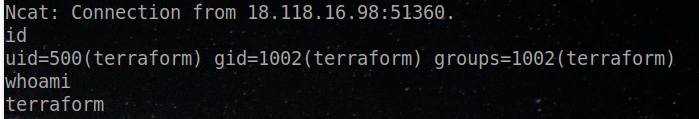

Hi all, I’m speaking today at DEFCON Cloud Village about attacking Terraform environments. The talk covers different attacks I have seen over the years against TF environments, and how engineers can use that knowledge to secure their own TF environments.

Please join me today at 12.05 PDT at Cloud Village livestream!

Watch how I got RCE at HashiCorp Infrastructure today at #DEFCON. I’m dropping the PoC and reproducible exploit after the talk! https://pbs.twimg.com/media/E8HXnjnXMAElkdQ.png

Do you have a link to the stream?

@Alex Jurkiewicz it was livestreamed yesterday, here is the recorded talk: https://www.youtube.com/watch?v=3ODhxYY9-9U

2021-08-07

https://github.com/cloudposse/terraform-aws-rds-cluster –

Use case:

• provisioned

• aurora-mysql

• Upgrading of an example engine_version: 5.7.mysql_aurora.2.09.1 => 5.7.mysql_aurora.2.09.2 …

Instead of just bumping the cluster resource and letting RDS handle the instances, it deletes and recreates each aws_rds_cluster_instance as well.

Thoughts?

Terraform module to provision an RDS Aurora cluster for MySQL or Postgres - GitHub - cloudposse/terraform-aws-rds-cluster: Terraform module to provision an RDS Aurora cluster for MySQL or Postgres

Not really much to go on here… does that plan indicate what is causing it to recreate? my guess is it’s not the version change.

It is the version change. If upgrading cluster from Aws Console and allowing it to propagate to the individual instances, then changing the passed in version in the terraform stack - it detects the remote changes and is happy.

I can provide more details when I have time to revisit.

2021-08-09

It would be awesome if the aws_dynamic_subnet module supported specifying the number of private and public subnets.

2021-08-10

Cross-post: possible bug.

I was about to create a bug ticket and saw the link to your Slack, so I want to make sure it’s a bug before opening a ticket.

It’s about terraform-aws-s3-bucket.

If you specify the privileged_principal_arns option, it never creates a bucket policy. Is this intended behaviour, given that the aws_iam_policy_document is created?

My guess is that privileged_principal_arns is missing from the count condition here:

resource "aws_s3_bucket_policy" "default" {

count = local.enabled && (var.allow_ssl_requests_only || var.allow_encrypted_uploads_only || length(var.s3_replication_source_roles) > 0 || var.policy != "") ? 1 : 0

bucket = join("", aws_s3_bucket.default.*.id)

policy = join("", data.aws_iam_policy_document.aggregated_policy.*.json)

depends_on = [aws_s3_bucket_public_access_block.default]

}

OK, I am almost 100% sure it’s a bug, so here are the issue and the PR:

Bug issue: https://github.com/cloudposse/terraform-aws-s3-bucket/issues/100

PR: https://github.com/cloudposse/terraform-aws-s3-bucket/pull/101
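
For illustration only, a sketch of how the count condition could take privileged_principal_arns into account. This is an assumption about the shape of the fix, not a copy of the linked PR, and it relies on the module's existing variables and resources.

```hcl
resource "aws_s3_bucket_policy" "default" {
  # Same condition as before, plus a check on privileged_principal_arns.
  count = local.enabled && (
    var.allow_ssl_requests_only ||
    var.allow_encrypted_uploads_only ||
    length(var.s3_replication_source_roles) > 0 ||
    length(var.privileged_principal_arns) > 0 ||
    var.policy != ""
  ) ? 1 : 0

  bucket     = join("", aws_s3_bucket.default.*.id)
  policy     = join("", data.aws_iam_policy_document.aggregated_policy.*.json)
  depends_on = [aws_s3_bucket_public_access_block.default]
}
```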

As a workaround, I thought I could specify a dedicated policy like this:

policy = jsonencode({

"Version" = "2012-10-17",

"Id" = "MYBUCKETPOLICY",

"Statement" = [

{

"Sid" = "${var.bucket_name}-bucket_policy",

"Effect" = "Allow",

"Action" = [

"s3:PutObject",

"s3:GetObject",

"s3:DeleteObject",

"s3:ListBucket",

"s3:ListBucketMultipartUploads",

"s3:GetBucketLocation",

"s3:AbortMultipartUpload"

],

"Resource" = [

"arn:aws:s3:::${var.bucket_name}",

"arn:aws:s3:::${var.bucket_name}/*"

],

"Principal" = {

"AWS" : [var.privileged_principal_arn]

}

},

]

})

but this results in this error:

Error: Invalid count argument

on .terraform/modules/service.s3-bucket.s3_bucket/main.tf line 367, in resource "aws_s3_bucket_policy" "default":

367: count = local.enabled && (var.allow_ssl_requests_only || var.allow_encrypted_uploads_only || length(var.s3_replication_source_roles) > 0 || var.policy != "") ? 1 : 0

The "count" value depends on resource attributes that cannot be determined

until apply, so Terraform cannot predict how many instances will be created.

To work around this, use the -target argument to first apply only the

resources that the count depends on.

Does anyone have a clue why?

Hello, anybody had issues with this before

aws_cloudwatch_event_rule.this: Creating...

╷

│ Error: Creating CloudWatch Events Rule failed: InvalidEventPatternException: Event pattern is not valid. Reason: Filter is not an object

i have tried umpteen ways of trying to get the thing to work with jsonencoding, tomaps, etc

Terraform used the selected providers to generate the following execution

plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_cloudwatch_event_rule.this will be created

+ resource "aws_cloudwatch_event_rule" "this" {

+ arn = (known after apply)

+ description = "This is event rule description."

+ event_bus_name = "default"

+ event_pattern = "\"{\\\"detail\\\":{\\\"eventTypeCategory\\\":[\\\"issue\\\"],\\\"service\\\":[\\\"EC2\\\"]},\\\"detail-type\\\":[\\\"AWS Health Event\\\"],\\\"source\\\":[\\\"aws.health\\\"]}\""

+ id = (known after apply)

+ is_enabled = true

The event pattern is what’s getting me

Sussed it out: the issue is in the module itself.
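
The plan output above shows the pattern wrapped in an extra layer of escaped quotes, which looks like a JSON string that was encoded twice and would fit the "Filter is not an object" error. A sketch of the same pattern encoded exactly once on a bare resource; the rule name is hypothetical.

```hcl
resource "aws_cloudwatch_event_rule" "this" {
  name        = "example-health-events" # hypothetical name
  description = "This is event rule description."

  # jsonencode() is applied once, to an object, so the API receives
  # a JSON object rather than a JSON string containing JSON.
  event_pattern = jsonencode({
    source        = ["aws.health"]
    "detail-type" = ["AWS Health Event"]
    detail = {
      service           = ["EC2"]
      eventTypeCategory = ["issue"]
    }
  })
}
```

If a module already calls jsonencode() on its input, passing it a pre-encoded string produces the double encoding.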

Any tools to help simplify state migration in a mono repo without destroying your current infrastructure?

what does monorepo have to do with the state migration?

Someone linked https://github.com/minamijoyo/tfmigrate recently, which seems good for more complex migrations

A Terraform state migration tool for GitOps. Contribute to minamijoyo/tfmigrate development by creating an account on GitHub.

2021-08-11

Hi folks, I’ve been working on https://github.com/cloudposse/terraform-aws-elasticsearch and it’s dependencies, its working great and deploying successfully. I have two questions regarding this that I need your assistance with;

- How is CloudWatch subscription filter managed by this resource, I believe for ES we need a Lambda function for that, does CloudPosse have a module for this, that I missed?

- How is access to Kibana managed by this module? Looking at the config it seems that its depending on VPC and access through a Route53 resource, if so how to access the dashboard of Kibana?

v1.1.0-alpha20210811 1.1.0 (Unreleased) NEW FEATURES: cli: terraform add generates resource configuration templates (#28874). config: a new type() function, only available in terraform console (#28501) …

terraform add generates resource configuration templates which can be filled out and used to create resources. The template is output in stdout unless the -out flag is used. By default, only requir…

The type() function, which is only available for terraform console, prints out a string representation of the type of a given value. This is mainly intended for debugging - it's handy to be abl…

If you use Mac with M1, this is really cool: https://github.com/kreuzwerker/m1-terraform-provider-helper (I do, and have run into what the author described there)

CLI to support with downloading and compiling terraform providers for Mac with M1 chip - GitHub - kreuzwerker/m1-terraform-provider-helper: CLI to support with downloading and compiling terraform p…

Hello team!

TLDR Question: Do you have tips/suggestions/pointers/resources on creating plugins for tflint?

Details: I have a group of 15-20 modules that I’d like to be coded consistently, specifically:

• All modules have inputs for name, environment, and tags

• All variables have a description, and optionally a type if applicable

• All outputs have a description

• All AWS resources that can be tagged have their tags attribute assigned like tags = merge(var.tags, local.tags), and optionally a resource-level override like tags = merge(var.tags, local.tags, {RESOURCE = OVERRIDE})

So far I have python scripts that are doing most of these but as I went deeper into the weeds, I thought a tool like tflint might be better suited. So before I go down that route, I’m looking for best practices and tips from those that have been there and done that. Thanks!
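
As a possible starting point before writing a plugin, a sketch of a .tflint.hcl that turns on the existing Terraform-language rules for descriptions and types. The required-inputs and tag-merge conventions are not covered by these rules and would still need a custom ruleset plugin, which is written in Go.

```hcl
# .tflint.hcl

# Every variable must have a description.
rule "terraform_documented_variables" {
  enabled = true
}

# Every output must have a description.
rule "terraform_documented_outputs" {
  enabled = true
}

# Every variable must declare a type.
rule "terraform_typed_variables" {
  enabled = true
}
```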

Hello, all.

I am trying to use the CloudPosse ec2-autoscale-group module and every time I do a terraform apply, it just repeatedly generates EC2s that are automatically terminated. Any thoughts on where to begin?

Thanks!

Additional note: the Launch template it generates seems ok when I specify a subnet for it. I am passing in a list of 3 subnets for my 3 AZs.

Hello! First, thanks for the cloudposse modules, they’ve been very helpful. I’ve got an issue trying to implement two instances of cloudposse/terraform-aws-datadog-integration

Details in thread.

I’m not sure if this is the right place to ask, but I figured I’d try.

The modules …

module "datadog_integration_alpha_prod" {

source = "cloudposse/datadog-integration/aws"

version = "0.13.0"

namespace = "alpha"

stage = "prod"

name = "datadog"

integrations = ["all"]

forwarder_rds_enabled = true

dd_api_key_source = {

identifier = aws_ssm_parameter.dd_api_key_secret.name

resource = "ssm"

}

providers = {

aws = aws.alpha-prod

}

}

module "datadog_integration_alpha_stg" {

source = "cloudposse/datadog-integration/aws"

version = "0.13.0"

namespace = "alpha"

stage = "stg"

name = "datadog"

integrations = ["all"]

forwarder_rds_enabled = true

dd_api_key_source = {

identifier = aws_ssm_parameter.dd_api_key_secret.name

resource = "ssm"

}

providers = {

aws = aws.alpha-stg

}

}

The errors…

Error: failed to execute "git": fatal: not a git repository (or any of the parent directories): .git

│

│

│ with module.datadog_integration_alpha_stg.module.forwarder_rds[0].data.external.git[0],

│ on .terraform/modules/datadog_integration_alpha_stg.forwarder_rds/main.tf line 7, in data "external" "git":

│ 7: data "external" "git" {

│

Tony, please set forwarder_rds_enabled = false

and try again

These both succeed if we set the forwarder_rds_enabled to false, but the whole reason we’re using this is that we want to enable enhanced RDS info being forwarded to DataDog

we are moving all that code out of it and we are creating a module just for the DD forwarder

I’m pretty sure it will be released today

although the error you are getting is related to git not able to pull the datadog repo

you should be able to download this: https://raw.githubusercontent.com/DataDog/datadog-serverless-functions/master/aws/rds_enhanced_monitoring/lambda_function.py?ref=3.34.0

although the error you are getting is related to git not able to pull the datadog repo

Yeah, it appears so. This is running from TF cloud though, and only an issue with this module. Other changes in our workspace are working fine, as does this module with the enhanced RDS functionality turned off.

I’ll look for that new module. Do you have a link to the repo?

the repo is private until we release it

I will send you the link when it is ready

but that functionality on how we download the file has not been changed

so I imagine you will have the same issue

Am I missing anything in my module block?

Could the providers blocks be causing an issue?

these are the providers needed

terraform {

required_version = ">= 0.13"

required_providers {

# Update these to reflect the actual requirements of your module

local = {

source = "hashicorp/local"

version = ">= 1.2"

}

random = {

source = "hashicorp/random"

version = ">= 2.2"

}

aws = {

source = "hashicorp/aws"

version = ">= 3.0"

}

archive = {

source = "hashicorp/archive"

version = ">= 2.2.0"

}

}

}

the archive module is trying to do this :

data "external" "git" {

count = module.this.enabled && var.git_ref == "" ? 1 : 0

program = ["git", "-C", var.module_path, "log", "-n", "1", "--pretty=format:{\"ref\": \"%H\"}"]

}

is trying to run git on that file

it could be that git is not properly configured where you are running it

Perhaps, but we’re running it in TF Cloud. I’ll try planning from my workstation later this evening and report back.

ohhh tf cloud, that might be an issue

yeah

Other cloudposse modules haven’t been an issue so far, we’ve had success with them in TF Cloud

not many modules use the git pull strategy

@Tony Bower https://github.com/cloudposse/terraform-aws-datadog-lambda-forwarder we just released it

Terraform module to provision all the necessary infrastructure to deploy Datadog Lambda forwarders - GitHub - cloudposse/terraform-aws-datadog-lambda-forwarder: Terraform module to provision all th…

you will see in the example how to use a local file so in the case of running in TFC then you can push the code in your repo and use the local file instead

Thanks! I’ll give it a shot tonight! Thanks for updating the thread.

np, let me know how that goes, I can make changes to the module since I’m still working on it so any feedback is appreciated

you will see in the example how to use a local file so in the case of running in TFC then you can push the code in your repo and use the local file instead

I’m not sure I follow what you are suggesting here.

so the problem you had with the RDS forwarder in Terraform cloud

was I think related to the module trying to run git

so with this new module you can point to a local file instead

Ok, I didn’t find that in the example, so maybe I missed it or wasn’t sure.

that was for when you are running in a system where remote URL connections are not allowed (like TFC); then you can use a local zip file with the code

sorry, in the readme :

module "datadog_lambda_forwarder" {

source = "cloudposse/datadog-lambda-forwarder/aws"

forwarder_log_enabled = true

forwarder_rds_artifact_url = "${file("${path.module}/function.zip")}"

cloudwatch_forwarder_log_groups = {

postgres = "/aws/rds/cluster/pg-main/postgresql"

}

}

Ah, ok! Thanks!

2021-08-12

Hi all! I’m using Terraform Cloud for state storage and terraform execution. I’ve been running on 0.12.26 , but a module requires me to upgrade to 1.0.4. When changing the workspace version to the new version I get the error in the screenshot. I’m required to run terraform 0.13upgrade to upgrade the state files, however I don’t know how to target state in Terraform Cloud from my local cli. Can anyone advise how I can target Terraform Cloud state from my local cli?

you will have to go one version at a time

first update to 0.13. It comes with a command that updates the .tf files to the new format. Some minor refactoring will be required, but it can be done.

after upgrading, the next time you run terraform plan it will upgrade automatically
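For the "how do I target Terraform Cloud state from my local CLI" part of the question, one option is the `remote` backend. The block below is a minimal sketch, assuming a hypothetical organization `example-org` and workspace `my-workspace`; after `terraform login` and `terraform init`, commands run locally operate against that workspace.

```hcl
terraform {
  # "remote" is the backend that talks to Terraform Cloud in the 0.12-1.0 series.
  backend "remote" {
    hostname     = "app.terraform.io"
    organization = "example-org" # hypothetical organization name

    workspaces {
      name = "my-workspace" # hypothetical workspace name
    }
  }
}
```

The upgrade itself still has to be done one release at a time, as described above; this block only points the local CLI at the workspace.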

hey everyone! Trying to use terraform-aws-waf but no matter how I use it I get:

Error: Unsupported block type

on .terraform/modules/wafv2/rules.tf line 253, in resource "aws_wafv2_web_acl" "default":

253: dynamic "forwarded_ip_config" {

Blocks of type "forwarded_ip_config" are not expected here.

Error: Unsupported block type

on .terraform/modules/wafv2/rules.tf line 306, in resource "aws_wafv2_web_acl" "default":

306: dynamic "ip_set_forwarded_ip_config" {

Blocks of type "ip_set_forwarded_ip_config" are not expected here.

Error: Unsupported block type

on .terraform/modules/wafv2/rules.tf line 409, in resource "aws_wafv2_web_acl" "default":

409: dynamic "forwarded_ip_config" {

Blocks of type "forwarded_ip_config" are not expected here.

I also tried using the code from examples/complete, but still have the same issue. Is it a minimum version other than 0.13? I’m currently using 0.14.11

Contribute to cloudposse/terraform-aws-waf development by creating an account on GitHub.

fixed, was using an older version of aws provider. Upgraded to 3.53.0 and it works flawlessly
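A provider constraint along these lines would keep an older AWS provider from being selected again. This is only a sketch: 3.53.0 is the version reported to work in this thread, and the module's real minimum may be lower.

```hcl
terraform {
  required_version = ">= 0.13"

  required_providers {
    aws = {
      source = "hashicorp/aws"
      # 3.53.0 is the version reported to work above; the true minimum
      # required by the module is an assumption here.
      version = ">= 3.53.0"
    }
  }
}
```

Running `terraform init -upgrade` afterwards lets Terraform pick a newer provider than the one recorded in the lock file.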

Hi Team,

Can someone please help me set up RocksDB write-stall alerts in Datadog using Terraform? I am new to Datadog and Terraform and am looking for the syntax to set up the alert.

A library that provides an embeddable, persistent key-value store for fast storage. - Write Stalls · facebook/rocksdb Wiki

2021-08-13

Morning, is this the right place to ask questions about CloudPosse Terraform modules?

Hey everyone,

I am getting this error using your S3 module with the privileged_principal_arns option. Any clue why? I am using TF version 0.14.9. This was a common error in earlier versions.

Error: Invalid count argument

on .terraform/modules/service.s3_bucket.s3_bucket/main.tf line 367, in resource "aws_s3_bucket_policy" "default":

367: count = local.enabled && (var.allow_ssl_requests_only || var.allow_encrypted_uploads_only || length(var.s3_replication_source_roles) > 0 || length(var.privileged_principal_arns) > 0 || var.policy != "") ? 1 : 0

The "count" value depends on resource attributes that cannot be determined

until apply, so Terraform cannot predict how many instances will be created.

To work around this, use the -target argument to first apply only the

resources that the count depends on.

ERRO[0043] Hit multiple errors:

Hit multiple errors:

exit status 1

Terraform module that creates an S3 bucket with an optional IAM user for external CI/CD systems - GitHub - cloudposse/terraform-aws-s3-bucket at 0.42.0

it’s because the number of ARNS depends on a computed value

I guess you are passing in an ARN that is dynamically generated in the same terraform configuration

in this case, Terraform doesn’t know what the length of var.privileged_principal_arns is at plan time, so it doesn’t know how many resources of resource "aws_s3_bucket_policy" "default" to create

Yep, I pass the ARN in from an ECS module. OK, then instead of checking the length, would it make sense to check != "" or != null?

what confuses me, is that if I am trying this one out in a test repo where I just call the module with some random string as ARN it works

Right. Because the string is hard coded
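One pattern that avoids the error is to drive `count` from a flag whose value is known at plan time, and keep the possibly computed ARNs out of the `count` expression. The sketch below is not the S3 module's code; every variable and resource name in it is hypothetical.

```hcl
# Hypothetical flag: the caller sets it to a literal, so it is known at plan time.
variable "policy_enabled" {
  type    = bool
  default = false
}

# May contain ARNs that are only known after apply.
variable "principal_arns" {
  type    = list(string)
  default = []
}

# Hypothetical: name of an existing bucket.
variable "bucket_id" {
  type = string
}

data "aws_iam_policy_document" "this" {
  count = var.policy_enabled ? 1 : 0

  statement {
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::${var.bucket_id}/*"]

    principals {
      type        = "AWS"
      identifiers = var.principal_arns
    }
  }
}

resource "aws_s3_bucket_policy" "this" {
  # count depends only on the flag, never on the length of a computed list.
  count  = var.policy_enabled ? 1 : 0
  bucket = var.bucket_id
  policy = data.aws_iam_policy_document.this[0].json
}
```

The trade-off is one extra input for the caller, in exchange for a `count` that never depends on values Terraform cannot know before apply.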

added a PR: https://github.com/cloudposse/terraform-aws-s3-bucket/pull/103 and Issue: https://github.com/cloudposse/terraform-aws-s3-bucket/issues/102 Feel free to have a look

what The length(var.privileged_principal_arns) is not determinable before apply if the input itself is dependent on other resources. why Terraform cannot know the length of the variable before i…

Describe the Bug If no resources are created yet and the var.privileged_principal_arns is a variable, this will lead in to this error: Error: Invalid count argument on .terraform/modules/service.s3…

what’s that project which auto-adds tags to your terraform resources based on file/repo/commit?

it has some name like yonder

do you mean https://github.com/bridgecrewio/yor?

Extensible auto-tagger for your IaC files. The ultimate way to link entities in the cloud back to the codified resource which created it. - GitHub - bridgecrewio/yor: Extensible auto-tagger for you…

2021-08-15

hey, any thoughts as to how to update public ptr of an ec2 public ip programmatically? i think typically you have to ask aws support to do that… but it would be nice to do it via terraform/api

looks like it can be done via the aws cli: https://docs.aws.amazon.com/cli/latest/reference/ec2/modify-address-attribute.html

If it can be done with the aws cli, then there is an api for it, which means terraform could do it also… If it doesn’t have the feature yet, check for an existing issue or open one!

Example repo I made for running shell-based tests inside an ephemeral EC2 instance using Terratest, if anyone’s interested in that kind of thing

Proof of Concept for a shell-based E2E test using Terratest for an ephemeral EC2 instance - GitHub - RothAndrew/terratest-shell-e2e-poc: Proof of Concept for a shell-based E2E test using Terratest …

2021-08-16

Hi all, does anyone have an idea how to add CloudWatch as a Grafana data source via Terraform (Helm chart), and also how to verify it?

Metrics exporter for Amazon AWS CloudWatch. Contribute to prometheus/cloudwatch_exporter development by creating an account on GitHub.

Hi guys, I am new to Terraform. How can I create more than one site-to-site VPN connection?

Error: Cannot import non-existent remote object

│

│ While attempting to import an existing object to "aws_codebuild_project.lambda", the provider detected that no object exists with the given id. Only pre-existing objects can be imported; check that the id is correct and that it is associated with the provider's

│ configured region or endpoint, or use "terraform apply" to create a new remote object for this resource.

Anyone else ever had an issue with this?

only when the object doesn’t exist in the account

hmm, i can see it via the console, I’ll get the cli

2021-08-17

Hi. I am new to Terraform. How can I create more than one site-to-site VPN connection?

Sorry, this is overly broad. What have you already tried? Have you successfully created one VPN connection? Are you getting any errors?

Users of driftctl, I have a few questions for you:

AFAIK, driftctl only catches three kinds of drift that are not already caught by Terraform’s refresh process: 1. SG rule changes, 2. IAM policy assignment, 3. SSO permission set assignments.

- Is there anything else driftctl catches that I’m missing?

- If I’m correct about the above, why do you use driftctl and not simply run a TF plan on a cron and see if any drifts are detected (like env0 are suggesting, or even Spacelift)?

I think one of the benefits of using driftctl is that it can detect resources that are deployed in the cloud but not managed through Terraform.

At least that’s the promise.

Spacelift drift detection will detect (and optionally fix) drift only against the resources it manages.

So in that sense you can use both, though each for a different reason.

Good point. So it’s not so much drift as “unmanaged resources”. I’m curious how many people try to map out unmanaged resources and for what reason. For example, as a security person, I’d want to know if there are security issues in unmanaged resources. But I’m wondering if Engineering leaders care about them as much.

There are also tools like https://steampipe.io/ or https://www.cloudquery.io/ that allow you to query entire accounts for possible security violations regardless of how resources are managed.

Steampipe is an open source tool to instantly query your cloud services (e.g. AWS, Azure, GCP and more) with SQL. No DB required.

query, monitor and analyze your cloud infrastructure

¯_(ツ)_/¯

Oh, there are dozens, if not hundreds of tools for that. But one could say that the approach to security in IaC (like what we do at Cloudrail, or Bridgecrew/Accurics/Fugue does), is different to security in unmanaged resources.

hi there, I am trying to use: https://github.com/cloudposse/terraform-aws-elasticache-redis however, when I use subnets fetched through a data resource in the form of:

data "aws_vpc" "vpc-dev" {

tags = { environment = "dev" }

depends_on = [module.vpc-dev]

}

data "aws_subnet_ids" "vpc-dev-private-subnet-ids" {

vpc_id = data.aws_vpc.vpc-dev.id

depends_on = [module.vpc-dev]

tags = {

Name = "*private*"

}

}

And plug that into the configuration it throws an error saying:

│ Error: Invalid count argument

│

│ on .terraform/modules/my-redis-cluster.redis/main.tf line 31, in resource "aws_elasticache_subnet_group" "default":

│ 31: count = module.this.enabled && var.elasticache_subnet_group_name == "" && length(var.subnets) > 0 ? 1 : 0

│

│ The "count" value depends on resource attributes that cannot be determined until apply, so Terraform cannot predict how many instances will be created. To work around this, use

│ the -target argument to first apply only the resources that the count depends on.

has anyone encountered that error before? If so, how did you solve it?

Terraform module to provision an ElastiCache Redis Cluster - GitHub - cloudposse/terraform-aws-elasticache-redis: Terraform module to provision an ElastiCache Redis Cluster

are you sure it is finding the subnet and vpc?

did you see them in the plan?

yep - they are already created.

In fact, this is the plan in question:

(venv) andylamp@ubuntu-vm:~/Desktop/my-tf$ tf plan

module.vpc-dev.module.my-vpc.aws_vpc.this[0]: Refreshing state... [id=vpc-00337c2afe6fce5c3]

module.vpc-dev.module.my-vpc.aws_eip.nat[0]: Refreshing state... [id=eipalloc-012856d4a1d951283]

module.vpc-dev.module.my-vpc.aws_subnet.private[2]: Refreshing state... [id=subnet-08266fec0283b0297]

module.vpc-dev.module.my-vpc.aws_subnet.private[0]: Refreshing state... [id=subnet-0325a36af25038e1c]

module.vpc-dev.module.my-vpc.aws_subnet.private[1]: Refreshing state... [id=subnet-0d55505e94c067aa2]

module.vpc-dev.module.my-vpc.aws_route_table.public[0]: Refreshing state... [id=rtb-0d42a5eb7d09ee795]

module.vpc-dev.module.my-vpc.aws_internet_gateway.this[0]: Refreshing state... [id=igw-005edae1c9ed3fa6b]

module.vpc-dev.module.my-vpc.aws_subnet.public[0]: Refreshing state... [id=subnet-0975f87892ab81275]

module.vpc-dev.module.my-vpc.aws_subnet.public[2]: Refreshing state... [id=subnet-0f148b639a32bc4d0]

module.vpc-dev.module.my-vpc.aws_subnet.public[1]: Refreshing state... [id=subnet-05611d5e681c8ff3c]

module.vpc-dev.module.my-vpc.aws_route_table.private[0]: Refreshing state... [id=rtb-04a14b9c331a1298a]

module.vpc-dev.module.my-vpc.aws_route.public_internet_gateway[0]: Refreshing state... [id=r-rtb-0d42a5eb7d09ee7951080289494]

module.vpc-dev.module.my-vpc.aws_nat_gateway.this[0]: Refreshing state... [id=nat-05b7e44d3b553b476]

module.vpc-dev.module.my-vpc.aws_route_table_association.public[0]: Refreshing state... [id=rtbassoc-0f540546824c3129d]

module.vpc-dev.module.my-vpc.aws_route_table_association.private[1]: Refreshing state... [id=rtbassoc-033f6fc6e37b040e8]

module.vpc-dev.module.my-vpc.aws_route_table_association.public[1]: Refreshing state... [id=rtbassoc-08deab663c8d993d9]

module.vpc-dev.module.my-vpc.aws_route_table_association.public[2]: Refreshing state... [id=rtbassoc-08d46742cd13a384d]

module.vpc-dev.module.my-vpc.aws_route_table_association.private[0]: Refreshing state... [id=rtbassoc-045d114f50647d8a6]

module.vpc-dev.module.my-vpc.aws_route_table_association.private[2]: Refreshing state... [id=rtbassoc-011e95d58db228e53]

module.vpc-dev.module.my-vpc.aws_default_network_acl.this[0]: Refreshing state... [id=acl-01bbf32f28ecd8ea9]

module.vpc-dev.module.my-vpc.aws_route.private_nat_gateway[0]: Refreshing state... [id=r-rtb-04a14b9c331a1298a1080289494]

╷

│ Error: Invalid count argument

│

│ on .terraform/modules/my-redis-cluster.redis/main.tf line 31, in resource "aws_elasticache_subnet_group" "default":

│ 31: count = module.this.enabled && var.elasticache_subnet_group_name == "" && length(var.subnets) > 0 ? 1 : 0

│

│ The "count" value depends on resource attributes that cannot be determined until apply, so Terraform cannot predict how many instances will be created. To work around this, use

│ the -target argument to first apply only the resources that the count depends on.

did you set the var.elasticache_subnet_group_name ?

no wait

I was under the impression that this was not needed

Terraform module to provision an ElastiCache Redis Cluster - terraform-aws-elasticache-redis/main.tf at master · cloudposse/terraform-aws-elasticache-redis

followed this bit.

var.subnets I think is basically 0

module.subnets.private_subnet_ids is a list

are you passing a list of subnets?

yes, the private ones.

what essentially I get from the output of this:

# grab the private subnets within the provided VPC

data "aws_subnet_ids" "vpc-private-subs" {

vpc_id = data.aws_vpc.redis-vpc.id

depends_on = [data.aws_vpc.redis-vpc]

tags = {

Name = "*private*"

}

}

which is a list of subnets.

matching to that tag.

(btw, thanks for replying fast!)

what does the module initialization look like?

sec - let me fetch that.

module "redis" {

source = "cloudposse/elasticache-redis/aws"

version = ">= 0.40.0"

name = var.cluster-name

engine_version = var.redis-stack-version

instance_type = var.redis-instance-type

family = var.redis-stack-family

cluster_size = var.cluster-size

snapshot_window = "04:00-06:00"

snapshot_retention_limit = 7

apply_immediately = true

automatic_failover_enabled = false

at_rest_encryption_enabled = false

transit_encryption_enabled = false

vpc_id = var.vpc-id

subnets = data.aws_subnet_ids.vpc-private-subs.ids

snapshot_name = "redis-snapshot"

parameter = [

{

name = "notify-keyspace-events"

value = "lK"

}

]

}

basically this - and then I call it in my main.tf as:

module "my-redis-cluster" {

source = "./modules/my-ec-redis"

vpc_id = data.aws_vpc.vpc-dev.id

}

(the data bits are in the variables.tf of the my-ec-redis module)

try passing a hardcoded subnet id to the subnets =

so, with [] (which assumes hardcoded) it works.

like subnets = [ "subnet-0325a36af25038e1c"]

I think this works, it just does not like when I dynamically fetch it from the target vpc

I think this is a bit weird

tell me about it

you have a module creating the vpc, then you do a data lookup to look at stuff the vpc module created

yep

and on top of that you tell the data resource it depends on the module

exactly.

cyclical dependency

so use the output of the module.vpc-dev and output the subnet ids

and use the output as input for the redis module

TF will know how to build the dependency from that relationship
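The suggestion above could look roughly like this. It is a sketch only: the VPC module path, the output names `vpc_id` and `private_subnet_ids`, and the `subnets` input on the wrapper module are assumptions, so the wrapper modules would need to expose them.

```hcl
module "vpc-dev" {
  source = "./modules/my-vpc" # hypothetical path to the VPC wrapper module
}

module "my-redis-cluster" {
  source = "./modules/my-ec-redis"

  # Hypothetical outputs of the VPC wrapper module. Referencing them directly
  # gives Terraform the dependency, so no depends_on and no data lookup is needed.
  vpc_id  = module.vpc-dev.vpc_id
  subnets = module.vpc-dev.private_subnet_ids
}
```

A data source that carries `depends_on` may have its read postponed until apply, which is one way the subnet list ends up unknown at plan time; passing outputs avoids that.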

right, gotcha

let me try this.

when you start using depends_on you can make TF confused

cool, let me try this and hopefully this works - thanks for the tip!

(if not, I’ll just ping here again )

seems to be able to plan it now, I am curious however why this did not happen with aws eb resource (aws_elastic_beanstalk_environment) there I seem to be passing them as such:

setting {

name = "Subnets"

namespace = "aws:ec2:vpc"

value = join(",", data.aws_subnet_ids.vpc-public-subnets.ids)

resource = ""

}

It is able to both find them and use them successfully - if it was a circular dependency, then surely it would be a problem there as well right?

but there is no depends_on

there is

so it can calculate the graph dependency

module "eb-test" {

source = "./modules/my-eb"

vpc_id = module.vpc-dev.vpc_name

depends_on = [module.vpc-dev]

}

and this is how the my-eb module is defined:

# now create the app

resource "aws_elastic_beanstalk_application" "eb-app" {

name = var.app_name

}

# configure the ELB environment for the target app

resource "aws_elastic_beanstalk_environment" "eb-env" {

application = aws_elastic_beanstalk_application.eb-app.name

name = var.env_name

solution_stack_name = var.solution_stack_name == null ? data.aws_elastic_beanstalk_solution_stack.python_stack.name : var.solution_stack_name

tier = var.tier

# Configure various settings for the environment, they are grouped based on their scoped namespace

# -- Configure namespace: "aws:ec2:vpc"

setting {

name = "VPCId"

namespace = "aws:ec2:vpc"

value = var.vpc_id

resource = ""

}

# associate the ELB environment with a public IP address

setting {

name = "AssociatePublicIpAddress"

namespace = "aws:ec2:vpc"

value = "True"

resource = ""

}

setting {

name = "Subnets"

namespace = "aws:ec2:vpc"

value = join(",", data.aws_subnet_ids.vpc-public-subnets.ids)

resource = ""

}

// more properties...

}

that will be because the count can be calculated

right, it does not use count

count or for_each can’t use values that can’t be calculated at plan time

otherwise, how many would it create?

ok I see, that’s helpful to know.

you can sometimes use a local with a ternary that can calculate the value beforehand

nice, do you happen to have an example that I can see of such a use case? It’d be really helpful.

no, I was wrong, sorry. The value needs to be known at plan time; I do not think it is possible to do it otherwise

I was going to show you this : https://github.com/cloudposse/terraform-aws-ssm-patch-manager/blob/master/ssm_log_bucket.tf

where I had logic to do that, but it did not work and I had to change the count arguments because I was trying to use a data resource

if you apply a data resource once, for example with -target, and then use it in the count, I think that will work, but it adds a step that you do not need if you make your logic simpler

One of your inputs, which also feeds a count argument, is not determinable by Terraform at plan time. I had the same issue some days ago (https://sweetops.slack.com/archives/CB6GHNLG0/p1628844492126800). There is no workaround: either hardcode the variable or create the resources yourself. I wasted 3 days figuring this out

@jose.amengual thanks a lot for the clarification, I think hard-coded values is the way to go for now.

could you try this https://github.com/cloudposse/terraform-aws-s3-bucket/issues/102#issuecomment-906779848 ?

2021-08-18

Does anyone know how to add CloudWatch as a Grafana data source using the Helm chart (prometheus-grafana)?

For better context i’m using this values.yaml

podSecurityPolicy:

enabled: true

grafana:

datasources:

datasources.yaml:

apiVersion: 1

datasources:

- name: Cloudwatch

type: cloudwatch

isDefault: true

jsonData:

authType: arn

assumeRoleArn: "${ASSUME_ROLE_ARN}"

defaultRegion: "${CLUSTER_REGION}"

customMetricsNamespaces: ""

version: 1

grafana.ini:

feature_toggles:

enable: "ngalert"

autoscaling:

enabled: true

minReplicas: 1

maxReplicas: 10

metrics:

- type: Resource

resource:

name: cpu

targetAverageUtilization: 80

- type: Resource

resource:

name: memory

targetAverageUtilization: 80

image:

repository: grafana/grafana

tag: 8.1.0

ingress:

%{ if GRAFANA_HOST != "" }

enabled: true

hosts:

- ${GRAFANA_HOST}

%{ else }

enabled: false

%{ endif }

prometheus:

prometheusSpec:

storageSpec:

## Using PersistentVolumeClaim

volumeClaimTemplate:

spec:

storageClassName: gp2

accessModes: ["ReadWriteOnce"]

resources:

requests:

storage: 50Gi

using this in terraform

data "template_file" "prom_template" {

template = file("./templates/prometheus-values.yaml")

vars = {

GRAFANA_HOST = var.domain_name == "" ? "" : "grafana.${local.cluster_name}.${var.domain_name}"

CLUSTER_REGION = var.app_region

ASSUME_ROLE_ARN = aws_iam_role.cloudwatch_role.arn

}

}

resource "helm_release" "prometheus" {

chart = "kube-prometheus-stack"

name = "prometheus"

namespace = kubernetes_namespace.monitoring.metadata.0.name

create_namespace = true

version = "17.1.1"

repository = "https://prometheus-community.github.io/helm-charts"

values = [

data.template_file.prom_template.rendered

]

}

Then I set up kubectl and installed the Helm chart, and after that port-forwarded to see the GUI, but I am not able to see the CloudWatch data source

nice trick I learned today: conditionally create a block https://codeinthehole.com/tips/conditional-nested-blocks-in-terraform/

Using dynamic blocks to implement a maintenance mode.
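The trick boils down to giving a `dynamic` block a `for_each` that is either a one-element list or an empty list. A minimal sketch, using a security group rather than the linked article's example; the variable names and the CIDR range are hypothetical.

```hcl
variable "allow_ssh" {
  type    = bool
  default = false
}

variable "vpc_id" {
  type = string
}

resource "aws_security_group" "example" {
  name_prefix = "example-"
  vpc_id      = var.vpc_id

  # A one-element list renders the block once; an empty list omits it.
  dynamic "ingress" {
    for_each = var.allow_ssh ? [1] : []

    content {
      from_port   = 22
      to_port     = 22
      protocol    = "tcp"
      cidr_blocks = ["10.0.0.0/8"] # hypothetical internal range
    }
  }
}
```

Because the condition is a plain boolean variable, the number of generated blocks is known at plan time.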

To log in to AWS, right now I manually use some bash scripts to assume a role, but the MFA token expires every hour. What would be the options to automate those tasks? I would probably like to use Docker

logging in from your pc to use awscli?

when you assume a role, you can set the expiry, the default is 1hr but you can go up to 8 generally

Oh OK, it has security concerns…

Look into the refreshable credential in botocore, and the credential_process option in the awscli config. Between the two, you can have auto-refreshing temporary credentials

those are all workarounds; you probably want to have AWS SSO configured for your account. Its credentials are supported by both the aws-cli and the Terraform provider. You still have to log in from time to time once the temporary credentials expire (this is configurable)

no thanks. AWS SSO is terribly limited when it comes to the full set of IAM features. sticking with Okta and an IAM Identity Provider for now

Checkout leapp.cloud. It handles automatic refreshes and provides best-in-class ui

Leapp grants to the users the generation of temporary credentials only for accessing the Cloud programmatically.

cc: @Andrea Cavagna

Both for AWS SSO and Okta federated IAM roles, Leapp stores sensitive information, such as the AWS SSO token used to generate credentials and the SAML response, in a secure place locally (e.g. Keychain on macOS) https://docs.leapp.cloud/contributing/system_vault/

In the app, the user can choose which AWS account to log into, and Leapp generates and rotates temporary credentials.

If you have any questions, feel free to message me

Leapp is a tool for developers to manage, secure, and gain access to any cloud. From setting up your access data to activating a session, Leapp can help manage the underlying assets to let you use your provider CLI or SDK seamlessy.

I’ve been following leapp for a while, but haven’t yet given it a try

i can’t figure out the leapp setup when using okta. what role_arn? i have many roles through okta, and many accounts…

It’s the federated role ARN with Okta: the Okta app adds a federated role for each role in any account you have access to; you can see it in the IAM Role panel https://docs.leapp.cloud/use-cases/aws_iam_role/#aws-iam-federated-role

If leapp is getting that info from Okta, why is it asking me for a role_arn in the setup?

Leapp is not getting this information from Okta; it’s up to you to know the correct roleArn for a given IAM role federated with Okta

oh yeah, ok, no. this is too much setup for me. i’d have to add dozens of accounts and pick a single role for each one, or multiply by each role i wanted in Leapp

direct integration with okta would be a much nicer interface, similar to aws-okta-processor

The Okta application is always the same; you just have to add all the role ARNs you can access. The Okta integration is on the roadmap, I’ll keep you updated

not for multiple accounts, the idp arn changes also

ok thanks

i’ll be glad to try it again when Okta is supported directly as an IdP

i’m also curious if you had considered a credential_process integration via ~/.aws/config, instead of writing even the temp credential to ~/.aws/credentials?

Absolutely. Leapp will soon move the core business logic to a local daemon that will communicate with the UI. The daemon roadmap includes a credential process written to the ~/.aws/config file:

https://github.com/Noovolari/leapp-daemon/issues/20

Hello team! Asking a question about name length limits.

TLDR Is there a document listing resources and their associated limits for the name and name_prefix lengths?

Details

Some resources have limits imposed on how long the name or name_prefix can be when being created in terraform. For aws_iam_role , for example, the name_prefix limit is 32 characters.

I sometimes have long values for the variables i use to populate the name prefix so I protect from errors by using substr like this:

var.name = "super-cool-unicorn-application"

var.environment = "staging"

resource "aws_iam_role" "task" {

...

name_prefix = substr("${var.name}-${var.environment}-task-", 0, 32)

...

}

However, I know that other resources allow for longer values for name_prefix (can’t think of one off the top of my head… will add it if i find it).

I’d like to use a reference for these lengths so I can allow my names and prefixes to be as long as possible.

Does such a reference exist? If not, is there a way to “mine” it out of the terraform and/or provider source code?

we have an id length limit parameter i believe

cc: @Jeremy G (Cloud Posse)

Sorry, we know of no comprehensive list of ID length limits. We did consider adding some pre-configured length limits but gave up because (a) we could not find such a list and (b) to the extent we did find limits, they were all over the place.

all over the place.

Agreed!

The null-label module that we use to generate all our ID fields does have a length limit field. If you find length limits on resource names, you can submit a PR or even just open an issue to set that length on the module that creates that resource.

Of course you can also use it directly.

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - GitHub - cloudposse/terraform-null-label: Terraform Module to define a consistent naming conven…
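Using null-label directly for the IAM role case above might look like the following sketch. The `id_length_limit` input is the length limit field mentioned above; the version pin and the label values are assumptions for illustration.

```hcl
module "task_label" {
  source  = "cloudposse/label/null"
  version = "0.24.1" # assumption: pin to whichever release you actually use

  name       = "super-cool-unicorn-application"
  stage      = "staging"
  attributes = ["task"]

  # Keep the id to 31 characters so that a trailing "-" still fits
  # within the 32-character name_prefix limit quoted above.
  id_length_limit = 31
}

locals {
  # Could be passed to aws_iam_role.name_prefix in place of the substr() call.
  task_role_name_prefix = "${module.task_label.id}-"
}
```

Compared with a plain `substr`, the module handles the shortening itself, so two long names with a common prefix are less likely to collapse into the same id.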

Live HashiCorp Boundary demo right now!

https://sweetops.slack.com/archives/CB3579ZM3/p1629311407009500

@here our devops #office-hours are starting now! join us on zoom to talk shop url: cloudposse.zoom.us/j/508587304 password: sweetops

v1.0.5 1.0.5 (August 18, 2021) BUG FIXES: json-output: Add an output change summary message as part of the terraform plan -json structured logs, bringing this format into parity with the human-readable UI. (#29312) core: Handle null nested single attribute values (#29411)…

Extend the outputs JSON log message to support an action field (and make the type and value fields optional). This allows us to emit a useful output change summary as part of the plan, bringing the…

Null NestingSingle attributes were not being handled in ProposedNew. Fixes #29388

I see a context.tf referred to in many of the CloudPosse modules. Is that a file I should copy and commit to my project unaltered?

yes you can do that

Excellent, thanks

it’s inside every module already

you don’t need to copy it if you are just using the modules

just provide the namespace, env, stage, name

Ok, that’s where I was confused I guess. Here’s an example of an example (heh) where I see it added.

https://github.com/cloudposse/terraform-aws-datadog-integration/tree/master/examples/rds-enhanced

Terraform module to configure Datadog AWS integration - terraform-aws-datadog-integration/examples/rds-enhanced at master · cloudposse/terraform-aws-datadog-integration

those vars are inside context.tf

the main purpose of the file is to provide common inputs to all modules w/o repeating them everywhere

(also, note, we’ve just released an updated version that adds some more fields)
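When context.tf is copied into a project, the shared inputs are usually forwarded to each module through `module.this.context`. A sketch, assuming context.tf sits unaltered in the same directory; the bucket name is hypothetical and 0.42.0 is simply the release linked earlier in this log.

```hcl
# Assumes context.tf (from cloudposse/terraform-null-label) is present in this
# directory; it declares module "this" plus the namespace/stage/name variables.
module "bucket" {
  source  = "cloudposse/s3-bucket/aws"
  version = "0.42.0" # the release linked earlier in this log

  name    = "assets" # hypothetical; overrides the name carried by the context
  context = module.this.context
}
```

If the project only consumes the modules and sets namespace, stage and name on each call, the file does not need to be copied at all, as noted above.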

Terraform v1.0.5 now adds summary in JSON plan, I just implemented this using JQ last week

2021-08-19

Does anyone know how to add CloudWatch as a data source in Grafana using this Helm chart https://github.com/prometheus-community/helm-charts?

Prometheus community Helm charts. Contribute to prometheus-community/helm-charts development by creating an account on GitHub.

Hi all, I have a question about creating service connections resources on Azure Devops. Terraform stores the personal_access_token value in the state file and I would like to avoid that. I was wondering if there is a better and more secure approach of creating this resource?

resource "azuredevops_serviceendpoint_github" "serviceendpoint_github" {

project_id = azuredevops_project.project.id

service_endpoint_name = "xyz"

auth_personal {

personal_access_token = "TOKEN"

}

}

Hello @Alencar Junior, if you store the personal_access_token in an Azure Key Vault, you can then read it securely with a data source.

data.azurerm_key_vault_secret.example.value

https://registry.terraform.io/providers/hashicorp/azurerm/latest/docs/data-sources/key_vault_secret

Thanks @Pierre-Yves, I will try that.

Hi @Pierre-Yves, I’ve created a key vault secret and I am using a data source to fetch its value for my service connection. However, when I run the command terraform state pull I can see the personal_access_token value as plain text. Am I missing something?

when writing to Azure Key Vault, you should set the azurerm_key_vault_secret option to:

content_type = "password"

variables should have the parameter “sensitive = true” https://learn.hashicorp.com/tutorials/terraform/sensitive-variables

Protect sensitive values from accidental exposure using Terraform sensitive input variables. Provision a web application with Terraform, and mark input variables as sensitive to restrict when Terraform prints them out to the console.
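A minimal sketch of the `sensitive = true` suggestion, for reference. The variable and output names are hypothetical. Keep in mind that `sensitive` only redacts the value in CLI output (plan, apply, output); the value is still written to the state file, so the state backend itself has to be protected.

```hcl
# Hypothetical variable name, used only for illustration.
variable "github_pat" {
  description = "GitHub personal access token"
  type        = string
  sensitive   = true # redacted in plan/apply output, NOT in state
}

# An output that exposes a sensitive value must be marked sensitive too,
# otherwise Terraform refuses to plan.
output "github_pat" {
  value     = var.github_pat
  sensitive = true
}
```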

Hi @Pierre-Yves, this is how I’m fetching the secrets:

data "azurerm_key_vault" "existing" {

name = "test-kv"

resource_group_name = "test-rg"

}

data "azurerm_key_vault_secret" "github" {

name = "github-pat"

key_vault_id = data.azurerm_key_vault.existing.id

}

resource "azuredevops_serviceendpoint_github" "serviceendpoint_ghes_1" {

project_id = data.azuredevops_project.project.id

service_endpoint_name = "Test GitHub Personal Access Token"

auth_personal {

personal_access_token = data.azurerm_key_vault_secret.github.value

}

}

I set the secret type as password. However, it seems the secret value will always be stored in the raw state as plain text.

This is what I get when running terraform state pull :

{

"mode": "managed",

"type": "azuredevops_serviceendpoint_github",

"name": "serviceendpoint_ghes_1",

"provider": "provider[\"registry.terraform.io/microsoft/azuredevops\"]",

"instances": [

{

"schema_version": 0,

"attributes": {

"auth_oauth": [],

"auth_personal": [

{

"personal_access_token": "[PLAIN-TEXT-VALUE]",

"personal_access_token_hash": "......"

}

],

"authorization": {

"scheme": "Token"

},

"description": "Managed by Terraform",

"id": "..........",

"project_id": "........",

"service_endpoint_name": "Test GitHub Personal Access Token",

"timeouts": null

},

"sensitive_attributes": [

[

{

"type": "get_attr",

"value": "auth_personal"

}

]

],

sorry, I don’t have much more information on it. You might want to encrypt/decrypt your secrets; have a look at the rsadecrypt function and encryption

Should I be able to do this?

module "foo" {

source = "git::https://foo.com/bar.git?ref=${var.module_version}"

Specifically, pulling the ref from a TF variable

only if you’re using terragrunt

well then

we “should be able to” but terraform doesn’t let us

Part of it is the module is cached during init, which doesn’t take vars, so the dereference needs to happen outside of TF
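To make that concrete, a small sketch of what plain Terraform does accept: the `source` argument has to be a literal string, so the ref is written out directly. The tag `v1.2.3` is a placeholder, not a real release of that repo.

```hcl
module "foo" {
  # Must be a literal string: variables, locals, and other expressions
  # are rejected here because the module is fetched during `terraform init`.
  source = "git::https://foo.com/bar.git?ref=v1.2.3" # placeholder tag
}
```

Changing the version then means editing that literal, or generating it from outside Terraform with a wrapper such as Terragrunt, as mentioned above.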

Ahhhhh yes, makes sense @loren

2021-08-20

SOLVED Hi folks, I’m using CloudPosse’s ECS web app module along with SSM Parameter store. I have a bunch of secrets and variables in .tfvars that I have used to create and pass them on SSM and encrypt with KMS. But I’m not sure how to actually pass those from SSM to ECS Task Definition for the containers? I couldn’t figure it out from the modules and I need it to be secure. Would appreciate your guidance.

How do I provide it to this

Based on the Foqal app’s suggestions, it led me to this page https://alto9.com/2020/05/21/aws-ssm-parameters-as-ecs-environment-variables/#comments

If I understand correctly I need to have this block for every variable that I want to pass in the container

variable "secrets" {

type = list(object({

name = string

valueFrom = string

}))

description = "The secrets to pass to the container. This is a list of maps"

default = null

}

For example this is the type of variables I have in .tfvars

directory_api_parameter_write = [

{

name = "DB_PASSWORD"

value = "password"

type = "SecureString"

overwrite = "true"

description = "Issuer Directory"

},

{

name = "DB_USER"

value = "sa"

type = "String"

overwrite = "true"

description = "Issuer Directory"

}

]

How do I structure it? Sorry I couldn’t find an example in the repos

SOLUTION for anyone interested this is how I did this.

- Provide your secrets in a list in .tfvars, for example:

directory_api_parameter_write = [

{

name = "DB_PASSWORD"

value = "password"

type = "SecureString"

overwrite = "true"

description = "Directory API"

},

{

name = "DB_USER"

value = "sa"

type = "String"

overwrite = "true"

description = "Directory API"

}

]

- In variables.tf declare that variable:

variable "directory_api_parameter_write" {

type = list(map(string))

}

- Reference this variable in the task definition module call as var.directory_api_parameter_write

but now the task def will have all your secrets in plain text

or are they showing up as secrets? the reason I point this out is because ECS now supports secrets arn mapping in the task Def but a year ago it did not

indeed it does. I don’t use a module for the task def, instead opting for a template. but mine is formatted like this:

...

"secrets": [

{

"name": "VARIABLE_NAME",

"valueFrom": "arn:aws:ssm:${logs_region}:${account_id}:parameter/${name}/${environment}/VARIABLE_NAME"

},

...

]

that is exactly what I was referring to @managedkaos

in the old days you needed to use something like chamber as an ENTRYPOINT to feed the ENV variables and such

@jose.amengual @managedkaos indeed they are now showing as plain text in the task definition page on AWS. And they’re not being treated as secrets or valueFrom but instead as value.

@managedkaos I can’t change it to a template at this point because I need to demo this in the upcoming week. I need to use CloudPosse’s module as it’s how everything is structured

What do you suggest I do? The corresponding variables for these two are these

I tried passing this from .tfvars, but terraform gives an error that it doesn’t accept quotes in valueFrom, and it becomes invalid when I remove the quotes

ClientException: The Systems Manager parameter name specified for secret TEST_DB_ENC_KEY is invalid. The parameter name can be up to 2048 characters and include the following letters and symbols: a-zA-Z0-9_.-,

@managedkaos @jose.amengual Really out of options. I think this should be very easy, but I must be missing a big part. I got so used to CloudPosse’s modules and the structure and I want to use it, but I’m very stuck at this point. Need your guidance

Also @managedkaos if a template is the best way to go, in that case do I only need to include the template instead of the container_definitions part in the module? Can you please show me an example of the template file and its variables/tfvars, and how they’re passed to the template?

Systems Manager parameters are path+name like /myapp/servicea/mysecret

even if you have one layer it is still /mysecret

and they need to exist beforehand obviously

so the first time I’m actually writing the variables to parameter store I need to provide the path? I actually went ahead and changed them to this. Is this correct?

But again terraform yells at me with the new name

Error: ClientException: The Systems Manager parameter name specified for secret /opdev/issuer/DB_PASSWORD is invalid. The parameter name can be up to 2048 characters and include the following letters and symbols: a-zA-Z0-9_.-,

Is it something to do with how secrets is configured in the module itself?

no, you need name and value

name is just the name that will end up as an env var

the valueFrom is the arn of the secret I believe

I have an example but I’m not at my computer
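In the meantime, a rough sketch of that name/valueFrom split. All names here (`db_password`, the `/myapp/servicea/...` path, `container_secrets`) are hypothetical, and how the list is handed to the task definition module depends on that module's inputs.

```hcl
variable "db_password" {
  type      = string
  sensitive = true
}

# The SSM parameter name is the full path and starts with a slash.
resource "aws_ssm_parameter" "db_password" {
  name  = "/myapp/servicea/DB_PASSWORD" # hypothetical path
  type  = "SecureString"
  value = var.db_password
}

locals {
  # Shape expected by the ECS "secrets" field:
  #   name      = the env var name the container will see
  #   valueFrom = the parameter ARN, which ECS resolves at task start
  container_secrets = [
    {
      name      = "DB_PASSWORD"
      valueFrom = aws_ssm_parameter.db_password.arn
    }
  ]
}
```

Because `valueFrom` references the resource's `arn` attribute, Terraform creates the parameter first and there is no need to copy the ARN by hand.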

@jose.amengual I think I got somewhere with your comment. So I took the arn output of SSM

issuer_parameter_write = [

{

name = "/opdev/issuer/DB_ENC_KEY"

value = "secretkey123"

type = "SecureString"

},

And added it to the secrets variable that is being passed to the container definition as such

issuer_secrets = [

{

name : "/dk/ssi_api/DB_ENC_KEY"

valueFrom : "arn:aws:ssm:eu-west-1:1111123213123:parameter/opdev/issuer/DB_ENC_KEY"

}

]

On the AWS Task Definition UI it is now showing the arn instead of the plain text value itself.

It no longer gave me that notorious error! It just yelled at me saying that

The secret name must be unique and not shared with any new or existing environment variables set on the container, such as '/opdev/issuer/DB_ENC_KEY'

And that’s because I have already registered that var with that name on SSM using that parameter_write for the SSM module

But I wouldn’t know the arn if I hadn’t created it first. For the sake of automation I will maybe need to create a function to take the arn outputs and pass them to the consumer module. What do you suggest @jose.amengual? The output currently is this

name_list = tolist([

"/opdev/issuer/DB_ENC_KEY",

"/opdev/issuer/DB_SIG_KEY",

"/opdev/issuer//NODE_CONFIG_DIR",

"/opdev/issuer/NODE_CONFIG_ENV",

])

ssm-arn = tomap({

"/opdev/issuer/DB_ENC_KEY" = "arn:aws:ssm:eu-west-1:111112312312:parameter/opdev/issuer/DB_ENC_KEY"

"/opdev/issuer/DB_SIG_KEY" = "arn:aws:ssm:eu-west-1:111112312312:parameter/opdev/issuer/DB_SIG_KEY"

"/opdev/issuer/NODE_CONFIG_DIR" = "arn:aws:ssm:eu-west-1:111112312312:parameter/opdev/issuer/NODE_CONFIG_DIR"

"/opdev/issuer/NODE_CONFIG_ENV" = "arn:aws:ssm:eu-west-1:111112312312:parameter/opdev/issuer/NODE_CONFIG_ENV"

})
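One possible way to turn an output like this into the `secrets` list on the consumer side, as a sketch only. It assumes the env var name should be the last path segment of the parameter name, and the local names `ssm_arns` and `issuer_secrets` are made up; in a real setup `ssm_arns` would come from the module output rather than being written inline.

```hcl
locals {
  # Stand-in for the module's map output (parameter name => ARN),
  # using two of the entries shown above.
  ssm_arns = {
    "/opdev/issuer/DB_ENC_KEY" = "arn:aws:ssm:eu-west-1:111112312312:parameter/opdev/issuer/DB_ENC_KEY"
    "/opdev/issuer/DB_SIG_KEY" = "arn:aws:ssm:eu-west-1:111112312312:parameter/opdev/issuer/DB_SIG_KEY"
  }

  # basename() keeps only the last path segment, so
  # "/opdev/issuer/DB_ENC_KEY" becomes the env var name "DB_ENC_KEY".
  issuer_secrets = [
    for path, arn in local.ssm_arns : {
      name      = basename(path)
      valueFrom = arn
    }
  ]
}
```

This keeps the slash-prefixed path in SSM while the container sees a plain env var name.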

Possible Solution: added a new local called arn_list_map to outputs.tf in the ssm-parameter-store module to combine name_list and arn_list

# Splitting and joining, and then compacting a list to get a normalised list

locals {

name_list = compact(concat(keys(local.parameter_write), var.parameter_read))

value_list = compact(

concat(

[for p in aws_ssm_parameter.default : p.value], data.aws_ssm_parameter.read.*.value

)

)

arn_list = compact(

concat(

[for p in aws_ssm_parameter.default : p.arn], data.aws_ssm_parameter.read.*.arn

)

)

# Combining name_list and arn_list and mapping them together to produce output as a single object.

arn_list_map = [

for k, v in zipmap(local.name_list, local.arn_list) : {

name = k

valueFrom = v

}

]

}

output "names" {

# Names are not sensitive

value = local.name_list

description = "A list of all of the parameter names"

}

output "values" {

description = "A list of all of the parameter values"

value = local.value_list

sensitive = true

}

output "map" {

description = "A map of the names and values created"