#terraform (2021-10)

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2021-10-01

Smart and secure private registry and remote state backend for Terraform.

https://www.hashicorp.com/blog/announcing-terraform-aws-cloud-control-provider-tech-preview no more waiting for resources to be supported, now automatically generated - let

This new provider for HashiCorp Terraform — built around the AWS Cloud Control API — is designed to bring new services to Terraform faster.

automatically generated …. as long as the team wrote their cloudformation support

Oh, I guess something not clear here

any resource type published to the CloudFormation Public Registry exposes a standard JSON schema and can be acted upon by this interface

the funny bit is that there are also resources there for running terraform…

After a bit more reading, it appears that AWS CC (cloud control) does not use cloudformation behind the scenes. Rather CC is just an interface to the AWS API for creating and interacting with AWS resources.

In fact, AWS CC does not manage resources, let alone a stack of resources; you can list and update any/all resources, not just those created with AWS CC.

So there is no notion of “importing a resource to be under AWS CC control”. AWS CC does not manage resources, and does not use CloudFormation to create or destroy them.

The quoted text just says that, because of how AWS CC and CF were implemented, resource types made accessible to AWS CC are automatically available in cloudformation.

In any case, AWS CC probably lowers the amount of work required by infra management tools to support AWS resource management, because of the unified json-based API that it provides. Eg Crossplane and ACK (AWS Controllers for Kubernetes) might be able to accelerate their coverage of aws resources dramatically through the use of AWS CC.

this sounds amazing

this is pretty much how ARM has worked on Azure for ages - kind of funny that AWS finally went there

basically it will be APIs that are well structured and defined generically enough that you can pretty much generate the required bits for cloudformation off the top of them

i was chatting with Glenn Gore 3+ years ago about this (and also the ui standardisation across services) so it’s been a long time coming

resource types made accessible to AWS CC are automatically available in cloudformation.

isn’t it the other way around? They add resources to cloudformation and they are automatically in AWS CC because they derive the schema from Cloudformation

Probably. The point (for me anyways) is that CC does not use CF in any way that matters to using CC from TF.

No, it just depends on aws services teams writing the CF registry types and resources for the service and for new releases

Or it depends on third-parties like hashicorp writing and publishing third-party CF registry types for aws services.

All of which benefits CF and AWS even more than hashicorp

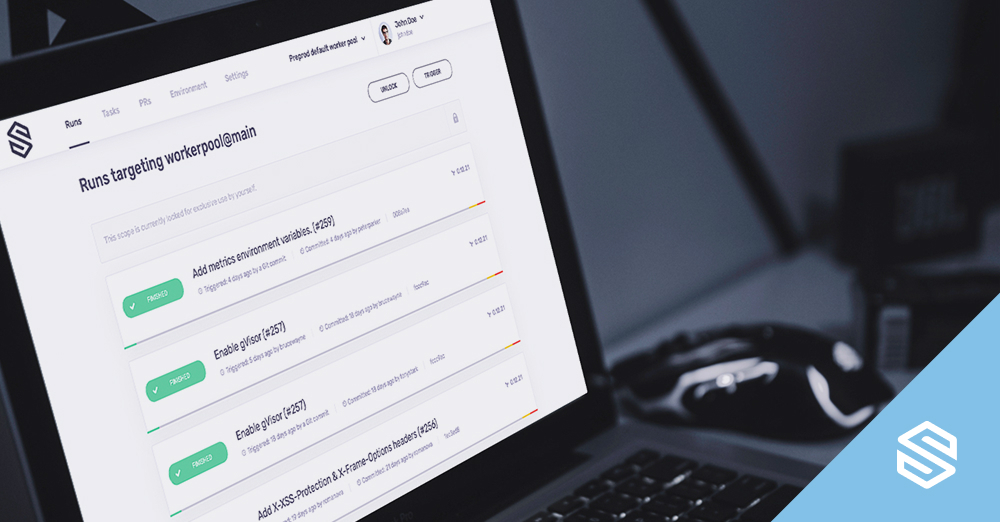

For those using TFE: We have recently had to integrate our product (Cloudrail) with it. The integration was a bit wonky to begin with, but Javier Ruiz Jimenez just cleaned it up very nicely. It’s actually beautiful. I think it’s worth looking at if you’re thinking of using Sentinel policies to integrate tools into TFE (irrespective of the usage of Cloudrail in there).

Code repo: https://github.com/indeni/cloudrail-tfe-integration Javier’s PR: https://github.com/indeni/cloudrail-tfe-integration/pull/3

2021-10-04

Hi all, can someone help me to translate this logic into “WAF rule language” please?

IF url contains production AND NOT (xx.production.url1.com OR yy.production.url2.com) = pass without examining IPs

ELSEIF request has (xx.production.url1.com OR yy.production.url2.com) AND one of the IPs in the list = pass

ELSE block all

(the ip filter list has already been prepared and tested ok). Thanks!

I’m not very good at WAF rules, but it seems like WAF can not inspect the URL itself, only the UriPath. (I may be very wrong here though, but I was unable to find it possible to inspect the server name itself).

So, in case you use the same load balancer / cloudfront distribution / or whatever it is, for multiple domain names, I’d suggest finding a way to pass the server name as a header through to WAF, and then use the Single Header inspection statement to match for the server name. Then you combine and_statement, or_statement to get what you want.

Possible Rule priority 0 (if the Server-Name header is present in the request):

• this rule will ALLOW the request if the server name is xx.production.url1.com or yy.production.url1.com and the IP is from the allowed list

action {
  allow {}
}

statement {
  and_statement {
    # each operand of and_statement / or_statement sits in its own statement block
    statement {
      or_statement {
        statement {
          byte_match_statement {
            positional_constraint = "CONTAINS"
            search_string         = "xx.production.url1.com"
            field_to_match {
              single_header {
                name = "server-name" # header names are given in lower case
              }
            }
            text_transformation {
              priority = 0
              type     = "NONE"
            }
          }
        }
        statement {
          byte_match_statement {
            positional_constraint = "CONTAINS"
            search_string         = "yy.production.url1.com"
            field_to_match {
              single_header {
                name = "server-name"
              }
            }
            text_transformation {
              priority = 0
              type     = "NONE"
            }
          }
        }
      }
    }
    statement {
      ip_set_reference_statement {
        arn = ""
      }
    }
  }
}

Rule priority 1

• this rule will BLOCK all requests to xx.production.url1.com and yy.production.url1.com

action {
  block {}
}

statement {
  or_statement {
    statement {
      byte_match_statement {
        positional_constraint = "CONTAINS"
        search_string         = "xx.production.url1.com"
        field_to_match {
          single_header {
            name = "server-name" # header names are given in lower case
          }
        }
        text_transformation {
          priority = 0
          type     = "NONE"
        }
      }
    }
    statement {
      byte_match_statement {
        positional_constraint = "CONTAINS"
        search_string         = "yy.production.url1.com"
        field_to_match {
          single_header {
            name = "server-name"
          }
        }
        text_transformation {
          priority = 0
          type     = "NONE"
        }
      }
    }
  }
}

Rule priority 2

• ALLOW requests with production in the UriPath

(this rule will be executed after the first 2, so your conditions are satisfied)

action {
  allow {}
}

statement {
  byte_match_statement {
    positional_constraint = "CONTAINS"
    search_string         = "production"
    field_to_match {
      uri_path {}
    }
    text_transformation {
      priority = 0
      type     = "NONE"
    }
  }
}

Hope this helps even if just a little

Again, I’m not very good at WAF rules and if any of the nested statements don’t work, then a different strategy should be used. But basically, try breaking all rules into the smaller ones, and come up with an efficient strategy of prioritizing Allow / Block rules to get what you need.

I have no words to thank you enough, tomorrow morning i am going to try that out and let you know

Happy to help! Please let me know if you will have more questions, we’ll try to find a solution together.

Hey @Almondovar - to make things simple, the server name will be in the Host header, so this is the one you will want to match with the server names.

Unless the client uses HTTP/1.0 (which I would suggest avoiding), the Host header is mandatory for HTTP/1.1 and HTTP/2, so you should always have it in the request.
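Following the Host header suggestion, one of the byte matches above could inspect the Host header instead of a custom one. This is only a sketch of a single rule block meant to sit inside an aws_wafv2_web_acl resource; the rule name and metric name are placeholders that do not come from the thread, and the LOWERCASE transformation is an assumption made because hostnames are case-insensitive.

```hcl
# Sketch only: one rule block for an aws_wafv2_web_acl resource.
rule {
  name     = "match-host-xx-production" # hypothetical name
  priority = 0

  action {
    allow {}
  }

  statement {
    byte_match_statement {
      positional_constraint = "CONTAINS"
      search_string         = "xx.production.url1.com"

      field_to_match {
        single_header {
          name = "host" # standard Host header, written in lower case
        }
      }

      text_transformation {
        priority = 0
        type     = "LOWERCASE" # assumption: normalise case before matching
      }
    }
  }

  visibility_config {
    cloudwatch_metrics_enabled = true
    metric_name                = "match-host-xx-production" # hypothetical
    sampled_requests_enabled   = true
  }
}
```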

2021-10-05

Hi everyone! I am trying to create a VPC endpoint service (PrivateLink) so I can access my application from the base VPC to a custom VPC in AWS. I am using Terraform with the jsondecode function to interpret my configuration.

The issue is that Terraform tries to create the VPC endpoint service before the network load balancer is created. How can I pass a depends_on condition through the JSON so that it waits and creates the endpoint service only after the NLB exists?

Thanks

"vpc_endpoint_service": {
  "${mdmt_prefix}-share-internal-${mdmt_env}-Ptlink": {
    "acceptance_required": "true",
    "private_dns_name": "true",
    "network_load_balancer_arns": "${mdmt_prefix}-share-internal-${mdmt_env}-nlb.arn",
    "iops": "100",
    "tags": {
      "Name": "${mdmt_prefix}-share-internal-${mdmt_env}-PVT"
    }
  }
},
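Terraform orders resources by the references between them, so an ARN passed as a plain string from JSON hides the dependency on the load balancer. A minimal sketch of the usual approach, assuming both resources live in the same configuration; the resource names and the subnet_ids variable are illustrative and not taken from the question.

```hcl
variable "subnet_ids" {
  type = list(string) # hypothetical input
}

resource "aws_lb" "share_internal" {
  name               = "share-internal-nlb" # hypothetical name
  internal           = true
  load_balancer_type = "network"
  subnets            = var.subnet_ids
}

resource "aws_vpc_endpoint_service" "share_internal" {
  acceptance_required = true

  # Referencing the attribute (instead of a string built from the JSON)
  # creates an implicit dependency: the NLB is created first.
  network_load_balancer_arns = [aws_lb.share_internal.arn]
}
```

If the load balancers are themselves created with for_each from the decoded JSON, the JSON could carry only the key of the NLB, and the endpoint service could look it up with something like aws_lb.this[each.value.nlb_key].arn (names hypothetical).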

Hey all! I’m trying to create a user defined map_roles for my eks using vars:

map_roles = [
  {
    "groups": ["system:bootstrappers", "system:nodes"],
    "rolearn": "${module.eks.worker_iam_role_arn}",
    "username": "system:node:{{EC2PrivateDNSName}}"
  },
  var.map_roles
]

map_users = var.map_users

map_accounts = var.map_accounts

variable "map_roles" {
  description = "Additional IAM roles to add to the aws-auth configmap."
  type = list(object({
    rolearn  = string
    username = string
    groups   = list(string)
  }))
  default = [
    {
      rolearn  = "arn:aws:iam::xxxxxxxxxx:role/DelegatedAdmin"
      username = "DelegatedAdmin"
      groups   = ["system:masters"]
    }
  ]
}

When I don’t add the default node permissions they get deleted on the next apply, and I wish to add more roles of my own. But that returns an error:

The given value is not suitable for child module variable "map_roles" defined at .terraform/modules/eks/variables.tf:70,1-21: element 1: object required.

I believe it is because I am creating a list(object) inside a list(object). Can I have your help, please?
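The error message is consistent with that reading: the second element of the outer list is itself a list rather than an object. One way to flatten the two is concat(). The sketch below is self-contained for illustration; worker_iam_role_arn is a hypothetical variable standing in for module.eks.worker_iam_role_arn.

```hcl
variable "worker_iam_role_arn" {
  type = string # stand-in for module.eks.worker_iam_role_arn
}

variable "map_roles" {
  type = list(object({
    rolearn  = string
    username = string
    groups   = list(string)
  }))
  default = []
}

locals {
  node_role = {
    rolearn  = var.worker_iam_role_arn
    username = "system:node:{{EC2PrivateDNSName}}"
    groups   = ["system:bootstrappers", "system:nodes"]
  }

  # concat() joins two lists into one flat list of objects,
  # instead of nesting var.map_roles as a single element.
  map_roles = concat([local.node_role], var.map_roles)
}

output "map_roles" {
  value = local.map_roles
}
```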

Hi everyone, I’m new to Terraform and working on creating AWS ECR repositories with it. How can I apply the same template, such as an ecr_lifecycle_policy or a repository_policy, to many ECR repositories? Can someone help me with this?

resource "aws_ecr_lifecycle_policy" "lifecycle" {
  repository = aws_ecr_repository.client-dashboard.name

  policy = <<EOF
{
  "rules": [
    {
      "rulePriority": 1,
      "description": "Keep only 5 tagged images, expire all others",
      "selection": {
        "tagStatus": "tagged",
        "tagPrefixList": [ "build" ],
        "countType": "imageCountMoreThan",
        "countNumber": 5
      },
      "action": { "type": "expire" }
    },
    {
      "rulePriority": 2,
      "description": "Keep only 5 tagged images, expire all others",
      "selection": {
        "tagStatus": "tagged",
        "tagPrefixList": [ "runtime" ],
        "countType": "imageCountMoreThan",
        "countNumber": 5
      },
      "action": { "type": "expire" }
    },
    {
      "rulePriority": 3,
      "description": "Only keep untagged images for 7 days",
      "selection": {
        "tagStatus": "untagged",
        "countType": "sinceImagePushed",
        "countUnit": "days",
        "countNumber": 7
      },
      "action": { "type": "expire" }
    }
  ]
}
EOF
}

- Use a variable to hold a list of the repos you want to create; it would be a list of strings like ["repo1","repo2"].

- Create your repo resource with a for_each to loop over the set of the repo list, like for_each = toset(var.repolist).

- In the policy resource, use the same loop over the repo resources for the repository assignment.

i will try to get demo code in a bit.

Tqs

Applying this policy to N-number of repositories
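The three steps suggested above can be sketched roughly as follows. This is not the demo code promised in the thread; the resource labels are illustrative, and only one lifecycle rule is shown (written with jsonencode instead of a heredoc) to keep it short.

```hcl
variable "repolist" {
  type    = list(string)
  default = ["repo1", "repo2"]
}

# One repository per entry in the list
resource "aws_ecr_repository" "this" {
  for_each = toset(var.repolist)
  name     = each.value
}

# Same policy attached to every repository created above
resource "aws_ecr_lifecycle_policy" "this" {
  for_each   = aws_ecr_repository.this
  repository = each.value.name

  policy = jsonencode({
    rules = [
      {
        rulePriority = 1
        description  = "Only keep untagged images for 7 days"
        selection = {
          tagStatus   = "untagged"
          countType   = "sinceImagePushed"
          countUnit   = "days"
          countNumber = 7
        }
        action = { type = "expire" }
      }
    ]
  })
}
```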

2021-10-06

Howdy! So I am new to the community here. I am glad to be here. I am having some issues with https://github.com/cloudposse/terraform-aws-ecs-alb-service-task and https://github.com/cloudposse/terraform-aws-ecs-web-app I am trying to use it to deploy ecs services onto ecs instances but I am running into issues with the security group and network settings. The errors I keep receiving are here:

╷
│ Error: too many results: wanted 1, got 219
│
│   with module.apps["prism3-rmq-ecs-service"].module.ecs_alb_service_task.aws_security_group_rule.allow_all_egress[0],
│   on .terraform/modules/apps.ecs_alb_service_task/main.tf line 273, in resource "aws_security_group_rule" "allow_all_egress":
│  273: resource "aws_security_group_rule" "allow_all_egress" {
│
╵
╷
│ Error: error creating shf-prism3-rmq-ecs-service service: error waiting for ECS service (shf-prism3-rmq-ecs-service) creation: InvalidParameterException: The provided target group arn:aws:elasticloadbalancing:us-west-2:632720948474:targetgroup/shf-prism3-rmq-ecs-service/c732ab107ef2aacb has target type ip, which is incompatible with the bridge network mode specified in the task definition.
│
│   with module.apps["prism3-rmq-ecs-service"].module.ecs_alb_service_task.aws_ecs_service.default[0],
│   on .terraform/modules/apps.ecs_alb_service_task/main.tf line 399, in resource "aws_ecs_service" "default":
│  399: resource "aws_ecs_service" "default" {
│

I am not sure if there have been issues with this in the past. The version of terraform-aws-ecs-alb-service-task is 0.55.1 which is set in the terraform-aws-ecs-web-app. I am setting the network_mode to bridge and that is when I run into these errors. I also am excluding the pipeline stuff which we had to create our own fork in order to do so. I have also tried to hardcode the target_type to host for the targetgroup type but it keeps setting it to the default which is ip in the variables.tf Just wanted to reach out and see if there was any advice or direction inside the cloudposse collection for folks that don’t want to use the awsvpc/fargate solutions.

Terraform module which implements an ECS service which exposes a web service via ALB. - GitHub - cloudposse/terraform-aws-ecs-alb-service-task: Terraform module which implements an ECS service whic…

Terraform module that implements a web app on ECS and supports autoscaling, CI/CD, monitoring, ALB integration, and much more. - GitHub - cloudposse/terraform-aws-ecs-web-app: Terraform module that…

It would be helpful to see the inputs to resolve the first error. On the second: when using bridge mode you’re using the ECS host’s IP for the task and differentiating via port. The TG cannot register multiple tasks via IP because in bridge mode they share the same IP as the host. You thus need to change the TG target type to instance.
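For reference, this is roughly what a target group with the instance target type looks like when declared directly. It is a sketch with placeholder names and ports; how (or whether) the Cloud Posse modules expose this setting is not confirmed here.

```hcl
variable "vpc_id" {
  type = string
}

resource "aws_lb_target_group" "bridge_mode" {
  name     = "example-bridge-tg" # hypothetical name
  port     = 80
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  # With the bridge network mode, tasks are reached through the EC2 host and a
  # host port, so targets are registered by instance rather than by IP.
  target_type = "instance"
}
```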

The second is definitely more obvious than the first. Let me get the inputs I am using. I am using a yaml decode to a map and then iterating through the values in the map. Let me get the layers here.

module "apps" {
  source = "github.com/itsacloudlife/terraform-aws-ecs-web-app-no-pipeline?ref=add-task-placement-constraints"

  for_each = { for app in local.yaml_config.app : app.name => app }

  name = each.value.name
  namespace = var.namespace
  ecs_cluster_name = data.terraform_remote_state.ecs.outputs.ecs_cluster.ecs_cluster_name
  ecs_cluster_arn = data.terraform_remote_state.ecs.outputs.ecs_cluster.ecs_cluster_arn
  ecs_private_subnet_ids = data.terraform_remote_state.network.outputs.app_subnets
  vpc_id = data.terraform_remote_state.network.outputs.vpc_id
  alb_security_group = ""
  alb_arn_suffix = module.alb[each.value.type].alb_arn_suffix
  alb_ingress_unauthenticated_hosts = [each.value.host]
  alb_ingress_unauthenticated_listener_arns = [module.alb[each.value.type].https_listener_arn]
  container_image = each.value.name
  container_cpu = each.value.container_cpu
  container_memory = each.value.container_memory
  container_memory_reservation = each.value.container_memory_reservation
  container_port = each.value.container_port
  container_environment = each.value.container_environment
  secrets = each.value.secrets
  port_mappings = [each.value.port_mappings]
  desired_count = each.value.desired_count
  launch_type = each.value.launch_type
  aws_logs_region = each.value.aws_logs_region
  log_driver = each.value.log_driver
  ecs_alarms_enabled = each.value.ecs_alarms_enabled
  ecs_alarms_cpu_utilization_high_threshold = each.value.ecs_alarms_cpu_utilization_high_threshold
  ecs_alarms_cpu_utilization_high_evaluation_periods = each.value.ecs_alarms_cpu_utilization_high_evaluation_periods
  ecs_alarms_cpu_utilization_high_period = each.value.ecs_alarms_cpu_utilization_high_period
  ecs_alarms_cpu_utilization_low_threshold = each.value.ecs_alarms_cpu_utilization_low_threshold
  ecs_alarms_cpu_utilization_low_evaluation_periods = each.value.ecs_alarms_cpu_utilization_low_evaluation_periods
  ecs_alarms_cpu_utilization_low_period = each.value.ecs_alarms_cpu_utilization_low_period
  ecs_alarms_memory_utilization_high_threshold = each.value.ecs_alarms_memory_utilization_high_threshold
  ecs_alarms_memory_utilization_high_evaluation_periods = each.value.ecs_alarms_memory_utilization_high_evaluation_periods
  ecs_alarms_memory_utilization_high_period = each.value.ecs_alarms_memory_utilization_high_period
  ecs_alarms_memory_utilization_low_threshold = each.value.ecs_alarms_memory_utilization_low_threshold
  ecs_alarms_memory_utilization_low_evaluation_periods = each.value.ecs_alarms_memory_utilization_low_evaluation_periods
  ecs_alarms_memory_utilization_low_period = each.value.ecs_alarms_memory_utilization_low_period
  ecr_scan_images_on_push = each.value.ecr_scan_images_on_push
  autoscaling_enabled = each.value.autoscaling_enabled
  autoscaling_dimension = each.value.autoscaling_dimension
  autoscaling_min_capacity = each.value.autoscaling_min_capacity
  autoscaling_max_capacity = each.value.autoscaling_max_capacity
  autoscaling_scale_up_adjustment = each.value.autoscaling_scale_up_adjustment
  autoscaling_scale_up_cooldown = each.value.autoscaling_scale_up_cooldown
  autoscaling_scale_down_adjustment = each.value.autoscaling_scale_down_adjustment
  autoscaling_scale_down_cooldown = each.value.autoscaling_scale_down_cooldown
  poll_source_changes = each.value.poll_source_changes
  authentication_type = each.value.authentication_type
  entrypoint = [each.value.entrypoint]
  ignore_changes_task_definition = each.value.ignore_changes_task_definition
  task_placement_constraints = [each.value.task_placement_constraints]
  network_mode = each.value.network_mode
  #task_role_arn = each.value.task_role_arn
  #ecs_service_role_arn = each.value.ecs_service_role_arn
}

My guess is the container port or port mappings variable is incorrect. Do either of those get used to build the security group rule?

resource "aws_security_group" "ecs_service" {
  count       = local.enabled && var.network_mode == "awsvpc" ? 1 : 0
  vpc_id      = var.vpc_id
  name        = module.service_label.id
  description = "Allow ALL egress from ECS service"
  tags        = module.service_label.tags

  lifecycle {
    create_before_destroy = true
  }
}

resource "aws_security_group_rule" "allow_all_egress" {
  count             = local.enabled && var.enable_all_egress_rule ? 1 : 0
  type              = "egress"
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
  security_group_id = join("", aws_security_group.ecs_service.*.id)
}

I don’t believe so. In the terraform-aws-ecs-alb-service-task this is what it looks like ^
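One possible reading of the first error, not confirmed in the thread: in the quoted module code the security group is only created when network_mode is awsvpc, while the egress rule does not check the network mode, so in bridge mode security_group_id would resolve to an empty string. If that is the cause, setting enable_all_egress_rule to false from the caller may avoid it. The sketch below shows the same idea as a guard on both resources; it is a simplified stand-alone illustration with a placeholder name, not the module's actual code.

```hcl
variable "network_mode" {
  type    = string
  default = "bridge"
}

variable "enable_all_egress_rule" {
  type    = bool
  default = true
}

variable "vpc_id" {
  type = string
}

locals {
  # Only awsvpc tasks get their own security group
  create_sg = var.network_mode == "awsvpc"
}

resource "aws_security_group" "ecs_service" {
  count  = local.create_sg ? 1 : 0
  vpc_id = var.vpc_id
  name   = "example-ecs-service" # hypothetical name
}

resource "aws_security_group_rule" "allow_all_egress" {
  # The rule is only created when the group it attaches to exists
  count             = local.create_sg && var.enable_all_egress_rule ? 1 : 0
  type              = "egress"
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
  security_group_id = aws_security_group.ecs_service[0].id
}
```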

If I set the network_mode to awsvpc it will deploy the service but then it just doesn’t want to allocate to the ecs instance that is available on the cluster.

v1.1.0-alpha20211006 1.1.0 (Unreleased) UPGRADE NOTES:

Terraform on macOS now requires macOS 10.13 High Sierra or later; Older macOS versions are no longer supported.

The terraform graph command no longer supports -type=validate and -type=eval options. The validate graph is always the same as the plan graph anyway, and the “eval” graph was just an implementation detail of the terraform console command. The default behavior of creating a plan graph should be a reasonable replacement for both of the removed…

https://github.com/terraform-docs/terraform-docs/releases/tag/v0.16.0 terraform-docs release with very nice new features

Notable Updates Changelog Features f613750 Add ‘HideEmpy’ section bool flag 6f97f67 Add abitlity to partially override config from submodules de684ce Add public ReadConfig function 54dc0f5 Add recu…

2021-10-07

module "foundation" {
  source = "git::https://xyz/terraform-aws-foundation.git?ref=feature/hotfix"

  spec = local.data.spec

  depends_on = [module.iaas.aws_lb.loadbalancer]
}

How can I reference another module’s resources from within a module call like this, or can a module define multiple sources?
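A module block takes exactly one source, and resources inside another module cannot be addressed directly from outside it; they have to be exposed as outputs. A rough sketch of that pattern follows. The two parts belong in different files as noted in the comments, and the local paths, the output name, and the loadbalancer_arn input of the foundation module are all hypothetical.

```hcl
# --- inside the iaas module, e.g. modules/iaas/outputs.tf (hypothetical path) ---
output "loadbalancer_arn" {
  value = aws_lb.loadbalancer.arn
}

# --- in the root module ---
module "iaas" {
  source = "./modules/iaas" # hypothetical path
}

module "foundation" {
  source = "./modules/foundation" # hypothetical path

  # Passing the output creates an implicit dependency on the load balancer.
  # This assumes the foundation module declares a matching input variable.
  loadbalancer_arn = module.iaas.loadbalancer_arn

  # If no value needs to be passed, depends_on can reference the whole module,
  # but not an individual resource inside it:
  # depends_on = [module.iaas]
}
```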

Hi - I’m using what looks to be a really useful module - https://registry.terraform.io/modules/cloudposse/code-deploy/aws/latest?tab=inputs - thank you for this. Sadly, I’m trying to use your ec2_tag_filter input and we get a fail. I’m happy to create a PR to fix this if you can confirm the issue with the lookup - I’ve created a bug here: https://github.com/cloudposse/terraform-aws-code-deploy/issues/6 - do let me know if you’ll accept a PR for this - thank you! cc @Erik Osterman (Cloud Posse) who seems to be the author

Found a bug? Maybe our Slack Community can help. Describe the Bug I am trying to use your module for the following EC2 deployment. I get an issue when I try to use the ec2_tag_filter variable. When…

it seems to me that the following code: https://github.com/cloudposse/terraform-aws-code-deploy/blob/master/main.tf#L177-L184 has the ec2_tag_filter and ec2_tag_set the wrong way around - the lookup should be for the ec2_tag_filter object and our content needs to be the ec2_tag_set. If you can confirm this, then I’ll create a PR, or perhaps you can make this change directly for me?

Terraform module to provision AWS Code Deploy app and group. - terraform-aws-code-deploy/main.tf at master · cloudposse/terraform-aws-code-deploy

it’s a really nice and complete module - thank you - I was going to write my own until I looked at the Registry and saw this lurking.

@Stephen Tan I admit it’s been a while since using this module personally

I can’t look into the problem that you’re facing right now

it’s ok - I’ve got something working. If you can accept a PR then I’ll create one

@RB can you review https://github.com/cloudposse/terraform-aws-code-deploy/issues/6

I’ve created a PR to fix this issue here: https://github.com/cloudposse/terraform-aws-code-deploy/pull/7

what This is to fix a bug when using the ec2-tag-filters why The dynamic block for_each and map lookups are broken

Do I get the job?

oh lol i just created a pr too

haha

take your pick!

let’s go with yours

did you try yours out locally ?

yes, it works, but yours is simpler. I didn’t spot the list of list until later

I think I have some dyslexia occasionally

lol, no worries

not sure why we use the lookup or even how it works tbh which is why I removed it

lookup will look up a key and resolve it to the given default (null here) if the key isn’t there

unfortunately, since terraform doesn’t allow optional keys (yet), the code is not super useful

but at some point, the optional input object keys will be available and then the lookups will be more useful
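A small illustration of the lookup behaviour described above, using a made-up tag filter map rather than the module's real variable:

```hcl
locals {
  # Hypothetical filter with no "value" key
  tag_filter = {
    key  = "Environment"
    type = "KEY_ONLY"
  }

  # Key exists: the stored value is returned
  filter_key = lookup(local.tag_filter, "key", null) # "Environment"

  # Key is missing: the default passed as third argument (null) is returned
  filter_value = lookup(local.tag_filter, "value", null)
}

output "filter_key" {
  value = local.filter_key
}
```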

happy to use anything that works tbh

the code is merged and released as 0.1.2. let us know if this works

thanks again for the contribution!

thanks!

yes, that works now, although when applying, roles won’t attach, but I’ll debug that now

@RB - I’ve created a new PR here: https://github.com/cloudposse/terraform-aws-code-deploy/pull/9/files - not sure why my old commits are included in the PR though. I did a merge from upstream so I don’t get it.

what There is a but where the ARN string for the role is missing "/service-role" - the policy can't be created in the current state I have added the missing part. The module applies …

is that required in all cases? what if you didn’t want to use the service role?

hi - sorry for being so late to reply. Yes, I see, so the structure of the ARN will vary depending on your platform - ie ECS, EC2 or Lambda. Your ARN is constructed with just the ECS case in mind and this role assignment will fail if you try to use with EC2 or Lambda. https://docs.aws.amazon.com/codedeploy/latest/userguide/getting-started-create-service-role.html “The managed policy you use depends on the compute platform” the possible policy ARNs are:

aws iam attach-role-policy --role-name CodeDeployServiceRole --policy-arn arn:aws:iam::aws:policy/service-role/AWSCodeDeployRole

aws iam attach-role-policy --role-name CodeDeployServiceRole --policy-arn arn:aws:iam::aws:policy/service-role/AWSCodeDeployRoleForLambda

aws iam attach-role-policy --role-name CodeDeployServiceRole --policy-arn arn:aws:iam::aws:policy/AWSCodeDeployRoleForECS

Learn how to use the IAM console or the AWS CLI to create an IAM role that gives CodeDeploy permission to access to your instances.
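One way to express "the managed policy depends on the compute platform" in Terraform is a simple map keyed by platform. This is a sketch, not the change made in the PR; the variable names are hypothetical and the ARNs are the three listed above.

```hcl
variable "compute_platform" {
  type    = string
  default = "Server" # CodeDeploy compute platforms: "Server", "Lambda" or "ECS"
}

variable "role_name" {
  type = string # name of an existing CodeDeploy service role (hypothetical input)
}

locals {
  codedeploy_policy_arns = {
    Server = "arn:aws:iam::aws:policy/service-role/AWSCodeDeployRole"
    Lambda = "arn:aws:iam::aws:policy/service-role/AWSCodeDeployRoleForLambda"
    ECS    = "arn:aws:iam::aws:policy/AWSCodeDeployRoleForECS"
  }
}

resource "aws_iam_role_policy_attachment" "codedeploy" {
  role       = var.role_name
  policy_arn = local.codedeploy_policy_arns[var.compute_platform]
}
```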

I’ve updated the PR - sorry it’s so messy. I’ve also made another EC2 related change to do with making tagging work properly https://github.com/cloudposse/terraform-aws-code-deploy/pull/9

what The tagging for EC2 is broken as one could not add more than one tag to be selected previously. I have added a new var to fix this There is a bit where the ARN string for the role is missing …

2021-10-08

Hiya, QQ regarding the dynamic subnet module. I’m calling the module twice for different purposes. Once to create subnets for an EKS cluster, and another time for an EC2 instance. My VPC has one /16 cidr block… the problem is, when the module is called the second time for EC2, it tries to create the same subnets it created for the EKS cluster, because it doesn’t know what it’s already used from the cidr block.

I’m not sure how this would work tbh, and I’m wondering whether it’s above and beyond what the module is intended for.

I guess I’d have to add additional CIDRs to the VPC and use another CIDR the second time I call the dynamic subnet module?
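An alternative to adding a second CIDR to the VPC would be to carve the existing /16 into non-overlapping ranges and hand a different range to each module call. The sketch below only shows the arithmetic with cidrsubnet(); the 10.0.0.0/16 range is an example, and it assumes the subnet module accepts the range to subdivide as an input, which the thread suggests but does not spell out.

```hcl
locals {
  vpc_cidr = "10.0.0.0/16" # example range

  # Adding 1 bit to the prefix splits the /16 into two /17 halves
  eks_cidr = cidrsubnet(local.vpc_cidr, 1, 0) # 10.0.0.0/17
  ec2_cidr = cidrsubnet(local.vpc_cidr, 1, 1) # 10.0.128.0/17
}

output "eks_cidr" {
  value = local.eks_cidr
}

output "ec2_cidr" {
  value = local.ec2_cidr
}
```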

(i have not used the module…just brainstorming…)

is it possible to call the module once, and then reference those subnets as data where you need them again?

In my own use (again, without the module) I create a VPC in its own state and then use data sources to pull in the subnets, so only one module (the VPC module) is really trying to create subnets.
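A minimal sketch of reading existing subnets instead of creating them again, assuming a recent AWS provider that ships the aws_subnets data source; the Tier tag is a hypothetical way of selecting the right subnets.

```hcl
variable "vpc_id" {
  type = string
}

data "aws_subnets" "existing" {
  filter {
    name   = "vpc-id"
    values = [var.vpc_id]
  }

  # Hypothetical tag used to pick only the subnets meant for this workload
  tags = {
    Tier = "private"
  }
}

output "subnet_ids" {
  value = data.aws_subnets.existing.ids
}
```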

In the end I got around the problem by adding an additional CIDR and then passing that into the dynamic subnet module. But I suspect I’ll also need to do something to let both CIDRs talk to each other? Networking is not my bag.

2021-10-09

2021-10-10

Guys, is there any terraform wrapper that can pre-download binaries for providers that are requiring binaries installed on $PATH ? Thanks

Maybe some package manager like https://gofi.sh/ and food https://github.com/fishworks/fish-food/blob/main/Food/helmfile.lua ( just an example )

This one is also interesting https://github.com/mumoshu/shoal#history-and-context

hrmmm ya we had this problem with terraform-provider-helmfile

which depends on a lot of binaries

2021-10-12

Does anyone know is this module dead or are the approvers just afk? https://github.com/cloudposse/terraform-aws-multi-az-subnets

Terraform module for multi-AZ public and private subnets provisioning - GitHub - cloudposse/terraform-aws-multi-az-subnets: Terraform module for multi-AZ public and private subnets provisioning

last update was in August so not dead yet

which PRs are you trying to get reviewed ? 55 and 56 ?

ipv6 one really, it needs eyes on it.

It works. I’m just not sure if there was a philosophy there with how private vs public work. Also considering how ipv6 works in general.

I applied it against public only, but technically if we pass a igw variable it could be applied to private as well (though that would make it not private anymore).

That’s #55

left some comments on #55

Thanks! I’ll review

Hi folks, I’ve got this error after implementing a bind mount from a Docker container to an EFS storage directory.

Error: ClientException: Fargate compatible task definitions do not support devices

I added this line in my ECS task definition

linux_parameters = {
  capabilities = {
    add  = ["SYS_ADMIN"],
    drop = null
  }
  devices = [
    {
      containerPath = "/dev/fuse",
      hostPath      = "/dev/fuse",
      permissions   = null
    }
  ],
  initProcessEnabled = null
  maxSwap            = null
  sharedMemorySize   = null
  swappiness         = null
  tmpfs              = []
}

Here’s the module I used https://github.com/cloudposse/terraform-aws-ecs-container-definition

Terraform module to generate well-formed JSON documents (container definitions) that are passed to the aws_ecs_task_definition Terraform resource - GitHub - cloudposse/terraform-aws-ecs-container-…

that error is coming from the aws api itself

Tasks that use the Fargate launch type don’t support all of the Amazon ECS task definition parameters that are available. Some parameters aren’t supported at all, and others behave differently for Fargate tasks.

cc: @Gerald

Does anyone know a workaround to support the devices argument?
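No workaround is given in the thread. If the device was only needed to mount EFS from inside the container, one option that may fit is letting ECS mount the file system through the task definition's EFS volume support instead. The sketch below declares the task definition directly rather than through the container-definition module; the family, image, volume name and mount path are placeholders.

```hcl
variable "efs_file_system_id" {
  type = string
}

resource "aws_ecs_task_definition" "app" {
  family                   = "example-app" # hypothetical
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc"
  cpu                      = 256
  memory                   = 512

  # ECS mounts the EFS file system; no /dev/fuse device or SYS_ADMIN needed
  volume {
    name = "shared-data"

    efs_volume_configuration {
      file_system_id = var.efs_file_system_id
    }
  }

  container_definitions = jsonencode([
    {
      name      = "app"
      image     = "nginx:latest" # placeholder image
      essential = true
      mountPoints = [
        {
          sourceVolume  = "shared-data"
          containerPath = "/mnt/data"
          readOnly      = false
        }
      ]
    }
  ])
}
```

If the workload genuinely needs a FUSE device, the EC2 launch type would be the other direction to look at, since the error comes from the Fargate restriction itself.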

2021-10-13

has anyone experienced this before while using terraform to manage github repo creation.

Error: GET <https://api.github.com/xxx>: 403 API rate limit of 5000 still exceeded until 2021-10-13 12:05:50 +0000 UTC, not making remote request. [rate reset in 6m36s]

you are going to have to be a bit more specific

what are you trying to do?

are you using TF to hit the github api?

i am trying to use terraform to manage my github repository creation and i am using codebuild and codepipeline as my cicd tools

so its throwing this in codebuild

when i run this locally it does not throw this

but recently I decided to set terraform plan --refresh=false

to reduce the time it spends refreshing GitHub and to reduce the API calls. This worked, by the way, but it is also bad in case someone makes a change on the AWS console and I am not aware of it

yes, dealing with their rate limits is hard

effects of rate limits could also be exacerbated during development and not be an issue during normal usage

so I was able to bypass this by running the command below. This reduces the number of API calls made to GitHub.

terraform plan --refresh=false

hi all, i am planning to implement a WAF v2 rule that “lets everything else pass” - am i right thinking that if i dont have any statement - it will allow everything?

rule {
  name     = "let-everything-else-pass"
  priority = 2

  action {
    allow {}
  }

  # left without statement

  visibility_config {
    cloudwatch_metrics_enabled = true
    metric_name                = "rule-2"
    sampled_requests_enabled   = true
  }
}

@Almondovar unfortunately, the statement part is required as per Terraform documentation:

statement - (Required) The AWS WAF processing statement for the rule, for example byte_match_statement or geo_match_statement. See Statement below for details.

What’s good though, is something to consider:

• the WAF will allow the request if it is not caught by any blocking rules

This means that you do not need an “allow-all” rule with an empty statement if you design your blocking rules so that they catch all wrong requests; everything else will be auto-allowed, provided the web ACL’s default action is allow.
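In Terraform terms, "everything else passes" is expressed through the web ACL's default_action rather than through a catch-all rule. A minimal sketch, with a single blocking rule for illustration; the names and the IP set variable are hypothetical.

```hcl
variable "blocked_ip_set_arn" {
  type = string # ARN of an existing aws_wafv2_ip_set (hypothetical input)
}

resource "aws_wafv2_web_acl" "example" {
  name  = "example" # hypothetical
  scope = "REGIONAL"

  # Requests that match no rule fall through to this action
  default_action {
    allow {}
  }

  rule {
    name     = "block-listed-ips"
    priority = 0

    action {
      block {}
    }

    statement {
      ip_set_reference_statement {
        arn = var.blocked_ip_set_arn
      }
    }

    visibility_config {
      cloudwatch_metrics_enabled = true
      metric_name                = "block-listed-ips"
      sampled_requests_enabled   = true
    }
  }

  visibility_config {
    cloudwatch_metrics_enabled = true
    metric_name                = "example-web-acl"
    sampled_requests_enabled   = true
  }
}
```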

Good morning @Constantine Kurianoff, thank you once more! Indeed it works differently from the classic Cisco firewall rules I was used to. Have a nice day

v1.0.9 1.0.9 (October 13, 2021) BUG FIXES: core: Fix panic when planning new resources with nested object attributes (#29701) core: Do not refresh deposed instances when the provider is not configured during destroy (#29720) …

The codepath for AllAttributesNull was not correct for any nested object types with collections, and should create single null values for the correct NestingMode rather than a single object with nu…

The NodePlanDeposedResourceInstanceObject is used in both a regular plan, and in a destroy plan, because the only action needed for a deposed instance is to destroy it so the functionality is mostl…

Hello, I have an issue automating Terraform in a Jenkinsfile so that it applies using the terraform.tfstate from the S3 backend. How can I write the correct command?

pipeline {
  // Jenkins AWS Access & Secret key
  environment {
    AWS_ACCESS_KEY_ID = credentials('AWS_ACCESS_KEY_ID')
    AWS_SECRET_ACCESS_KEY = credentials('AWS_SECRET_ACCESS_KEY')
  }
  options {
    // Only keep the 5 most recent builds
    buildDiscarder(logRotator(numToKeepStr:'5'))
  }
  agent any
  tools {
    terraform 'terraform'
  }

stages {

// Check out from GIT, Snippet Generator from pipeline Syntax --> Checkout: Check out from version control

stage ("Check from GIT") {

steps {

git branch: 'master', credentialsId: 'Jenkins_terraform_ssh_repo', url: '[email protected]:mickleissa/kobai.git'

}

}

// Terraform Init Stage

stage ("Terraform init") {

steps {

// sh 'terraform -chdir="./v.14/test_env" init -upgrade'

// terraform init -backend-config="bucket=kobai-s3-backend-terraform-state" -backend-config="key=stage-test-env/terraform.tfstate"

sh 'terraform -chdir="./v.14/test_env" init -migrate-state'

}

}

// Terraform fmt Stage

stage ("Terraform fmt") {

steps {

sh 'terraform fmt'

}

}

// Terraform Validate Stage

stage ("Terraform validate") {

steps {

sh 'terraform validate'

}

}

// Terraform Plan Stage

stage ("Terraform plan") {

steps {

sh 'terraform -chdir="./v.14/test_env" plan -var-file="stage.tfvars"'

// sh 'terraform -chdir="./v.14/test_env" plan'

}

}

// Terraform Apply Stage

stage ("Terraform apply") {

steps {

sh 'terraform -chdir="./v.14/test_env" apply -var-file="stage.tfvars" --auto-approve'

// sh 'terraform -chdir="./v.14/test_env" apply --auto-approve'

}

}

// Approval stage

stage ("DEV approval Destroy") {

steps {

echo "Taking approval from DEV Manager for QA Deployment"

timeout(time: 7, unit: 'DAYS') {

input message: 'Do you want to Destroy the Infra', submitter: 'admin'

}

}

}

// Destroy stage

stage ("Terraform Destroy") {

steps {

sh 'terraform -chdir="./v.14/test_env" destroy -var-file="stage.tfvars" --auto-approve'

// sh 'terraform -chdir="./v.14/test_env" destroy --auto-approve'

}

}

}

post {

always {

echo 'This will always run'

}

success {

echo 'This will run only if successful'

}

failure {

echo 'This will run only if failed'

}

unstable {

echo 'This will run only if the run was marked as unstable'

}

changed {

echo 'This will run only if the state of the Pipeline has changed'

echo 'For example, if the Pipeline was previously failing but is now successful'

}

}

}

The most helpful output now would be the raw error message

This code works fine; I have the issue when I change it to the S3 backend

I have the issue when I change it to s3 backend

so thats not working fine - that’s what should have an error message = )
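Without the raw error this is only a guess, but a minimal sketch of the S3 backend block that the pipeline's init stage would need might look like the following. The bucket and key are taken from the commented-out `-backend-config` line in the Jenkinsfile above; the region is an assumption.

```hcl
terraform {
  backend "s3" {
    bucket = "kobai-s3-backend-terraform-state"
    key    = "stage-test-env/terraform.tfstate"
    region = "us-east-1" # assumption: use the bucket's actual region
  }
}
```

With the backend declared in the configuration, a plain `terraform -chdir="./v.14/test_env" init` should be enough on a fresh Jenkins workspace; `-migrate-state` is only relevant when existing state has to be moved from one backend to another.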

2021-10-14

Hello, maybe someone could help me with creating a simple redis cluster on aws, with automatic_failover enabled, 1 master and 1 read replica. I’m trying to use cloudposse/terraform-aws-elasticache-redis but it seems there is no way to create a cluster with read replica, and that makes no sense to me. Here is my code so far:

module "redis" {

source = "cloudposse/elasticache-redis/aws"

version = "0.30.0"

stage = var.stage

name = "redis"

port = "6379"

vpc_id = data.terraform_remote_state.conf.outputs.vpc_id

subnets = data.terraform_remote_state.conf.outputs.private_subnet_ids

# need az's list due to bug:

# https://github.com/cloudposse/terraform-aws-elasticache-redis/issues/63

availability_zones = data.aws_availability_zones.azs.names

#In prod use 2 nodes

cluster_size = var.cicd_env != "prod" ? 1 : 2

# only really helpful in prod because we have 2 nodes

automatic_failover_enabled = true

instance_type = "cache.t3.small"

apply_immediately = true

engine_version = "6.x"

family = "redis6.x"

at_rest_encryption_enabled = true

transit_encryption_enabled = false

kms_key_id = aws_kms_key.redis.arn

#used only on version 0.40.0 and above:

#security_groups = ["module.sg-redis.security_group_id"]

#for version 0.30.0 use:

use_existing_security_groups = true

existing_security_groups = [module.sg-redis.security_group_id]

#used only on version 0.40.0 and above:

#multi_az_enabled = true

maintenance_window = "Tue:03:00-Tue:06:00"

tags = {

Name = var.cicd_domain

contactinfo = var.contactinfo

service = var.service

stage = var.stage

Environment = var.cicd_env

}

#used only on version 0.40.0 and above:

# Snapshot name upon Redis deletion

#final_snapshot_identifier = "${var.cicd_env}-final-snapshot"

# Daily snapshots - Keep last 5 for prod, 0 for other

snapshot_window = "06:30-07:30"

snapshot_retention_limit = var.cicd_env != "prod" ? 0 : 5

}

But I’m getting this error: Error: error updating ElastiCache Replication Group (alpha-harbor): InvalidReplicationGroupState: Replication group must have at least one read replica to enable autofailover.

status code: 400, request id: 22997e65-2bcb-41a1-861e-7adb7089e9e0

Any help?

the error message is pretty self-explanatory

what are you having trouble with?

The question is: How do I create the read replica? I’ve already changed the cluster size to 2, and enabled automatic_failover…

And i do not want to enable cluster_mode… basically how do I create read replicas in the other AZ’s so that the failover works?

are you sure you set cluster size to 2? It’s set to 1 in dev

you’re right… that is my “final” code but on my tests I had 2 for both dev and prod

so you’re saying that it should work, right? I can try to test again to see if it works… maybe I did something wrong. Will also try using 0.40 just to see if there is any difference.
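One way to avoid this class of error is to derive the failover flag from the node count, so it can never be enabled with a single node. This is a sketch only: it assumes that with cluster mode disabled `cluster_size` counts the primary plus its read replicas, and `redis_cluster_size` is a hypothetical local.

```hcl
locals {
  # 1 primary + 1 read replica in prod, a single node elsewhere
  redis_cluster_size = var.cicd_env == "prod" ? 2 : 1
}

module "redis" {
  source  = "cloudposse/elasticache-redis/aws"
  version = "0.30.0"

  # ... all other inputs unchanged from the message above ...

  cluster_size = local.redis_cluster_size

  # Automatic failover needs at least one read replica, so only enable it
  # when there is more than one node.
  automatic_failover_enabled = local.redis_cluster_size > 1
}
```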

2021-10-15

VS Code’s terraform language-server was updated with experimental support for pre-fill of required module/resource parameters! https://github.com/hashicorp/vscode-terraform/pull/799 To enable add this to your extension settings

"terraform-ls.experimentalFeatures":{

"prefillRequiredFields": true

}

also @Erik Osterman (Cloud Posse) this seems particularly relevant to your org as just yesterday I was looking at the extensive changes in the eks-node-group module - https://discuss.hashicorp.com/t/request-for-feedback-config-driven-refactoring/30730

Hi all, My name is Korinne, and I’m a Product Manager for Terraform  We’re currently working on a project that will allow users to more easily refactor their Terraform modules and configurations, set to be generally available in Terraform v1.1. From a high-level, the goal is to use moved statements to do things like: Renaming a resource Enabling count or for_each for a resource Renaming a module call Enabling count or for_each for a module call Splitting one module into multiple The a…

this will be another major differentiator from cloudformation
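For anyone curious what the refactoring looks like in practice, here is a small sketch of a `moved` statement for a resource rename, based on the syntax described in the linked request for feedback (Terraform v1.1). The resource type and both names are placeholders.

```hcl
# The resource used to be declared as null_resource.old_name.
resource "null_resource" "new_name" {}

# Tells Terraform to treat the existing state entry as the renamed resource
# instead of planning a destroy and create.
moved {
  from = null_resource.old_name
  to   = null_resource.new_name
}
```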

am i missing something or is there no way to attach a loadbalancer to a launch template?

i am wanting to use EKS node groups in my new role as they now support taints/labels and bottlerocket but i need to attach an ELB to our ingress launch template

You attach launch templates to autoscaling groups, not loadbalancers

i am wondering if i need to do something clever with https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/eks_node_group#resources

Hi All, I stumbled upon the SweetOps TF code library…

I have a few questions…

(1) What is BridgeCrew’s relationship to some of these modules? I see their logo stamped on a few of the modules?

(2) terraform-null-label is used a lot in other SO TF modules: https://github.com/cloudposse/terraform-null-label

I’m trying to understand why this module is so important to the other SO TF modules

Terraform Module to define a consistent naming convention by (namespace, stage, name, [attributes]) - GitHub - cloudposse/terraform-null-label: Terraform Module to define a consistent naming conven…

for #2, check out https://www.youtube.com/watch?v=V2b5F6jt6tQ

for #1

Security scanning is graciously provided by Bridgecrew. Bridgecrew is the leading fully hosted, cloud-native solution providing continuous Terraform security and compliance.

#3 what is SO? (Stackoverflow? )

in any case, all Cloud Posse modules are used by many companies on TFC and Spacelift (https://spacelift.io/)

Spacelift is the CI/CD for infrastructure-as-code and policy as code. It enables collaboration, automates manual work and compliance, and lets teams customize and automate their workflows.

the modules are combined into components, which are deployed by terraform/TFC/Spacelift etc.

Opinionated, self-contained Terraform root modules that each solve one, specific problem - GitHub - cloudposse/terraform-aws-components: Opinionated, self-contained Terraform root modules that each…

Opinionated, self-contained Terraform root modules that each solve one, specific problem - terraform-aws-components/modules at master · cloudposse/terraform-aws-components

components are combined from many modules (and/or plain terraform resources)

Thanks @Andriy Knysh (Cloud Posse)

SO = SweetOps

Regarding using Spacelift / TFC…

Some context to begin with… Each business unit has an environment in AWS. There are 4 environments (sandbox, dev, qa and prod). Today, we are not using workspaces but plan to use them soon… In Scalr (TFC alternative) each “environment” is tied to a single AWS account. We are starting to adopt OPA…

I’ve been considering pushing all Security Groups into a single workspace. The SGS would be stored in git within a YAML multi document file, to list SGs per application or service that needs one, along with the SG rules. This file would list all SGs for the entire organization.

Why do it this way?

(a) to create transparency in git for security and devops teams

(b) allow appdevs to create a PR but not allow them to merge. The devops and security teams would manage the PRs and merging of release branches.

(c) upon approval of PR, automation will run and convert the YAML to a single TF file, which will reference the SG module. Gitops will then push the changes up via TF, into the single BU specific workspace

(d) prevent appdev teams from adding custom SGs in custom TF module code (OPA will automatically block any code that references an SG outside of a data lookup). In this single workspace model, they’ll have to subscribe to their custom SG via the workspace within their organization (TFC) / environment (Scalr).

(e) We are starting to deal with network micro segmentation. We are going to tackle WAF and SGs to start with. We need a layer of separation and control prior to TF being deployed.

I know that is a mouthful. But, I’m bringing this up because I noticed that SO also has a module for SGs. I’m wondering if this concept and the reasoning behind it is a common pattern now in devops circles?

Thanks in advance for even reading this. A double thanks for adding any tips, advice or corrections

@Andriy Knysh (Cloud Posse) ^^ Do you have any thoughts on this?

@Elvis McNeely I like that idea to have SGs in YAML files

in fact, we’ve been moving in that direction on many of our modules

we call it the catalog pattern

Terraform module to provision Service Control Policies (SCP) for AWS Organizations, Organizational Units, and AWS accounts - terraform-aws-service-control-policies/catalog at master · cloudposse/te…

Terraform module to configure and provision Datadog monitors, custom RBAC roles with permissions, and other Datadog resources from a YAML configuration, complete with automated tests. - terraform-d…

we define all the resources as YAML files, and then use them in other modules as remote sources

using this module to read the YAML files and deep-merge them (if needed deep-merging) https://github.com/cloudposse/terraform-datadog-platform/blob/master/examples/complete/main.tf#L1

or just simple code like this https://github.com/cloudposse/terraform-datadog-platform/blob/master/examples/synthetics/main.tf#L2 if no deep-merging is needed

“we define all the resources as YAML files, and then use them in other modules as remote sources”

What do you mean by “other” modules? I assume some of those configs in the yaml files are defaults? Remote sources are referencing them?

other modules load the YAML as remote sources and convert them to terraform and apply
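As a rough illustration of the catalog idea applied to security groups, here is a sketch using only built-in functions. The `catalog/security-groups` directory, the YAML keys (`description`, `vpc_id`, `ingress`) and the resource name are assumptions for this example, not Cloud Posse's actual layout. As far as I know `yamldecode` handles one YAML document per file, so the sketch uses one file per security group rather than a multi-document file.

```hcl
locals {
  # Assumption: one YAML file per security group, e.g. catalog/security-groups/api.yaml
  sg_dir   = "${path.module}/catalog/security-groups"
  sg_files = fileset(local.sg_dir, "*.yaml")

  # Map of "<file name without extension>" => decoded YAML document
  security_groups = {
    for f in local.sg_files :
    trimsuffix(f, ".yaml") => yamldecode(file("${local.sg_dir}/${f}"))
  }
}

resource "aws_security_group" "catalog" {
  for_each = local.security_groups

  name        = each.key
  description = each.value.description
  vpc_id      = each.value.vpc_id

  # One ingress block per rule listed in the YAML (none if the key is absent)
  dynamic "ingress" {
    for_each = try(each.value.ingress, [])
    content {
      from_port   = ingress.value.from_port
      to_port     = ingress.value.to_port
      protocol    = ingress.value.protocol
      cidr_blocks = ingress.value.cidr_blocks
    }
  }
}
```

Since the YAML is decoded at plan time, a PR that only touches the catalog files shows up as an ordinary Terraform diff, which fits the review flow described above.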

Thanks for sharing @Andriy Knysh (Cloud Posse). I know this is a very basic question, but I want to understand a bit more. I see in your TF modules you are using:

source = "../../modules/synthetics"

before TF runs, are you pulling in all modules to the local directory? I would like to simplify this process on my end, a bit more. I wish the provider block would allow us to list a manifest of “modules”, within a TF template, something like this:

From:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 2.7.0"

configuration_aliases = [ aws.alternate ]

}

}

}

To:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 2.7.0"

configuration_aliases = [ aws.alternate ]

}

}

required_modules {

synthetics = {

source = "https://github.com/cloudposse/terraform-datadog-platform.git"

version = ">= 2.7.0"

}

}

}

Ok

What do you use to convert the yaml to TF? I’m wondering if you use an OSS package or have you created something internally?

the examples show just the local path loading

but the YAML files can be loaded as remote files

using this module to read the YAML files and deep-merge them (if needed deep-merging) https://github.com/cloudposse/terraform-datadog-platform/blob/master/examples/complete/main.tf#L1

What do you use to convert the yaml to TF? I’m wondering if you use an OSS package or have you created something internally?

Ok, I’ve done something similar in the past. But, I ran into issues. Like, DR. When setting up DR for two different regions, TF loops don’t operate in provider blocks (as of late) which forced me to write provider + module blocks from yaml. Have you hit that kind of issue? If so, how did you solve it?

Terraform module that loads an opinionated "stack" configuration from local or remote YAML sources. It supports deep-merged variables, settings, ENV variables, backend config, and remote …

yes, to deploy into many regions using one module with one terraform apply, the provider blocks need to be created manually or generated from templates - this is an issue with TF providers

That’s good to know. I was frustrated when I discovered that issue, but I will just make a plan to handle provider blocks in the yaml conversion.
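For reference, the hand-written (or template-generated) provider blocks for a two-region DR setup typically look something like this sketch. The regions, alias names and the `./modules/app` path are hypothetical.

```hcl
# Provider blocks cannot be created with count/for_each, so each region
# gets its own statically declared alias.
provider "aws" {
  alias  = "primary"
  region = "us-east-1"
}

provider "aws" {
  alias  = "dr"
  region = "us-west-2"
}

# The same module is called once per region with a different provider.
module "app_primary" {
  source = "./modules/app" # hypothetical local module

  providers = {
    aws = aws.primary
  }
}

module "app_dr" {
  source = "./modules/app"

  providers = {
    aws = aws.dr
  }
}
```

A YAML-to-Terraform generator would emit one `provider` block and one `module` block per region listed in the YAML.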

Can you explain why / how you guys came to the conclusion to use this.context? It’s an interesting concept, I’m wondering what brought you to the point of doing this for all of your modules?

to not repeat the same common inputs in all modules (and we have more than 130 of them)

the inputs were defined somewhat differently in each module

now they are all the same

like region, name, business_unit etc? Var names that all modules need?

reuse the common code to make fewer mistakes

we don’t have all of those as common inputs (no business_unit, we have tenant for that), we have namespace, stage, tenant, environment, name, attributes

see the null-label module

but you can use the same pattern and use your own inputs as common, e.g. business_unit

Right, but those inputs are common across all of your modules? In our case, we require a set of 12 vars that also translate to tags

common across all our modules

I really appreciate your time answering my questions

they are also used by null-label to generate unique and consistent resource names

so the label and context solve two issues:

Have you used Scalr? I know you guys did a great videocast comparing TF statefile tools. I’m wondering if anyone (or you guys) have tested using your modules in Scalr? They have a slightly different model compared to spacelift

1. Unique and consistent resource names

2. Consistent common inputs across all modules and components

Have you used Scalr?

1. is the label module? 2. is for context?

no
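A small sketch of the label and context pattern discussed in this thread, using the `cloudposse/label/null` module. The input values are placeholders, and the version pin is left out on purpose; pin it to a release you have tested.

```hcl
# Base label: defines the common inputs once.
module "label" {
  source = "cloudposse/label/null"

  namespace  = "eg"   # placeholder values
  stage      = "prod"
  name       = "app"
  attributes = ["public"]
}

# Child label: inherits everything through `context` and only adds
# what differs, so the common inputs are not repeated.
module "logs_label" {
  source = "cloudposse/label/null"

  context    = module.label.context
  attributes = ["logs"]
}

output "label_ids" {
  # Generated names that can be used for resources
  value = [module.label.id, module.logs_label.id]
}
```

The same `context` object can be passed to any module that follows this convention, which is how the common inputs stay identical across modules.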

I landed on the SweetOps docs https://docs.cloudposse.com/fundamentals/concepts/ Which cover a lot of what we discussed today. Thanks again for your time

@Andriy Knysh (Cloud Posse) I’ve spent some time reviewing everything you shared. The concept of components forces all of the IaC to be in a single state file, meaning you aren’t using TF workspaces for major pieces of infra. Is that a deliberate choice? We are attempting to move away from having such large stacks in a single statefile as we found it to be difficult to manage within operations.

each component’s state is in a separate state file

Oh, I think I missed that. So a single TF execution loops over components and does a TF plan / apply?

if we use S3 backend, we use the same bucket, but the state files for each component for each environment/stack (e.g. prod in us-west-2, dev in us-west-2, etc.) are in separate state files in separate bucket folders (TF workspace prefixes)
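A sketch of how that layout can be expressed with the S3 backend, assuming one `workspace_key_prefix` per component and one Terraform workspace per environment/stack. The bucket name, region and the `vpc` prefix are hypothetical.

```hcl
# Backend block inside the "vpc" component
terraform {
  backend "s3" {
    bucket = "example-terraform-state" # hypothetical shared bucket
    region = "us-west-2"
    key    = "terraform.tfstate"

    # State for a non-default workspace is stored under
    # <workspace_key_prefix>/<workspace name>/<key>
    workspace_key_prefix = "vpc"
  }
}
```

With workspaces named, say, `uw2-prod` and `uw2-dev`, the same bucket would hold `vpc/uw2-prod/terraform.tfstate` and `vpc/uw2-dev/terraform.tfstate`, and another component would use its own prefix.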

So a single TF execution loops over components and does a TF plan / apply?

we deploy each component separately, either manually or via automation like Spacelift

Hmmm, In the example found here: https://docs.cloudposse.com/fundamentals/concepts/

A single deployment is deploying a single component found in that example yaml file?

deploying a single component, yes

I guess spacelift has a way of specifying which component to deploy…

a component is a high-level concept combining many modules and resources

Right

for example, we have eks, vpc, aurora-postgres components, each consisting of many of our modules (and plain TF resources)

I guess spacelift has a way of specifying which component to deploy…

for each component in each stack (by stack I mean environment/stage/region), we generate Spacelift stacks

so we have Spacelift stacks like uw2-prod-vpc and uw2-dev-vpc and ue2-staging-eks etc.

Ah, so there is a process that does that outside of spacelift?

which deploy the corresponding component into the corresponding environment/stage/region

Ah, so there is a process that does that outside of spacelift?

yes

https://github.com/cloudposse/terraform-spacelift-cloud-infrastructure-automation - module to deploy Spacelift stacks (via terraform)

Terraform module to provision Spacelift resources for cloud infrastructure automation - GitHub - cloudposse/terraform-spacelift-cloud-infrastructure-automation: Terraform module to provision Spacel…

https://github.com/cloudposse/terraform-provider-utils - TF provider that converts our infra stacks into Spacelift stacks

The Cloud Posse Terraform Provider for various utilities (e.g. deep merging, stack configuration management) - GitHub - cloudposse/terraform-provider-utils: The Cloud Posse Terraform Provider for v…

The Cloud Posse Terraform Provider for various utilities (e.g. deep merging, stack configuration management) - terraform-provider-utils/examples/data-sources/utils_spacelift_stack_config at main · …

so

1. We define all our infra stack configs (vars, etc.) in YAML config files for each env/stage/region, separating the logic (Terraform components) from the configuration (vars for each env/stage in YAML config files), so the components themselves don’t know and don’t care where they will get deployed

2. Then the provider iterates over the YAML configs and creates Spacelift stack configs from them

3. Then the Spacelift module deploys the Spacelift stacks into Spacelift

4. Each Spacelift stack is responsible for deploying a particular component into a particular env/stage/region, e.g. the uw2-prod-eks Spacelift stack will deploy the EKS component into the us-west-2 region in the prod environment

In step 2, so TF run is pushing a “stack” over to spacelift per iteration. That stack may just sit there until someone is ready to use it

Step 3, comes at a later date, as need by a consumer?

we deploy Spacelift stacks for each combination of component/env/stage/region first

Step 4, so spacelift is really dealing with pushing different components into different workspaces

then those Spacelift stacks deploy the components into the corresponding env

Ah

Ok, I see

So spacelift stacks has a configuration of what component goes where

yea, we provision the Spacelift stacks with terraform, then those Spacelift stacks provision the infra stacks with terraform

same applies to TFE

So spacelift stacks has a configuration of what component goes where

I wasn’t aware that TFE supported this concept.

they have, after the module and provider parse our YAML config files and generate Spacelift stacks from them

I wasn’t aware that TFE supported this concept.

I know you can create a single workspace and push a TF apply to that workspace, but I didn’t think TFE could push multiple TF components to different workspaces

TFE resources can be deployed with terraform as well

But a single TF run, in TFE, can’t push different portions of the infra to different workspaces, can it?

(I was working with TFE a year ago and don’t remember all the concepts now, and sure they have updated a lot). We are working with Spacelift now, and generate all the stacks/configs by our provider and module

Ok. We are moving into Scalr, I would like to use some of these SweetOps concepts into this next gen TF process we are building

yea, just remember that we do these main steps in general:

- Create terraform components (they don’t know in what env/stage/region they will be deployed)

- Create YAML config files for each component with vars for each env/stage/region

- Our provider and module parse the YAML configs and generate Spacelift stacks for them - Spacelift stacks are separate for each combination of component/region/env/stage etc.

- Provision the generated Spacelift stacks with terraform

- Each Spacelift stack now can plan/apply the corresponding infra stack

Thank you, that’s really helpful.

(3) Has anyone used the SO TF modules in a statefile management tool like TFC or Scalr? I’m wondering how the use of so many SO modules operate in these tools? Any links to resources or thoughts would be appreciated.

2021-10-17

Hi, I would like to create a Mongodb container with persistent storage (bind volumes). But how to do it with TF? Also, how can I create users for the container/database? Do I have to SSH into the container? Is there any other way?

Thanks

2021-10-18

Hi folks, using the aws-dynamic-subnets module we have reached a limit of 54 subnets even though our ranges are either /24 or /27. Not understanding exactly how the module is increasing the number can you maybe hint us to a way to increase this number? Running ECS Fargate across 2 AZs. It seems related to the number of CIDRs

Edit: For anyone interested, it turns out the /27 range was the limiting factor; increasing it to a /24 should now cover our usage. On AWS, each subnet loses five addresses (the first four and the last one are reserved), not just the first and last address of the CIDR.

Hi, I’m using the terraform-aws-elasticsearch module (https://registry.terraform.io/modules/cloudposse/elasticsearch/aws/latest), it works great, so first of all, thanks! Just a question: is it possible to create an ES environment with only username and password authentication (without an IAM ARN)? I tried advanced_security_options_master_user_password and advanced_security_options_master_user_name, but I still have to access ES with IAM user authentication.

Thanks for all!

Hello, need a quick help. I’m trying to configure Amazon MQ RabbitMQ using https://github.com/cloudposse/terraform-aws-mq-broker/releases/tag/0.15.0 latest version

Error: 1 error occurred:

* logs.audit: Can not be configured when engine is RabbitMQ

on .terraform/modules/rabbitmq_broker_processing/main.tf line 89, in resource "aws_mq_broker" "default":

89: resource "aws_mq_broker" "default" {

Releasing state lock. This may take a few moments...

I found that it was already fixed in AWS provider 3.38.0 (https://github.com/hashicorp/terraform-provider-aws/issues/18067). But how do I pin the provider version with TF 0.14, which I currently use?
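A minimal sketch of a provider version constraint that works on Terraform 0.14. The `>= 3.38.0` bound comes from the fix mentioned above; whether the module itself accepts that provider version is something to check against its own constraints.

```hcl
terraform {
  required_version = ">= 0.14"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 3.38.0" # first release containing the RabbitMQ logs fix
    }
  }
}
```

If a `.terraform.lock.hcl` file already records an older provider version, running `terraform init -upgrade` lets Terraform select a newer one within the constraint.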

We are revising and standardizing our handling of security groups and security group rules across all our Terraform modules. This is an early attempt with significant breaking changes. We will make…

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or other comme…

In my terraform I am calling a local module multiple times. Since it’s the same module it has the same output {}. It looks like terraform doesn’t support splatting the modules like module.[test*].my_output . Does anyone know a better way to solve this?

use for_each on the module to create multiple instances of it? then module.test will contain the outputs from all of them

woahh that’s a good plan. i’ll test that out.
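A sketch of the `for_each` suggestion, assuming Terraform 0.13 or later, a hypothetical local module at `./modules/test` that takes a `name` input and exposes `my_output`, and a single provider shared by all instances.

```hcl
module "test" {
  source   = "./modules/test" # hypothetical local module
  for_each = toset(["test1", "test2", "test3"])

  name = each.key # hypothetical module input
}

# module.test is now a map keyed by the for_each keys,
# so all outputs can be collected with one expression.
output "my_outputs" {
  value = { for key, instance in module.test : key => instance.my_output }
}
```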

oh actually i forgot.. another wrench in things… they are using different AWS providers

oh, then i’d combine them using a local or whatever other expression

nice! i’ll try that

e.g.

output "my_outputs" {

value = {

test1 = module.test1.my_output

test2 = module.test2.my_output

test3 = module.test3.my_output

}

}

I was hoping to do something cheeky like

output "test" {

value = module.["test1*"].my_output

}

unfortunately, no, you can’t use an expression in the static part of a label, e.g. module.<label>

sad

closest you can get is maintaining a list of the modules…

locals {

modules = {

test1 = module.test1

test2 = module.test2

test3 = module.test3

}

}

output "my_outputs" {

value = { for label, module in local.modules : label => module.my_output }

}

In case anyone in the future stumbles upon this in an archive… :sweat_smile: I achieved a sub-optimal solution to address my root issue.

I wrote a quick bash script in my Makefile to do some regex magic and make sure all the modules are present in my outputs.tf file.

check_outputs:

@for i in `grep "^module " iam-delegate-roles.tf | cut -d '"' -f 2 | sed -e 's/^/module./g' -e 's/$$/.iam_role_arn,/g'`; do \

grep -q $$i outputs.tf || { echo -e "****FAIL**** $$i is not present in outputs.tf\n****Add this output before committing.****"; exit 1; }; \

done

Then loaded that script into a pre-commit hook:

- repo: local

  hooks:

    - id: check-outputs

      name: All Role ARNs in outputs.tf

      language: system

      entry: make check_outputs

      files: outputs.tf

      always_run: true

2021-10-19

Hi, I’m using the terraform-aws-elasticsearch module (https://registry.terraform.io/modules/cloudposse/elasticsearch/aws/latest), it works great, so first of all, thanks! Just a question: is it possible to create an ES environment with only username and password authentication (without an IAM ARN)? I tried advanced_security_options_master_user_password and advanced_security_options_master_user_name, but I still have to access ES with IAM user authentication.

Amazon OpenSearch Service offers several ways to control access to your domains. This topic covers the various policy types, how they interact with each other, and how to create your own custom policies.

@idan levi If you’d like to authenticate using only the Internal User Database of Elastic(Open)Search, then you could use the following block inside aws_elasticsearch_domain resource:

advanced_security_options {

enabled = true

internal_user_database_enabled = true

master_user_options {

master_user_name = <MASTER_USERNAME>

master_user_password = <MASTER_PASSWORD>

}

}

This will create a main (“master”) user for you inside ElasticSearch domain and you’ll be able to authenticate with just username and password when accessing Kibana (or when accessing ElasticSearch APIs)

Hi all, can someone help me with adding a redrive_policy for multiple SQS queues created from a single resource block?

2021-10-20

Hi! I’m using the sns-topic module for standard queues currently, but need to make use of a FIFO queue now. Since it’s a fifo queue, AWS requires that the name end in .fifo. For some reason the module is stripping my period out. Is there some other variable I’m missing setting for this besides setting fifo_topic to true?

Digging through the module, I see there’s a replace(module.this.id, ".", "-") for display_name, but I’m not seeing why it’s happening for the Topic name

Ex: Setting it to -fifo leaves it unchanged:

results-test-fifo

But ending in .fifo results in:

results-testfifo

Terraform Module to Provide an Amazon Simple Notification Service (SNS) - GitHub - cloudposse/terraform-aws-sns-topic: Terraform Module to Provide an Amazon Simple Notification Service (SNS)

Terraform Module to Provide an Amazon Simple Notification Service (SNS) - terraform-aws-sns-topic/main.tf at c61835f686855245e7f4af264a16d2874a67e5d5 · cloudposse/terraform-aws-sns-topic

This seems to work, but feels hacky and not an intended method. It at least lets me move forward though!

name = "results-test"

delimiter = "."

attributes = ["fifo"]

v1.1.0-alpha20211020 1.1.0 (Unreleased) UPGRADE NOTES:

Terraform on macOS now requires macOS 10.13 High Sierra or later; Older macOS versions are no longer supported.

The terraform graph command no longer supports -type=validate and -type=eval options. The validate graph is always the same as the plan graph anyway, and the “eval” graph was just an implementation detail of the terraform console command. The default behavior of creating a plan graph should be a reasonable replacement for both of the removed…

Anyone run into TF hanging when refreshing state? Really weird, specific to a single module, hangs when trying to get the state of an IAM role attached to a Google service account. Hangs forever. Other modules work fine (which also get the state of the same service account).

Deleted everything by hand including state and the issue is resolved

In my case it’s usually an auth error to a provider or something. I used TF_LOG=DEBUG to figure those out

2021-10-21

2021-10-22

I’m having trouble importing a name_prefix’d resource. After I import it successfully and plan I get

+ name_prefix = "something" # forces replacement

Anyone had the same issue and a solution?

Thanks @Alex Jurkiewicz. This would mean that name_prefix’d resources can’t really be imported properly?

I guess lifecycle ignore_changes suits me the best atm.

btw, what resource is it?

here’s a related open aws provider issue https://github.com/hashicorp/terraform-provider-aws/issues/9574 but it’s possible you tripped over a new bug

looks like import currently works only for the aws security group resource and not for any other resource, according to the checklist in the ticket

it was an rds parameters group in my case today

ah ok, makes sense. at least that resource is on bflad's list. hopefully they complete it soon
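For reference, the lifecycle ignore_changes workaround mentioned above might look roughly like this for an RDS parameter group. This is only a sketch: the resource label and the family value are hypothetical, and the prefix is the placeholder from the plan output.

```hcl
# Sketch only: "imported" and the family value are hypothetical placeholders.
resource "aws_db_parameter_group" "imported" {
  name_prefix = "something"
  family      = "postgres13" # assumption: use the family of the existing group

  lifecycle {
    # Ignore the diff on name_prefix so the imported group
    # is not planned for replacement.
    ignore_changes = [name_prefix]
  }
}
```

The trade-off is that later intentional changes to name_prefix are ignored as well, until the lifecycle block is removed.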

Hey everyone, can anyone help me fix the redrive policy for a dead-letter queue?

resource "aws_sqs_queue" "queue1" {
  for_each                   = toset(var.repolist)
  name                       = "${each.value}${var.environmentname}"
  delay_seconds              = 10
  max_message_size           = 86400
  message_retention_seconds  = 40
  receive_wait_time_seconds  = 30
  visibility_timeout_seconds = 30
}

resource "aws_sqs_queue" "deadletter" {
  for_each                   = toset(var.repolist)
  name                       = "${each.value}-deadletter-${var.environmentname}"
  delay_seconds              = 10
  max_message_size           = 86400
  message_retention_seconds  = 40
  receive_wait_time_seconds  = 30
  visibility_timeout_seconds = 30
  redrive_policy = jsonencode({
    deadLetterTargetArn = values(aws_sqs_queue.queue1)[*].arn
    maxReceiveCount     = 4
  })
}

can anyone help me in this

Everything else is working, but I'm unable to add a redrive policy for each value in the repolist variable.

Any help would be appreciated
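One possible fix, sketched on the assumption that each main queue should send failed messages to its own dead-letter queue: put redrive_policy on the main queue and look up the matching dead-letter queue with each.key, since deadLetterTargetArn expects a single ARN string and not a list. The variable declarations are added here only to make the sketch self-contained, and the other queue settings are omitted for brevity.

```hcl
variable "repolist" {
  type = list(string)
}

variable "environmentname" {
  type = string
}

# Dead-letter queues: one per entry in repolist, no redrive policy of their own.
resource "aws_sqs_queue" "deadletter" {
  for_each = toset(var.repolist)
  name     = "${each.value}-deadletter-${var.environmentname}"
}

# Main queues: each one points at the dead-letter queue with the same key.
resource "aws_sqs_queue" "queue1" {
  for_each = toset(var.repolist)
  name     = "${each.value}${var.environmentname}"

  redrive_policy = jsonencode({
    deadLetterTargetArn = aws_sqs_queue.deadletter[each.key].arn
    maxReceiveCount     = 4
  })
}
```

Because both resources iterate over the same set, each.key is guaranteed to exist in aws_sqs_queue.deadletter.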

2021-10-23

Hello everyone, has anyone formatted the additional disks on a Windows EC2 instance and mounted them as different drives via Terraform?

2021-10-25

hi guys, this is a question regarding EKS - is anyone implementing the node termination handler? This is a copy of what the notes of the eks module mention:

• Setting instance_refresh_enabled = true will recreate your worker nodes *without draining them first.* It is recommended to install aws-node-termination-handler for proper node draining. Find the complete example here instance_refresh.

What can go wrong in a production system if we don’t drain the nodes first? The new nodes will already be spun up, and k8s will shift the load to them when we kill the old nodes, correct?

Think of it like this: nodes will be randomly killed. With a termination handler, there will be a clean termination process (move all the pods off the node, respect Pod Disruption Budgets, and so on)

I think if we use min_healthy_percentage = 90 and we have 10 nodes, it will bring down only 1 node at a time, correct?

instance_refresh {
  strategy = "Rolling"
  preferences {
    min_healthy_percentage = 90
  }
  triggers = ["tag"]
}

I mean, sure, this 1 node will be randomly selected, but since we have a time window for the outage anyway, it won't create any issues, right?

I think it would be 1 node at a time, yes.

Also, those are nodes defined as “healthy” from the ASG standpoint. That means they may still be starting your pods, for example. It depends on how interruption-friendly your apps are.

This question is more about what happens to your workloads if they’re not terminated gracefully. Also note that for most ingress controllers (which work on k8s endpoints directly) it’s important to shut down your backend services gracefully, including a preStop hook that waits a few seconds so the k8s endpoint controller can adjust the k8s endpoints before the backend has shut down. Otherwise you’ll most likely see some hiccups, like failing in-flight requests.

Some more background can also be found here https://kubernetes.io/blog/2021/04/21/graceful-node-shutdown-beta/

Authors: David Porter (Google), Mrunal Patel (Red Hat), and Tim Bannister (The Scale Factory) Graceful node shutdown, beta in 1.21, enables kubelet to gracefully evict pods during a node shutdown. Kubernetes is a distributed system and as such we need to be prepared for inevitable failures — nodes will fail, containers might crash or be restarted, and - ideally - your workloads will be able to withstand these catastrophic events.
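To illustrate the preStop hook mentioned above, here is a minimal sketch using the Terraform kubernetes provider. The deployment name, labels, image, and the 5-second sleep are all hypothetical, and it assumes the container image ships a sleep binary.

```hcl
# Sketch only: names, image and timings are placeholders.
resource "kubernetes_deployment" "backend" {
  metadata {
    name = "backend"
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "backend"
      }
    }

    template {
      metadata {
        labels = {
          app = "backend"
        }
      }

      spec {
        # Must be longer than the preStop sleep plus the app's own shutdown time.
        termination_grace_period_seconds = 30

        container {
          name  = "backend"
          image = "nginx:1.21"

          lifecycle {
            pre_stop {
              exec {
                # Wait a few seconds so the endpoints can be updated
                # before the container receives SIGTERM.
                command = ["sleep", "5"]
              }
            }
          }
        }
      }
    }
  }
}
```

The hook only helps when the pod is actually evicted or deleted through the API, which is what a node termination handler arranges before the instance goes away.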

2021-10-26

Any idea how to adjust read_capacity or write_capacity in https://github.com/cloudposse/terraform-aws-dynamodb when autoscaler is turned off? We’ll need the lifecycle ignore to make the autoscaler work but it breaks the manual scale https://github.com/cloudposse/terraform-aws-dynamodb/blob/master/main.tf#L68. AFAIK there is no way to have dynamic lifecycle blocks in terraform so far

Terraform module that implements AWS DynamoDB with support for AutoScaling - GitHub - cloudposse/terraform-aws-dynamodb: Terraform module that implements AWS DynamoDB with support for AutoScaling

Terraform module that implements AWS DynamoDB with support for AutoScaling - terraform-aws-dynamodb/main.tf at master · cloudposse/terraform-aws-dynamodb

unfortunately dynamic lifecycle blocks are not permitted in terraform as of yet

Yeah, so there is basically no way to properly use this module without the autoscaler, right? Maybe a disclaimer would help then

have you tried setting enable_autoscaler to false?

yeah I mean, that’s exactly the point :smile: I’ve turned off enable_autoscaler, which is also disabled by default. The table gets created using the initial values for read/write due to https://github.com/cloudposse/terraform-aws-dynamodb/blob/master/main.tf#L50 but after that you cannot change them anymore using terraform, as they’re ignored by lifecycle.

The only idea I had to fix this is having 2 more or less identical aws_dynamodb_table resources: one with count = local.enabled && var.enable_autoscaler ? 1 : 0 which includes the lifecycle, and one with count = local.enabled && !var.enable_autoscaler ? 1 : 0 which runs without the lifecycle
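A rough sketch of that two-resource idea, not the module's actual code. The resource labels, the variables other than enable_autoscaler, the hash key, and the output are assumptions made only to keep the example self-contained.

```hcl
variable "enabled" {
  type    = bool
  default = true
}

variable "enable_autoscaler" {
  type    = bool
  default = false
}

variable "name" {
  type = string
}

variable "read_capacity" {
  type    = number
  default = 5
}

variable "write_capacity" {
  type    = number
  default = 5
}

locals {
  enabled = var.enabled
}

# Variant used with the autoscaler: capacity changes are ignored.
resource "aws_dynamodb_table" "with_autoscaler" {
  count          = local.enabled && var.enable_autoscaler ? 1 : 0
  name           = var.name
  billing_mode   = "PROVISIONED"
  read_capacity  = var.read_capacity
  write_capacity = var.write_capacity
  hash_key       = "id" # hypothetical key

  attribute {
    name = "id"
    type = "S"
  }

  lifecycle {
    ignore_changes = [read_capacity, write_capacity]
  }
}

# Variant used without the autoscaler: capacity is managed by terraform.
resource "aws_dynamodb_table" "without_autoscaler" {
  count          = local.enabled && !var.enable_autoscaler ? 1 : 0
  name           = var.name
  billing_mode   = "PROVISIONED"
  read_capacity  = var.read_capacity
  write_capacity = var.write_capacity
  hash_key       = "id" # hypothetical key

  attribute {
    name = "id"
    type = "S"
  }
}

# At most one of the two variants exists, so one() yields its name or null.
output "table_name" {
  value = one(concat(
    aws_dynamodb_table.with_autoscaler[*].name,
    aws_dynamodb_table.without_autoscaler[*].name,
  ))
}
```

One caveat with this layout: flipping enable_autoscaler on an existing table moves it to a different resource address, so terraform would plan a destroy and recreate unless the state entry is moved first with terraform state mv.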

ooof I see what you mean.

We’ve done this in https://github.com/cloudposse/terraform-aws-ecs-alb-service-task but it can be a real problem as someone will always come up with a new lifecycle ignore iteration to create yet another resource. There is a PR being worked on that will output the logic of the module to build your own custom resource. But… there must be a better way to do this while still taking advantage of what the module has to offer

Terraform module which implements an ECS service which exposes a web service via ALB. - GitHub - cloudposse/terraform-aws-ecs-alb-service-task: Terraform module which implements an ECS service whic…

@Thomas Eck could you try simply tainting the dynamodb table resource for now anytime you want to update the capacities ?

terraform taint module.dynamodb_table.aws_dynamodb_table.default

then run another plan ?

ehm I mean, using terraform taint would force terraform to destroy and recreate the table, which is definitely not what we want here. Of course this would work, as it would get recreated with the new settings, but as you can imagine that’s not feasible. I see the point regarding the lifecycle iterations, but tbh I don’t think it would be that much of an issue for the dynamodb repo, as the lifecycle there is clearly set to make autoscaling work

ah ok so taint wouldnt simply allow you to modify it in-line

for now, perhaps you could update the table manually since the lifecycle is ignored. not my favorite workaround

yeah I mean, that’s what I did, but I’ll get drift from my IaC and also have some manual interventions which cannot be reviewed, and so on. So if you guys are not up for a workaround like the one I’ve proposed, I would simply move away from the module, as I can’t use it without the autoscaler. That is of course not an issue, so don’t get this wrong. I don’t want to blame anyone or anything like that, but it would make sense to add a disclaimer highlighting this issue

I agree. That would be a good disclaimer in the interim.

looks like the thread about pros and cons of tf over cloudformation is gone, too old so Slack discarded it. I remember modules, imports, resume from where left off at last error, state manipulation and refactoring, were all mentioned as advantages of tf.

CDK and recent improvements to cloudformation (resume from error) shorten that list a bit, with import and state file manipulation still big ones IMO.

Any other big ones I’m forgetting?

Try searching the archive, http://archive.sweetops.com

SweetOps is a collaborative DevOps community. We welcome engineers from around the world of all skill levels, backgrounds, and experience to join us! This is the best place to talk shop, ask questions, solicit feedback, and work together as a community to build sweet infrastructure.

Good idea, strangely, not there (perhaps this only archives the office hours?)

i’m sure it’s in there somewhere

I tried to search for some stuff in the archive some days ago. I definitely knew that the thing I was looking for had been discussed. No luck however:( And I gave up

@Erik Osterman (Cloud Posse) the archive is a daily sync?

2021-10-27

Hi all, I'm seeing a strange error

╷
│ Error: Invalid resource instance data in state
│
│   on .terraform/modules/subnets/public.tf line 46:
│   46: resource "aws_route_table" "public" {
│
│ Instance module.subnets.aws_route_table.public[0] data could not be decoded from the state: unsupported attribute "timeouts".

when executing a totally unrelated import like:

terraform import kubernetes_cluster_role.cluster_admin admin

This is with the latest version of cloudposse/terraform-aws-dynamic-subnets. Any ideas?

Terraform module for public and private subnets provisioning in existing VPC - GitHub - cloudposse/terraform-aws-dynamic-subnets: Terraform module for public and private subnets provisioning in exi…

Forgot to post my fix/resolution to my edge case.

If you just put upper() around the module id for name inside the resource, you do not need to worry about the label being all uppercase. Took me a while to come up with this but it's been rock solid ever since.

// ADFS ASSUME ROLE

resource "aws_iam_role" "adfs_role" {

name = upper(module.adfs_role_label.id)

max_sessi…

Have you checked the new PR “merge queue” feature ? This is gonna be so useful for automating Terraform

2021-10-28

Hey, I’m looking to use the terraform-aws-tfstate-backend module and got curious why you have two dynamodb tables, one with and one without encryption, instead of using some ternary logic to set encryption=false in a single table.

https://github.com/cloudposse/terraform-aws-tfstate-backend/blob/master/main.tf#L230

Terraform module that provision an S3 bucket to store the terraform.tfstate file and a DynamoDB table to lock the state file to prevent concurrent modifications and state corruption. - terraform-…
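What the question proposes could be sketched as a single lock table whose encryption flag comes from a variable. This is not the module's code: the variable name and table name are hypothetical, and the thread does not confirm whether enabled = false behaves identically to leaving the block out.

```hcl
# Hypothetical toggle; not a variable confirmed to exist in the module.
variable "enable_server_side_encryption" {
  type    = bool
  default = true
}

resource "aws_dynamodb_table" "lock" {
  name         = "terraform-state-lock" # hypothetical name
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID" # key name the S3 backend expects for locking

  server_side_encryption {
    enabled = var.enable_server_side_encryption
  }

  attribute {
    name = "LockID"
    type = "S"
  }
}
```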

that’s a great question!