#terraform (2022-01)

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2022-01-03

2022-01-04

What are people doing to get around https://github.com/hashicorp/terraform/issues/28803 ? “Objects have changed outside of Terraform” in > 1.0.X

After upgrading to 0.15.4 terraform reports changes that are ignored. It is exactly like commented here: #28776 (comment) Terraform Version Terraform v0.15.4 on darwin_amd64 + provider registry.ter…

one thing that i think helps is to always run a refresh after apply

Hmm, don’t think that is gonna cut it in a lot of cases…

perhaps, but it helps an awful lot of the time

Won’t help in places we have ignore_changes

Currently seeing

Note: Objects have changed outside of Terraform

Terraform detected the following changes made outside of Terraform since the

last "terraform apply":

# aws_cloudwatch_log_resource_policy.default has been changed

~ resource "aws_cloudwatch_log_resource_policy" "default" {

id = "userservices-ecs-cluster"

~ policy_document = jsonencode( # whitespace changes

{

Statement = [

{

Action = [

"logs:PutLogEvents",

"logs:CreateLogStream",

]

Effect = "Allow"

Principal = {

Service = [

"events.amazonaws.com",

"delivery.logs.amazonaws.com",

]

}

Resource = "arn:aws:logs:us-east-2:789659335040:log-group:/userservices/events/ecs/clusters/userservices-ecs-cluster:*"

Sid = "TrustEventBridgeToStoreLogEvents"

},

]

Version = "2012-10-17"

}

)

# (1 unchanged attribute hidden)

}

Unless you have made equivalent changes to your configuration, or ignored the

relevant attributes using ignore_changes, the following plan may include

actions to undo or respond to these changes.

─────────────────────────────────────────────────────────────────────────────

No changes. Your infrastructure matches the configuration.

Not helpful at all

well that’s a very specific case, and not the majority

I’ve run 5 plans, 4 have confusing “Objects have changed outside of Terraform” , some for “whitespace changes” some not

Folks will just start to ignore all plans if they don’t understand what is going on

the whitespace changes is something the aws provider has been working on pretty actively the last few releases. it’s getting better, but there is still work there

Note: Objects have changed outside of Terraform

Terraform detected the following changes made outside of Terraform since the

last "terraform apply":

# aws_cloudwatch_log_group.default has been changed

~ resource "aws_cloudwatch_log_group" "default" {

id = "/userservices/events/ecs/clusters/userservices-ecs-cluster"

name = "/userservices/events/ecs/clusters/userservices-ecs-cluster"

tags = {

"Environment" = "global"

"Name" = "userservices-global-userservices-ecs-cluster"

"Namespace" = "userservices"

"bamazon:app" = "userservices-ecs-cluster"

"bamazon:env" = "global"

"bamazon:namespace" = "bamtech"

"bamazon:team" = "userservices"

}

+ tags_all = {

+ "Environment" = "global"

+ "Name" = "userservices-global-userservices-ecs-cluster"

+ "Namespace" = "userservices"

+ "bamazon:app" = "userservices-ecs-cluster"

+ "bamazon:env" = "global"

+ "bamazon:namespace" = "bamtech"

+ "bamazon:team" = "userservices"

}

# (2 unchanged attributes hidden)

}

# aws_cloudwatch_log_resource_policy.default has been changed

~ resource "aws_cloudwatch_log_resource_policy" "default" {

id = "userservices-ecs-cluster"

~ policy_document = jsonencode( # whitespace changes

{

Statement = [

{

Action = [

"logs:PutLogEvents",

"logs:CreateLogStream",

]

Effect = "Allow"

Principal = {

Service = [

"events.amazonaws.com",

"delivery.logs.amazonaws.com",

]

}

Resource = "arn:aws:logs:eu-west-1:789659335040:log-group:/userservices/events/ecs/clusters/userservices-ecs-cluster:*"

Sid = "TrustEventBridgeToStoreLogEvents"

},

]

Version = "2012-10-17"

}

)

# (1 unchanged attribute hidden)

}

# aws_ecs_cluster.default has been changed

~ resource "aws_ecs_cluster" "default" {

id = "arn:aws:ecs:eu-west-1:789659335040:cluster/userservices-ecs-cluster"

name = "userservices-ecs-cluster"

tags = {

"Environment" = "global"

"Name" = "userservices-global-userservices-ecs-cluster"

"Namespace" = "userservices"

"bamazon:app" = "userservices-ecs-cluster"

"bamazon:env" = "global"

"bamazon:namespace" = "bamtech"

"bamazon:team" = "userservices"

}

+ tags_all = {

+ "Environment" = "global"

+ "Name" = "userservices-global-userservices-ecs-cluster"

+ "Namespace" = "userservices"

+ "bamazon:app" = "userservices-ecs-cluster"

+ "bamazon:env" = "global"

+ "bamazon:namespace" = "bamtech"

+ "bamazon:team" = "userservices"

}

# (2 unchanged attributes hidden)

# (1 unchanged block hidden)

}

# aws_cloudwatch_event_rule.default has been changed

~ resource "aws_cloudwatch_event_rule" "default" {

id = "userservices-ecs-cluster"

name = "userservices-ecs-cluster"

tags = {

"Environment" = "global"

"Name" = "userservices-global-userservices-ecs-cluster"

"Namespace" = "userservices"

"bamazon:app" = "userservices-ecs-cluster"

"bamazon:env" = "global"

"bamazon:namespace" = "bamtech"

"bamazon:team" = "userservices"

}

+ tags_all = {

+ "Environment" = "global"

+ "Name" = "userservices-global-userservices-ecs-cluster"

+ "Namespace" = "userservices"

+ "bamazon:app" = "userservices-ecs-cluster"

+ "bamazon:env" = "global"

+ "bamazon:namespace" = "bamtech"

+ "bamazon:team" = "userservices"

}

# (5 unchanged attributes hidden)

}

Unless you have made equivalent changes to your configuration, or ignored the

relevant attributes using ignore_changes, the following plan may include

actions to undo or respond to these changes.

─────────────────────────────────────────────────────────────────────────────

No changes. Your infrastructure matches the configuration.

lol

oof, the tags_all stuff is implemented so poorly. very annoying
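For context on where `tags_all` comes from: in the AWS provider it is a computed attribute that merges a resource's own `tags` with any `default_tags` set on the provider block. That is presumably why it appears as an addition in the notes above even though nobody edited the tags by hand. A minimal sketch, with a hypothetical log group name and tag values:

```hcl
provider "aws" {
  region = "us-east-2"

  # Tags applied to every taggable resource created through this provider.
  default_tags {
    tags = {
      Environment = "global"
    }
  }
}

resource "aws_cloudwatch_log_group" "example" {
  name = "/example/events" # hypothetical name

  # Resource-level tags. The provider computes tags_all as default_tags
  # merged with these; resource tags win when a key appears in both.
  tags = {
    Name = "example"
  }
}

output "effective_tags" {
  value = aws_cloudwatch_log_group.example.tags_all
}
```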

just bumped/ found https://github.com/boltops-tools/terraspace and i thought i should share it in case folks are not aware of it.

Hi team. I’ve opened a bug here https://github.com/cloudposse/terraform-provider-awsutils/issues/26

For me the awsutils_default_vpc_deletion resource deletes an unknown vpc then it reports as no default vpc found.

Describe the Bug I'm trying to delete the default VPC using awsutils_default_vpc_deletion but nothing happens on apply. After apply it said it removed the default vpc with id vpc-caf666b7 but m…

We use it

@matt

The AWS v4 provider allows you to delete the default VPC…

Implement Full Resource Lifecycle for Default Resources

Default resources (e.g. aws_default_vpc, aws_default_subnet) previously could only be read and updated. However, recent service changes now enable users to create and delete these resources within the provider. AWS has added corresponding API methods that allow practitioners to implement the full CRUD lifecycle.

In order to avoid breaking changes to default resources, you must upgrade to use create and delete functionality via Terraform, with the caveat that only one default VPC can exist per region and only one default subnet can exist per availability zone.

Obviously haven’t tested it, but that’s what the blog says
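A minimal sketch of what that could look like with the v4 provider, equally untested here. It assumes the `force_destroy` argument on `aws_default_vpc` behaves as the v4 documentation describes; the version constraint is only illustrative.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0"
    }
  }
}

# If a default VPC already exists, Terraform adopts it rather than
# creating a new one.
resource "aws_default_vpc" "default" {
  # With force_destroy = true, destroying this resource deletes the
  # default VPC itself. With the default (false), destroy only removes
  # it from state and leaves the VPC in place.
  force_destroy = true
}
```

Deletion can still fail if the VPC contains dependent resources such as subnets or an internet gateway, so those may need to be handled first.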

It used to be best practice to not delete the default VPC. Is that no longer true?

It is still a best practice to remove the default VPC. In fact, AWS Security Hub will flag default VPCs in the standard compliance results as a threat to remediate.

Anybody actually used that resource?

v1.1.0 1.1.0 (December 08, 2021) If you are using Terraform CLI v1.1.0 or v1.1.1, please upgrade to the latest version as soon as possible. Terraform CLI v1.1.0 and v1.1.1 both have a bug where a failure to construct the apply-time graph can cause Terraform to incorrectly report success and save an empty state, effectively “forgetting” all existing infrastructure. Although configurations that already worked on previous releases should not encounter this problem, it’s possible that incorrect future…

v1.1.1 1.1.1 (December 15, 2021) If you are using Terraform CLI v1.1.0 or v1.1.1, please upgrade to the latest version as soon as possible. Terraform CLI v1.1.0 and v1.1.1 both have a bug where a failure to construct the apply-time graph can cause Terraform to incorrectly report success and save an empty state, effectively “forgetting” all existing infrastructure. Although configurations that already worked on previous releases should not encounter this problem, it’s possible that incorrect future…

hm, v1.1.2 already came out. I guess they edited the release notes to mention the major bug

yeah, someone just commented that in hangops (i assume they’re a Hashicorp employee)…

Hey all, those are just release notes updates that add some scare text to the top, to try to keep folks off those versions - nothing new to see here.
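One way to keep a team off the affected releases is to exclude them in the version constraint. A small sketch; the `>= 1.0.2` floor is just an example value, not something from the release notes:

```hcl
terraform {
  # Accept 1.0.2 or newer, but refuse the two releases with the
  # empty-state bug described in the release notes.
  required_version = ">= 1.0.2, != 1.1.0, != 1.1.1"
}
```

With this in place, running any command under 1.1.0 or 1.1.1 fails with an unsupported-version error instead of proceeding.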

Hey folks, we have a DevSecOps webinar coming up this week about Terraform and how you can use it safely in pipelines https://www.meetup.com/sydney-hashicorp-user-group/events/283063949/

Fri, Jan 7, 12:00 PM AEDT: Deploying Infrastructure with Infrastructure as code is great, but are you protected in case someone accidentally commits the wrong thing? In this webinar, Brad McCoy and B

is there any usable, open-source baseline ruleset yet?

Hey @Moritz we have setup some common rego files that we use for gcp, azure, and aws. recording is here where we talk about it https://www.youtube.com/watch?v=V12785HySYM

2022-01-05

Hi colleagues, i need to make appstream work via terraform, do i understand correctly that the only way to do that is the usage of a 3rd party provider that is not the official aws one?

appstream = {
  source  = "arnvid/appstream"
  version = "2.0.0"
}

recently added see https://github.com/hashicorp/terraform-provider-aws/releases/tag/v3.67.0 https://github.com/hashicorp/terraform-provider-aws/blob/main/ROADMAP.md#amazon-appstream

FEATURES: New Data Source: aws_ec2_instance_types (#21850) New Data Source: aws_imagebuilder_image_recipes (#21814) New Resource: aws_account_alternate_contact (#21789) New Resource: aws_appstream…

Terraform AWS provider. Contribute to hashicorp/terraform-provider-aws development by creating an account on GitHub.

for more details https://github.com/hashicorp/terraform-provider-aws/issues/6508

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or "me to…

thanks but i can’t make it work even if i use the aws 3.70 provider - any idea what is wrong?

Initializing the backend...

Initializing provider plugins...

- Finding hashicorp/aws versions matching "3.70.0"...

- Finding latest version of hashicorp/appstream...

- Using previously-installed hashicorp/aws v3.70.0

╷

│ Error: Failed to query available provider packages

│

│ Could not retrieve the list of available versions for provider hashicorp/appstream: provider registry registry.terraform.io

│ does not have a provider named registry.terraform.io/hashicorp/appstream

│

│ All modules should specify their required_providers so that external consumers will get the correct providers when using a

│ module. To see which modules are currently depending on hashicorp/appstream, run the following command:

│ terraform providers

╵

> terraform providers

Providers required by configuration:

.

├── provider[registry.terraform.io/hashicorp/aws] 3.70.0

├── provider[registry.terraform.io/hashicorp/appstream]

├── module.iam

│ └── provider[registry.terraform.io/hashicorp/aws]

└── module.tags

Providers required by state:

provider[registry.terraform.io/hashicorp/aws]

terraform {
  required_version = ">= 1.0.2"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "3.70.0"
    }
  }
}

this is our config @ismail yenigul if you have any idea please

clean your .terraform caches etc.

$ cat main.tf

terraform {
  required_version = ">= 1.0.2"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "3.70.0"
    }
  }
}

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Finding hashicorp/aws versions matching "3.70.0"...

- Using hashicorp/aws v3.70.0 from the shared cache directory

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

try

$ rm -rf ~/.terraform.d

$ rm -rf .terraform

I haven’t tested it yet btw. I am using cloudformation over terraform for appstream

cat main.tf

terraform {
  required_version = ">= 1.0.2"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "3.70.0"
    }
  }
}

resource "aws_appstream_fleet" "test" {
  name          = "test"
  image_name    = "Amazon-AppStream2-Sample-Image-02-04-2019"
  instance_type = "stream.standard.small"

  compute_capacity {
    desired_instances = 1
  }
}

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_appstream_fleet.test will be created

+ resource "aws_appstream_fleet" "test" {

+ arn = (known after apply)

+ created_time = (known after apply)

+ description = (known after apply)

+ disconnect_timeout_in_seconds = (known after apply)

+ display_name = (known after apply)

+ enable_de

i tried the delete commands but still the same error

Providers required by configuration:

.

├── provider[registry.terraform.io/hashicorp/appstream]

something in the config is asking for the registry.terraform.io/hashicorp/appstream provider but i can’t figure out what it is…..

Finding latest version of hashicorp/appstream..

can you check all your providers? this should not be there

do you have something like following?

provider "appstream" {
  # Configuration options
}

not really

can you search for the provider string in the code

sure, but only the screenshots that i provided before exist

remove .lock file and try again or create a new directory and copy only .tf files there

once i delete the resource “appstream_stack” the error disappears……

because it comes from https://registry.terraform.io/providers/arnvid/appstream/latest/docs/resources/stack

it should be

resource "aws_appstream_stack" "test"

for the official aws provider

Added a new resource, doc and tests for AppStream Stack Fleet Association called aws_appstream_stack_fleet_association Community Note Please vote on this pull request by adding a reaction to the…

indeed :tada: mate thank you so much!!! just a small aws_ keyword created so much confusion!!!
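Why the prefix matters: Terraform infers which provider a resource belongs to from the first word of the resource type (the part before the first underscore). A type named `appstream_stack` therefore implies a provider with the local name `appstream`, which Terraform looks up as `hashicorp/appstream` unless `required_providers` says otherwise. A minimal sketch of the working form, reusing the config from the thread; the stack name is hypothetical:

```hcl
terraform {
  required_version = ">= 1.0.2"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "3.70.0"
    }
  }
}

# Resolves to hashicorp/aws because the type starts with "aws_".
resource "aws_appstream_stack" "test" {
  name = "test" # hypothetical stack name
}

# By contrast, a block such as
#   resource "appstream_stack" "test" { ... }
# implies a provider with local name "appstream". Without a matching
# required_providers entry (for example source = "arnvid/appstream"),
# Terraform searches for hashicorp/appstream, which does not exist.
```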

resource "aws_acm_certificate" "this" {
  for_each = toset(var.vpn_certificate_urls)

  domain_name               = each.value
  subject_alternative_names = ["*.${each.value}"]
  certificate_authority_arn = var.certificate_authority_arn

  tags = {
    Name = each.value
  }

  options {
    certificate_transparency_logging_preference = "ENABLED"
  }

  lifecycle {
    create_before_destroy = true
  }

  provider = aws.so
}

Any tips on this? Error message on certificate in console: ‘The signing certificate for the CA you specified in the request has expired.’ https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/acm_certificate According to the docs above, you can create a cert signed by a private CA by passing the CA arn

Creating a private CA issued certificate

domain_name - (Required) A domain name for which the certificate should be issued

certificate_authority_arn - (Required) ARN of an ACM PCA

subject_alternative_names - (Optional) Set of domains that should be SANs in the issued certificate. To remove all elements of a previously configured list, set this value equal to an empty list ([]) or use the terraform taint command to trigger recreation.

Hello Guys - I used the bastion incubator helm chart from here and deployed on K8s and tried to connect to it using below commands but getting permission denied always.

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app=bastion-bastion" -o jsonpath="{.items[0].metadata.name}")

echo "Run 'ssh -p 2222 127.0.0.1' to use your application"

kubectl port-forward $POD_NAME 2222:22

➜ ssh -p 2211 [email protected]

The authenticity of host '[127.0.0.1]:2211 ([127.0.0.1]:2211)' can't be established.

RSA key fingerprint is SHA256:S44NDDfev4x8NCJHMVJgYXrhx4OS/SoYGer5TMGUgqg.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '[127.0.0.1]:2211' (RSA) to the list of known hosts.

[email protected]: Permission denied (publickey).

• I checked the Github API Key is correct

• I checked the SSH key in Github is there as well

• I also checked the users created in github-authorized-keys & bastion containers as well from Github team that is configured in values.yaml file

Is there anything missing from my end? can you point me somewhere to fix the issue?

The “Cloud Posse” Distribution of Kubernetes Applications - charts/incubator/bastion at master · cloudposse/charts

This is what I get in logs.

Connection closed by authenticating user azizzoaib786 127.0.0.1 port 59712 [preauth]

Connection closed by authenticating user azizzoaib786 127.0.0.1 port 59730 [preauth]

Issue opened - https://github.com/cloudposse/charts/issues/269

We’re not really using this anymore

we’ve moved to using SSM agent with a bastion instance

So that means its deprecated project?

pending customer sponsorship, we’re not prioritizing investment into it

Terraform module to define a generic Bastion host with parameterized user_data and support for AWS SSM Session Manager for remote access with IAM authentication. - GitHub - cloudposse/terraform-aw…

this is actively maintained

The problem with the k8s bastion is if your k8s is truly hosed, even the bastion is unavailable.

2022-01-06

Does anyone know how I can do this using Terraform? I have deployed a Lambda function, created a CF Distribution, associated the origin-request to it.. But right now it’s giving me a 503 because the “function is invalid or doesn’t have the required permissions”.

This is our setup for the Lambda:

data "archive_file" "edge-function" {
  type        = "zip"
  output_path = "function.zip"
  source_file = "function.js"
}

data "aws_iam_policy_document" "lambda-role-policy" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type = "Service"
      identifiers = [
        "lambda.amazonaws.com",
        "edgelambda.amazonaws.com"
      ]
    }
  }
}

resource "aws_iam_role" "function-role" {
  name               = "lambda-role"
  assume_role_policy = data.aws_iam_policy_document.lambda-role-policy.json
}

resource "aws_iam_role_policy_attachment" "function-role-policy" {
  role       = aws_iam_role.function-role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

resource "aws_lambda_function" "function" {
  function_name    = "headers-function"
  filename         = data.archive_file.edge-function.output_path
  source_code_hash = data.archive_file.edge-function.output_base64sha256
  role             = aws_iam_role.function-role.arn
  runtime          = "nodejs14.x"
  handler          = "function.handler"
  memory_size      = 128
  timeout          = 3
  publish          = true
}

This is the CF assignment of the Lambda:

lambda_function_association {
  event_type   = "origin-response"
  lambda_arn   = aws_lambda_function.function.qualified_arn
  include_body = false
}

I bet there’s a module for that

Thanks @omry. I am doing - more or less - the same yet the function doesn’t seem to work.. Just to check: do you see CF under Triggers in your Lambda function?

But perhaps I’m looking in the wrong place and is such a trigger not needed. Haven’t needed Lambda@Edge until now

Why do you need it?

One of our dev teams is working on a new application and they are using NextJS for that.

This uses some kind of proxy running within Lambda@Edge to determine whether to serve static content (from an S3 bucket) or pass it along to the API gateway and subsequent Lambdas attached to it to handle that particular request

According to the error it looks like the lambda is calling some other AWS services but might not have permission to access them

It appears that it is not published as a Lambda@Edge function, just as a plain Lambda. That’s why CF can’t find it

But in your L@E function, do you see anything at the Triggers page?

Not sure, but it’s presented in CF

Let me take a look at my TF code, as I have an origin-request L@E function to handle the CSP headers for both my static site and API Gateway/Lambda function
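One thing worth checking in a setup like this, offered as a possible cause rather than a confirmed diagnosis: Lambda@Edge functions have to be created in us-east-1, and CloudFront must reference a published, numbered version rather than `$LATEST`. The sketch below is a variant of the function resource from the thread pinned to us-east-1. The provider alias name `edge` is a hypothetical choice, and the fragment relies on the `archive_file` data source and IAM role defined in the thread.

```hcl
# Lambda@Edge functions must live in us-east-1, whatever region the
# rest of the stack uses. The alias name is a hypothetical choice.
provider "aws" {
  alias  = "edge"
  region = "us-east-1"
}

resource "aws_lambda_function" "function" {
  provider = aws.edge

  function_name    = "headers-function"
  filename         = data.archive_file.edge-function.output_path
  source_code_hash = data.archive_file.edge-function.output_base64sha256
  role             = aws_iam_role.function-role.arn
  runtime          = "nodejs14.x"
  handler          = "function.handler"
  memory_size      = 128
  timeout          = 3

  # Publish a numbered version so qualified_arn points at it;
  # CloudFront cannot use $LATEST.
  publish = true
}
```

It may also be worth confirming the event type: the question describes an origin-request association, while the posted `lambda_function_association` block uses `origin-response`.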

Does anyone use, or has anyone used Ansible enough to shed some light on when (what types of tasks) Ansible would definitely be better than Terraform? Context: cloud, not on-prem.

Basically wondering if I should invest some time learning Ansible. It’s yet another DSL and architecture and system to manage (master etc) so there should be a significantly sized set of tasks that are significantly easier to do with it than with Terraform, in order to justify it.

For me Ansible is super usable for creating machine images (together with packer), but other than that I never fall back on Ansible anymore.

I use Terraform or Ansible, but try to avoid using both. I lean towards using Terraform myself, not very good at writing YAML.

os-level system management is all i do with ansible, e.g. files, configs, packages, services, etc. nothing specifically cloud

That ^^

which has its place. i think both are worth learning, but i wouldn’t focus on the cloud portions of ansible

I get the sense that Ansible is strongest in configuration management. One concern though, when applied to OS, is updates: it does not reset the state of the OS, eg if you did something manually to the OS, this change will remain.

But I could see creating a fresh OS image (like an AMI) by creating a temp machine via Ansible, configuring it, capturing it as image and tearing it down. Then when you need to update a system, you treat it as stateless and use terraform to apply the new AMI (ie there is no post-startup saved state on there except in data volumes that can be remounted after changing to the new AMI).

Ansible is a configuration management tool, mutable and stateless by design. Definitely worth learning. Re your concern on OS updates; Ansible will just run the playbook to check for updates and install them as required.. if someone manually installs updates after this, no problem; the next time Ansible runs, those updates won’t be available to install so it’ll come back with nothing to do

In an ideal world, you should be using Terraform and Ansible together. For e.g. you’d use Terraform to deploy your ec2 instance and Ansible to configure it after the fact (change hostname, make local changes to the host, join domain etc;). Whilst you can use things like SSM documents in Terraform to achieve some of those things (for e.g. joining the domain), they become immutable and when you do things like a Terraform destroy, Terraform won’t disjoin the machine from the domain so you’ll end up with orphaned objects in AD. As the saying goes; if a hammer is your only tool, every problem begins to look like a nail.. hope this helps

Thanks @IK exactly what I was looking for, in particular your example about using ssm via terraform leading to orphaned objects in AD.

If you (or anyone else!) has any other examples where there is a clear advantage of using ansible over the equivalent in terraform, it would help me make a case for it.

if your requirement is to build images, i would use packer+ansible. packer is purpose built for that, and addresses a ton of use cases and scenarios around interacting with the cloud provider. and it has a packer plugin to make it easy to use them together
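On the Terraform side, the image-then-replace pattern described above can be sketched as follows. This assumes the Packer+Ansible build publishes AMIs under a name prefix; the prefix, instance type, and resource names are all hypothetical.

```hcl
# Look up the newest AMI produced by the image pipeline.
data "aws_ami" "app" {
  most_recent = true
  owners      = ["self"]

  filter {
    name   = "name"
    values = ["app-base-*"] # hypothetical naming scheme
  }
}

resource "aws_instance" "app" {
  ami           = data.aws_ami.app.id
  instance_type = "t3.micro" # hypothetical size

  # A new AMI id forces replacement of the instance; bring the new one
  # up before the old one is destroyed.
  lifecycle {
    create_before_destroy = true
  }
}
```

Each time a new image is baked, the next plan shows the instance being replaced, so the OS is treated as stateless and any persistent data has to live on separately managed volumes.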

i think the advantages are pretty clear.. i mean ultimately, terraform is not a configuration management tool and when you try to make it one, it quickly falls over (as per the SSM example i gave).. the way i see it, terraform is a day-0 tool (provisioning) and ansible is a day1+ tool (configuration management)

terraform is fantastic at continuing to manage the things it provisions. defining “configuration management” only as what happens inside an operating system is a very very limited and restricted definition of the term!

@IK Yeah but it’s the concrete examples that are hard to come by. I’ve seen the exact same statements about day 0 and 1 on the web, mutability, configuration management vs provisioning, but it’s the substantive examples that give weight to these statements

@loren I agree.. would be interested to hear your thoughts on what more configuration management is

oh i just feel like most everything has configuration. the tags applied to a vpc are configuration. the subnets associated to a load balancer are configuration. i use terraform to manage and update that configuration, and if somehow it was changed i often use terraform to tell me and to set it back

v1.1.3 1.1.3 (January 06, 2022) BUG FIXES: terraform init: Will now remove from the dependency lock file entries for providers not used in the current configuration. Previously it would leave formerly-used providers behind in the lock file, leading to “missing or corrupted provider plugins” errors when other commands verified the consistency of the installed plugins against the locked plugins. (<a…

Terraform uses the dependency lock file .terraform.lock.hcl to track and select provider versions. Learn about dependency installation and lock file changes.

I recently took a stand that our teams should avoid using terraform to configure Datadog Monitors and Dashboards (despite having written a module to configure the Datadog - AWS integration).

I’ll say more about why in the thread, but the relevant TL;DR is that part of the workflow for configuring Datadog is to contextualize the monitors and dashboards with historical data. Doing so via a manifest doesn’t make sense.

What do you think? Do you agree? Have you seen examples where Datadog via code is super useful?

Here’s a snippet of my argument:

I do not believe that Datadog Monitors (and other configurations) are suited to be configured from API.

In more detail: Terraform’s for_each and module functionality make it possible to templatize certain Datadog resource patterns, but doing so interweaves dependencies and raises the question of whether the larger pattern must be updated every time an incremental improvement is made. I believe this linking to templates reduces the ability of engineers to tune the Datadog Monitors and Dashboards to their needs, especially as Slideshare’s infrastructure is being heavily refactored. I do not believe that the templating advantage outweighs the need for monitoring to be continuously updated with the latest context about operational concerns. The toil associated with adding notes, adjusting thresholds, etc. should be minimized with the utmost priority.

Using terraform directly for configuring Datadog severely curtails the usability of Datadog, since part of the workflow for configuring Datadog is to contextualize the monitors and dashboards with historical data. Doing so via a manifest would require actually using the web interface to produce the configuration, then exporting it to JSON, then translating that to HCL.

Having two places where changes may be introduced creates a sort of dual-writer problem, would require periodic reconciliation, and would result in confusion and non-beneficial work.

I’d rather not introduce a release process to a SaaS service, especially one that benefits greatly from using the web interface.

I disagree. We’ve been configuring datadog monitors using yaml and terraform and it’s wonderful. We can recreate standard monitors for specific services and we can create generic monitors with standardized messages using code.

if we used clickops, it would be a lot more challenging for consistency

@Nathaniel Selzer interesting thread re: our recent internal conversations

this might help make it easier to use.

so we dont have to mess around with custom hcl per monitor, we have a generic module that reads it from yaml. here’s an example yaml catalog.

https://github.com/cloudposse/terraform-datadog-platform/blob/master/catalog/monitors/amq.yaml

Terraform module to configure and provision Datadog monitors, custom RBAC roles with permissions, Datadog synthetic tests, Datadog child organizations, and other Datadog resources from a YAML confi…

we also allow deep merging of this yaml to setup default monitor inputs.
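A rough sketch of the YAML-catalog idea, to make the shape concrete. This is not the cloudposse module's actual implementation. It assumes each catalog file is a map of monitor name to settings that includes at least a `query` key, and it uses Terraform's built-in `merge`, which is shallow, where the module is described as doing a deep merge. The default values are hypothetical.

```hcl
terraform {
  required_providers {
    datadog = {
      source = "DataDog/datadog"
    }
  }
}

locals {
  # Defaults applied to every monitor; values are hypothetical.
  monitor_defaults = {
    type    = "metric alert"
    message = "Please investigate."
  }

  # Each catalog file holds a map of monitor name => settings.
  catalog_files = fileset(path.module, "catalog/monitors/*.yaml")

  monitors_raw = merge([
    for f in local.catalog_files : yamldecode(file("${path.module}/${f}"))
  ]...)

  # Shallow merge: keys set in YAML override the defaults.
  monitors = {
    for name, cfg in local.monitors_raw : name => merge(local.monitor_defaults, cfg)
  }
}

resource "datadog_monitor" "this" {
  for_each = local.monitors

  name    = each.key
  type    = each.value.type
  query   = each.value.query
  message = each.value.message
}
```

Adding a monitor then means adding a YAML entry rather than writing new HCL.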

We also use TF to deploy DD monitors. We deploy the same monitors to multiple similar environments (client A prod, client B prod, client A UAT, etc.), across multiple services. We do this rather than use multi-monitors, because we need different thresholds in each environment/service and that’s not supported.

I broke it out so each monitor is in its own yaml file, and once you create the monitor, we configure environments.yaml so the monitor is deployed to either selected or to all environments. In my last job, we used Python and JSON to persist monitors, which was awful for multi-line messages.

This was my initiation to flattening() environments, services, and monitor defs into a data structure suitable for for_each processing.
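For readers who have not done this before, here is a minimal sketch of that flattening step. It is an illustration under assumed inputs, not the poster's actual code: the environment names, service lists, metric name, and thresholds are all hypothetical.

```hcl
terraform {
  required_providers {
    datadog = {
      source = "DataDog/datadog"
    }
  }
}

locals {
  # Hypothetical inputs: environments with their services, and monitor
  # definitions with one threshold per environment.
  environments = {
    "client-a-prod" = ["api", "worker"]
    "client-a-uat"  = ["api"]
  }

  monitor_defs = {
    high_latency = {
      query_template = "avg(last_5m):avg:trace.http.request.duration{env:%s,service:%s} > %v"
      thresholds     = { "client-a-prod" = 1, "client-a-uat" = 2 }
    }
  }

  # One object per (environment, service, monitor) combination.
  monitor_instances = flatten([
    for env, services in local.environments : [
      for svc in services : [
        for mon, def in local.monitor_defs : {
          key       = "${env}/${svc}/${mon}"
          name      = "[${env}] ${svc} ${mon}"
          threshold = def.thresholds[env]
          query     = format(def.query_template, env, svc, def.thresholds[env])
        }
      ]
    ]
  ])
}

resource "datadog_monitor" "per_service" {
  # for_each needs a map with unique keys known at plan time.
  for_each = { for m in local.monitor_instances : m.key => m }

  name    = each.value.name
  type    = "metric alert"
  query   = each.value.query
  message = "Latency above ${each.value.threshold}s"

  monitor_thresholds {
    critical = each.value.threshold
  }
}
```

The composite key keeps resource addresses stable, so adding a service or environment only creates new monitors instead of reshuffling existing ones.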

We have several kinds of monitors that monitor different types of things at different levels. Currently, our TF only supports deployment of monitors at the client environments/service level. We have plans to add one-offs and enhance the inclusion/exclusion functionality.

I could see the use case if Datadog Monitors are being deployed consistently across multiple client environments. This essentially turns Datadog configuration into part of the product. Makes sense to version control then, since delivering updates to the monitoring suite becomes more important.

To elaborate, at my organization there is only a couple environments, and I found that some engineers were using terraform to codify Datadog Monitors and Dashboards to be applied once. This was hampering their ability to make changes without invoking a terraform release process, so we’re moving away from that.

@RB, I’m curious, what kind of environment(s) are you working on that work well with the terraform-datadog pattern?

It’s all tradeoffs. Picking which battles you want to fight based on what resources you have available. e.g. the same argument can be made for so many things. It’s so easy, afterall, to clickops an EKS cluster and deploy mongodb with a helm chart. Maybe < 30 minutes of work. Now “operationalize it”, takes 10x more effort.

I think it’s okay if teams want to click ops some datadog monitors to get unblocked, but as a convention, I don’t like it. Maybe for a small company, with 1-3 devs with small scale infra.

We just revamped our entire strategy for how to handle datadog/opsgenie. Major refactor on the opsgenie side. On the datadog side, we did the refactor last year some time, moving to the catalog convention.

When we develop a new monitor, we still develop it in the UI, but then codify it in YAML as a datadog query.

Does this UX suck? ya, it’s a tradeoff, but one that we can always walk back. We know how to deploy any previous version. We have a smooth CD process with terraform via spacelift.

the reality is very few companies I think practice good gitops when it comes to monitoring and incident management. when going through our rewrite, i reflected on this. i think it’s because it’s so hard to get it right with the right level of abstraction in the right places. i think now we finally have the patterns down to solve it (literally we’re rolling it out this week). check back with me in a couple months to see how it’s going.

is the terraform release process cumbersome?

the other thing to think about with monitoring is how easy it is to screw it up. someone can go change the thresholds and make an honest mistake of the wrong unit (E.g. 1s vs 1000ms)

not every engineer will have the same level of experience. the PR process helps level the playing field making it possible for more people to contribute.

with the spacelift drift detection, if changes are made in the datadog UI, that’s cool, but we’ll get a drift detected in our terraform plans within 24 hours. Then we can go remediate it.

lastly, we’ve probably spent 2000 hours (20+ sprints) on datadog/opsgenie work in terraform just in the past year. so is it easy to get it right? nope. but is it possible? i believe it is, which is why we do what we do.

you can certainly manage some subset of your monitors with clickops. don’t get in the way of developers if you don’t have the resources in place to handle it in better ways. let them develop the monitors, burn them in, and mature them. then document them in code. tag monitors managed by terraform as such, so you know which are clickops and which are automated.
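
As a rough illustration of the tagging advice, here is a sketch of a single Terraform-managed monitor. The monitor name, metric, query, and tag values are all hypothetical; the point is only the managed-by tag, plus the fact that a threshold lives in reviewed code where a unit mistake (1 s vs 1000 ms) can be caught in a PR:

```hcl
terraform {
  required_providers {
    datadog = {
      source = "DataDog/datadog"
    }
  }
}

resource "datadog_monitor" "api_latency" {
  name    = "[client-a-prod] api latency is high" # hypothetical name
  type    = "metric alert"
  message = "Average request latency is above 1 second."

  # Hypothetical metric, assumed to be reported in seconds.
  # The value compared in the query must match the critical threshold below.
  query = "avg(last_5m):avg:trace.http.request.duration{service:api,env:client-a-prod} > 1"

  monitor_thresholds {
    critical = "1"
  }

  # The tag distinguishes Terraform-managed monitors from clickops ones.
  tags = ["managed-by:terraform", "env:client-a-prod", "service:api"]
}
```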

@Erik Osterman (Cloud Posse) "It's so easy, after all, to clickops an EKS cluster and deploy mongodb with a helm chart. Maybe < 30 minutes of work. Now 'operationalize it', takes 10x more effort." Can you expand on this please? Particularly the 10x more effort part.

Operationalizing it is about taking it over the line into a production-ready configuration.

- DR, Backups, restores

- Environment consistency (e.g. via GitOps)

- Logging

- Monitoring & incident management

- Upgrading automation of mongo (not all helm upgrades are cake walk, if the charts change it can lead to destruction of resources)

- Upgrading the EKS cluster

- Detecting environment drift, remediation

- Security Hardening

- Scaling or better yet autoscaling (storage and compute) for mongodb

- Managing mongodb collections, indexes, etc. …just some things that come to mind

Great points, thanks for that. I totally agree.. i’m actually in 2 minds about deploying a simple ECS cluster with TF vs clickops.. will take me about 15mins via clickops but likely longer via TF.. was wondering if the effort using TF was worthwhile.. after thinking about some of the points you raise, i can see why i’d spend the extra time doing it in TF

Yup, it sort of hurts when you think about it. You’re right, it takes just 15 minutes with clickops. And it seems stupid sometimes that we spend all this time on automating it. With IAC, there’s this dip. You are much less productive at first, until you become tremendously efficient. It’s not until you have the processes implemented that you start achieving greater efficiencies. So if you’re building a POC that will be thrown away, think twice about the IAC (if you don’t already have it). And if it’s inevitably going to reach production, then push back and do it the right way. Also, as consultants, what we see all the time is that something was done quick and dirty, and now we need to come in and fix it, but it’s a lot more gnarly because nothing was documented. There’s no history to understand why something was set up the way it was.

I just got this error, when trying to plan the complete example of the Spacelift cloud-infrastructure-automation mod. What’s the preferred way to report this?

│ Error: Plugin did not respond

│

│ with module.example.module.yaml_stack_config.data.utils_spacelift_stack_config.spacelift_stacks,

│ on .terraform/modules/example.yaml_stack_config/modules/spacelift/main.tf line 1, in data "utils_spacelift_stack_config" "spacelift_stacks":

│ 1: data "utils_spacelift_stack_config" "spacelift_stacks" {

│

│ The plugin encountered an error, and failed to respond to the

│ plugin.(*GRPCProvider).ReadDataSource call. The plugin logs may contain

│ more details.

╵

Stack trace from the terraform-provider-utils_v0.17.10 plugin:

panic: interface conversion: interface {} is nil, not map[interface {}]interface {}

goroutine 55 [running]:

github.com/cloudposse/atmos/pkg/stack.ProcessConfig(0xc000120dd0, 0x6, 0xc00011e348, 0x17, 0xc000616690, 0x100, 0x0, 0x0, 0xc00034bcf0, 0xc00034bd20, ...)

github.com/cloudposse/[email protected]/pkg/stack/stack_processor.go:276 +0x42ad

github.com/cloudposse/atmos/pkg/stack.ProcessYAMLConfigFiles.func1(0xc000120dc0, 0x0, 0x0, 0xc0005130e0, 0x1040100, 0xc0005130d0, 0x1, 0x1, 0xc00050d0e0, 0x0, ...)

github.com/cloudposse/[email protected]/pkg/stack/stack_processor.go:72 +0x3f9

created by github.com/cloudposse/atmos/pkg/stack.ProcessYAMLConfigFiles

github.com/cloudposse/[email protected]/pkg/stack/stack_processor.go:39 +0x1a5

Error: The terraform-provider-utils_v0.17.10 plugin crashed!

This is always indicative of a bug within the plugin. It would be immensely

helpful if you could report the crash with the plugin's maintainers so that it

can be fixed. The output above should help diagnose the issue.

please open an issue

Thanks, @Andriy Knysh (Cloud Posse). Already started the ticket, but the message on that page suggests reaching out here, so…

Anyone know of a working json2hcl2 tool? I’ve tried kvx/json2hcl but it’s hcl1.

fwiw, terraform supports pure json

so no need to convert anything

we exploit this fact to generate “HCL” (in json) code, but prefer to leave it in JSON so it’s clear it’s machine generated.

jsondecode() ?

Heh, pretty much, just don’t. If it’s in json, and you want tf to process it, just save the file extension as .tf.json…
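
To illustrate the .tf.json point, here is a sketch of one resource in native syntax with its JSON-syntax equivalent in the comment. The bucket name is a placeholder. Terraform loads both kinds of file from the same directory, so machine-generated JSON can sit next to hand-written HCL:

```hcl
# main.tf (native syntax)
resource "aws_s3_bucket" "logs" {
  bucket = "example-logs-bucket" # hypothetical name
}

/*
The same resource in JSON syntax. Saved as logs.tf.json it would be loaded
exactly like a .tf file (do not keep both copies, or the resource is
declared twice):

{
  "resource": {
    "aws_s3_bucket": {
      "logs": {
        "bucket": "example-logs-bucket"
      }
    }
  }
}
*/
```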

2022-01-07

hi colleagues i am working on terraforming the appstream on aws, but i cant find anywhere in terraform code the lines for the appstream image registry - am i missing something?

I have a terraform workspace upgrade question. I’m using self-hosted Terraform Enterprise. My workspace is tied to GitHub VCS (no Jenkins or other CI) and set to remote execution, not local. That means the workspace will use whatever tf version is configured (i.e. 0.12.31). I’m trying to upgrade to 0.13, and I want to test whether the plan runs clean with the new (0.13) binary. Am I doing this right?

tfenv use 0.12.31

terraform plan

# it makes the workspace run the plan.. using version 0.12.31

tfenv list-remote | grep 0.13

0.13.6

tfenv install 0.13.6

tfenv use 0.13.6

terraform init

terraform 0.13upgrade

terraform plan

# it STILL runs the workspace's terraform version, 0.12.31!! NOT my local 0.13 binary.

^^ terraform plans whatever version is set by the workspace. Do I have any other options to test the upgrade locally?

If you want to test it locally, you’ll need to set the workspace to local, or change the backend to something else and use an alternate state file.

In remote mode, the plan runs on tf cloud (or TFE) with the workspace’s terraform version, and the output is streamed to your local machine
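
One possible reading of "change the backend and use an alternate state file" is an override file that swaps in a local backend pointing at a copy of the state. This is only a sketch: the file name and state path are made up, and any variables stored in the workspace would still have to be supplied locally (for example through a tfvars file).

```hcl
# backend_override.tf (hypothetical file name; keep it out of version control)
#
# Files ending in _override.tf are merged over the main configuration, and a
# backend block in an override file takes precedence over the original one.
#
# Assumed workflow:
#   1. With the original backend still active:
#        terraform state pull > upgrade-test.tfstate
#   2. Add this file, then run:
#        terraform init -reconfigure
#   3. terraform plan now runs with the local binary against the copied state.
terraform {
  backend "local" {
    path = "upgrade-test.tfstate"
  }
}
```

Deleting the override file and running terraform init -reconfigure again points the working directory back at the remote workspace; the real state is never written to by the local test.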

Thanks, confirms my suspicions. If I change execution mode to “local” , it breaks my CI/CD since I now have to supply variables somehow. So I’ve come up with :

- Ensure tf apply runs clean

- Create feature branch

- tfenv install 0.13.7

- tfenv use 0.13.7

- terraform 0.13upgrade

- Fix syntax

- In TFE UI, make sure auto apply = OFF. Set version to 0.13.7 and branch to our feature branch

- Run plan . Do NOT APPLY.

- If any syntax errors , fix in feature branch, git push.

- Run plan in TFE UI again until it plans clean .

- After this, point of no return.

- Change workspace back to original (master) branch

- Do a PR to master with new 0.13 syntax

- Run tfe apply , should run clean. Fix errors and iterate until done

.. am I off track here ?

Using git::git@github.com:cloudposse/terraform-aws-msk-apache-kafka-cluster.git?ref=tags/0.6.0

I need to enable monitoring on Kafka (AWS MSK). While running terragrunt plan I noticed the server_properties from the aws_msk_configuration resource was going to be deleted. I will try to figure out whether the properties were added later manually, or whether those are some defaults by the module or by AWS itself, etc.

But if anyone knows what’s the default behavior of this module, and what are the best practices for the server properties, that would be useful to know.

Question 2 (related): Let’s say I decide it’s best to freeze server_properties in our Gruntwork templates. AWS MSK appears to store that variable as a plain text file, one key/value per line, no spaces, which I can retrieve with AWS CLI and base64 --decode it:

auto.create.topics.enable=true

default.replication.factor=3

min.insync.replicas=2

...

I’ve tried to pass all that as a Terraform map into inputs / properties for the Kafka cluster module, but it gets deleted / rewritten with spaces by terragrunt plan:

auto.create.topics.enable = true

default.replication.factor = 3

min.insync.replicas = 2

...

I’ve tried to include it as a template file, with the contents of the template just literally the properties file from AWS:

properties = templatefile("server.properties.tpl", { })

But then I get this error:

Error: Extra characters after expression

on <value for var.properties> line 1:

(source code not available)

An expression was successfully parsed, but extra characters were found after

it.

What is a good way to force terragrunt to inject that variable into AWS exactly as I want it?

the kafka module has an input called properties

https://github.com/cloudposse/terraform-aws-msk-apache-kafka-cluster#input_properties

Terraform module to provision AWS MSK. Contribute to cloudposse/terraform-aws-msk-apache-kafka-cluster development by creating an account on GitHub.

have you tried using that? @Florin Andrei

I ended up passing the parameters I need as a map via the properties input, and that seems to work. AWS Support told me I only need to declare the non-default values there.
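
A sketch of what that might look like in terragrunt.hcl, reusing the example keys from earlier in the thread purely for illustration (per the AWS Support advice, only non-default values would actually need to be listed). The earlier templatefile() attempt likely failed because the input is expected to be a map, so a plain string of properties-file text could not be parsed as a map expression:

```hcl
# terragrunt.hcl (fragment)
inputs = {
  # Map of Kafka server properties; keys containing dots must be quoted.
  # Values are illustrative, taken from the example properties listed above.
  properties = {
    "auto.create.topics.enable"  = "true"
    "default.replication.factor" = "3"
    "min.insync.replicas"        = "2"
  }
}
```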

2022-01-09

Most organizations have at least one of these infrastructure problems. How are you solving them?

- Broken Modules Tearing Down Your Configurations

- Drifting Away From What You Had Defined

- Lack of Security & Compliance

- Troublesome Collaboration

- Budgets Out of Hand

those are a lot of issues

the first 2 are solved with terraform deploy services like spacelift and atlantis

3rd is solved with appropriate usage of compliance and aws services like guardduty, shield, inspector, config, etc

4th is solved with git

5th, if related to terraform, can be solved by rightsizing and using the tool infracost

#office-hours today!

i gotta watch the recording, interesting topic !

Hi! I’m using https://github.com/cloudposse/terraform-aws-sso/ . It creates IAM roles through permission sets, and I wonder if anybody has figured out how to get access to the IAM role name (I want to save it in SSM).

It seems to follow the pattern: AWSReservedSSO_{permissionSetName}_{someRandomHash}

Terraform module to configure AWS Single Sign-On (SSO) - GitHub - cloudposse/terraform-aws-sso: Terraform module to configure AWS Single Sign-On (SSO)

they seem to be created by the permission sets: https://docs.aws.amazon.com/singlesignon/latest/userguide/using-service-linked-roles.html

Learn how the service-linked role for AWS SSO is used to access resources in your AWS account.

far as i know, the role name is not returned by the permission set api calls…

i think you could use the iam_roles data source, with a regex pattern specific enough to always match just the one role… probably need to use depends_on to force the dependency. and also, it can take a bit of time for the permission set to populate the role to the account, so might need to use the time provider to delay the call a bit…

instead of depends_on, you could parse the name from the arn attribute of the permission set. that would be somewhat better, in terms of letting terraform manage the dependency graph

Ya, I think @loren’s suggestion is correct. When we wrote the module, the IAM data source didn’t support a regex pattern, but that is a nice addition.

The trouble is that the account where the permission set is created is not necessarily the account (or accounts) where the role is created, which means you likely need a second provider alias to perform the lookup in the correct account.
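
A sketch of the lookup described above, using the aws_iam_roles data source with name_regex and a separate provider alias for the account where the role lands. The alias, profile, permission set name, and SSM parameter name are all assumptions. It also does not handle the propagation delay mentioned earlier, so the role has to exist already when the data source is read:

```hcl
# Hypothetical alias for the member account that receives the SSO role.
provider "aws" {
  alias   = "member"
  region  = "us-east-1"
  profile = "member-account" # hypothetical profile
}

data "aws_iam_roles" "sso_admin" {
  provider = aws.member

  # Roles created from permission sets live under this reserved path.
  path_prefix = "/aws-reserved/sso.amazonaws.com/"

  # Assumes a permission set named "AdministratorAccess"; the regex should be
  # specific enough to match exactly one role.
  name_regex = "AWSReservedSSO_AdministratorAccess_.*"
}

resource "aws_ssm_parameter" "sso_admin_role_name" {
  provider = aws.member

  name = "/sso/roles/administrator-access" # hypothetical parameter name
  type = "String"

  # one() fails if more than one role matched, which guards against a
  # regex that is too loose.
  value = one(data.aws_iam_roles.sso_admin.names)
}
```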

2022-01-10

Hi,

I started looking at the Terraform AWS EC2 Client VPN module and got everything deployed based on the complete example. That worked well so far. I downloaded the client configuration and imported it in the OpenVPN client (which should be supported based on the AWS documentation).

But that’s when my luck runs out. I can’t connect to the VPN and the client provides following error:

Transport Error: DNS resolve error on 'cvpn-endpoint-.....prod.clientvpn.eu-central-1.amazonaws.com' for UDP session: Host not found.

So this appears to be a “networking issue”. My computer can’t resolve the endpoint address. So it appears I missed something in my VPN setup?

Any suggestions what I might be doing wrong?

what OS are you using?

macOS Monterey

are you using tunnelblick? if yes, disable it

FWIW: I am only using the OpenVPN connect client. I don’t think it has anything to do with the VPN tools. The endpoint is just not accessible from the net somehow.

sorry, ya, just realised that after I typed it

how are you setting up the cvpn endpoint? https://docs.aws.amazon.com/vpn/latest/clientvpn-admin/troubleshooting.html

The following topic can help you troubleshoot problems that you might have with a Client VPN endpoint.

I used the Terraform module provided by Cloud Posse to set up the VPN. But that help article probably contains the answer to my problem.

I’ll try modifying the DNS as suggested in the article.

ya I get it… this is “close” to me because Ive been fighting with a bunch of issues on my cvpn deployments… its always had nothing to do with terraform and almost always had to do with missing things in aws documentation tbh

Yeah, the AWS Client VPN hostnames are not resolvable by all DNS servers AFAIR. I remember having to have clients add additional DNS servers to properly resolve the VPN hostname when I last set one up. An annoying issue.

@Leo Przybylski might have some ideas

@Jens Lauterbach You cannot use that address of the VPN endpoint as is. It must be a subdomain like acme.cvpn-endpoint-.....prod.clientvpn.eu-central-1.amazonaws.com

In the client config, you will see something like

remote cvpn-endpoint-.....prod.clientvpn.eu-central-1.amazonaws.com 443

remote-random-hostname

remote-random-hostname is not supported by openvpn AFAIK, so the client tries to connect directly rather than using a remote random hostname. You will need to add this yourself. FYI, you could just use the AWS VPN client which does recognize it.

Disclaimer: It was difficult getting openvpn to work. I don’t believe the AWS client vpn client configuration is fully supported by openvpn; therefore, I don’t recommend it. If possible, use https://aws.amazon.com/vpn/client-vpn-download/ instead

@Matt Gowie I also encountered what you were running into in separate instances. One was when I finally got my VPN client to connect, I could not resolve DNS entries. The reason for this is that unless you are using AWS for DNS service, it will not work. For this, I had to enable this option https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/ec2_client_vpn_endpoint#split_tunnel

What split_tunnel does is send only the traffic destined for the VPN’s routes through the tunnel; everything else goes out over the client’s normal network connection. In this case, it allows traffic to reach DNS servers outside AWS. If you are using DNS servers elsewhere, or your application needs to communicate with services outside AWS infrastructure, this will be important.
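
For reference, a minimal sketch of the split_tunnel setting on the raw aws_ec2_client_vpn_endpoint resource (not the Cloud Posse module). The certificate ARNs are passed in as variables and the CIDR block is a placeholder, so treat it as an illustration rather than a working VPN:

```hcl
variable "server_certificate_arn" {
  type        = string
  description = "ACM certificate ARN for the VPN server (assumed to exist already)"
}

variable "client_root_certificate_arn" {
  type        = string
  description = "ACM certificate ARN of the client root CA (assumed to exist already)"
}

resource "aws_ec2_client_vpn_endpoint" "example" {
  description            = "example client VPN"
  server_certificate_arn = var.server_certificate_arn
  client_cidr_block      = "10.100.0.0/22" # placeholder range

  # Only traffic matching the endpoint's routes goes through the tunnel;
  # everything else (including external DNS) uses the local network.
  split_tunnel = true

  authentication_options {
    type                       = "certificate-authentication"
    root_certificate_chain_arn = var.client_root_certificate_arn
  }

  connection_log_options {
    enabled = false
  }
}
```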

@Jens Lauterbach Regarding the remote-random-hostname in the client config, are you using the client configuration exported from the terraform module or from AWS console? I ask because the terraform module automatically produces a client configuration that will connect to openvpn. If it is not, I’d like to discuss further and resolve that. (See: https://github.com/cloudposse/terraform-aws-ec2-client-vpn#output_client_configuration)

Contribute to cloudposse/terraform-aws-ec2-client-vpn development by creating an account on GitHub.

Pretty interesting email that just lit up all of my inboxes from AWS on a Terraform provider fix… I’m very surprised they went this far to announce a small fix. I wonder if it is bogging down their servers for some reason.

Hello,

You are receiving this message because we identified that your account uses Hashicorp Terraform to create and update Lambda functions. If you are using the V2.x release with version V2.70.1, or V3.x release with version V3.41.0 or newer of the AWS Provider for Terraform you can stop reading now.

As notified in July 2021, AWS Lambda has extended the capability to track the state of a function through its lifecycle to all functions [1] as of November 23, 2021 in all AWS public, GovCloud and China regions. Originally, we informed you that the minimum version of the AWS Provider for Terraform that supports states (by waiting until a Lambda a function enters an Active state) is V2.40.0. We recently identified that this version had an issue where Terraform was not waiting until the function enters an Active state after the function code is updated. Hashicorp released a fix for this issue in May 2021 via V3.41.0 [2] and back-ported it to V2.70.1 [3] on December 14, 2021.

If you are using V2.x release of AWS Provider for Terraform, please use V2.70.1, or update to the latest release. If you are using V3.x version, please use V3.41.0 or update to the latest release. Failing to use the minimum supported version or latest can result in a ‘ResourceConflictException’ error when calling Lambda APIs without waiting for the function to become Active.

If you need additional time to make the suggested changes, you can delay states change for your functions until January 31, 2022 using a special string (awsopt-out) in the description field when creating or updating the function. Starting February 1, 2022, the delay mechanism expires and all customers see the Lambda states lifecycle applied during function create or update. If you need additional time beyond January 31, 2022, please contact your enterprise support representative or AWS Support [4].

To learn more about this change refer to the blog post [5]. If you have any questions, please contact AWS Support [4].

[1] https://docs.aws.amazon.com/lambda/latest/dg/functions-states.html

[2] https://newreleases.io/project/github/hashicorp/terraform-provider-aws/release/v3.41.0

[3] https://github.com/hashicorp/terraform-provider-aws/releases/tag/v2.70.1

[4] https://aws.amazon.com/support

[5] https://aws.amazon.com/blogs/compute/coming-soon-expansion-of-aws-lambda-states-to-all-functions

Sincerely,

Amazon Web Services

Wow, that is surprising

Does anyone know how I would be able to determine what version of TLS is used by TF when making calls to AWS APIs?

What problem are you trying to solve?

Security appears to need the information for some kind of corporate security control.

Hello everyone and good evening. I’m trying to set up https://github.com/cloudposse/terraform-aws-vpc-peering-multi-account for a VPC peering between two regions in a single AWS account.

module "vpc_peering" {

source = "cloudposse/vpc-peering-multi-account/aws"

version = "0.17.1"

namespace = var.namespace

stage = var.stage

name = var.name

requester_vpc_id = var.requester_vpc_id

requester_region = var.requester_region

requester_allow_remote_vpc_dns_resolution = true

requester_aws_assume_role_arn = aws_iam_role.vpc_peering_requester_role.arn

requester_aws_profile = var.requester_profile

accepter_enabled = true

accepter_vpc_id = var.accepter_vpc

accepter_region = var.accepter_region

accepter_allow_remote_vpc_dns_resolution = true

accepter_aws_profile = var.accepter_profile

requester_vpc_tags = {

"Primary" = false

}

accepter_vpc_tags = {

Primary = true

}

}

This is how I’m defining the module right now.

I’ve run terraform init no problems, but when I try to create a plan I get:

│ Error: no matching VPC found

│

│ with module.vpc_peering_west_east.module.vpc_peering.data.aws_vpc.accepter[0],

│ on .terraform/modules/vpc_peering_west_east.vpc_peering/accepter.tf line 43, in data "aws_vpc" "accepter":

│ 43: data "aws_vpc" "accepter" {

╷

│ Error: no matching VPC found

│

│ with module.vpc_peering_west_east.module.vpc_peering.data.aws_vpc.requester[0],

│ on .terraform/modules/vpc_peering_west_east.vpc_peering/requester.tf line 99, in data "aws_vpc" "requester":

│ 99: data "aws_vpc" "requester" {

The module is:

module "vpc_peering_west_east" {

source = "../modules/vpc_peering"

namespace = "valley"

stage = terraform.workspace

name = "valley-us-east-2-to-us-west-2-${terraform.workspace}"

accepter_vpc = "vpc-id-1"

accepter_region = "us-west-2"

accepter_profile = "valley-prod-us-west-2"

requester_vpc_id = "vpc-id-2"

requester_region = "us-east-2"

requester_profile = "valley-prod-us-east-2"

vpc_peering_requester_role_name = "valley-us-west-2-to-us-east-2-${terraform.workspace}"

}

terraform version output is:

Terraform v1.1.3

on linux_amd64

+ provider registry.terraform.io/hashicorp/aws v3.68.0

+ provider registry.terraform.io/hashicorp/null v3.1.0

Both VPCs exist, and if I try to do a simple data block they are detected with the IDs. What am I missing and perhaps what I have not read about this? Thank you beforehand for any help.

The referenced profiles do exist, and they are the ones that were used to create the existing infrastructure in their respective regions.

HNY all just wondering, can a .tfvars file reference a file (EC2 userdata file) as input, or can it only take string? TIA team

2022-01-11

Hi All, I am trying to install mysql database on Windows Server 2016 (64 bit) using terraform. This is not going to be RDS. I am not sure where to start on how to install mysql on Windows Server 2016 (64 bit) EC2 in aws using terraform. Can someone provide me the insight.

I don’t think you’d use Terraform to do something like that. A playbook with Chef/Ansible/Puppet might be better.

you can do it in user_data, but terraform will not have any visibility into the results - it just blindly executes the script. Probably a better solution would be to bake an AMI with Packer first, then use terraform to spin up an instance based on it?

Yes, agree with the recommendations above

@Jim G Thank you. Now I have an AMI ready. From here how can I use that AMI to spin up using terraform? Is that to create a new ec2 based on that AMI?
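
In short, yes: a new EC2 instance is created from the AMI. A sketch, assuming the AMI lives in the same account and follows a made-up naming pattern; the instance type and subnet variable are placeholders too:

```hcl
variable "subnet_id" {
  type        = string
  description = "Subnet to launch the instance in (assumed to exist already)"
}

# Look up the most recent self-owned AMI whose name matches the pattern.
data "aws_ami" "mysql_windows" {
  most_recent = true
  owners      = ["self"]

  filter {
    name   = "name"
    values = ["windows-2016-mysql-*"] # hypothetical AMI name pattern
  }
}

resource "aws_instance" "mysql" {
  ami           = data.aws_ami.mysql_windows.id
  instance_type = "t3.large" # placeholder size
  subnet_id     = var.subnet_id

  tags = {
    Name = "mysql-windows"
  }
}
```

If the AMI ID is already known, it can be passed straight to ami instead of using the data source.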

@DevOpsGuy try not to double post

Ansible is much better for this task.

@jose.amengual These are two different issues and this is terraform group and I posted in that context only.

yes, but now I know you are using an EC2 instance instead of an RDS instance, which is why I mentioned it

I got something helpful here https://thepracticalsysadmin.com/create-windows-server-2019-amis-using-packer/ In case it may help others cheers

There are quite a few blog posts out there detailing this, but none of them seem to be up to date for use with the HCL style syntax, introduced in Packer 1.5, which has a number of advantages over …

Hi, I was wondering what the official process is to add enhancements to Cloudposse git repos? I wanted to enable ebs_optimized to your eks_node_group but seems you require a fork, branch -> PR ? First time wanting to contribute to a public repo so not sure if this is a standard way of doing it.

Is it possible to become a contributor or is this the only way to handle updates from the community?

Terraform module to provision a fully managed AWS EKS Node Group - GitHub - cloudposse/terraform-aws-eks-node-group: Terraform module to provision a fully managed AWS EKS Node Group

hi @Jas Rowinski, fork, branch -> PR is the correct way of doing it

we all do branch -> PR at Cloud Posse (we don’t need to fork since it’s our own repo)

thank you for your contribution

no problem, just put a PR in. Tested on my own cluster and it worked as intended

Next up is scheduling capacity with on-demand and spot types

Greetings! Is there a good way to pass an EC2 user data file that is stored (in the same repo obvs) in another folder via the production.tfvars file? “hardcoding” isn’t a option as the EC2 module is called in another capacity also. How do you manage passing user data files? TIA

you should use a template

https://github.com/cloudposse/terraform-aws-ec2-bastion-server/blob/master/user_data/amazon-linux.sh

Terraform module to define a generic Bastion host with parameterized user_data and support for AWS SSM Session Manager for remote access with IAM authentication. - terraform-aws-ec2-bastion-server…
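
A sketch of the template approach. A .tfvars file can only hold literal values, so it cannot call file() or templatefile() itself. What it can do is carry the path of the template as a string, and the configuration renders it. The variable names, template path, and template variable below are hypothetical:

```hcl
# production.tfvars would contain only a literal, for example:
#   user_data_template = "user_data/production.sh.tpl"

variable "user_data_template" {
  type        = string
  description = "Path to the user data template, relative to the root module"
}

variable "ami_id" {
  type = string
}

resource "aws_instance" "this" {
  ami           = var.ami_id
  instance_type = "t3.micro" # placeholder size

  # The template is read and rendered at plan time. "hostname" is a
  # hypothetical variable the template may reference as ${hostname}.
  user_data = templatefile("${path.root}/${var.user_data_template}", {
    hostname = "example"
  })
}
```

Because the path is just a variable, each caller of the EC2 module can point at a different template without anything being hardcoded in the module.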

2022-01-12

Sad to see this conversation in the sops repo, considering it’s such an essential tool for at least my own Terraform workflow. Wanted to bring it up here to get more eyes on it, and in case anyone knows folks at Mozilla and can bug them.

It's quite apparent to me that neither @ajvb nor me currently have enough time to maintain the project, with PRs sitting unreviewed. I think it's time to look for some new maintainers. I do…

wow, this is almost like if the libcurl maintainers said they don’t think they can maintain it anymore, … sops is integral to many tools

Looks like Mozilla isn’t going to let this die just yet. Ref: https://github.com/mozilla/sops/discussions/927#discussioncomment-2183834

Hi all, I’m the new Security Engineering manager here at Mozilla. I wanted to update the community on our current status and future plans for the SOPS tool.

While the project does appear stagnant at this time, this is a temporary situation. Like many companies we’ve faced our own resource constraints that have led to SOPS not receiving the support many of you would have liked to see us provide, and I ask that you bear with us a bit longer as we pull this back in. I’m directing some engineer resources towards SOPS now and growing my team as well (see below, we’re hiring!), so we expect to work on the SOPS issue backlog and set our eyes to its future soon.

I realize there is interest in the community taking over SOPS. It may go that way eventually, but at the moment SOPS is so deeply integrated throughout our stack that we’re reluctant to take our hands completely off the wheel. In the longer term we’ll be evaluating the future for SOPS as we modernize and evolve Mozilla’s tech stack. At present it serves some important needs however, so for at least the next year you can expect Mozilla to support both the tool and community involvement in its development.

Lastly, as I noted above I am growing my team! If working on tools like SOPS or exploring other areas of security involving cloud, vulnerability management, fraud, crypto, or architecture sounds interesting to you, see our job link below and apply! I have multiple roles open and these are fully remote across most of US, Canada, and Germany.

https://www.mozilla.org/en-US/careers/position/gh/3605345/

Thank you,

rforsythe

2022-01-13

Hi everyone, I’m a newbie to Terraform and have started doing GitHub administration through Terraform. I am creating a repo and setting all the required config. Now I want to upload files from the working directory, or fetch them from a different repo/S3, into the newly created repo. Any pointers to achieve this? Thank you

Correct me if I’m mistaken, but I think you are saying you are currently using terraform to manage the GitHub configuration of your git repositories, and now you wish to use terraform to add files to the git repository.

Could you explain in more detail why you would wish to do this?

What I am trying to achieve is this: when developers need a new repo, we run terraform and enter the repo name, and it creates the repo and sets up the required access, events, webhooks, labels, etc. Say it is a Java project using Gradle: I want to create/upload all the default files using terraform.

so template will be ready for dev they just need to add their code to start CI

Do template repositories serve this purpose? There is the ability to create the repository from a template: https://registry.terraform.io/providers/integrations/github/latest/docs/resources/repository#template-repositories

I don’t think there is a way to manage template updates afterward, so this is only a solution for the start of the lifecycle.
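
A sketch of creating a repository from a template with the GitHub provider. The organization, repository, and template names are made up, and the template repository is assumed to exist already with the default Gradle files in it:

```hcl
terraform {
  required_providers {
    github = {
      source = "integrations/github"
    }
  }
}

variable "repo_name" {
  type        = string
  description = "Name of the repository to create"
}

resource "github_repository" "service" {
  name       = var.repo_name
  visibility = "private"

  # The new repository starts with a copy of the template's files.
  # Owner and repository are hypothetical.
  template {
    owner      = "example-org"
    repository = "java-gradle-template"
  }
}
```

As noted above, this only seeds the repository at creation time; later changes to the template are not propagated.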

Thanks @Jim Park, will have a look.

Hi everyone! I am trying to use the terraform-null-label module and I get an error with the map, Terraform recommends the use of tomap, but I have been doing tests passing the keys and values in various ways and I can’t get it to work, has anyone had the same problem? I leave an example of the error, thanks in advance to all!

│ Error: Error in function call

│

│ on .terraform/modules/subnets/private.tf line 8, in module "private_label":

│ 8: map(var.subnet_type_tag_key, format(var.subnet_type_tag_value_format, "private"))

│ ├────────────────

│ │ var.subnet_type_tag_key is a string, known only after apply

│ │ var.subnet_type_tag_value_format is a string, known only after apply

│

│ Call to function "map" failed: the "map" function was deprecated in Terraform v0.12 and is no longer available; use tomap({ ...

│ }) syntax to write a literal map.

╵

╷

│ Error: Error in function call

│

│ on .terraform/modules/subnets/public.tf line 8, in module "public_label":

│ 8: map(var.subnet_type_tag_key, format(var.subnet_type_tag_value_format, "public"))

│ ├────────────────

│ │ var.subnet_type_tag_key is a string, known only after apply

│ │ var.subnet_type_tag_value_format is a string, known only after apply

│

│ Call to function "map" failed: the "map" function was deprecated in Terraform v0.12 and is no longer available; use tomap({ ...

│ }) syntax to write a literal map.

check that you are using the latest versions of the modules

yes, version 0.25.0 of module cloudposse/label/null

the error above is in some subnets module

I am trying to deploy atlantis in ec2 https://github.com/cloudposse/terraform-aws-ecs-atlantis/tree/0.24.1

Terraform module for deploying Atlantis as an ECS Task - GitHub - cloudposse/terraform-aws-ecs-atlantis at 0.24.1

I use the latest subnet module version 0.39.8

Enhancements Bump providers @nitrocode (#146) what Bump providers why so consumers don’t see errors based on new features used by this module references Closes #145

terraform-aws-ecs-atlantis was not updated for a while and prob uses the map() function
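For reference, a minimal sketch of what the error message is asking for: the removed `map(key, value)` call rewritten as a literal map passed to `tomap`. The variable names come from the error output above; their defaults here are illustrative assumptions, not the module's real values. A key that is an expression has to be wrapped in parentheses.

```hcl
# Assumed defaults for illustration only; the real module defines its own.
variable "subnet_type_tag_key" {
  type    = string
  default = "example.com/subnet/type"
}

variable "subnet_type_tag_value_format" {
  type    = string
  default = "%s"
}

locals {
  # Old form, no longer available:
  #   map(var.subnet_type_tag_key, format(var.subnet_type_tag_value_format, "private"))
  # Literal map with a computed key (note the parentheses around the key):
  private_subnet_tags = tomap({
    (var.subnet_type_tag_key) = format(var.subnet_type_tag_value_format, "private")
  })
}

output "private_subnet_tags" {
  value = local.private_subnet_tags
}
```

Because the failing call lives in a downloaded module under `.terraform/modules`, the change has to land in that module (or in a newer release of it), not in the calling configuration.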

uff yes that module is very very old

and it was created for a forked version of atlantis

Do you have any recommendations for deploying atlantis in HA mode?

@joshmyers is right, there is no HA for atlantis

you can have multiple atlantis and have WAF or something in between to match the repo URL from the body request of the webhook call and send it to the appropriate atlantis server, but there is no concept of load balancing or anything

(just to be clear, the lack of HA is an Atlantis limitation; fault tolerance can be achieved with healthchecks and automatic recovery)

Thank you all for the information!

Did you start a terragrunt run, but then spam Ctrl + C out of cowardice, like me?

Of course you didn’t, because you know you’d end up with a bajillion locks. If you need some guidance on clearing those locks in DynamoDB, reference this gist.

(I foot-gun’d months ago, but neglected to share with y’all. Recommend bookmarking just in case =))

2022-01-14

Heya, we’ve got all our terraform in a ./terraform repo subdirectory.

Does pre-commit support passing args to tflint like:

repos:
  - repo: https://github.com/gruntwork-io/pre-commit
    rev: v0.1.17 # Get the latest from: https://github.com/gruntwork-io/pre-commit/releases
    hooks:
      - id: tflint
        args:
          - "--config ./terraform/.tflint.hcl"
          - "./terraform"

Looking at this, I’m guessing not? https://github.com/gruntwork-io/pre-commit/blob/master/hooks/tflint.sh#L14 Thanks

A collection of pre-commit hooks used by Gruntwork tools - pre-commit/tflint.sh at master · gruntwork-io/pre-commit

not sure about that one but have you looked at this one

https://github.com/antonbabenko/pre-commit-terraform#terraform_tflint

pre-commit git hooks to take care of Terraform configurations - GitHub - antonbabenko/pre-commit-terraform: pre-commit git hooks to take care of Terraform configurations

you can use args to configure it

Thanks! I hadn’t seen that

see the reviewdog version too

Run tflint with reviewdog on pull requests to enforce best practices - GitHub - reviewdog/action-tflint: Run tflint with reviewdog on pull requests to enforce best practices

the difference is that reviewdog comments on the PR inline, whereas the anton one will just fail if a rule is broken

nice feature

2022-01-15

what’s the best CI/CD pipeline for Terraform these days?

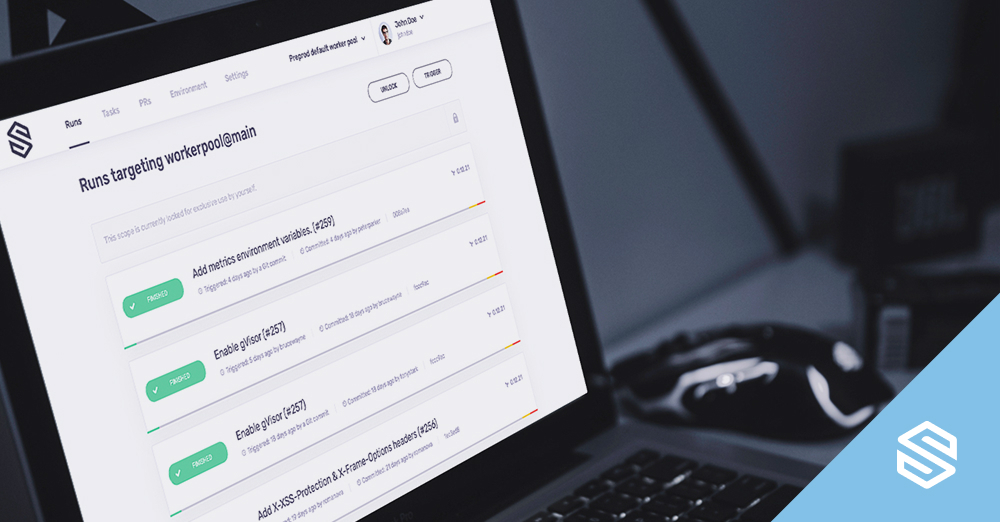

A lot of the standard CI/CD tools these days can execute TF, but you’re better off looking at the TACoS platforms (Terraform Automation and COllaboration Software). These platforms are purpose-built for IaC lifecycle automation. Examples are env0, Terraform Cloud, Spacelift, and Scalr.

Disclaimer, I’m the DevOps Advocate for env0

The Spacelift docs (which I recommend) have a great explanation of why dedicated systems for Terraform are worth it: https://docs.spacelift.io/#do-i-need-another-ci-cd-for-my-infrastructure

Take your infra-as-code to the next level

• env0

• scalr

• spacelift

• TFC (enterprise is pricey)

• DIY pipeline - I prefer this, as I can use GitHub Actions, GitLab, Bitbucket, or (my top choice) CircleCI to implement all the needed stages with fine-grained control.

now with options like OIDC between AWS and GitHub Actions runners, there is no need to share credentials or worry about access
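A rough sketch of the OIDC setup mentioned above, assuming the AWS provider: an IAM OIDC identity provider for GitHub Actions plus a role that only workflows from one repository can assume. The repository name, role name, and the thumbprint variable are placeholders, not values from this thread.

```hcl
# Placeholder: supply the certificate thumbprint for GitHub's OIDC endpoint.
variable "github_oidc_thumbprint" {
  type = string
}

resource "aws_iam_openid_connect_provider" "github" {
  url             = "https://token.actions.githubusercontent.com"
  client_id_list  = ["sts.amazonaws.com"]
  thumbprint_list = [var.github_oidc_thumbprint]
}

data "aws_iam_policy_document" "github_assume" {
  statement {
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [aws_iam_openid_connect_provider.github.arn]
    }

    condition {
      test     = "StringEquals"
      variable = "token.actions.githubusercontent.com:aud"
      values   = ["sts.amazonaws.com"]
    }

    # Hypothetical repository; restricts which workflows may assume the role.
    condition {
      test     = "StringLike"
      variable = "token.actions.githubusercontent.com:sub"
      values   = ["repo:example-org/example-repo:*"]
    }
  }
}

resource "aws_iam_role" "github_actions" {
  name               = "github-actions-terraform" # hypothetical name
  assume_role_policy = data.aws_iam_policy_document.github_assume.json
}
```

The workflow then requests a short-lived token and assumes this role, so no long-lived access keys need to be stored as CI secrets. Permissions for the role itself still have to be attached separately.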

I have used most of these, and found that Terraform Cloud is best for newcomers and small teams (fewer than 5 people)

Thanks everyone.

@Mohammed Yahya among the DIY pipelines, which one is the best?

there is no best one; I just found CircleCI feature-rich and speedy

But it depends on the tools your organisation has selected

This looks slick https://github.com/suzuki-shunsuke/tfcmt (via weekly.tf)

Fork of mercari/tfnotify. tfcmt enhances tfnotify in many ways, including Terraform >= v0.15 support and advanced formatting options - GitHub - suzuki-shunsuke/tfcmt: Fork of mercari/tfnotify. t…

mostly for provisioning aws

Maybe it can help: https://youtu.be/Jy-fBSS8-sA?t=2646

Thanks. I have heard of Spacelift but wasn’t sure if it was mature enough. I’ll have a look

2022-01-16

2022-01-17

Was wondering how people have setup their EKS clusters when it comes to Node Groups (EKS managed or Self Managed). I’m running EKS managed, but trying to find a way to achieve mixed_instances_policy when it comes to SPOT & ON_DEMAND instance types.

Using the node group Cloudposse module currently. But after reviewing others, it seems that mixed_instances_policy can only be done via Self Managed. Is that correct or am I missing something?

Looking at this module, they offer 3 different strategies when it comes to node groups and mixed instances. But like I said before, it seems to be only Self Managed. Anyone else manage to get this to work with EKS managed node groups?

Seems to be an open ticket for AWS for this: https://github.com/aws/containers-roadmap/issues/1297

Community Note Please vote on this issue by adding a reaction to the original issue to help the community and maintainers prioritize this request Please do not leave "+1" or "me to…

I’ve switched to using a small Managed Node Group to bootstrap the cluster and then using Karpenter to provision instances for the rest of the workload. Karpenter can have multiple profiles for the nodes and can easily mix spot/on-demand types

That’s cool - you’re already using karpenter?

…in production too?

No just dev, we’re transitioning to k8s

2022-01-18

Hey friends. I’m running into an odd issue. I can run a terraform plan successfully from my user, but I cannot run it from the user in our pipeline, which has the same permissions/policies. That pipeline user keeps hitting this error, and I have no idea why. This is deployed with cloudposse/eks-cluster/[email protected]

This cluster was originally deployed with this pipeline user as well.

module.eks_cluster.aws_iam_openid_connect_provider.default[0]: Refreshing state... [id=arn:aws:iam::amazonaws.com/id/<REDACTED>]

module.eks_cluster.kubernetes_config_map.aws_auth[0]: Refreshing state... [id=kube-system/aws-auth]

╷

│ Error: Get "https://eks.amazonaws.com/api/v1/namespaces/kube-system/configmaps/aws-auth": getting credentials: exec: executable aws failed with exit code 255

│

│ with module.eks_cluster.kubernetes_config_map.aws_auth[0],

│ on .terraform/modules/eks_cluster/auth.tf line 132, in resource "kubernetes_config_map" "aws_auth":

│ 132: resource "kubernetes_config_map" "aws_auth" {

is this the same IAM role/user that created the cluster? If not, is that role/user in the auth config map? (by default, only the role/user that created the cluster has access to it until you add additional roles/users to the auth config map)

It should’ve been. However, I’ve added the user to the mapUsers and the associated roles to the mapRoles. Maybe I’m missing a role.

I’ll dig a bit more here.

Do you happen to know what the appropriate groups should be?

would - system:masters be viable?

yea, system:masters is for admins

The user here can authenticate to the cluster and can access it via the roles, but it can’t do anything via Terraform due to the error above. Do you see anything I’m inherently missing?

mapRoles: |
  - groups:
    - system:masters
    rolearn: arn:aws:iam::123456789012:role/DevRole
    username: DevRole
  - groups:
    - system:masters
    username: DepRole
    rolearn: arn:aws:iam::123456789012:role/DepRole
  - groups:
    - system:masters
    rolearn: arn:aws:iam::123456789012:role/OrgAccountAcc
    username: OrgAccountAcc
  - groups:
    - system:bootstrappers
    - system:nodes
    rolearn: arn:aws:iam::123456789012:role/dev-cluster-workers
    username: system:node:{{EC2PrivateDNSName}}
mapUsers: |
  - userarn: arn:aws:iam::098765432123:user/deploy.user
    username: deploy.user
    groups:
    - system:masters

I found the issue. It was this: kube_exec_auth_role_arn = var.map_additional_iam_roles[0].rolearn. Let’s not taco bout it.

Thanks for your sanity checks. I appreciate you.

Hello everyone, I am trying to set up cross-region VPC peering using Terragrunt and need some ideas/suggestions on how to use a provider alias in the root terragrunt.hcl
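One possible approach, sketched under assumptions: Terragrunt's `generate` block can write a `providers.tf` into each module directory, containing both a default and an aliased AWS provider. The two regions, the `peer` alias, and the file name are illustrative choices, not something given in the question.

```hcl
# Root terragrunt.hcl (sketch). Regions and the "peer" alias are placeholders.
generate "providers" {
  path      = "providers.tf"
  if_exists = "overwrite_terragrunt"
  contents  = <<EOF
provider "aws" {
  region = "us-east-1"
}

provider "aws" {
  alias  = "peer"
  region = "us-west-2"
}
EOF
}
```

Resources on the accepter side of the peering connection would then set `provider = aws.peer`, while the requester side uses the default provider.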

I am trying to get my copy of reference-architectures up and running again. I know it is outdated, but we already have one architecture built with it, we need another, and the team wants them to be consistent. I believe I have everything resolved except that the template_file data source is not available for the M1 (at least as far as I can find), so I need to update:

data "template_file" "data" {
  count = "${length(keys(var.users))}"

  # this path is relative to repos/$image_name
  template = "${file("${var.templates_dir}/conf/users/user.tf")}"

  vars = {
    resource_name    = "${replace(element(keys(var.users), count.index), local.unsafe_characters, "_")}"
    username         = "${element(keys(var.users), count.index)}"
    keybase_username = "${element(values(var.users), count.index)}"
  }
}

resource "local_file" "data" {
  count    = "${length(keys(var.users))}"
  content  = "${element(data.template_file.data.*.rendered, count.index)}"
  filename = "${var.output_dir}/overrides/${replace(element(keys(var.users), count.index), local.unsafe_characters, "_")}.tf"
}

I think I have to use templatefile() but can’t figure out how to re-write it.

Thanks in advance
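A possible rewrite with the built-in `templatefile()` function, as a sketch only: it assumes Terraform 0.12 or later, that `var.users` is a map of username to Keybase username, and that `local.unsafe_characters` is defined as in the original code. The `user_names` local is a name introduced here for illustration. `count` is kept so the `local_file.data[N]` addresses stay the same.

```hcl
locals {
  # Hypothetical helper: map keys in sorted order, matching keys(var.users).
  user_names = keys(var.users)
}

resource "local_file" "data" {
  count = length(local.user_names)

  # Render the template directly; no template_file data source is needed.
  # This path is relative to repos/$image_name.
  content = templatefile("${var.templates_dir}/conf/users/user.tf", {
    resource_name    = replace(local.user_names[count.index], local.unsafe_characters, "_")
    username         = local.user_names[count.index]
    keybase_username = var.users[local.user_names[count.index]]
  })

  filename = "${var.output_dir}/overrides/${replace(local.user_names[count.index], local.unsafe_characters, "_")}.tf"
}
```

This removes the dependency on the separate template provider, which is the piece that lacks an M1 build. The template file itself should not need changes, since `templatefile()` uses the same `${...}` interpolation syntax.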

If you’re running inside of geodesic amd64 it should “just work”

(with the emulation under the m1)

I didn’t realize I could run this repo inside geodesic… I guess I will try that

I guess that makes sense though. Thanks!

yep! use geodesic as your base toolbox image for all devops. that way it works uniformly on all workstations

No luck getting geodesic built… bumped into a build issue.

Did a fresh clone and checked out the latest version, but when running make all I end up with a build error

=> ERROR [stage-2 7/30] RUN apk add --update $(grep -h -v '^#' /etc/apk/packages.txt /etc/apk/packages-alpine.txt) && mkdir -p /etc/bash_completion. 6.1s

------

> [stage-2 7/30] RUN apk add --update $(grep -h -v '^#' /etc/apk/packages.txt /etc/apk/packages-alpine.txt) && mkdir -p /etc/bash_completion.d/ /etc/profile.d/ /conf && touch /conf/.gitconfig:

#13 0.223 fetch <https://dl-cdn.alpinelinux.org/alpine/v3.15/main/aarch64/APKINDEX.tar.gz>

#13 1.483 fetch <https://dl-cdn.alpinelinux.org/alpine/v3.15/community/aarch64/APKINDEX.tar.gz>

#13 3.843 fetch <https://apk.cloudposse.com/3.13/vendor/aarch64/APKINDEX.tar.gz>

#13 4.584 fetch <https://alpine.global.ssl.fastly.net/alpine/edge/testing/aarch64/APKINDEX.tar.gz>

#13 5.378 fetch <https://alpine.global.ssl.fastly.net/alpine/edge/community/aarch64/APKINDEX.tar.gz>

#13 6.044 ERROR: unable to select packages: