#terraform (2022-06)

Discussions related to Terraform or Terraform Modules

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2022-06-01

Hi everyone, I want to know how to block the use of an OS type for new aws/gcp instance resource creations and allow only plan/apply for existing stacks, is there a way to do this with Terraform? I have tested the validation variables but it doesn’t in all cases? T hanks

Thanks, there is some equivalent to SCPs in GCP ?

not sure to be honest. if you find the equivalent, please let me know

Hi guys: I opened this PR to the cloudposse aws-transfer-sftp terraform module to properly output the ‘id’ of the provisioned transfer server. it looks like the output.tf still had some template / example code? The ‘id’ output of the current version is always empty for me. This PR makes the ‘id’ output output the id of the provisioned transfer server. I referred to the ec2 instance module for style / naming / language conventions. Any chance I can get a review?

https://github.com/cloudposse/terraform-aws-transfer-sftp/pull/21

the ‘id’ output was an empty string, this causes it to be the disambiguated id of the provisioned AWS Transfer Server

the ‘id’ output was an empty string, this causes it to be the disambiguated id of the provisioned AWS Transfer Server

v1.2.2 1.2.2 (June 01, 2022) ENHANCEMENTS: Invalid -var arguments with spaces between the name and value now have an improved error message (#30985) BUG FIXES: Terraform now hides invalid input values for sensitive root module variables when generating error diagnostics (<a href=”https://github.com/hashicorp/terraform/issues/30552“…

When specifying variable values on the command line, name-value pairs must be joined with an equals sign, without surrounding spaces. Previously Terraform would interpret -var “foo = bar” as assign…

Terraform enables you to safely and predictably create, change, and improve infrastructure. It is an open source tool that codifies APIs into declarative configuration files that can be shared amongst team members, treated as code, edited, reviewed, and versioned. - Fixes #29156: Failing sensitive variables values are not logged by gbataille · Pull Request #30552 · hashicorp/terraform

some possible progress on the “optional” attrs experiment, setting a default value as the second argument… https://github.com/hashicorp/terraform/pull/31154

This pull request responds to community feedback on the long-running optional object attributes experiment, adding a second argument to the optional() call which describes a default value for the attribute. It’s probably easiest to review one commit at a time.

From a user perspective, the change here is in how default values are specified. The previous design used a defaults() function, intended to be called in a locals context with the type-converted variable value. This design instead inlines the default value for a given attribute next to its type constraint, which is hoped to be easier to understand and enables several use cases which were not possible with the previous design.

For example:

variable "with_optional_attribute" {

type = object({

a = string # a required attribute

b = optional(string) # an optional attribute

c = optional(number, 127) # an optional attribute with default value

})

}

Assigning { a = "foo" } to this variable will result in the value { a = "foo", b = null, c = 127 }.

While the configuration language colocates the attribute type and default value, this is not so in the underlying data structure, as this is unsupported by cty. Instead we maintain parallel data structures for the type constraint and any defaults present, which is the bulk of the new logic in this PR and is in the first commit.

Note that the typeexpr package in Terraform is a fork of the HCL package of the same name, which was done as part of the first iteration of this experiment. Should this approach be approved, we should upstream the changes to HCL to make tool integration easier. This is why we have an awkward addition to the public typeexpr API instead of modifying the TypeConstraint function.

If we move ahead with this design, I’d like to release it in an early 1.3 alpha to gather feedback from the community already using optional attributes. Should this approach seem viable, my goal would then be to conclude the experiment for the 1.3.0 release (i.e. before the first beta).

Wow!

This pull request responds to community feedback on the long-running optional object attributes experiment, adding a second argument to the optional() call which describes a default value for the attribute. It’s probably easiest to review one commit at a time.

From a user perspective, the change here is in how default values are specified. The previous design used a defaults() function, intended to be called in a locals context with the type-converted variable value. This design instead inlines the default value for a given attribute next to its type constraint, which is hoped to be easier to understand and enables several use cases which were not possible with the previous design.

For example:

variable "with_optional_attribute" {

type = object({

a = string # a required attribute

b = optional(string) # an optional attribute

c = optional(number, 127) # an optional attribute with default value

})

}

Assigning { a = "foo" } to this variable will result in the value { a = "foo", b = null, c = 127 }.

While the configuration language colocates the attribute type and default value, this is not so in the underlying data structure, as this is unsupported by cty. Instead we maintain parallel data structures for the type constraint and any defaults present, which is the bulk of the new logic in this PR and is in the first commit.

Note that the typeexpr package in Terraform is a fork of the HCL package of the same name, which was done as part of the first iteration of this experiment. Should this approach be approved, we should upstream the changes to HCL to make tool integration easier. This is why we have an awkward addition to the public typeexpr API instead of modifying the TypeConstraint function.

If we move ahead with this design, I’d like to release it in an early 1.3 alpha to gather feedback from the community already using optional attributes. Should this approach seem viable, my goal would then be to conclude the experiment for the 1.3.0 release (i.e. before the first beta).

I wonder if this PR would kick it out of an experiment and make the feature GA

Oh yep, the PR removed the experimental function for defaults/optional so it could be GA once that’s merged and released

yeah, the discussion says it could make it into the 1.3 release

v1.2.2 1.2.2 (June 01, 2022) ENHANCEMENTS: Invalid -var arguments with spaces between the name and value now have an improved error message (#30985) BUG FIXES: Terraform now hides invalid input values for sensitive root module variables when generating error diagnostics (<a href=”https://github.com/hashicorp/terraform/issues/30552“…

1.2.2 (June 01, 2022) ENHANCEMENTS: Invalid -var arguments with spaces between the name and value now have an improved error message (#30985) BUG FIXES: Terraform now hides invalid input values …

2022-06-02

For those using Spacelift, how crazy do you all go with the login/access/approval/plan policies? Looking to glean some ideas to approach implementing this.

You should limit login and make sure to use Okta or something similar to protect effectively. As for plans, it is good to auto plan and require manual configuration to make sure plans look good and will not negatively impact a stack.

I am a zero trust type person. I believe it is best to restrict access to things people really need access to.

I’m see, good tips. Do you allow local-previews for your devs?

We try to keep things simple. Generally, anyone with read access to our GH org will have read access to Spacelift. A smaller group of devops/SRE staff will have access to make changes, most via PRs to a Terraform configuration using the spacelift provider. And these people have admin access to Spacelift to make runtime break-glass type changes

Thanks for sharing, will come in handy as we explore.

Good question for spacelift

(related, have you seen https://github.com/spacelift-io/spacelift-policies-example-library? this is a relatively new resource)

A library of example Spacelift Policies.

Oh, no I haven’t! Thanks for sharing, will come in handy. Speaking of Spacelift, I have a question for office hours!

ok, tee it up

I did, but just got dragged into a meeting :( I’ll try to get out as soon as I can but please do have the discussion without me

2022-06-03

is anyone else getting throttling errors when trying to manage AWS SSO resources (aws_ssoadmin_account_assignment in particular) with terraform? https://github.com/hashicorp/terraform-provider-aws/issues/24858

Community Note

• Please vote on this issue by adding a :+1: reaction to the original issue to help the community and maintainers prioritize this request • Please do not leave “+1” or other comments that do not add relevant new information or questions, they generate extra noise for issue followers and do not help prioritize the request • If you are interested in working on this issue or have submitted a pull request, please leave a comment

Terraform CLI and Terraform AWS Provider Version

Terraform v1.1.5

on linux_amd64

+ provider registry.terraform.io/hashicorp/aws v4.4.0

Affected Resource(s)

aws_ssoadmin_account_assignment

Terraform Configuration Files

Please include all Terraform configurations required to reproduce the bug. Bug reports without a functional reproduction may be closed without investigation.

data "aws_identitystore_group" "awssso_group" {

identity_store_id = var.identity_store_id

filter {

attribute_path = "DisplayName"

attribute_value = var.group_name

}

}

# associate the group to the permission set & account(s)

resource "aws_ssoadmin_account_assignment" "group_permissionset_account_assignments" {

for_each = toset(var.account_id_list)

instance_arn = var.awssso_instance_arn

permission_set_arn = var.permission_set_arn

principal_id = data.aws_identitystore_group.awssso_group.group_id

principal_type = "GROUP"

target_id = sensitive(each.value)

target_type = "AWS_ACCOUNT"

}

Debug Output Panic Output Expected Behavior

Plan: 169 to add, 0 to change, 0 to destroy.

169 AWS resources should have been created with any AWS API Throttling being handled gracefully.

Actual Behavior

approximately 140 AWS resources were created before receiving the following error on subsequent calls:

Error: error reading SSO Account Assignment for Principal (...): not found

with module.group_assignment.aws_ssoadmin_account_assignment.group_permissionset_account_assignments["123456789"],

on ../../../modules/group_assignment/main.tf line 15, in resource "aws_ssoadmin_account_assignment" "group_permissionset_account_assignments":

15: resource "aws_ssoadmin_account_assignment" "group_permissionset_account_assignments" {

I re-ran terraform apply on the exact same code and all but 1 AWS resource was successfully created. Running terraform apply a third time with no code/config changes successfully created the last of the 169 resources.

Steps to Reproduce

terraform apply

Important Factoids

CloudTrail has the following error:

...

"eventTime": "2022-05-18T13:57:18Z",

"eventSource": "sso.amazonaws.com",

"eventName": "ListAccountAssignments",

"awsRegion": "us-east-1",

"sourceIPAddress": "123.456.789.123",

"userAgent": "APN/1.0 HashiCorp/1.0 Terraform/1.1.5 (+<https://www.terraform.io>) terraform-provider-aws/dev (+<https://registry.terraform.io/providers/hashicorp/aws>) aws-sdk-go/1.43.25 (go1.17.6; linux; amd64)",

"errorCode": "ThrottlingException",

"errorMessage": "Rate exceeded",

"requestParameters": null,

"responseElements": null,

"requestID": "GUID",

"eventID": "GUID",

"readOnly": true,

"eventType": "AwsApiCall",

"managementEvent": true,

"recipientAccountId": "123456789",

"eventCategory": "Management",

"tlsDetails": {

"tlsVersion": "TLSv1.2",

"clientProvidedHostHeader": "sso.us-east-1.amazonaws.com"

}

}

References

• #0000

surprisingly, we manage several thousand assignments in a single configuration and don’t see this. Our SSO is homed in us-west-2.

You could try setting -parallelism=1 as a workaround

Community Note

• Please vote on this issue by adding a :+1: reaction to the original issue to help the community and maintainers prioritize this request • Please do not leave “+1” or other comments that do not add relevant new information or questions, they generate extra noise for issue followers and do not help prioritize the request • If you are interested in working on this issue or have submitted a pull request, please leave a comment

Terraform CLI and Terraform AWS Provider Version

Terraform v1.1.5

on linux_amd64

+ provider registry.terraform.io/hashicorp/aws v4.4.0

Affected Resource(s)

aws_ssoadmin_account_assignment

Terraform Configuration Files

Please include all Terraform configurations required to reproduce the bug. Bug reports without a functional reproduction may be closed without investigation.

data "aws_identitystore_group" "awssso_group" {

identity_store_id = var.identity_store_id

filter {

attribute_path = "DisplayName"

attribute_value = var.group_name

}

}

# associate the group to the permission set & account(s)

resource "aws_ssoadmin_account_assignment" "group_permissionset_account_assignments" {

for_each = toset(var.account_id_list)

instance_arn = var.awssso_instance_arn

permission_set_arn = var.permission_set_arn

principal_id = data.aws_identitystore_group.awssso_group.group_id

principal_type = "GROUP"

target_id = sensitive(each.value)

target_type = "AWS_ACCOUNT"

}

Debug Output Panic Output Expected Behavior

Plan: 169 to add, 0 to change, 0 to destroy.

169 AWS resources should have been created with any AWS API Throttling being handled gracefully.

Actual Behavior

approximately 140 AWS resources were created before receiving the following error on subsequent calls:

Error: error reading SSO Account Assignment for Principal (...): not found

with module.group_assignment.aws_ssoadmin_account_assignment.group_permissionset_account_assignments["123456789"],

on ../../../modules/group_assignment/main.tf line 15, in resource "aws_ssoadmin_account_assignment" "group_permissionset_account_assignments":

15: resource "aws_ssoadmin_account_assignment" "group_permissionset_account_assignments" {

I re-ran terraform apply on the exact same code and all but 1 AWS resource was successfully created. Running terraform apply a third time with no code/config changes successfully created the last of the 169 resources.

Steps to Reproduce

terraform apply

Important Factoids

CloudTrail has the following error:

...

"eventTime": "2022-05-18T13:57:18Z",

"eventSource": "sso.amazonaws.com",

"eventName": "ListAccountAssignments",

"awsRegion": "us-east-1",

"sourceIPAddress": "123.456.789.123",

"userAgent": "APN/1.0 HashiCorp/1.0 Terraform/1.1.5 (+<https://www.terraform.io>) terraform-provider-aws/dev (+<https://registry.terraform.io/providers/hashicorp/aws>) aws-sdk-go/1.43.25 (go1.17.6; linux; amd64)",

"errorCode": "ThrottlingException",

"errorMessage": "Rate exceeded",

"requestParameters": null,

"responseElements": null,

"requestID": "GUID",

"eventID": "GUID",

"readOnly": true,

"eventType": "AwsApiCall",

"managementEvent": true,

"recipientAccountId": "123456789",

"eventCategory": "Management",

"tlsDetails": {

"tlsVersion": "TLSv1.2",

"clientProvidedHostHeader": "sso.us-east-1.amazonaws.com"

}

}

References

• #0000

2022-06-05

2022-06-06

2022-06-08

Hi, if i have a object with multiple values in it in terraform is it possible to call a specific set of them?

ie i have a test.values and it returns:

1,2,3,4,5

if i do

output "test" {

values = test.vaules[0]

}

it returns 1

is it possible to return: 1,2,3 or 3,4,5?

ive tried `test.values[0-2] and it errors saying

The given key does not identify an element in this collection value: a negative number is not a valid index for a sequence.

thanks for any help

it’s not clear if you are talking about a list, map, object, or set here

for lists, you can use range

https://www.terraform.io/language/functions/range

For sets, maps, and objects, you can convert to list and use range as above.

The range function generates sequences of numbers.

if you are working with a list of values, I think you want the slice() function:

https://www.terraform.io/language/functions/slice

variable "test" {

type = list(number)

default = [1, 2, 3, 4, 5]

}

output "first-three" {

value = slice(var.test, 0, 3)

}

output "last-three" {

value = slice(var.test, 2, 5)

}

gives:

first-three = tolist([

1,

2,

3,

])

last-three = tolist([

3,

4,

5,

])

The slice function extracts some consecutive elements from within a list.

btw, the a negative number is not a valid index error is coming from TF interpreting the [0-2] as a artithmetic operation… its subtracting 2 from 0 and then trying to use that as the index for the list.

This seems like it would be very handy… https://twitter.com/andymac4182/status/1534438515760824320?t=TBJuF1EzNAoAD08VPiFDYg&s=19

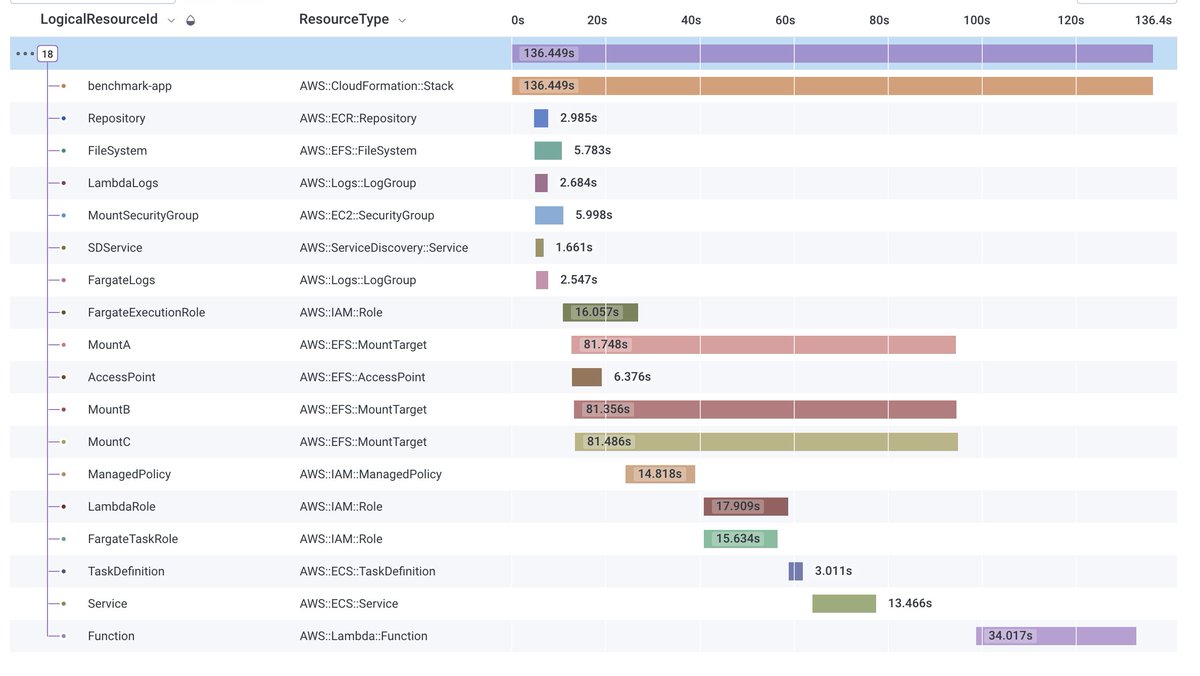

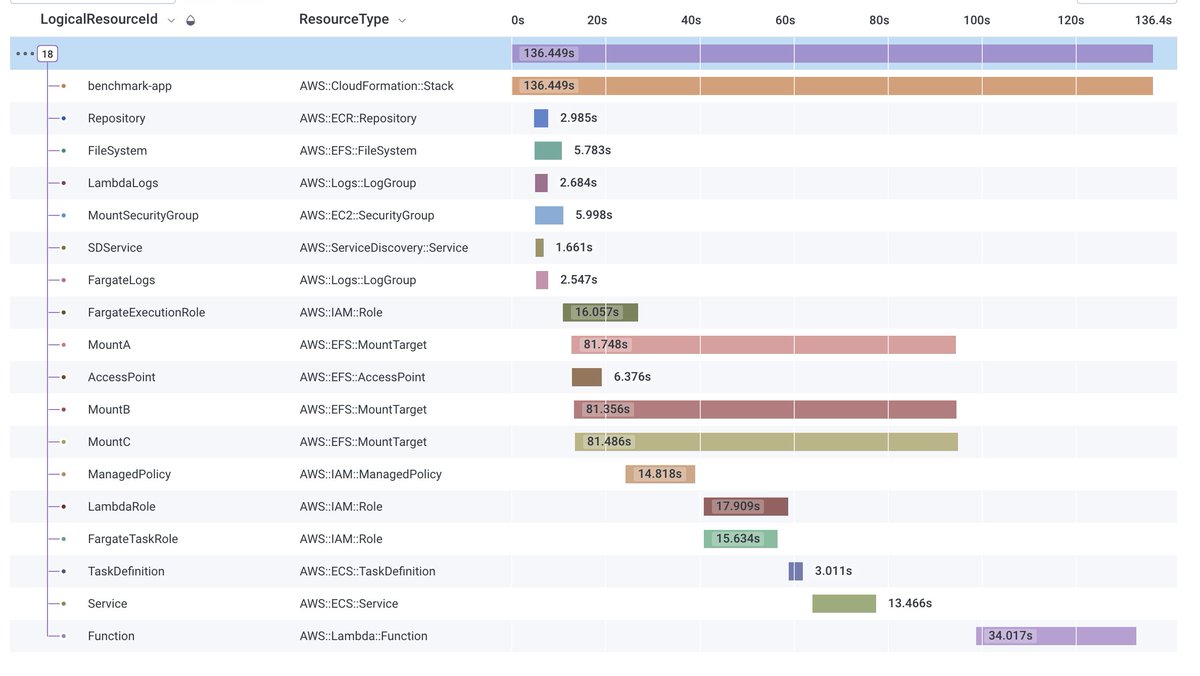

This would be amazing to see in @HashiCorp Terraform. It would make visualising what is going on really great for aggregating lots of deployments to see what is causing issues. https://twitter.com/__steele/status/1528204759320383488

I’d love to see Terraform and the AWS CDK emit resource creation / update durations to an @opentelemetry collector, so we can get useful stats like this.

It makes it easy to see at a glance which resources are causing the slow stack creation. https://pbs.twimg.com/media/FTVFr9jakAAF_i-.jpg

That would be rad!

This would be amazing to see in @HashiCorp Terraform. It would make visualising what is going on really great for aggregating lots of deployments to see what is causing issues. https://twitter.com/__steele/status/1528204759320383488

I’d love to see Terraform and the AWS CDK emit resource creation / update durations to an @opentelemetry collector, so we can get useful stats like this.

It makes it easy to see at a glance which resources are causing the slow stack creation. https://pbs.twimg.com/media/FTVFr9jakAAF_i-.jpg

Promote it on your linkedin!

Anyone here can spot the issue with my openapi doc for apigateway?

openapi_config = {

openapi = "3.0.1"

info = {

title = "example"

version = "1.0"

}

paths = {

"/"= {

get = {

responses = {

"200" = {

description= "200 response"

}

}

x-amazon-apigateway-integration= {

type = "mock"

responses= {

default= {

statusCode= "200"

}

}

}

}

}

}

}

I’m jsonencoding this after

{

"field": [ 1, 2, 3 ]

}

I need the commas?

example:

{

"title": "Sample Pet Store App",

"description": "This is a sample server for a pet store.",

"termsOfService": "<http://example.com/terms/>",

"contact": {

"name": "API Support",

"url": "<http://www.example.com/support>",

"email": "[email protected]"

},

"license": {

"name": "Apache 2.0",

"url": "<https://www.apache.org/licenses/LICENSE-2.0.html>"

},

"version": "1.0.1"

}

speech marks too

this is HCL format

I was using this before :

openapi_config = {

openapi = "3.0.1"

info = {

title = "example"

version = "1.0"

}

paths = {

"/path1" = {

get = {

x-amazon-apigateway-integration = {

httpMethod = "GET"

payloadFormatVersion = "1.0"

type = "HTTP_PROXY"

uri = "<https://ip-ranges.amazonaws.com/ip-ranges.json>"

}

}

}

}

}

that worked fine

both your first example and second example pass the sniff test https://www.hcl2json.com/

A web-based tool to convert between Hashicorp Configuration Language (HCL), JSON, and YAML

and it does not work

@matt

~Looks like in your first example the ~x-amazon-apigateway-integration is outside the GET method block

Nevermind…my eyes were playing tricks on me…haha

Are you using the Cloud Posse API Gateway module? You shouldn’t be JSON encoding it before passing it in…

See the example here

provider "aws" {

region = var.region

}

module "api_gateway" {

source = "../../"

openapi_config = {

openapi = "3.0.1"

info = {

title = "example"

version = "1.0"

}

paths = {

"/path1" = {

get = {

x-amazon-apigateway-integration = {

httpMethod = "GET"

payloadFormatVersion = "1.0"

type = "HTTP_PROXY"

uri = "<https://ip-ranges.amazonaws.com/ip-ranges.json>"

}

}

}

}

}

logging_level = var.logging_level

context = module.this.context

}

I’m using the cloud Posse module

but I’m setting an example prive api

v1.3.0-alpha20220608 1.3.0 (Unreleased) UPGRADE NOTES:

Module variable type constraints now support an optional() modifier for object attribute types. Optional attributes may be omitted from the variable value, and will be replaced by a default value (or null if no default is specified). For example: variable “with_optional_attribute” { type = object({ a = string # a required attribute b = optional(string) # an optional attribute c = optional(number, 127) # an optional attribute…

For those interested, we’re requesting feedback and bug reports in this post! Appreciate any and all alpha testing

Hi all , I’m the Product Manager for Terraform Core, and we’re excited to share our v1.3 alpha , which includes the ability to mark object type attributes as optional, as well as set default values (draft documentation here). With the delivery of this much requested language feature, we will conclude the existing experiment with an improved design. Below you can find some background information about this language feature, or you can read on to see how to participate in the alpha and pro…

2022-06-09

2022-06-10

what

• adds the ability to give push-only access to the repository

why

• full access was more than we wanted in our situation (CI pushing images to the repo) so we added a principals_push_access to give push-only access.

• Describe why these changes were made (e.g. why do these commits fix the problem?)

• Use bullet points to be concise and to the point.

references

• policy is based on this AWS doc

@RB

what

• adds the ability to give push-only access to the repository

why

• full access was more than we wanted in our situation (CI pushing images to the repo) so we added a principals_push_access to give push-only access.

• Describe why these changes were made (e.g. why do these commits fix the problem?)

• Use bullet points to be concise and to the point.

references

• policy is based on this AWS doc

2022-06-12

Hi all, I’m new in the platform, as well as using Cloudposse, I would like to know if you have a module for Fargate to deploy Java springboot

2022-06-13

In the aws_rds_cluster resource definition/docs it says …

To manage cluster instances that inherit configuration from the cluster (when not running the cluster in serverless engine mode),

see the aws_rds_cluster_instance resource.

I am confused about the when not running the cluster in serverless engine mode

Does anybody understands what this means?

Does this mean aws_rds_cluster_instance is not supported in engine mode is serverless?

Does this mean it automatically scales replicas in engine mode is serverless?

Work with Aurora Serverless v2, an on-demand autoscaling configuration for Amazon Aurora.

Scroll down to RDS Serverless v2 Cluster you might see a little more context. You can have it scale with replicas or more compute units.

Serverless uses are there so you can have a better understanding of why you may or may not use it

AWS Docs require multiple rounds of reading and parsing (for me at least) as they condense ALOT of info in a small amount of text.

I read all that before posting the question Thanks anyways

Does this mean it automatically scales replicas in engine mode is serverless? <–yes if you setup scaling metrics

I think? that serverless automatically scales rather than you creating a scaling policy.

haha thanks @Josh

Well it can scale on two parts.

yes if you setup scaling metrics

can you elaborate a bit on “setup scaling metrics” part?

I think? that serverless automatically scales rather than you creating a scaling policy.

It definitely scales capacity units automatically

You can add a scaling policy to the cluster to scale replicas based on CPU or connection percentage.

scaling policy? Any link to docs or terraform argument?

Let me look.

Are you using the cloudposse module?

not using any module

You may have to use something like this https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/appautoscaling_target

ok. that would indicate aurora serverless v2 is not scaling replicas automatically

Not really; without setting up a policy, then it is automatic, pretty much. If you were even setting the ACU to scale from .5 - 2.0 and capped, you would still need to manually up the scaling limit on that. If you want it automatic and do not have money worries, max it out

2022-06-15

Hello, I am running into the same issue here: https://sweetops.slack.com/archives/CB6GHNLG0/p1636820679151800

Hi, I’m using https://github.com/cloudposse/terraform-aws-ecs-web-app/tree/0.65.2 and I face a strange problem. I’m doing it the way that is presented in “without_authentication”

alb_security_group = module.alb.security_group_id

alb_target_group_alarms_enabled = true

alb_target_group_alarms_3xx_threshold = 25

alb_target_group_alarms_4xx_threshold = 25

alb_target_group_alarms_5xx_threshold = 25

alb_target_group_alarms_response_time_threshold = 0.5

alb_target_group_alarms_period = 300

alb_target_group_alarms_evaluation_periods = 1

alb_arn_suffix = module.alb.alb_arn_suffix

alb_ingress_healthcheck_path = "/"

# Without authentication, both HTTP and HTTPS endpoints are supported

alb_ingress_unauthenticated_listener_arns = module.alb.listener_arns

alb_ingress_unauthenticated_listener_arns_count = 2

# All paths are unauthenticated

alb_ingress_unauthenticated_paths = ["/*"]

alb_ingress_listener_unauthenticated_priority = 100

error I got

Error: Invalid count argument

│

│ on .terraform/modules/gateway.alb_ingress/main.tf line 50, in resource "aws_lb_listener_rule" "unauthenticated_paths":

│ 50: count = module.this.enabled && length(var.unauthenticated_paths) > 0 && length(var.unauthenticated_hosts) == 0 ? length(var.unauthenticated_listener_arns) : 0

│

│ The "count" value depends on resource attributes that cannot be determined until apply, so Terraform

│ cannot predict how many instances will be created. To work around this, use the -target argument to first

│ apply only the resources that the count depends on.

By chance you may know what I’m doing wrong here?

I am not sure how to target the specific module. use the -target flag. I pass the flag but TF builds everything.

Hi, I’m using https://github.com/cloudposse/terraform-aws-ecs-web-app/tree/0.65.2 and I face a strange problem. I’m doing it the way that is presented in “without_authentication”

alb_security_group = module.alb.security_group_id

alb_target_group_alarms_enabled = true

alb_target_group_alarms_3xx_threshold = 25

alb_target_group_alarms_4xx_threshold = 25

alb_target_group_alarms_5xx_threshold = 25

alb_target_group_alarms_response_time_threshold = 0.5

alb_target_group_alarms_period = 300

alb_target_group_alarms_evaluation_periods = 1

alb_arn_suffix = module.alb.alb_arn_suffix

alb_ingress_healthcheck_path = "/"

# Without authentication, both HTTP and HTTPS endpoints are supported

alb_ingress_unauthenticated_listener_arns = module.alb.listener_arns

alb_ingress_unauthenticated_listener_arns_count = 2

# All paths are unauthenticated

alb_ingress_unauthenticated_paths = ["/*"]

alb_ingress_listener_unauthenticated_priority = 100

error I got

Error: Invalid count argument

│

│ on .terraform/modules/gateway.alb_ingress/main.tf line 50, in resource "aws_lb_listener_rule" "unauthenticated_paths":

│ 50: count = module.this.enabled && length(var.unauthenticated_paths) > 0 && length(var.unauthenticated_hosts) == 0 ? length(var.unauthenticated_listener_arns) : 0

│

│ The "count" value depends on resource attributes that cannot be determined until apply, so Terraform

│ cannot predict how many instances will be created. To work around this, use the -target argument to first

│ apply only the resources that the count depends on.

By chance you may know what I’m doing wrong here?

v1.2.3 1.2.3 (June 15, 2022) UPGRADE NOTES: The following remote state backends are now marked as deprecated, and are planned to be removed in a future Terraform release. These backends have been unmaintained since before Terraform v1.0, and may contain known bugs, outdated packages, or security vulnerabilities. artifactory etcd etcdv3 manta swift

BUG FIXES: Missing check for error diagnostics in GetProviderSchema could result in panic (<a href=”https://github.com/hashicorp/terraform/issues/31184“…

Closes #31047 Prevent potential panics and immediately return provider-defined errors diagnostics. Previously in internal/plugin6: — FAIL: TestGRPCProvider_GetSchema_ResponseErrorDiagnostic (0.00…

Hello,

Whats the safest way to delete default VPC and default subnets in an account for all regions

I don’t believe you can.

you can

trying to find an efficient way. If terraform then less code without declaring all regions. if via script then a safe script that does the job

this has been my preferred method in the past https://github.com/gruntwork-io/cloud-nuke

A tool for cleaning up your cloud accounts by nuking (deleting) all resources within it

I changed a variable to add an iam user, but then this started happening. I’ve added/removed staff from the list of iam users before without any issues

2022-06-16

Hello everyone!

I just joined, so sorry if I’m making a mess on the channel

I’m using CP modules for setting up my container based environments based on ECS and as TF module for ALB service fits great for my needs I didn’t found the similar module for ECS Scheduled Tasks and I’m thinking about implement it myself based on CP TF modules.

Do you have any process for incubating new “meta-module” projects under CP umbrella?

FYI: I didn’t started implemented it yet, I have for now messy barebones, but they will need more love and standardization to show anything

we usually use this repo https://github.com/cloudposse/terraform-example-module as a template for all new terraform modules

Example Terraform Module Scaffolding

Hi everyone! I have a question about this https://github.com/cloudposse/terraform-aws-vpc-peering-multi-account module and how tagging works on a couple of resources (aws_vpc_peering_connection-accepter, aws_vpc_peering_connection). I’m hitting an issue where terraform never converges, every time it runs it tries to remove the ‘Side = “accepter”’ tag or the ‘Side = “requester”’ one, seems like the terraform resource underneath uses the same ID for both (vpc_peering_connection , vpc_peering_connection_accepter). I was wondering if someone else has seen this too.

Terraform module to provision a VPC peering across multiple VPCs in different accounts by using multiple providers

2022-06-17

is anyone else having trouble accessing <https://registry.terraform.io/>

Yep, my team is getting 404s and Bad Gateways

seems like it’s back now

There’s an outage: https://status.hashicorp.com/

Welcome to HashiCorp Services’s home for real-time and historical data on system performance.

sigh, why does this have to happen on a friday at lunch time

i had a lot of changes i needed to apply today

it’s intermittent

2022-06-19

hello, I’m starting with TerraForm, can I learn here with you?

i am beginner devops

2022-06-20

I need a second set of eyes please…Im trying to use a list of strings to populate a variable thats used in a conditional variable of a data lookup…

Heres my code and the error im getting…

data "aws_iam_policy_document" "config_sns_policy_doc" {

statement {

effect = "Allow"

actions = ["SNS:Publish"]

principals {

type = "Service"

identifiers = ["config.amazonaws.com"]

}

resources = [aws_sns_topic.config_sns_topic.arn]

condition {

test = "StringEquals"

variable = "AWS:SourceAccount"

values = [

"${slice(var.workspace_acct_number, 0, 8)}"

]

}

}

}

variable "workspace_acct_number" {

type = list(string)

default = [

"123",

"456",

"789",

"987",

"765",

"543",

"169",

"783"

]

}

│ Error: Incorrect attribute value type

│

│ on modules/config/data.tf line 132, in data "aws_iam_policy_document" "config_sns_policy_doc":

│ 132: values = [

│ 133: "${slice(var.workspace_acct_number, 0, 8)}"

│ 134: ]

│ ├────────────────

│ │ var.workspace_acct_number is list of string with 8 elements

│

│ Inappropriate value for attribute "values": element 0: string required.

Ive tried "slice(var.workspace_acct_number, 0, 8)" and that literally spits out that line as what TF wants to put in my policy. Im just not getting the syntax right here or something I think

slice returns a list, so you don’t need to wrap it in []

You also do not need interpolation, "${...}", since you aren’t doing any string concatenation here

So just values = slice(...)

yep…that worked. Thanks!

I knew it was something i was missing… [ ]

Hello, can you suggest a DRY way to create multiple logical Postgres DB’s under a created aurora cluster (using this module: https://github.com/cloudposse/terraform-aws-rds-cluster, it allows to create the first DB). i see that db_name is optional (db_name Database name (default is not to create a database)) is there another module only for generating multiple DB’s in the same cluster? thanks!

Terraform module to provision an RDS Aurora cluster for MySQL or Postgres

2022-06-21

Hello everyone, I try to use this module https://registry.terraform.io/modules/cloudposse/s3-bucket/aws/latest But getting error on init step

Could not retrieve the list of available versions for provider hashicorp/aws: no available releases match the given constraints >= 2.0.0, >= 4.9.0

Maybe some one can help me resolve this issue?

Sorry guys it was local issue - for resolving need to clean cache from ~/.terraform.d

I’m not finding any info on the web that clearly identifies the gains of going tf cloud vs aws s3 backends other than the tf cloud GUI and “with tf cloud, you don’t have to manage your own aws resources for tfstate storage and access control”.

But if a team already has tfstate stored in s3 backends (eg using https://registry.terraform.io/modules/schollii/multi-stack-backends/aws/latest ), and some simple tooling to create the associated buckets and ddb tables and iam roles/policies when additional state is needed, is there any compelling reason left to transition to tf cloud? Using tf cloud means you become dependent on a third-party to keep your tfstates highly-available to you/your team… that’ seems like a pretty major con.

In my experience just seems statefiles are such pain especially if youre not versioning them. Im not sure what the benefits are for TF Cloud over self init’d TF deployments… Ok just looked it up, here we go for the FREE version.

- State management

- Remote operations

- Private module registry

- Community support

this would seem to be only perk… Community support but I think we have that already for free, so there really aren’t any…

Yeah for #3 you can use git repos? For #4 hashicorp community forum and SO? Github actions or weaveworks terraform operator for #2?

I wouldn’t switch if you’ve already got everything setup and automated on S3. Locking yourself in for no additional benefit AFAIK.

@Matt Gowie I would definitely agree I see no benefit in doing so… @OliverS #2 you can use gitlab docker runners and for #3 https://docs.gitlab.com/ee/user/packages/terraform_module_registry/

Documentation for GitLab Community Edition, GitLab Enterprise Edition, Omnibus GitLab, and GitLab Runner.

I wonder if a private tf registry could be a good way to control access to in-house (ie non open source) tf modules…

Can tf cloud users outside of one’s tf cloud organization be given read-only access to an org’s private tf registry?

Now that I dont know, Im famiiiar with self hosted Gitlab and with that, yes via access tokens.

PATs cannot limit access to just the repo in read-only on specific branch, that I know of.

security should be built-in and not something you have to pay for

2022-06-22

Hey everyone, Does anyone knows tools that generate auto documentation (graphs) for terraform and/or Azure?

not sure what you mean by graphs, but terraform-docs on github will document your Terraform modules

Yeah, that I already use. I am thinking for more of graph tools. With terraform docs I use for doc modules in text format

So far I found diagrams.mingrammer (but you need to write code for it ) and rover (not exactly graphs)…

Do you mean something like using the output of terraform graph to create a more detailed version of your aws/azure resources?

Have you consider using Lucidscale?

Have you tried https://github.com/cycloidio/inframap

Read your tfstate or HCL to generate a graph specific for each provider, showing only the resources that are most important/relevant.

Hi everyone!!

I’m using the eks module (v2.2.0) to manage my EKS cluster. I’ve found an issue when managing the aws_auth configuration with terraform. Adding additional users works perfectly, but when trying to add roles, it’s not adding them to the configmap.

The behaviour changes depending on the “kubernetes_config_map_ignore_role_changes” config.

• If I leave it as default (false), the worker roles are added, but not my additional roles.

• If I change it to true, the worker roles are removed and my additional roles are added. I’m attaching an example when I change the variable (from false to true). Also, I don’t see a difference on the map roles in the data block for both options except for the quoting.

Could someone help me with this? I’d love to use the “ignore changes” but adding roles, as I’m adding users.

It sounds like a bug in their module have you tried creating an issue on the repo? It would also help to see a minimum viable reproducible example.

I came here for asking first, just in case I missed something. I’m creating an issue in a minute

This is the issue: https://github.com/cloudposse/terraform-aws-eks-cluster/issues/155

Found a bug? Maybe our Slack Community can help.

Describe the Bug

When using the module to create an EKS cluster, I’m trying to add additional roles to the aws_auth configmap. This only happens with the roles, adding additional users works perfectly.

The behavior changes depending on the kubernetes_config_map_ignore_role_changes config.

• If I leave it as default (false), the worker roles are added, but not my additional roles. • If I change it to true, the worker roles are removed and my additional roles are added.

Expected Behavior

When adding map_additional_iam_roles, those roles should appear on the aws_auth configmap, together with the worker roles when the kubernetes_config_map_ignore_role_changes is set to false.

Steps to Reproduce

Steps to reproduce the behavior:

- Create a cluster and workers with this config (there are some vars not changed, so it’s easy to use):

module "eks_cluster" {

region = var.region

source = "cloudposse/eks-cluster/aws"

version = "2.2.0"

name = var.name

vpc_id = var.vpc_id

subnet_ids = var.private_subnet_ids

endpoint_public_access = false

endpoint_private_access = true

kubernetes_version = var.kubernetes_version

kube_exec_auth_enabled = true

kubernetes_config_map_ignore_role_changes = false

map_additional_iam_roles = [

{

rolearn = "arn:aws:iam::<account-id>:role/myRole"

username = "added-role"

groups = ["system:masters"]

}

]

map_additional_iam_users = [

{

userarn = "arn:aws:iam::<account-id>:user/myUser"

username = "added-user"

groups = ["system:masters"]

}

]

}

module "eks_node_group" {

source = "cloudposse/eks-node-group/aws"

version = "2.4.0"

ami_release_version = [var.ami_release_version]

instance_types = [var.instance_type]

subnet_ids = var.private_subnet_ids

min_size = var.min_size

max_size = var.max_size

desired_size = var.desired_size

cluster_name = module.eks_cluster.eks_cluster_id

name = var.node_group_name

create_before_destroy = true

kubernetes_version = [var.kubernetes_version]

cluster_autoscaler_enabled = var.autoscaling_policies_enabled

module_depends_on = [module.eks_cluster.kubernetes_config_map_id]

}

Screenshots

This example shows when I change the variable kubernetes_config_map_ignore_role_changes from false to true.

Also, I don’t see a difference in the map roles in the data block for both options except for the quoting.

Environment (please complete the following information):

Anything that will help us triage the bug will help. Here are some ideas:

• EKS version 1.22 • Module version 2.2.0

@Jeremy G (Cloud Posse)

@Julio Chana The kubernetes_config_map_ignore_role_changes setting is a bit of a hack. Terraform does not allow parameterization of the lifecycle block, so to give you the option of ignoring changes, we implement the resource twice, once with ignore_changes and once without.

If you set kubernetes_config_map_ignore_role_changes to true, then the expected behavior is that you will not be able to add or change Roles later, because you have told Terraform to ignore changes to the Roles. However, if you change the setting from false to true, the old ConfigMap is deleted and new one is created, so the first time you will get your roles added.

I’m attaching an example when I change the variable (from false to true). The screen shot you provided looks like what would happen if you change

kubernetes_config_map_ignore_role_changesfromtruetofalseand it looks like it includes the Roles you want. So I think you may just be understandably confused by Terraform’s and this module’s confusing behavior around how Role changes get ignored. I suggest you test it a little more withkubernetes_config_map_ignore_role_changesleft atfalse(which is the only setting that will let you update the Roles after first creating the cluster) and report back if you still have questions.

Thanks for your answer.

My concern here is that, in the case that I let it to false, the worker roles will disappear, even though in the auth.tf file it’s concatenating them with

mapRoles = replace(yamlencode(distinct(concat(local.map_worker_roles, var.map_additional_iam_roles))), "\"", local.yaml_quote)

This is why I’m more confused. I would love to manage all the authentication with terraform, but without breaking the regular configuration.

@Julio Chana just left a comment on the above issue. You can workaround it by first reading the existing config map using a data source then merge that with your settings

I kind of agree this is a bug because of:

• if you set ignore role changes then any extra roles you add will be ignored so you need to update both terraform vars and manually apply the new config map - kind of bad because you lose the descriptive nature of terraform • if you don’t ignore the role changes then this module will strictly apply what you specified in the variables and delete the existing aws auth map - also bad because you lose the roles added by eks when using managed node groups

So in both cases you lose something. I have got around this by first reading the config map (if exists) and merge with the current settings). That way terraform will manage only the roles setup in vars and will not touch any other manually added roles.

Example:

data "kubernetes_config_map" "existing_aws_auth" {

metadata {

name = "aws-auth"

namespace = "kube-system"

}

depends_on = [null_resource.wait_for_cluster[0]]

}

locals {

map_node_roles = try(yamldecode(data.kubernetes_config_map.existing_aws_auth.data["mapRoles"]), {})

}

resource "kubernetes_config_map" "aws_auth" {

......

mapRoles = replace(yamlencode(distinct(concat(local.map_worker_roles, local.map_node_roles, var.map_additional_iam_roles))), "\"", local.yaml_quote)

....

Also I don’t recommend using the new kubernetes_config_map_v1_data. This fails because the eks managed node group changes the ownership of the mapRoles in configMap if deployed separately. After testing vpcLambda is the sole owner of it so terraform applying again resets it.

@Jeremy G (Cloud Posse) maybe we could implement this in the official module?

If you look at the Cloud Posse EKS Terraform component (think of it at this point as a work-in-progress), you see that the way we handle this is to output and then read the managed role ARNs to include them in future auth maps.

# Yes, this is self-referential.

# It obtains the previous state of the cluster so that we can add

# to it rather than overwrite it (specifically the aws-auth configMap)

module "eks" {

source = "cloudposse/stack-config/yaml//modules/remote-state"

version = "0.22.0"

component = var.eks_component_name

defaults = {

eks_managed_node_workers_role_arns = []

}

context = module.this.context

}

My concern about reading the map in the module before writing to it is that the map does not exist before the EKS cluster is created or after it is destroyed, and everyone has had a ton of trouble just keeping it updated as is.

You can make the datasource depend on the eks cluster creation so you will always have it.

@Sebastian Macarescu You are welcome to give it a try, but be warned: there be dragons. I suggest you read the comments in auth.tf and the v0.42.0 Release Notes before proceeding.

Hi @Jeremy G (Cloud Posse). I have implemented a PR that fixes this: https://github.com/cloudposse/terraform-aws-eks-cluster/pull/157

what

• Use newer kubernetes_config_map_v1_data to force management of config map from a single place and have field ownership • Implement self reference as described here: #155 (comment) in order to detect if any iam roles were removed from terraform config • Preserve any existing config map setting that was added outside terraform; basically terraform will manage only what is passed in variables

why

Mainly the reasons from #155.

references

*closes #155

Hi everyone! I really needing some help ith the cloudposse/waf module.

Trying to create an and_statement for rate-based-statement

rate_based_statement_rules = [

{

name = "statement_name"

priority = 1

action = "block"

statement = {

aggregate_key_type = "IP"

limit = 100

scope_down_statement = {

and_statement = {

statement = [{

regex_pattern_set_reference_statement = {

arn = "some_arn_goes_here"

field_to_match = {

single_header = {

name = "authorization"

}

}

text_transformation = {

priority = 0

type = "NONE"

}

}

},

{

byte_match_statement = {

positional_constraint = "STARTS_WITH"

search_string = "search_string"

field_to_match = {

uri_path = {}

}

text_transformation = {

priority = 0

type = "NONE"

}

}

},

{

byte_match_statement = {

positional_constraint = "EXACTLY"

search_string = "DELETE"

field_to_match = {

method = {}

}

text_transformation = {

priority = 0

type = "NONE"

}

}

}]

}

}

}

visibility_config = {

cloudwatch_metrics_enabled = true

metric_name = "metric-name"

sampled_requests_enabled = true

}

}

]

but seems like everything inside of a statement being ignored, besides limit and aggregate_key_type

Feel free to post here and someone could help

@RB sorry updated the message

@Karina Titov have you seen this example ? https://github.com/cloudposse/terraform-aws-waf/blob/master/examples/complete/main.tf

provider "aws" {

region = var.region

}

module "waf" {

source = "../.."

geo_match_statement_rules = [

{

name = "rule-10"

action = "count"

priority = 10

statement = {

country_codes = ["NL", "GB"]

}

visibility_config = {

cloudwatch_metrics_enabled = true

sampled_requests_enabled = false

metric_name = "rule-10-metric"

}

},

{

name = "rule-11"

action = "allow"

priority = 11

statement = {

country_codes = ["US"]

}

visibility_config = {

cloudwatch_metrics_enabled = true

sampled_requests_enabled = false

metric_name = "rule-11-metric"

}

}

]

managed_rule_group_statement_rules = [

{

name = "rule-20"

override_action = "count"

priority = 20

statement = {

name = "AWSManagedRulesCommonRuleSet"

vendor_name = "AWS"

excluded_rule = [

"SizeRestrictions_QUERYSTRING",

"NoUserAgent_HEADER"

]

}

visibility_config = {

cloudwatch_metrics_enabled = false

sampled_requests_enabled = false

metric_name = "rule-20-metric"

}

}

]

byte_match_statement_rules = [

{

name = "rule-30"

action = "allow"

priority = 30

statement = {

positional_constraint = "EXACTLY"

search_string = "/cp-key"

text_transformation = [

{

priority = 30

type = "COMPRESS_WHITE_SPACE"

}

]

field_to_match = {

uri_path = {}

}

}

visibility_config = {

cloudwatch_metrics_enabled = false

sampled_requests_enabled = false

metric_name = "rule-30-metric"

}

}

]

rate_based_statement_rules = [

{

name = "rule-40"

action = "block"

priority = 40

statement = {

limit = 100

aggregate_key_type = "IP"

}

visibility_config = {

cloudwatch_metrics_enabled = false

sampled_requests_enabled = false

metric_name = "rule-40-metric"

}

}

]

size_constraint_statement_rules = [

{

name = "rule-50"

action = "block"

priority = 50

statement = {

comparison_operator = "GT"

size = 15

field_to_match = {

all_query_arguments = {}

}

text_transformation = [

{

type = "COMPRESS_WHITE_SPACE"

priority = 1

}

]

}

visibility_config = {

cloudwatch_metrics_enabled = false

sampled_requests_enabled = false

metric_name = "rule-50-metric"

}

}

]

xss_match_statement_rules = [

{

name = "rule-60"

action = "block"

priority = 60

statement = {

field_to_match = {

uri_path = {}

}

text_transformation = [

{

type = "URL_DECODE"

priority = 1

},

{

type = "HTML_ENTITY_DECODE"

priority = 2

}

]

}

visibility_config = {

cloudwatch_metrics_enabled = false

sampled_requests_enabled = false

metric_name = "rule-60-metric"

}

}

]

sqli_match_statement_rules = [

{

name = "rule-70"

action = "block"

priority = 70

statement = {

field_to_match = {

query_string = {}

}

text_transformation = [

{

type = "URL_DECODE"

priority = 1

},

{

type = "HTML_ENTITY_DECODE"

priority = 2

}

]

}

visibility_config = {

cloudwatch_metrics_enabled = false

sampled_requests_enabled = false

metric_name = "rule-70-metric"

}

}

]

context = module.this.context

}

yes, unfortunately, there is no example of the scope_down_statement or and_statement usage

This may be a limitation in the component

content {

aggregate_key_type = lookup(rate_based_statement.value, "aggregate_key_type", "IP")

limit = rate_based_statement.value.limit

dynamic "forwarded_ip_config" {

for_each = lookup(rate_based_statement.value, "forwarded_ip_config", null) != null ? [rate_based_statement.value.forwarded_ip_config] : []

content {

fallback_behavior = forwarded_ip_config.value.fallback_behavior

header_name = forwarded_ip_config.value.header_name

}

}

}

looks like it. should i create an issue to include the scope down section?

Sure thing !

A pull request is also encouraged :)

v1.3.0-alpha20220622 1.3.0 (Unreleased) NEW FEATURES:

Optional attributes for object type constraints: When declaring an input variable whose type constraint includes an object type, you can now declare individual attributes as optional, and specify a default value to use if the caller doesn’t set it. For example: variable “with_optional_attribute” { type = object({ a = string # a required attribute b = optional(string) # an optional attribute c = optional(number, 127) # an…

2022-06-23

Question, for https://github.com/cloudposse/terraform-aws-eks-node-group is there a best practice, or way to set the instance names?

Terraform module to provision a fully managed AWS EKS Node Group

I just use the context and let it be defined. I define a label module in my IaC that calls cloudeposse/eks-node-group/aws and pass context = module.<label>.context with it

Terraform module to provision a fully managed AWS EKS Node Group

And this tags the EC2 instances with Name label?

Ah so you’re trying to get the EC2 instances from the node group to be tagged…

In order to get the instances to have the tagging applied to them you’ll need to be sure to set the resources_to_tag variable sent to the cloudposse/eks-node-group/aws module.

I set mine to resources_to_tag = ["instance", "volume", "elastic-gpu", "spot-instances-request", "network-interfaces"] to enable all possible tagging options but I could have probably left of “elastic-gpu” and “spot-instances-request” as we aren’t making use of either options currently

Thank you that works and prevents me from having to create a custom Launch Template.

no problem… I had to figure it out for our deployment as well

2022-06-24

Hello everyone, I started to use you asg module https://github.com/cloudposse/terraform-aws-ec2-autoscale-group

But you doesn’t use name for aws_autoscaling_group resource, only name_prefix .

Is it possible add support of name to future releases??

Terraform module to provision Auto Scaling Group and Launch Template on AWS

Here’s more information on why name isn’t used

https://github.com/cloudposse/terraform-aws-ec2-autoscale-group/pull/63

Terraform module to provision Auto Scaling Group and Launch Template on AWS

I previously added name because I wanted to import existing autoscaling groups into that module but there is a create_before_destroy lifecycle on it which causes issues. Also I accidentally caused one issue in PR 60 with that implementation .

Perhaps this can be revisited ? cc: @Jeremy G (Cloud Posse)

Okay, create_before_destroy could be set up via variable

And for some uses it could be okay

create_before_destroy is a lifecycle option and these are not parametrizable. To implement them (where we do) we have to provide 2 separate resources, identical except for the lifecycle rule, and choose which one to use. This is a maintenance problem and can cause problems when switching from one to the other.

For an Auto Scaling Group, the only advantage of destroy before create behavior is that you can set the name of the group to be exactly what you want. That does not seem to me to be compelling enough to warrant the additional effort and resulting issues that come with supporting 2 variants.

thank you, for answering.

When using module sources pointing to private github repos, is there a way of defining the source using some kind of connection agnostic way instead of specifying a specific method https+pat or ssh+key?

You could simply invoke the module itself with the pure repository url and leave the configuration of your git client to your pipeline. Something like git config --global url."[https://oauth2:$](https://oauth2:$){{ secrets.GH_TOKEN }}@github.com".insteadOf [https://github.com](https://github.com) would in this case setup git access by PAT.

awesome thanks that’s what I was looking for

it’s also possible to use a .netrc file

2022-06-25

2022-06-27

2022-06-28

My setup is, we have atlantis running in a central account and assuming roles in child accounts to run terraform plan and terraform apply. In the [backend.tf](http://backend.tf) i have dynamodb table specified for terraform locking. It looks like terraform is using the dynamoDB in the central account for storing the lock info instead of child accounts. I want terraform to use dynamoDB in the other accounts. Is it possible to tell Terraform to use local dynamodb in the accounts where it is running plan and apply?

storing s3 in the shared account and use dynamo in the child account?

Is it possible to tell Terraform to use local dynamodb in the accounts where it is running plan and apply?

this is related to the backend definition

you want to tell it what role to use and if no role, it will assume the current role

note, providers and backends can both use different roles

so by the sounds of it, your provider might be correct, but your backend is not

@Erik Osterman (Cloud Posse) I added role_arn to backend.tf and it worked as expected. Thanks

I am creating newrelic synthetic pings for 1500 websites. NewRelic has a rate limit of 1000 requests per minute. I ran into issue initially when I have not passed the argument -parallelism. So, in general while making this many requests through terraform to any provider ““How can we check that - How many network requests does terraform is making with the provider in total or per minute OR any sort ?”” is there a way to check this ?

I am kind starting to learn and use new relic at work for aws and I do have several questions

Would you be able to give me a hand ?

Sure. Please DM me.

Well, the fundamental problem IMO is you have too much state in one root module.

If editing one root module can affect 1500 sites, that seems like a massive blast radius

Hi everyone!

I’m trying to use Cloud Posse’s ECS Web App module, I’m pretty new to Terraform and would like your help to make sure I understood the complete example and best practices correctly. I’m planning on structuring my directories like this:

roots

└── api-app

├── backend.tf

├── context.tf

├── env

│ ├── dev.tfvars

│ └── prod.tfvars

├── main.tf

├── outputs.tf

├── variables.tf

└── versions.tf

This way, the fixtures.us-east-2.tfvars file from the example could be replaced with each environment’s tfvars file, right?

Also, what is the best practice for passing environment variables to this web app’s task definition? How can it be done without hardcoding values to the code?

Cheers

Can’t help with the first question but can with the second question:

what is the best practice for passing environment variables to this web app’s task definition? How can it be done without hardcoding values to the code?

My team uses the "secrets" section of the task definition with "valueFrom" to specify an location in parameter store. Looks like this:

"secrets": [

{

"valueFrom": "arn:aws:ssm:${region}:${account_id}:parameter/shared/datadog/API_KEY",

"name": "DD_API_KEY"

}

]

so you would be storing your secrets in parameter store and then giving permission to read the secret to the role assumed by the task. You can do the same thing with Secrets Manager if you’d prefer.

I should add that the task definition is defined as a template and gets called from terraform like this:

data "template_file" "ecs" {

template = file("${path.module}/templates/container_definition_template.json")

vars = {

name = var.name

environment = var.environment

region = var.aws_region

account_id = data.aws_caller_identity.id.account_id

}

}

Thanks, that’s very helpful!

Could you elaborate on the task definition? I can’t find anything in the docs\examples about this data "template_file" "ecs"

Basically, you give TF a template and pass the variables in. It will fill in the template with the variable’s values and return the “filled in” template.

I use the template example from above to create a zip file with a appspect.yaml and a taskdef.json file for codepipeline to deploy to ECS:

data "archive_file" "codepipeline" {

type = "zip"

output_path = "${path.module}/templates/appspec_taskdef.zip"

source {

filename = "appspec.yaml"

content = data.template_file.appspec.rendered

}

source {

filename = "taskdef.json"

content = data.template_file.taskdef_pipeline.rendered

}

}

I might be leading you down a path that is not aligned with the Cloud Posse methods of deploying an ECS service. But just know there are multiple ways to get it done.

Thanks! I guess I’ll have to keep learning Terraform as this isn’t intuitive to me yet because I’m a bit confused about where this “template_file” came from and how everything connects together.

yes there are layers to it. dig in and you will find there is plenty to learn.

Have you looked into cloudposse/atmos (see atmos) for your environments? Its the tool we use to use yaml to auto create tfvars files per env which avoids the env/ directory structure

Also you may want to replace that data.template_file data source with the templatefile function

2022-06-29

v1.2.4 1.2.4 (June 29, 2022) ENHANCEMENTS: Improved validation of required_providers to prevent single providers from being required with multiple names. (#31218) Improved plan performance by optimizing addrs.Module.String for allocations. (<a href=”https://github.com/hashicorp/terraform/issues/31293” data-hovercard-type=”pull_request”…

Adding multiple local names for the same provider type in required_providers was not prevented, which can lead to ambiguous behavior in Terraform. Providers are always located via the provider’s fu…

After the change: terraform/internal/addrs$ go test -bench=Module . -benchmem BenchmarkModuleStringShort-12 15489140 77.80 ns/op 24 B/op 1 allocs/op …

:wave: Hi, i’m new here, but lately I’ve been using the AWS RDS Proxy module in Terraform Cloud and on “Create” it works great! but when I flip the enabled to false to delete it seems to always give me a:

Error: only lowercase alphanumeric characters and hyphens allowed in "name"

with module.proxy.aws_db_proxy.this

on .terraform/modules/proxy/main.tf line 2, in resource "aws_db_proxy" "this":

name = module.this.id

and

Error: first character of "name" must be a letter

with module.proxy.aws_db_proxy.this

on .terraform/modules/proxy/main.tf line 2, in resource "aws_db_proxy" "this":

name = module.this.id

not sure if this is the right place to ask this question or I should file an issue? Thanks!

Terraform module to provision an Amazon RDS Proxy

Ah I see the issue

Terraform module to provision an Amazon RDS Proxy

there is no count logic on the aws_db_proxy resource

actually, it’s on zero of the resources

for now, if i were you, i would add a count logic on your module to enable / disable it

thanks! i’ll give that a try

I put this PR in for now https://github.com/cloudposse/terraform-aws-rds-db-proxy/pull/7

what

• Add count logic

why

• Best practices

references

• https://sweetops.slack.com/archives/CB6GHNLG0/p1656528986166119

has anyone used HCL/terraform to generate not your typical “infrastructure” resources? i like the way HCL does template files and want to generate yaml files from terraform but feels kinda weird to be using terraform for that

why not just use directly a templating system like handlebars, jinja, moustache or like even twig

aws-ia control tower uses jinja2 to template hcl

Im not recommending it by any means but it has been done

HCL is such an obscure format with so many strange syntactic aspects. Is it really wise to template YAML in it?

I’ve been impressed with gomplate for templating

i’m leaning towards the templating tools you all mentioned because I don’t want to use the wrong tool for the job. but just to answer @Alex Jurkiewicz, I needed to generate for multiple environments which using terraform workspaces makes that extremely simple as well as automatically showing terraform diffs of changes. I imagined the HCL to only use templatefile() function with a yaml template so i don’t think the syntax would be that big of an issue. I understand that terraform is technically not the tool for the job though

for those that are interested, HCL ended up being a bad idea in hindsight after doing a poc when compared to gomplate and jinja2

2022-06-30

Hi, is there any document detailing how to update a cloudposse module? I am looking to update dynamic subnet module but want to do this in a systematic way without breaking anything. If anyone knows of it then kindly share. Thanks a bunch

No document. If you see an issue or want to add a feature, the normal fork, branch, pull request model is the way we usually do it

Thank you