#terraform (2023-04)

Discussions related to Terraform or Terraform Modules

Discussions related to Terraform or Terraform Modules

Archive: https://archive.sweetops.com/terraform/

2023-04-03

Hey so I’m using the datadog terraform provider to create some dashboards using the datadog_dashboard_json resource and it works great except there’s permadrift. Any ideas how I can deal with this?

• I see in the provider that it does expose this value, but it says it’s deprecated in the actual datadog api documentation

• I do not see this value exposed really in the dashboard itself

• I’ve tried actually putting this value in the dashboard as both false and null and both don’t prevent the permadrift

# datadog_dashboard_json.dashboard_json will be updated in-place

~ resource "datadog_dashboard_json" "dashboard_json" {

~ dashboard = jsonencode(

~ {

- is_read_only = false -> null

# (8 unchanged elements hidden)

}

)

id = REDACTED

# (2 unchanged attributes hidden)

}

Anyone seen this https://aws.amazon.com/about-aws/whats-new/2023/04/aws-service-catalog-terraform-open-source/

I saw it, as well as this post … https://aws.amazon.com/blogs/aws/new-self-service-provisioning-of-terraform-open-source-configurations-with-aws-service-catalog/

but I’m just too burned by service catalog in general to be super enthused. Maybe a truly great post on how to maintain the catalog item and update resources over time, maintaining state etc, would go a long way. This post glossed over the ops side of things (as most of aws posts do)

With AWS Service Catalog, you can create, govern, and manage a catalog of infrastructure as code (IaC) templates that are approved for use on AWS. These IaC templates can include everything from virtual machine images, servers, software, and databases to complete multi-tier application architectures. You can control which IaC templates and versions are available, what […]

Is it terraform cloud light ?

Zero discussion of state or providers, so i don’t think it gets even that far

https://aws.amazon.com/fr/about-aws/whats-new/2023/04/aws-service-catalog-terraform-open-source/ You can access Service Catalog key features, including cataloging of standardized and pre-approved infrastructure-as-code templates, access control, cloud resources provisioning with least privilege access, versioning, sharing to thousands of AWS accounts, and tagging. End users such as engineers, database administrators, and data scientists simply see the list of products and versions they have access to, and can deploy them in a single action.

To get started, use the AWS-provided Terraform Reference Engine on GitHub that configures the code and infrastructure required for the Terraform open source engine to work with AWS Service Catalog. This one-time setup takes just minutes. After that, you can start using Service Catalog to create and govern Terraform open source products, and share them with your end users across all your accounts.

Talks about versioning

It “claims” versioning is possible. Give it a try and tell me if it lines up with your idea of versioning and how you use versioning across your terraform environments….

Like I said, I’m just burned. At this point, I need proof. Not just marketing talk

True burned by service catalog ?

Yep. I just haven’t seen it used well. And even AWS support (including proserve) don’t seem to understand ops over time with it. So, I’m a hard skeptic until someone can truly evangelize it and show me otherwise

2023-04-04

Heya all, trying to figure something out with Terraform. I have a list of IP addresses and I want to grab the last octet of each IP address in the list and put it into another list, but I can’t see to quite figure it out. My list looks like:

ips = [

"44.194.111.252",

"44.194.111.253",

"44.194.111.254"

]

and I’m trying to put the newly created list into a local called “ip_last_octet”

[ for ip in local.ips : split(".", ip)[3] ]

oi, too simple… I kept looking at a bunch of join/split/replace commands, etc.

I have one more for the group, although I’m not sure if it’s possible. I have a network interface that has I attached as a secondary interface to an ec2 instance. The resource is configured to configure multiple private IPs which then get an elastic IP associated with it. I want the private IP to always map to the same elastic IP as the application that runs on this ec2 instance is sensitive to IP changes (it has a virtual servers configuration file which binds to specific IPs). I’m currently doing this.

sending_ips = [

"44.194.111.252",

"44.194.111.253",

"44.194.111.254",

"44.194.111.251",

"44.194.111.250"

]

data "aws_eip" "sending_ips" {

count = length(var.sending_ips)

public_ip = var.sending_ips[count.index]

}

resource "aws_network_interface" "secondary_interface" {

subnet_id = data.aws_subnet.public.id

private_ips_count = length(var.sending_ips) - 1

}

resource "aws_network_interface_attachment" "secondary_interface" {

instance_id = aws_instance.ems.id

network_interface_id = aws_network_interface.secondary_interface.id

device_index = 1

}

resource "aws_eip_association" "sending_ips" {

count = length(var.sending_ips)

network_interface_id = aws_network_interface.secondary_interface.id

allocation_id = data.aws_eip.sending_ips[count.index].id

private_ip_address = element(data.aws_network_interface.secondary_interface.private_ips, count.index)

allow_reassociation = false

}

The problem I’m having is that if I remove or add IP addresses to the sending_ips variable the private ip to elastic ip mappings change. I want to try and figure out a way to allow AWS to dynamically assign IPs but still maintain a consistent mapping once a private IP paired with an elastic IP.

2023-04-05

v1.5.0-alpha20230405 No content.

Terraform enables you to safely and predictably create, change, and improve infrastructure. It is an open source tool that codifies APIs into declarative configuration files that can be shared amongst team members, treated as code, edited, reviewed, and versioned. - Release v1.5.0-alpha20230405 · hashicorp/terraform

v1.5.0-alpha20230405 This is a development snapshot for the forthcoming v1.5.0 release, built from Terraform’s main branch. These release packages are for early testing only and are not suitable for production use. The following is the v1.5.0 changelog so far, at the time of this snapshot: UPGRADE NOTES:

This is the last version of Terraform for which macOS 10.13 High Sierra or 10.14 Mojave are officially supported. Future Terraform versions may not function correctly on these older versions of macOS.

This is…

This is a development snapshot for the forthcoming v1.5.0 release, built from Terraform’s main branch. These release packages are for early testing only and are not suitable for production use. The…

AWS Lattice support forthcoming in Terraform https://github.com/hashicorp/terraform-provider-aws/issues/30380

Description

Support for recently announced VPC Lattice

• https://aws.amazon.com/blogs/aws/simplify-service-to-service-connectivity-security-and-monitoring-with-amazon-vpc-lattice-now-generally-available/ • https://docs.aws.amazon.com/service-authorization/latest/reference/list_amazonvpclatticeservices.html • https://awscli.amazonaws.com/v2/documentation/api/latest/reference/vpc-lattice/index.html?highlight=lattice

Requested Resource(s) and/or Data Source(s)

• aws_vpc_lattice_listener • aws_vpc_lattice_rule • aws_vpc_lattice_service • aws_vpc_lattice_service_network • aws_vpc_lattice_service_network_service_association • aws_vpc_lattice_service_network_vpc_association • aws_vpc_lattice_target_group

Potential Terraform Configuration

TBD

References

• https://aws.amazon.com/blogs/aws/simplify-service-to-service-connectivity-security-and-monitoring-with-amazon-vpc-lattice-now-generally-available/ • https://docs.aws.amazon.com/service-authorization/latest/reference/list_amazonvpclatticeservices.html • https://awscli.amazonaws.com/v2/documentation/api/latest/reference/vpc-lattice/index.html?highlight=lattice

Would you like to implement a fix?

None

2023-04-06

This kind of NOTE (see attached image) means that if a terraform module uses cloudfront function in cache of cloudfront distro, the cicd must generate a plan, query it for replacement of function, and if there is a replacement planned, must take a side path to set some custom var to false so that the cloudfront distro no longer uses the function, then terraform apply the distro as target, then finally can resume normal workflow. This is a lot of work! Did you guys have to implement something like this, or is there another trick?

The problem is only during function deletion. You can update an existing function without concern

Yeah but that’s not the issue. The problem arises when the function needs to be replaced (not just updated in-place). Then terraform aborts unless you take special action to prevent it (eg as I described bit there’s probably other ways too).

Here is my issue, I am creating a new VPC in AWS with 2 public and 2 private subnets, peering it with an existing vpc and testing things using Terratest, in which i’m basically checking ssh connectivity between a bastion host in the existing vpc and the hosts in the new vpc both in private and public subnets using ssh.CheckPrivateSshConnectionE my issue is that connectivity between the bastion and the hosts in. the private subnets in the new vpc works perfectly, but connectivity between the bastion and hosts in the public subnets in the new vpc does not happen, what could be going wrong? Please note there different route tables in the new VPC 1 for private subnets and 1 for public subnets.

Make sure have configured route to other vpc in your both different route tables and vice versa. Meaning in your existing vpc route table should point to new vpc subnets via peering in its route tables and new vpc route tables should point to existing vpc vpc subnet via peering route tables.

@Sajja Sudhakararao that part is already taken care of and like i mentioned the communication from the bastion in the existing/old vpc is working perfectly for hosts in the private subnets in the new VPC the issue is only with the hosts in the new VPC that are in the public subnet

Even for public subnet, you need to alter the route table to have a route to and from peering connection

Can u share your route table entries which is attached to public subnet

will do in some time out atm

I have simulated same scenario and it was working fine.

2023-04-07

this is a noisy feed

halp @Erik Osterman (Cloud Posse)?

Wow! What happened

I suspect it was a problem with the stack overflow rss feed

Agreed. I’m also wondering why its in the #terraform channel.

2023-04-08

2023-04-09

It’s gotta be a stack exchange bug. We have had this for maybe a year.

I will unsubscribe it for now and delete those messages

Feed 3978480641542 (Active questions tagged cloudposse - Stack Overflow) deleted

The feed is https://stackoverflow.com/feeds/tag/cloudposse (recording here to make it easier to add later)

2023-04-10

2023-04-12

Hello,

Does anybody have an example of using ip_set_reference_statement_rules in cloudposse/terraform-aws-waf ?

Specifically the statement block

I have found what is needed from the source

I was missing arn in the statement

v1.4.5 1.4.5 (April 12, 2023) Revert change from [#32892] due to an upstream crash. Fix planned destroy value which would cause terraform_data to fail when being replaced with create_before_destroy (<a href=”https://github.com/hashicorp/terraform/issues/32988” data-hovercard-type=”pull_request”…

1.4.5 (April 12, 2023)

Revert change from [#32892] due to an upstream crash. Fix planned destroy value which would cause terraform_data to fail when being replaced with create_before_destroy (#32988)

If a resource has a change in marks from the prior state, we need to notify the user that an update is going to be necessary to at least store that new value in the state. If the provider however r…

When we plan to destroy an instance, the change recorded should use the correct type for the resource rather than DynamicPseudoType. Most of the time this is hidden when the change is encoded in th…

2023-04-13

Hello! I’m wondering if anyone has examples of ECS with ALB? I’m having issues fitting the two together with the modules that are around.

@Samuel Crudge Hopefully this helps:

resource "aws_lb_target_group" "service_tg" {

name = "service-tg"

port = 80

protocol = "HTTP"

target_type = "ip"

vpc_id = <vpc id>

health_check {

path = "/"

port = 80

protocol = "HTTP"

healthy_threshold = 2

unhealthy_threshold = 2

timeout = 10

interval = 60

matcher = "200"

}

}

resource "aws_lb_listener_rule" "service_listener" {

listener_arn = <alb arn>

action {

type = "forward"

target_group_arn = <tg arn>

}

condition {

host_header {

values = "<host header value>"

}

}

}

I haven’t posted the task definition or service but I guess you have already managed to create those if you’re at the point of creating the ALB link

anyone has seen this ?

Error: Received unexpected error:

FatalError{Underlying: error while running command: exit status 1; ╷

│ Error: creating Amazon S3 (Simple Storage) Bucket (eg-test-vpc-subnets-qbp5cs): InvalidBucketAclWithObjectOwnership: Bucket cannot have ACLs set with ObjectOwnership's BucketOwnerEnforced setting

│ status code: 400, request id: KDSFKDKFFFFFF, host id: ASDFASDFADFASDFASDFASDFASDFASDFASDFASDFADFS

We are using the CloudPosse flow-log module that uses the s3-log bucket module and this works on one region on the same account but if we deploy to another region in the same account it fails (different bucket names)

seen this but not from TF. what the error tells me is that you cannot have ACLs and BucketOwnerEnforced (basically ACLs disabled) at the same time … so I would double check these… I guess you aware that this enforcement helps in case remote accounts write objects into a bucket… see docs

yes, in this case the permission is for delivery.amazon.com which is cloudwatch

Hello! Is cold start reference tutorial not available anymore?

Yes it’s still a part of our Reference Architecture!

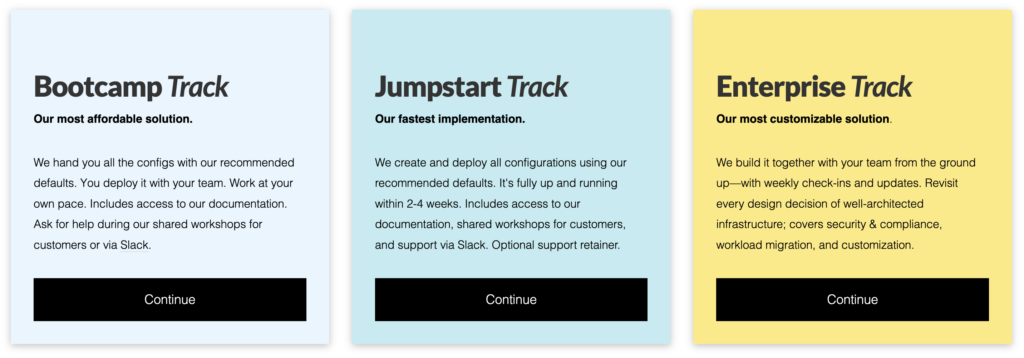

we also have a simplified version that applies to Jumpstart/Bootcamp customers https://docs.cloudposse.com/reference-architecture/scaffolding/setup/baseline/

These links seems to be behind the registration wall which says “Your registration must be approved by an administrator”. How can I get permissions?

Are you an existing customer? Sorry I’m not familiar with all our teams

no

What Cold Start reference tutorial were you referring to before?

I’m pretty sure it’s the first link you’ve sent - it’s all over the docs and older slack threads

do I need to become a customer to get access to those ^^?

This docs website is new (within a few months), but previously customers wouldve used Confluence documentation. Which has always been customer only, but please let me know if there’s something in particular you can link that used to be public

To get access you’ll need to start a contract with us. We have these newer, more affordable options for contracts, but we have also worked on even docs only access for even cheaper

got it

do you have any rough estimates or do I need to schedule a call for that?

That question would be better suited for @Erik Osterman (Cloud Posse)

I guess i’m interested in either bootcamp or even just docs access

@Erik Osterman (Cloud Posse) - I’m interested in docs access also

2023-04-14

2023-04-18

Hi everyone. I am stuck in an issue w.r.t cloudposse/iam-role/aws module. I need to configure an IAM role which needs to have below as the trust relationship. I am able to figure out actions as well as principle but having hard time figuring out the conditions. It’s a show stopper for at the moment. Can someone please help me out?

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Federated": "arn:aws:iam::xxxxxxxxxxxx:saml-provider/xxxxxx"

},

"Action": "sts:AssumeRoleWithSAML",

"Condition": {

"StringEquals": {

"SAML:xxx": "<https://signin.aws.amazon.com/saml>"

}

}

}

]

}

Tried this but it says there is no function StringEquals

assume_role_conditions = [

StringEquals ( {

"SAML:xxx" = "<https://signin.aws.amazon.com/saml>"

}

)

]

Figured it out

All good here

Is there we can avoid terraform destroy to be run accidentally

remove those IAM permissions?

Not to destroy resources

I have seen prevent_destroy

But from security perspective

If someone has code

They can modify to remove prevent_destroy and run terraofmr destroy it will vanish

Hey all, I’m wondering how to configure my CI/CD.

I have a github repo configured with GHAs to talk to terraform cloud to perform plans and applies.

My repo is configured like this:

.

├── accounta

│ ├── us-east-1

│ │ └── customer-project

│ └── us-east-2

│ └── customer-project

├── accountb

│ ├── us-east-1

│ │ └── customer-project

│ └── us-east-2

│ └── customer-project

└── accountc

├── us-east-1

│ └── customer-project

└── us-east-2

└── customer-project

Every customer-project dir has it’s own state file.

I want to use a single account to manage resources in all accounts, and then allow it to assume a role that relates to that account. I have configured this, and it works well. But I’m hardcoding the assume_role arn in each customer-projects provider. Can someone more intelligent than me tell me if there’s a better way to achieve this without hardcoding the role in an automated way?

In our framework, we have a component called account-map that stores all the required ARNs as terraform outputs we look up using remote state.

This module won’t work for you directly, but maybe some patterns you can borrow.

Hi everyone. I need help in understanding one thing w.r.t terraform-aws-iam-role module. I configured multiple policy documents and while applying it, it’s failing with below error. As far as I know, I can create a single policy with using this module. Is there a possibility of splitting the policy into more than 1?

Error: updating IAM policy arn:aws:iam::xxxxxxxxxxxx:policy/xxxxx: LimitExceeded: Cannot exceed quota for PolicySize: 6144 status code: 409

2023-04-19

Any suggestions for open source UI for terraform. I am looking for something similar to terraform cloud but free.

Atlantis: Terraform Pull Request Automation

“free” means you have to run and operate it yourself, though

Thats fine but there should be an UI like terraform cloud

i don’t use a UI myself. but there are a few if you google for it. atlantis is the most mature of the “free” options i’ve seen

Thank you

Spacelift has a free plan

Flexible pricing options to fit infrastructure needs. Free Trial.

I’m looking at using the account component from https://github.com/cloudposse/terraform-aws-components/blob/master/modules/account/README.md. Can anyone confirm if this supports organizational units more than one level deep in AWS Organizations?

The README doesn’t mention this and I haven’t found any examples. I’m trying to import my existing AWS organization and manage it with this component, but I’m having trouble figuring out how it should be modeled using the organization_config variable.

Hello, Is anyone using TFLint + Terratest + Terragrunt with Atlantis, if so what does the flow look like?.

Terratest and TFLint on modules and manage their semantic versions somehow — either by putting them in a different repo keeping them in a monorepo with the terragrunt configurations, but using a private Terraform module registry (there’s a few options out there — I use Spacelift which looks for a .spacelift/config.yml file inside each module and determines the semver from there). You will need to make CI workflows that ensure the module semantic versions will only be bumped if Terratest and tflint pass.

Be careful not to make your modules too granular with Terragrunt. Otherwise you will have a million terragrunt.hcl files. The flow will be a nightmare, especially with Atlantis since it’s PR based. Your PRs will be littered with tons of Atlantis output if you have hundreds of projects because of an overly-granular Terragrunt configuration. I can tell you from experience that using Atlantis in a Terragrunt setup with 400 terragrunt.hcl files was absolutely unusable.

Then as for Terragrunt, that’s a completely different flow. Presumably you are using transcend-io/terragrunt-atlantis-config. You will need a workflow in the terragrunt monorepo that runs it automatically when terragrunt.hcl files are added or removed, and updates and commits the Atlantis config file. You could also try creating a makefile target for terragrunt-atlantis-config and creating a pre-commit hook to run that target.

Finally, the Atlantis flow will be the standard Atlantis flow that’s described in their docs.

2023-04-20

I have a simple req. So simple…. I just want to be able to create an s3 bucket in a region specified by var.aws_region. Anyone overcome this issue?

May I know what is the issue or error you are facing

You can’t do :

provider "aws" {

alias = "us-east-2"

region = "us-east-2"

}

...

resource...

provider = "aws.${var.aws_region}"

Yeah in provider we can’t use variable

I got you now

Even with that truth, it should be possible to have a module that can create an s3 bucket based a variable input…. Such a deficiency surely wouldn’t be allowed to go unaddressed by Hashicorp.

Yeah via module seems possible

I’m just not finding the answer

I am seeing this as an alternative

But that still has a hardcoded provider. I’m setup with env0 to use a dynamic backend configuration. This allows me to mitigate the code sprawl involved in having a separate module folder for everyone trying to use it… To keep this up, would really, really like to be able to be able to have dynamic resource providers…. But if there’s no easy way, I can just write a quick custom flow to do the job.

Yeah I don’t even come across dynamic provider . sorry I couldn’t help you

I got it. There’s a list of issues against hte aws provider as long as my arm for this. Solved it with env0 custom flows and a slug I can sed out dynamically before tf init runs.

I just can’t believe such a frustrating issue can’t be solved. I suppose that is the entire uvo for Terragrunt anyway. If they did solve it, tg would evaporate… From what I understand of it.

we use Atmos for that exact purpose - https://atmos.tools/

Atmos is a workflow automation tool for DevOps to manage complex configurations with ease. It’s compatible with Terraform and many other tools.

with atmos you can define some generic module for your s3 bucket, and then create that resource in multiple regions defined by var.region

I might be coming around to atmos

Terraform’s architecture doesn’t really allow this. What I’d suggest is adding an orchestration layer above Terraform to take care of it.

Some people use Terragrunt, but you can also use a tool like Spacelift or Terraform Cloud.

In short, create a configuration which creates the bucket and accepts the (single) AWS region as input variable. Then run this configuration with as many backend configurations (or workspaces) as required to create your desired buckets.

2023-04-21

2023-04-24

Hi I am trying to write an integration test for an already working mwaa module (This is already working in production) The module is almost similar to this https://github.com/cloudposse/terraform-aws-mwaa. However, when i run the test i get the error "ValidationException: Unable to read env-mwaa-staging-eu-west-1-dags/plugins.zip". If I try to upload the plugins.zip and requirement.txt. The mwaa get stucked in creating mode till the test will timeout. Has anyone encounter this before?

Terraform module to provision Amazon Managed Workflows for Apache Airflow (MWAA)

2023-04-25

I want to learn variable priority to create resuaable modules

How merging happens or how priority precendence take values of variable

this might be a good place to start https://developer.hashicorp.com/terraform/language/values/variables#variable-definition-precedence

Input variables allow you to customize modules without altering their source code. Learn how to declare, define, and reference variables in configurations.

also if you provide a string (or other values) as a parameter to be passed to a module, it will override the default value set within the module itself

2023-04-26

Alternative for ngrok

To use with Atlantis and integrate with bitbucket

v1.4.6 1.4.6 (Unreleased) BUG FIXES Fix bug when rendering plans that include null strings. (#33029) Fix bug when rendering plans that include unknown values in maps. (#33029)…

This PR fixes 2 issues in the new renderer:

Unknown values are removed from the set of after values by the json plan package for attributes. This affects maps as they are unaware of the values the…

v1.4.6 1.4.6 (April 26, 2023) BUG FIXES Fix bug when rendering plans that include null strings. (#33029) Fix bug when rendering plans that include unknown values in maps. (<a href=”https://github.com/hashicorp/terraform/issues/33029” data-hovercard-type=”pull_request”…

1.4.6 (April 26, 2023) BUG FIXES

Fix bug when rendering plans that include null strings. (#33029) Fix bug when rendering plans that include unknown values in maps. (#33029) Fix bug where the plan …

This PR fixes 2 issues in the new renderer:

Unknown values are removed from the set of after values by the json plan package for attributes. This affects maps as they are unaware of the values the…

Curious about the terraform-aws-security-group module: https://github.com/cloudposse/terraform-aws-security-group

I’ve read through the entire README and I understand the gotchas.

What I’m curious about is, if it is 100% necessary for this module to manage both the security group and its corresponding security group rules in order to maintain no interruptions (when used correctly),

how do you avoid circular dependencies when using this module as part of a for_each, where a security group rule might dynamically reference a security group within the same for_each map?

Such as:

locals = {

security_groups = {

"example-1" = {

name = "example"

attributes = ["1"]

enabled = true

description = "Security group for example-1"

vpc_id = module.vpc["example"].vpc_id

rules_map = {

"ingress" = [

{

type = "ingress"

description = "Allow inbound HTTP traffic from CloudFront."

from_port = 80

to_port = 80

protocol = "TCP"

prefix_list_ids = [

data.aws_ec2_managed_prefix_list.cloudfront.id

]

}

]

}

}

"example-2" = {

name = "example"

attributes = ["2"]

enabled = true

description = "Security group for example-2"

vpc_id = module.vpc["example"].vpc_id

rules_map = {

"ingress" = [

{

type = "ingress"

description = "Allow inbound HTTP traffic from ALB."

from_port = 80

to_port = 80

protocol = "TCP"

source_security_group_id = module.security_group["example-1"].id

}

]

}

}

}

}

module "label_security_group" {

source = "cloudposse/label/null"

version = "0.25.0"

for_each = local.security_groups

enabled = each.value.enabled

name = each.value.name

attributes = try(each.value.attributes, [])

context = module.label_default.context

}

module "security_group" {

source = "cloudposse/security-group/aws"

version = "2.0.1"

for_each = local.security_groups

vpc_id = each.value.vpc_id

security_group_description = try(each.value.description, "Managed by Terraform")

create_before_destroy = try(each.value.create_before_destroy, true)

preserve_security_group_id = try(each.value.preserve_security_group_id, false)

allow_all_egress = try(each.value.allow_all_egress, true)

rules_map = try(each.value.rules_map, {})

context = module.label_security_group[each.key].context

}

^ In this case, a circular dependency would be created due to the circular reference: source_security_group_id = module.security_group["example-1"].id

Normally, the solution for this is to break the module down into smaller modules, such as an aws_security_group_rule module, but due to the nature of security groups, security group rules, and Terraform, it almost seems impossible, and I’m kind of shocked I didn’t fully understand this until now.

For example, the CloudPosse terraform-aws-security-group module clearly states the gotchas of what happens when it is not managing both the aws_security_group and the aws_security_group_rule resources from a single instance of the module.

Any ideas?

@Jeremy G (Cloud Posse)

It appears Terraform has addressed some of these issues with the aws_vpc_security_group_ingress_rule and aws_vpc_security_group_egress_rule resources, and I imagine if CloudPosse’s terraform-aws-security-group module were to incorporate them, it would be a breaking change, however I’d also imagine it might help simplify how CloudPosse is managing all of this in the terraform-aws-security-group module.

I’m going to give it a try in our own modules, and see if separating the aws_security_group and the aws_vpc_security_group_*_rule resources into their own modules still allows for availability during updates to both the security group and its rules.

@Zach B In general, if you have 2 security groups referencing each other, they will be impossible to delete or replace via Terraform unless they are both managed in the same Terraform plan. This is because you cannot delete a security group while it is still in use, so you have to delete all the security group rules before you can delete the security groups. This is why we generally recommend avoiding using security groups in rules and instead use CIDR restrictions.

Nevertheless, Terraform modules are not the black boxes they may seem to be. You can use the partial output of one module as input into a second module before the first module is fully done creating all its resources and outputs. Did you actually try your example? I would expect it to work, because our module creates the security groups, and then creates the rules, so the reference is not circular.

@Erik Osterman (Cloud Posse) Looks like these new resources, aws_vpc_security_group_{ingress,egress}_rule, solve a bunch of problems I ran into with the aws_security_group_rule resources, including allowing rules to be tagged. On the one hand, I think we should migrate toward using them. On the other hand, I think that migration will have to cause a brief service outage as the old rules will be deleted before the new rules will be created. So it is kind of a pain, but on the other other hand, it would be good to do before updating the modules that do not currently use the module to use the module.

Yea, sounds like something we should consider before mass rollout

Especially once we add the branch manager stuff @Zinovii Dmytriv (Cloud Posse) is working on, the momentary hiccup seems worth it

@Jeremy G (Cloud Posse) That makes sense. However as you know, in many cases you can’t determine the interface / IP that the security group is attached to, so using a CIDR to resolve this issue can only effectively be done while compromising at least some, and potentially a lot of security it seems.

It looks like the aws_vpc_security_group_{ingress,egress}_rule resources came out in 4.56.0 of the hashicorp/aws provider.

At this point I’ve successfully applied several security groups/rules using our own custom modules with these new resources. I’m now going to do further testing to check the behavior of updating things like:

• Security group description

• Security group rule description

• Security group rule subject (CIDR, referenced security group, prefix list ID)

• Security group rule port ranges

• Security group rule protocol

@Zach B Please check to see if create_before_destroy now works when set on all rules. Previously it was not possible because duplicate rules were not allowed. It would be a great benefit (preventing service interruptions) if we can use create_before_destroy.

Sure thing @Jeremy G (Cloud Posse) I’ll get back to you

@Jeremy G (Cloud Posse) First gotcha of these new resources:

With the aws_vpc_security_group_{ingress,egress}_rule resources, if you wish to define “access to all protocols,” the only acceptable way to achieve this is to also allow access on all port ranges. Further, you cannot define those port ranges. (They must be null). This does mean that-in a single instance of these resources-it is currently impossible to allow access to all protocols on specific port ranges. (They would have to be broken up into multiple instances per ip_protocol)

Ref: https://github.com/hashicorp/terraform-provider-aws/issues/30035

// method 1

from_port = null

to_port = null

ip_protocol = -1

// method 2

from_port = null

to_port = null

ip_protocol = "all"

^ I experienced this myself, along with the error message from the GitHub issue above, no matter what tricks I tried.

@Jeremy G (Cloud Posse) Here’s my experience:

• Given create_before_destroy = true is set on both aws_security_group and aws_vpc_security_group_{ingress,egress}_rule

• Given I have two security groups (A and B), and a rule in security group B references security group A.

• Given security group A is added to the list of security groups for an ALB. (Cloud Posse’s ALB module)

When I change the description of security group A, which is being referenced by the ALB:

All looks well at first during the terraform plan. Terraform only shows update in-place and create replacement and then destroy operations. In fact, I can see that the order of operations during the terraform apply almost looks correct too:

• Create new security group

• Create new ingress/egress rules

• Delete original ingress/egress rules

• Delete original security group

However, the most important aspect is missing. Terraform wasn’t able to tell that it needed to update the ALBs security group. (It wasn’t even in the terraform plan). The expected order of operations would be:

• Create new security group

• Create new ingress/egress rules

• update in-place on ALB to swap security group

• Delete original ingress/egress rules

• Delete original security group

The first terraform apply actually fails deleting the original security group due to the DependencyViolation error that you’re aware of.

It isn’t until you issue a second terraform apply that Terraform recognizes an update to the ALB is necessary, and properly makes the security group swap, and successfully deletes the original security group.

I hope I’m missing something here.

The need to run twice is often due to a bug in the provider, although it can also be caused by using a data source that targets a resource you are modifying. See https://discuss.hashicorp.com/t/need-to-run-terraform-plan-apply-twice-to-effect-all-changes/50363/3

Hi @lordbyron, Unfortunately I’m having a little trouble following the details because of all of the redactions, so I wasn’t able to come to any specific conclusions so far, but I do have some general observations that I hope will give some hints for things to try next. There are two typical reasons why this sort of thing might happen. If the configuration effectively modifies its own desired state while being applied in a way that Terraform can’t deduce, such as if it’s reading some data us…

In this case, which of these resources would the data source be targeting that could cause this behavior?

Would it be security group A/B, the security group rules, or the ALB? etc.

I can double check to confirm that is not the case. And if so, it would seem it might be a bug in the provider.

Looking more into this discussion post.

Terraform needs to see that you are getting the security group ID in the rule from the security group resource and not any data source. That is how it can determine the dependency that indicates the security group resource will change and need to be updated in the ALB. If any of that data comes from a data source and not a resource, or is transformed to the point that Terraform loses track of it, then the 2 applies will be needed.

I can confirm that the security group ID passed to the ALB is coming directly from the security group ID output.

I can also confirm that the security group ID passed to security group B is coming directly from security group A output.

If you can pare that down to a simple example, it is worth opening an issue on the provider. I sometimes find a mistake I made or a workaround for the issue while trying to get to an example reproduction, so it can be worthwhile even if the issue is not addressed quickly.

Yeah I think that’s best. Ideally I can make the example as dumb as possible while replicating the behavior.

E.g.: “a rule in security group B references security group A” was totally irrelevant.

If I remove this dependency, it does not change the behavior.

So far, it seems that dependent objects of security groups that are not security group rules, are not immediately informed of updates to the security group ID. It’s difficult to understand that being the case though.

@Jeremy G (Cloud Posse) The issue was in the Cloud Posse ALB module itself: https://github.com/cloudposse/terraform-aws-alb/blob/master/main.tf#L81-L83

You pointed it out earlier: “or is transformed to the point that Terraform loses track of it, then the 2 applies will be needed”

The following code should be an acceptable replacement and allows Terraform to keep track of the input security group IDs:

security_groups = flatten([

var.security_group_ids,

try([aws_security_group.default[0].id], []),

])

security_groups = compact(

concat(var.security_group_ids, [join("", aws_security_group.default.*.id)]),

)

@Zach B My educated guess is that your replacement code will not work either. Have you tried it? I think conditional logic will be necessary (and not particularly pretty).

@Jeremy G (Cloud Posse) Yes, I have tested this to work as expected. (It fixes the issue) However I was working directly with an aws_lb resource, so I’ve created a fork of Cloud Posse’s ALB where I can test to ensure it behaves the same there too. (I expect it to, I configured the underlying aws_lb essentially the same during my initial tests)

My guess is that the issue has to do with null values, and/or the transformation to extract null values out of the list.

I also saw that the dependency graph looks the same in the current code and my code, so nothing obvious there.

Yes, compact() destroys information (the length of the list) and makes the resulting expression completely undefined at plan time.

Shouldn’t this mean that it should be an operational policy NOT to use compact() on dependent objects?

Probably. We follow that rule for instances where we need to count the length of the object. This is the first time I have seen it come up with dependent objects, so we have not had that rule.

@Jeremy G (Cloud Posse) That also gives us a lot of hope that these new resources for security group rules solves a lot. That will be my final test. (I expect that to work fine based on other tests I’ve done)

@Jeremy G (Cloud Posse) I can confirm that the aws_vpc_security_group_{ingress,egress}_rule resources work great. Using create_before_destory on these resources and aws_security_group work fully as expected without any additional tricks.

There are some gotchas with the new security group rule resources, but nothing that can’t be solved. I think most of the gotchas are the same ones as the previous security group rule resources.

• It seems as though to avoid drift, you should name your ip_protocol using lowercase letters. E.g. tcp

• If you want to allow for all protocols in any given rule, you cannot limit to specific ports. In a rule that allows for all protocols, that rule must allow for all ports as well. To work around this, you are forced to separate rules per protocol and port range if necessary.

• Use "-1" instead of "all" in ip_protocol to avoid drift

Also, my fix for the Cloud Posse ALB module did work as expected. I’ll open a PR ASAP.

OK, please post the ALB. PR link here and tag me when you are ready for review.

Thanks a lot for pointing me in the right direction on this as well

If you have the time and energy, it would also be helpful if you opened an issue with Hashicorp about this. Terraform should not lose track of dependencies just because they went through compact().

Good idea. Unfortunately, it gets even worse than that.

After my fix on the Cloud Posse ALB module, I was passing in the security_group_ids to the ALB module like so:

security_group_ids = try(each.value.security_group_ids, [])

^ This loses track of the dependency, and requires running terraform apply twice.

I found that when I changed it to:

security_group_ids = lookup(each.value, "security_group_ids", [])

^ This worked fine!

Again, if you have time, please see if adding an explicit dependency in ALB (depends_on = [var.security_group_ids, aws_security_group.default]) fixes the problem without changing other code.

Okay I’ll try this. I believe I do recall doing that to no effect. I do recall manually checking the dependency graph, and in all cases, it showed that the ALB module was dependent upon the security group / security group module. Which is very confusing to me personally.

Actually, that makes more sense to me. Terraform tracked the dependency, but could not, at plan time, see that it changed, so it preserved the resource rather than cause perpetual drift.

Oh I see, so it’s all about making sure that it can compute the value at plan time?

In which case, when terraform apply is required twice, it was not able to compute the value for some reason?

Not exactly. I don’t know the innards of Terraform, but I have a sense that it somehow tracks changes even if it doesn’t know the actual values. It needs to see at plan time that values have changed so it can update those dependencies.

I know Terraform does not have a separate way of tracking “unknown could be null” from “unknown but definitely not null” values, so it considers the output of compact() fully unknown unless all values are known at plan time.

2023-04-27

2023-04-28

2023-04-30

Hi all, Can someone help me to import the cloudsql(mysql) from gcp to terraform?? i have my sql running on one project and im trying to import it to another project directory. i have used below command

terraform import google_sql_database.default {{projectid(where my sql resides)}}/{{instance(sqlinstancename)}}/{{name(sqlinstancename)}}

but its throwing this error

Error: Error when reading or editing SQL Database Instance "{{sqlinstancename}}": googleapi: Error 400: Invalid request: instance name ({{sqlinstancename}})., invalid

can someone help on this..