#azure (2020-11)

Archive: https://archive.sweetops.com/azure/

2020-11-08

Hi all, we are trying to set up a private AKS cluster, but we want to have a public DNS resolver. We have a VPN in a peered vnet, but by default a private AKS cluster creates only a private DNS zone, so we cannot access the cluster

2020-11-13

hello @Padarn, luckily for you I have set up a private AKS cluster. Be sure to follow the steps below


By default, when a private cluster is provisioned, a private endpoint (1) and a private DNS zone (2) are created in the cluster-managed resource group. The cluster uses an A record in the private zone to resolve the IP of the private endpoint for communication to the API server.

The private DNS zone is linked only to the VNet that the cluster nodes are attached to (3). This means that the private endpoint can only be resolved by hosts in that linked VNet. In scenarios where no custom DNS is configured on the VNet (default), this works without issue as hosts point at 168.63.129.16 for DNS that can resolve records in the private DNS zone because of the link.

In scenarios where the VNet containing your cluster has custom DNS settings (4), cluster deployment fails unless the private DNS zone is linked to the VNet that contains the custom DNS resolvers (5). This link can be created manually after the private zone is created during cluster provisioning or via automation upon detection of creation of the zone using event-based deployment mechanisms (for example, Azure Event Grid and Azure Functions).

Learn how to create a private Azure Kubernetes Service (AKS) cluster

Thanks a lot! So you have a forwarding VPN?


no,

the terraform azurerm_kubernetes_cluster should have created a MC_ resource_group

in that resource group look for the private dns zone, named like xxxx-yyy.privatelink.francecentral.azmk8s.io

click on virtual network link

you should have one link for each vnet that needs to resolve your cluster's DNS name

then you should be able to run kubectl get nodes from your computer

then in my private dns I have a conditional forwarder for xxx-yy.privatelink.francecentral.azmk8s.io

does that answer your needs ?

I see I see

Yes I think that works, I’ll try it out and let you know, thanks a lot

Just to confirm, your kube config has the private link?

is that what you need ? the terraform code to create the link ?

    locals {
      # Drop the first label of the cluster's private FQDN to get the private DNS zone name
      aks_private_dns_zone = join(".", slice(split(".", azurerm_kubernetes_cluster.kube_infra.private_fqdn), 1, 6))
    }

    # The zone is created by AKS in the cluster-managed (MC_) resource group
    data "azurerm_private_dns_zone" "private_aks_zone" {
      name                = local.aks_private_dns_zone
      resource_group_name = azurerm_kubernetes_cluster.kube_infra.node_resource_group
    }

    output "aks_dns_zone" {
      value = local.aks_private_dns_zone
    }

    # The vnet that should also be able to resolve the cluster's private name
    data "azurerm_virtual_network" "preprod_vnet" {
      name                = "${var.prefix}-${var.env}-vnet01"
      resource_group_name = "${var.prefix}-${var.env}-networkRessourceGroup"
    }

    # Link the AKS private DNS zone to that vnet
    resource "azurerm_private_dns_zone_virtual_network_link" "preprod" {
      name                  = "${var.prefix}-${var.env}-dns-vnet-link-preprod"
      resource_group_name   = azurerm_kubernetes_cluster.kube_infra.node_resource_group
      private_dns_zone_name = data.azurerm_private_dns_zone.private_aks_zone.name
      virtual_network_id    = data.azurerm_virtual_network.preprod_vnet.id
    }
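To make the `slice(split(...))` expression in the snippet above easier to follow, here is a small standalone sketch. The FQDN is made up for illustration (a real value comes from the cluster's `private_fqdn` attribute), and the `example_*` names are hypothetical. The second local is an assumed variant that does not hard-code the number of labels.

```hcl
locals {
  # Made-up FQDN with six dot-separated labels, for illustration only.
  example_private_fqdn = "myaks-0a1b2c3d.11111111-2222-3333-4444-555555555555.privatelink.francecentral.azmk8s.io"

  # split(".") yields 6 labels; slice(list, 1, 6) keeps labels 1..5,
  # that is, everything except the first (host) label.
  example_zone_name = join(".", slice(split(".", local.example_private_fqdn), 1, 6))

  # Assumed variant: same result, but tolerant of a different label count.
  example_labels         = split(".", local.example_private_fqdn)
  example_zone_name_flex = join(".", slice(local.example_labels, 1, length(local.example_labels)))
}

output "example_zone_name" {
  # 11111111-2222-3333-4444-555555555555.privatelink.francecentral.azmk8s.io
  value = local.example_zone_name
}
```

Note that `slice` raises an error if the end index exceeds the list length, so the hard-coded `6` only works while the FQDN has at least six labels.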

Yes. Perfect. Thank you!

I was missing that this needed to be created separately; I read the docs incorrectly. Thanks a lot.

beware you may want to enable RBAC at cluster creation

sorry one question

"then in my private dns I have a conditional forwarder for xxx-yy.privatelink.francecentral.azmk8s.io"

what does it forward to?

it tells my dns to forward dns requests for azmk8s.io to the azure dns at 168.63.129.16

Aha.. and so even locally you are able to resolve the IP of the private link

we have an azure ADDS

hey @Pierre-Yves sorry, back to this one.. how do you deploy your DNS forwarder? I’m running a core-dns one in our k8s cluster (with a load balancer to access it), but it’s unstable

hello @Padarn; it’s our external dns that forwards requests to the Azure dns, see the screenshot:

btw I still have some work on Azure Rbac ..

what do you use as a forwarder?

do you happen to have an azure template for it?

no, it was manually created by a colleague

haha does your colleague have a readme?

well he often creates drift ..
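For the core-dns based forwarder mentioned above, the rule "forward azmk8s.io to 168.63.129.16" could be sketched roughly as below. This is only an assumption about how such a forwarder might be configured, not the setup either participant actually runs: the resource name, namespace, and ConfigMap name are hypothetical, and the Deployment and load balancer that would mount and expose this config are omitted.

```hcl
# Hypothetical ConfigMap holding a CoreDNS Corefile for a conditional forwarder.
resource "kubernetes_config_map" "dns_forwarder" {
  metadata {
    name      = "dns-forwarder" # assumed name
    namespace = "dns"           # assumed namespace
  }

  data = {
    Corefile = <<-EOT
      # Only answer for the AKS zone and hand queries to the Azure resolver.
      azmk8s.io:53 {
          errors
          cache 30
          forward . 168.63.129.16
      }
    EOT
  }
}
```

168.63.129.16 is only reachable from inside Azure, so a forwarder like this has to run in a vnet that is linked to the private DNS zone for the answers to include the private record.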

2020-11-15

2020-11-16

Do you use ACI (Azure Container Instances) deployed with Terraform? Deploying a new image requires deleting the container and recreating it … Also, it’s not handy to pass a build id to terraform at each dev code release, since that implies an infrastructure terraform release.

currently my CICD pipeline calls an az script .. but I want to avoid it..

do you have any solution ? or experience to share ?

2020-11-17

Another beginner Azure networking question

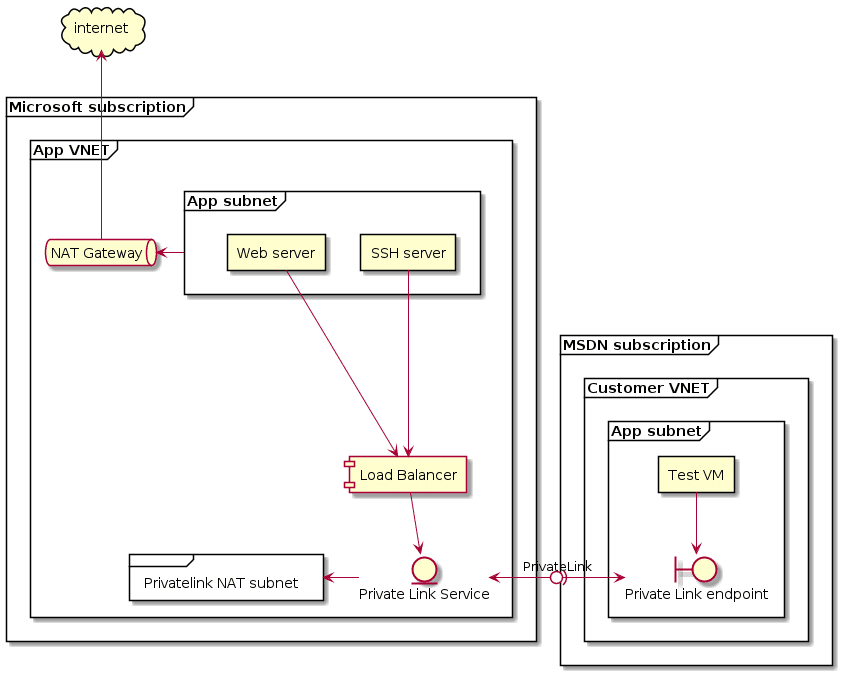

Is it possible to create a private link for an arbitrary resource in one vnet, so that it exists in another vnet?

does azurerm_virtual_network_peering provide too wide access? then you may restrict access with a network security group on each subnet

actually, the issue I’m trying to solve is they cannot be peered

they have clashing CIDR

then you may look for an internal lb which will expose the service and hide ip addresses

ah

but out of curiosity .. could a private link also do this?

reading the doc it seems yes https://docs.microsoft.com/en-us/azure/private-link/private-endpoint-overview

Learn about Azure Private Endpoint

Private Link Resource The private link resource to connect using resource ID or alias, from the list of available types. A unique network identifier will be generated for all traffic sent to this resource.

in the load balancer approach, if it’s internal, I guess it will still have an IP in the vnet where the resources are:

it looks like the example makes use of private_link_service and a load balancer

nice, let me take a look.. thank you!

more description here https://blog.nillsf.com/index.php/2020/06/11/setting-up-a-private-link-service-as-a-service-provider/

Azure Private Link allows you to connect to public services over a private connection. I have already written about using Private Link with blob and the Azure Kubernetes Service. You can also use Private Link to expose your own custom services, and act as a service provider. This means you would build a service in […]

Yes. it even works across tenants. You can’t expose everything, sometimes you need to put a standard load balancer in front of it. Which could be a problem since a vm can only be exposed through 1 load balancer

You mean create a private link to a LB, or a LB in front of a private link?

Sorry for the late reply. Private link Service to SLB.

got it, thanks

so resource is in vnet 1, but vnet 2 only accesses it via an IP in the CIDR of vnet 2
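The "Private Link Service to SLB" arrangement discussed above can be sketched in Terraform roughly as follows. This is a minimal illustration under stated assumptions, not a tested deployment: all variable names and resource names are hypothetical, the standard load balancer and both subnets are assumed to exist already, and both sides are assumed to be in the same region.

```hcl
variable "location" { type = string }
variable "provider_rg" { type = string } # resource group of vnet 1 (service side)
variable "consumer_rg" { type = string } # resource group of vnet 2 (client side)
variable "lb_frontend_ip_configuration_id" { type = string }
variable "provider_subnet_id" { type = string }
variable "consumer_subnet_id" { type = string }

# Service side: publish the standard load balancer frontend through Private Link.
resource "azurerm_private_link_service" "svc" {
  name                = "example-pls"
  location            = var.location
  resource_group_name = var.provider_rg

  load_balancer_frontend_ip_configuration_ids = [var.lb_frontend_ip_configuration_id]

  # NAT IP taken from a subnet in vnet 1; this subnet needs its private link
  # service network policies disabled (the attribute name varies by provider version).
  nat_ip_configuration {
    name      = "primary"
    subnet_id = var.provider_subnet_id
    primary   = true
  }
}

# Client side: a private endpoint that gets an IP from the CIDR of vnet 2.
resource "azurerm_private_endpoint" "consumer" {
  name                = "example-pe"
  location            = var.location
  resource_group_name = var.consumer_rg
  subnet_id           = var.consumer_subnet_id

  private_service_connection {
    name                           = "example-psc"
    private_connection_resource_id = azurerm_private_link_service.svc.id
    is_manual_connection           = false
  }
}
```

Because traffic is NATed at the private link service, the two vnets do not need to be peered, which is why clashing CIDRs are not a blocker in this design.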

Is there a way to install “HCS” (HashiCorp Consul Service) on Azure with Terraform?

2020-11-19

2020-11-23

2020-11-25

Hey all, we are creating a nodepool for our AKS cluster via terraform. We enable autoscaling and set min count to 0. When the pool is created it seems it will not auto scale even when there is demand for that pool.

It is fixed if I manually scale it to 1 and then re-enable the autoscaling

Is it possible to view logs of the autoscaler? Not sure where to start debugging
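For reference, a node pool like the one described above might be declared roughly as below. This is a sketch, not the poster's actual code: the variable, pool name, and VM size are hypothetical, and the attribute names are those of the azurerm 2.x/3.x provider (later major versions rename `enable_auto_scaling`). Starting at `node_count = 1` mirrors the manual workaround mentioned above; whether that avoids the scale-from-zero behaviour is an assumption.

```hcl
variable "kubernetes_cluster_id" { type = string } # assumed input

resource "azurerm_kubernetes_cluster_node_pool" "burst" {
  name                  = "burst"           # hypothetical pool name
  kubernetes_cluster_id = var.kubernetes_cluster_id
  vm_size               = "Standard_DS2_v2" # hypothetical size

  enable_auto_scaling = true
  min_count           = 0
  max_count           = 3

  # Initial size only; the autoscaler owns this value afterwards.
  node_count = 1

  lifecycle {
    # Avoid Terraform resetting the count the autoscaler has chosen.
    ignore_changes = [node_count]
  }
}
```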

    resource "azurerm_monitor_diagnostic_setting" "aks_diagnostics" {
      name                       = "${local.name_prefix}-aks01-diag"
      target_resource_id         = azurerm_kubernetes_cluster.aks.id
      log_analytics_workspace_id = var.law_id

      log {
        category = "kube-apiserver"
        enabled  = true

        retention_policy {
          days    = 0
          enabled = false
        }
      }

      log {
        category = "guard"
        enabled  = true

        retention_policy {
          days    = 0
          enabled = false
        }
      }

      log {
        category = "kube-controller-manager"
        enabled  = true

        retention_policy {
          days    = 0
          enabled = false
        }
      }

      log {
        category = "kube-scheduler"
        enabled  = true

        retention_policy {
          days    = 0
          enabled = false
        }
      }

      log {
        category = "kube-audit"
        enabled  = false

        retention_policy {
          days    = 0
          enabled = false
        }
      }

      log {
        category = "kube-audit-admin"
        enabled  = false

        retention_policy {
          days    = 0
          enabled = false
        }
      }

      log {
        category = "cluster-autoscaler"
        enabled  = true

        retention_policy {
          days    = 0
          enabled = false
        }
      }

      metric {
        category = "AllMetrics"
        enabled  = false

        retention_policy {
          days    = 0
          enabled = false
        }
      }
    }
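The repeated `log` blocks in the resource above could also be generated with a `dynamic` block. The sketch below is an alternative to that resource, not an addition to it, and assumes a provider version that still accepts `log` blocks (newer azurerm releases use a different block name). The variable names, local name, and resource name are hypothetical.

```hcl
variable "aks_id" { type = string } # assumed: ID of the AKS cluster
variable "law_id" { type = string } # Log Analytics workspace ID

locals {
  # category => enabled, same values as in the resource above
  aks_log_categories = {
    "kube-apiserver"          = true
    "guard"                   = true
    "kube-controller-manager" = true
    "kube-scheduler"          = true
    "kube-audit"              = false
    "kube-audit-admin"        = false
    "cluster-autoscaler"      = true
  }
}

resource "azurerm_monitor_diagnostic_setting" "aks_diagnostics_compact" {
  name                       = "aks01-diag"
  target_resource_id         = var.aks_id
  log_analytics_workspace_id = var.law_id

  # One log block per map entry.
  dynamic "log" {
    for_each = local.aks_log_categories
    content {
      category = log.key
      enabled  = log.value

      retention_policy {
        days    = 0
        enabled = false
      }
    }
  }

  metric {
    category = "AllMetrics"
    enabled  = false

    retention_policy {
      days    = 0
      enabled = false
    }
  }
}
```

Enabling or disabling a category then becomes a one-line change in the map.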

Awesome

Learn how to enable and view the logs for the Kubernetes master node in Azure Kubernetes Service (AKS)

You have made my day. Thanks a lot

If you’re looking for a LAW (Log Analytics workspace) definition, this is the one I use:

    resource "azurerm_log_analytics_workspace" "aks" {
      name                = "${local.name_prefix}-${module.tf-var-project.randomid}-law"
      location            = var.location
      resource_group_name = azurerm_resource_group.aksmon.name
      sku                 = "PerGB2018"
      retention_in_days   = 30
    }

    resource "azurerm_log_analytics_solution" "aks" {
      solution_name         = "ContainerInsights"
      location              = azurerm_log_analytics_workspace.aks.location
      resource_group_name   = azurerm_resource_group.aksmon.name
      workspace_resource_id = azurerm_log_analytics_workspace.aks.id
      workspace_name        = azurerm_log_analytics_workspace.aks.name

      plan {
        publisher = "Microsoft"
        product   = "OMSGallery/ContainerInsights"
      }
    }

That we’ve already got but thanks

thanks for your input I will follow your guideline

2020-11-27

@Padarn @geertn should I set up a big azurerm_log_analytics_workspace for all logs or smaller one per application as you mentioned in the post above ?

Sorry not sure

Depends. I tend to segregate based on type of solution: a separate LAW for Virtual Machine logs, a separate LAW for AKS logs.

I have multiple client environments streaming data to Log Analytics. What are the pros and cons of using a single instance versus individual instances of Log Analytics? Please point me to the business benefits. Thank you.

Thanks a lot, I will use a single one and if needed will create additional workspace ;)

My private cluster is deployed through Azure DevOps. But when I try to create the namespace and RBAC from Azure DevOps, terraform raises this error for both:

Error: Post "http://localhost/apis/rbac.authorization.k8s.io/v1/clusterrolebindings": dial tcp [::1]:80: connect: connection refused

Does it require a vm working as a gateway for deployments to the private cluster? Or maybe some network connectivity trick from Azure DevOps to AKS?

I haven’t worked with private clusters, but since the AKS API is private, you need to reach it through a route into your private address space. The most logical thing to do seems to be deploying your DevOps agent within Azure

I’m using the DevOps agent on/in AKS since I use API Authorized IP Ranges which doesn’t really work nice with DevOps hosted agent

In case you’re looking to run the agent in AKS, be aware that AKS uses containerd starting from 1.19 making building docker images using docker in docker impossible
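Regarding the `http://localhost/...` error above: besides network reachability, a `localhost` target often indicates that the Terraform kubernetes provider had no host configured and fell back to its default. A minimal sketch of wiring the provider to the cluster is shown below. It assumes the cluster resource is named `azurerm_kubernetes_cluster.aks` as in the diagnostics snippet earlier, and the namespace name is hypothetical. With a private cluster, the agent running Terraform still needs to resolve and reach the private API endpoint.

```hcl
# Take connection details from the cluster resource instead of a local kubeconfig.
provider "kubernetes" {
  host                   = azurerm_kubernetes_cluster.aks.kube_config[0].host
  client_certificate     = base64decode(azurerm_kubernetes_cluster.aks.kube_config[0].client_certificate)
  client_key             = base64decode(azurerm_kubernetes_cluster.aks.kube_config[0].client_key)
  cluster_ca_certificate = base64decode(azurerm_kubernetes_cluster.aks.kube_config[0].cluster_ca_certificate)
}

# Hypothetical namespace created through the provider configured above.
resource "kubernetes_namespace" "apps" {
  metadata {
    name = "apps"
  }
}
```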

thanks, as you said, it seems the only way is through an agent. Here is some very nice documentation that I have found and will use next week: https://colinsalmcorner.com/azure-pipelines-for-private-aks-clusters/

Creating private AKS clusters is a good step in hardening your Azure Kubernetes clusters. In this post I walk through the steps you’ll need to follow to enable deployment to private AKS clusters.

Tada, it’s working! The next step is a helm user and role binding to deploy apps from Azure DevOps

Good busy:)